Introduction to Zero Trust Security

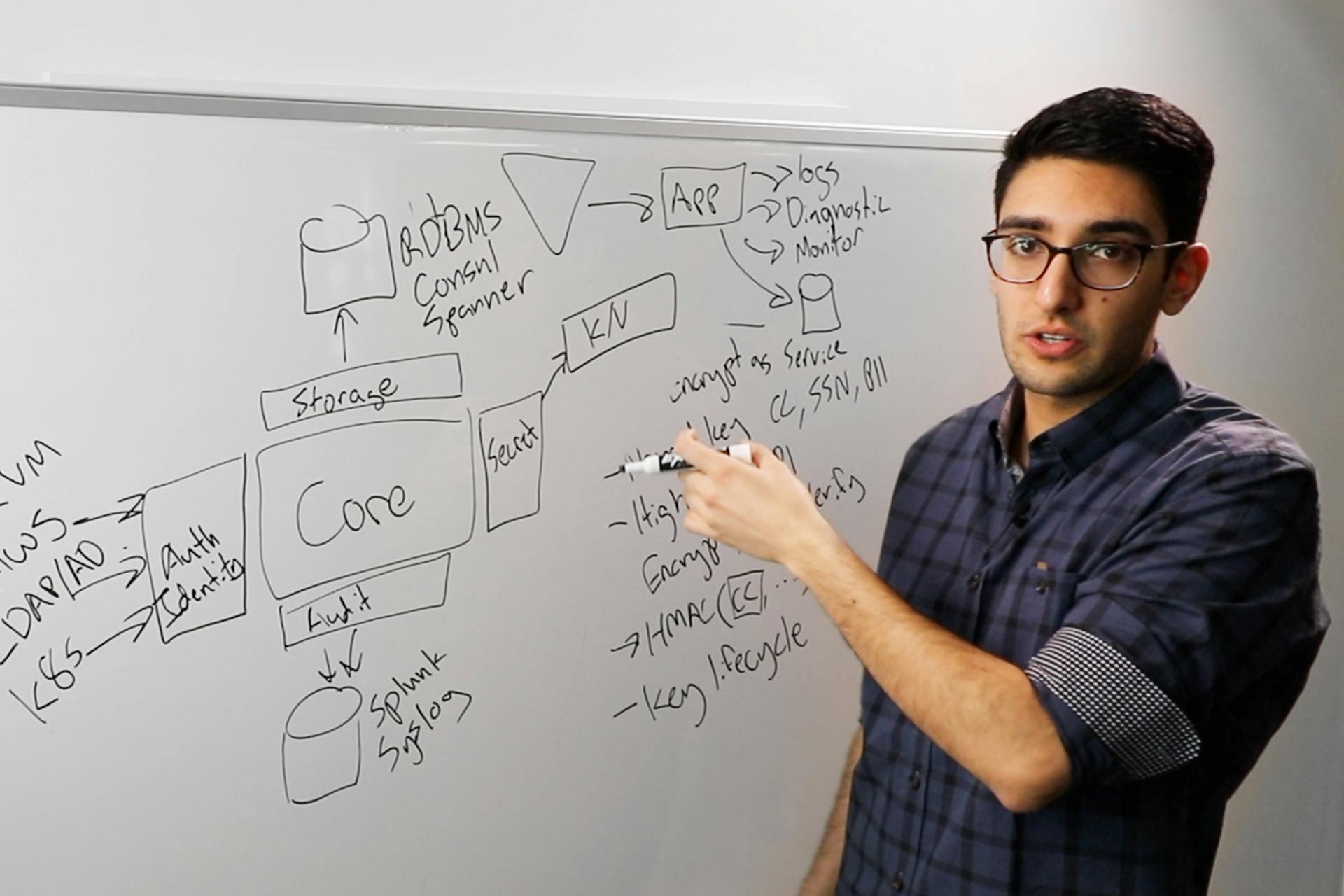

In this whiteboard introduction, learn how Zero Trust Security is achieved with HashiCorp tools that provide machine identity brokering, machine to machine access, and human to machine access.

In this whiteboard video, Armon Dadgar answers three questions: What are Zero Trust Security and Zero Trust Networking? How do I do it? And why should I do it? If you’re moving your applications to cloud environments, this is a critical aspect of cloud security that you must understand.

See how HashiCorp Vault, Consul, and Boundary paired with a human authentication and authorization (SSO, etc.) provider can build a Zero Trust Network.

Visit the Zero Trust Security guide for more follow-up actions.

»Transcript

Hi. I want to spend a little bit of time today talking about the HashiCorp view on zero trust security, what it means, and what the implications of it are.

»The Traditional Approach To Security

When we talk about zero trust networking, it’s helpful to almost take a step back and talk about the traditional approach to security and the implications of that.

When we talk about the existing model that you predominantly see, it’s very much what I like to call a castle and moat approach. You have the four walls of the datacenter, and we bring all of our traffic over the front door. This is where our ingress and egress take place. This becomes the single choke point where we deploy everything from firewalls and web application filters to SIEMs, etc. This is where all of our networking gear goes.

And we create this binary distinction: outside is bad and untrusted, inside is good and high trust. In a large network, we’d also segment this. We might have some coarse-grained networks: this might be development, staging, production, PCI, etc. But these are still large-grained network segments. They might have thousands of nodes in each of them.

»Moving To The Cloud

As we try and go to cloud, we add these nice fluffy cloud regions in. And each of these, we connect together into effectively the super network. The super network can be constructed from different networking technologies. It could be SD-WAN, a VPN overlay, Direct Connect, ExpressRoute, etc. It’s this concept that we’re bridging it all together into one giant network.

Now, I think what you often see is how do we take this perimeter-based approach and extend it to these clouds — and get to a point where we have a 100% effective perimeter here. And I think you find very quickly if I’m going to give you any capability in the cloud — I’m going to give you any IAM permissions — I’m giving you enough rope to hang yourself.

I mean, I’m going to give you the right to create an S3 bucket, for example. You might define that S3 bucket and mark it as public — in which case it’s on the outside of your perimeter. In the same way that if you change it to private, it’s on the inside of the perimeter.

You find in cloud that the perimeter is an illogical concept. It doesn’t exist in the same way it did on-premises, when you literally had four walls and a pipe coming in. Everything was inside your premises unless you explicitly made an effort to make it publicly accessible.

You quickly start to realize that you need to be comfortable making this 80% effective. And you could argue on exactly what that number is. If you’re a pessimist, you might say it’s only 50%. If you’re an optimist, you might say it’s 99+% effective. As long as you pick any number that’s not 100%, you break this model of the world.

»A Flawed Model

I think this model fundamentally was flawed to begin with. When we said it was 100% effective, there are two critical assumptions here. One is that of perfection — that I’m never going to make a mistake in my firewall and my web app filter. I’m never going to have an out-of-date vulnerability. I’m never going to have a zero-day that’s being exploited. It requires a certain level of perfection that’s not realistic.

The second is that it never contemplates an insider threat. It doesn’t matter how tall my walls are or how effective my firewall is — if I’ve already given you a VPN credential and you work for me, and you’re a malicious employee or contractor or subcontractor.

I think there were two critical flaws in this model to begin with. In many ways, I think it’s not a bad thing that — as an industry — we’re now moving away from it. When we start to move towards this model where we say, “My perimeter is less than 100% effective,” whatever that number is, and I consider the fact that my adversary might be on the network already — this is what brings you to fundamentally a zero trust networking model.

And you can hear it be called different things, low trust networking, no trust networking, etc. The idea is that your core assumption is your attacker is on your network, so you don’t trust it. It means we go away from this binary distinction of public/untrusted, private/trusted. That binary distinction goes away. Yes, I still have a private network. No, I’m not making a distinction that this is a high trust environment in a way that the internet is not. They’re all low trust, no trust, zero trust.

»The Pillars of Zero Trust

As we make this transition to zero trust, our view is that there are a few different pillars of it to consider. Starting at zero trust, I think the next piece, the question, is, “Well, what do we use as the unit of control?” And what I mean by that is in this world — in our traditional world — the IP was the unit of control. If you were within our private network CIDR or subnets, those were considered high trust, and you were authenticated. If you’re coming from the public internet, those IPs were untrusted. This might get expressed as something like a firewall control. The firewall is filtering which set of subnets and IPs are trusted, but we’re managing it through a set of IPs.

By contrast, as we move towards a zero trust setting, we don’t necessarily care about the IP, because we’re moving away from this distinction at the same time as we go to these cloud environments. They’re much more dynamic, much more ephemeral. We’re spinning workloads up and down. It’s harder to manage a static set of IPs. And additionally, as we’re crossing these different networking boundaries, IPs might be getting rewritten. We’re going through NATs and different middleware appliances. The IP is not a particularly portable or stable unit in that setting.

»Identity vs IP Controls

Instead, we want to hang our controls on identity. And when I talk about identity, I mean it could be application identity, meaning this thing is a web server. It could be human identity and saying, “This is Armon. Armon’s a database administrator,” as an example. We’re using identity as the basis of how we’re defining these controls. We would say our set of database administrators has access to our set of databases. That’s an identity-based control, versus saying IP 1 can talk to IP 2, which is an IP-based control.
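To make the contrast between the two kinds of control concrete, here is a minimal Python sketch. It is an illustration only, not how any HashiCorp product is implemented: the `Workload` type, the rule sets, and the IP addresses are all hypothetical. It shows that a rule keyed on IPs stops matching when a workload is rescheduled onto a new address, while a rule keyed on identity keeps working.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    identity: str  # logical role, e.g. "web" or "database" (hypothetical names)
    ip: str        # current address; may change whenever the workload moves


# IP-based control: a pair of literal addresses (made-up values).
IP_RULES = {("10.0.1.5", "10.0.2.9")}

# Identity-based control: a pair of logical roles.
IDENTITY_RULES = {("web", "database")}


def allowed_by_ip(src: Workload, dst: Workload) -> bool:
    """Allow only if this exact address pair was listed."""
    return (src.ip, dst.ip) in IP_RULES


def allowed_by_identity(src: Workload, dst: Workload) -> bool:
    """Allow only if this pair of roles was listed; addresses are ignored."""
    return (src.identity, dst.identity) in IDENTITY_RULES


web = Workload("web", "10.0.1.5")
db = Workload("database", "10.0.2.9")
# The same web server after being rescheduled onto a different address.
rescheduled_web = Workload("web", "10.0.7.31")
```

With these definitions, `allowed_by_ip(rescheduled_web, db)` is false even though nothing about the workload's role changed, whereas `allowed_by_identity(rescheduled_web, db)` still holds.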

»Machine Identity

To make this work, our view is that there are four distinct pillars to think about. Pillar one is how do I think about machine identity. To make machine identity work, I need a strong notion of what is a web server, what is a database, what is an API.

And here is where Vault plays. When we think about the role of Vault, it’s first and foremost to provide a sense of application identity. We get application identity by integrating with all the different kinds of platforms. Vault will integrate with AWS and Google and Azure, etc. It will also integrate with our platforms, things like Kubernetes, Pivotal Cloud Foundry, etc.

»Secrets Management

So, no matter where these applications run, we can map them in a consistent way into an identity and then define authorization rules on top of it. The most obvious application of this is, once we know that this thing is a webserver and this thing is a database, we can use that for secrets management. This is the common use case that people think about when they talk about Vault: “I can use this to define that you’re a webserver. You get access to a database key. You get a TLS certificate. You get an API token.” And Vault can broker and manage the distribution of those secrets and credentials. That becomes an obvious use case.

»Data Protection

The next one becomes data protection. Data protection goes back to this model we have here. Consider how we used to protect data in this environment. We used to maybe have a web server, and the users would give us, let’s say, a credit card number or a Social Security number or something. Then we’d write that to our database in plain text. Our database would store the credit card number or the address, etc.

And if we were regulated, we might use something like an HSM device and turn on transparent disk encryption, so the database is encrypting its data at rest. The threat model for this is that someone knocks down the door of my datacenter, finds the hard disk that has customer data, pulls it out, and exfiltrates it.

It doesn’t contemplate a network-based attack. In this scenario, if I’m an attacker, I can get to the database on the network, and I can select data out from that. Well, transparent disk encrypt is also transparent disk decrypt. The database will happily read it from disk, decrypt it and send it out over the wire. It’s clear in that case it didn’t add much from a data protection standpoint — if our threat is one of network and not one of a physical breach.

»Data Encryption

In this last use case, you think about Vault as a way of encrypting that data. When that webserver gets the unencrypted data (the credit card number, the Social Security number, etc.), that goes to Vault. Vault then encrypts it with a set of encryption keys that it holds and does not expose to the application, and then it hands the safe ciphertext back to the web server. The web server can store that data in the database.

In this case, what we’re storing in the database has already either been encrypted or tokenized. So, even if an attacker can get to the database, they need a second-factor attack where they can be authenticated against Vault, and they need to be authorized to decrypt it or detokenize that data.

Looking at this in a zero trust world, how do we think about data protection, and how does that evolve? It’s not enough for us to consider that our database is behind the four walls or behind the perimeter. We add a requirement that you need specific authentication and authorization to decrypt that data as well. That adds an additional layer of defense. This is where Vault sits.
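The idea that stolen database contents are useless without a second, separately authorized step can be sketched in a few lines of Python. This is a toy that covers only the tokenization variant mentioned above, and it is not Vault's API: the `TokenizationService` class, the identity names, and the sample card number are all assumptions made for illustration.

```python
import secrets


class TokenizationService:
    """Toy stand-in for a central service that tokenizes sensitive values."""

    def __init__(self, detokenize_allowed):
        # Identities that are authorized to turn tokens back into values.
        self._allowed = set(detokenize_allowed)
        # token -> original value; this mapping never leaves the service.
        self._values = {}

    def tokenize(self, value: str) -> str:
        """Swap a sensitive value for a random token that is safe to store."""
        token = "tok_" + secrets.token_hex(16)
        self._values[token] = value
        return token

    def detokenize(self, caller_identity: str, token: str) -> str:
        """Return the original value, but only to an authorized identity."""
        if caller_identity not in self._allowed:
            raise PermissionError(f"{caller_identity} may not detokenize")
        return self._values[token]


service = TokenizationService(detokenize_allowed={"billing"})
database = {}  # what an attacker with database access would see

card = "4111 1111 1111 1111"  # well-known test number, not real data
database["customer-42"] = service.tokenize(card)
```

An attacker who reads `database` sees only the token. Recovering the card number requires calling `detokenize` as an identity on the allow list, which is the extra authentication and authorization layer the transcript describes.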

»Human Identity

Then the next challenge, way on the other side of the spectrum, is how you think about human identity. And this is a much more solved problem. Ultimately, this boils down to single sign-on, as well as having a common directory.

In a previous generation, this would have been largely Active Directory on-premises serving this role. In a cloud world, it might be Azure AD, might be Okta, might be Ping, might be Centrify. There are a lot of different answers. But what it boils down to is I want a common directory of all my employees and maybe what groups they’re in, so I know this is a set of DBAs, for example. And then the single sign-on is I need some cryptographic way to assert that identity in other systems. That might be OIDC, where I have a JWT that’s signed. It might be SAML, where I’m making an assertion about these users.

There are a number of different tools and technologies, but it boils down to the same thing. I need a common directory, and I need some form of SSO (OIDC, SAML, etc.) so that I can consume that identity in other systems.

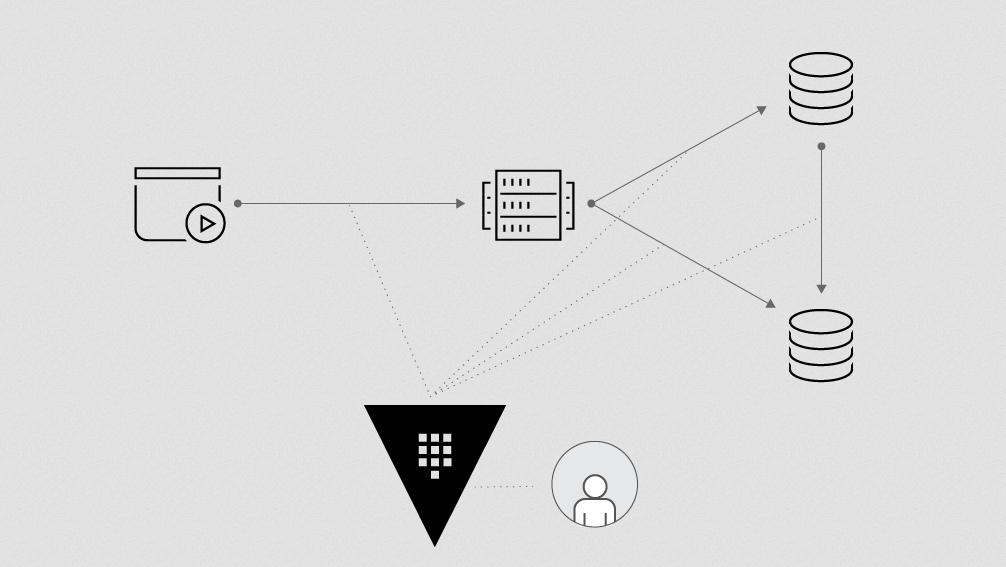

Once I have these two pieces, I have a strong notion of who the people are that are identifying themselves and logging in. And I have a strong notion of the applications on the machines and what their identities are. Then I have the two middle workflows. And there are only two — if we complete this matrix. One is machine-to-machine — or app-to-app, you might call it — and the other is human-to-machine.

In both of these two use cases, our challenge is how do we use the identities that we have and then use that to enforce, control, and restrict who’s allowed to talk to who? The authentication might be coming from the identities that we already have. But then we’re layering an authorization layer to make sure that only the apps that should talk to other apps do so — and only the people that talk to apps talk to the apps they’re supposed to.

»Using Consul To Solve Three Key Problems

Here is where Consul fits. Consul is HashiCorp’s service networking tool. And there are three key problems this solves.

»Service Discovery

The first is service discovery. With service discovery, we start with the challenge of how do we even know what all of our applications are? Where are they? In most environments, there isn’t really a catalog that says these are where all my apps are, these are all the services that I have. It’s an emergent property. There are things all over the place. The first thing Consul solves is it gives us a consistent catalog that we can integrate with all of our different systems. Be they mainframe, VM, containerized, serverless, etc., Consul integrates with all of the different platforms. It gives us this bird’s-eye view of what everything is.

»Service Mesh

Then on top of that, you can implement a service mesh. Consul can act as the central control plane, where then we can define these central routing rules. We can define rules and say, “My webserver is allowed to talk to my database. My API is allowed to talk to my web.”

That control again is an identity thing — we’re saying webserver-to-database is allowed — I’m talking about it at an identity level; and then how do we enforce that? Well, the way service meshes work under the hood is we’re distributing a set of certificates to the different applications. That certificate encodes the identity — the webserver gets a cert that says it’s a webserver, or the database gets a certificate that says it’s a database, and it’s cryptographically signed against a certificate authority that’s trusted by our different nodes.

Then the proxies communicate with one another. When the web server talks to the database, it’s going through a proxy. Similarly, the database has a proxy terminating on its behalf. It could be Envoy, HAProxy, Nginx. The first thing those proxies do is authenticate: is this a valid certificate that’s signed? Yes or no? We have a strong notion of the identity on the two sides. We know it’s a webserver talking to a database.

Then the next layer is, are they authorized? That’s when the proxy will interact with Consul as that central source of truth to say, “I’m a database. A webserver’s connecting to me. Is that allowed? Yes or no?” Now you can see what we’re getting at — we have an explicit control that we defined that says webserver-to-database is allowed. We’re doing it at this identity-based level — we don’t talk about IP 1 to IP 2. And we’re using this mesh-based approach to enforce that control.
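The two-step check described in this section, authenticate first and then authorize, can be sketched as follows. This is a simulation under stated assumptions rather than how Consul or any proxy actually does it: real proxies validate X.509 certificate chains during a mutual TLS handshake, which is reduced here to comparing an issuer name, and the rule table, the CA name, and the default-deny behavior are all hypothetical choices for the example.

```python
from dataclasses import dataclass

TRUSTED_CA = "mesh-ca"  # hypothetical name of the certificate authority


@dataclass(frozen=True)
class PeerCertificate:
    service: str  # identity encoded in the certificate, e.g. "web"
    issuer: str   # who signed it; stands in for real signature validation


# Central rules, expressed purely in terms of identity (source, destination).
ALLOW_RULES = {("web", "database"), ("api", "web")}


def authenticate(cert: PeerCertificate) -> bool:
    """Step 1: is this a certificate signed by the CA we trust?"""
    return cert.issuer == TRUSTED_CA


def authorize(source: str, destination: str) -> bool:
    """Step 2: is this identity pair allowed? Anything unlisted is denied."""
    return (source, destination) in ALLOW_RULES


def accept_connection(cert: PeerCertificate, local_service: str) -> bool:
    """What the destination-side proxy decides for an inbound connection."""
    if not authenticate(cert):
        return False
    return authorize(cert.service, local_service)
```

For example, `accept_connection(PeerCertificate("web", "mesh-ca"), "database")` is accepted, while the same request with a certificate from an untrusted issuer, or from the `api` service, is refused. No IP address appears anywhere in the decision.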

»Network Infrastructure Automation

It would be nice if all of our networks could live in this mesh-based world, but in reality, we have existing networks — they operate in an IP-centric way. We might have firewalls and gateways, and WAFs already implemented. The third piece here with Consul is what we refer to as network middleware automation — or network infrastructure automation. And the challenge here is I still want to define this identity-based control that says webserver talks to the database, but it might be that I’m not using a service mesh. It might be that I have a firewall. I have a Palo Alto firewall, as an example, in between them.

In this use case, we would write a bit of Terraform configuration — going as infrastructure as code, to say, “My source inputs from Consul are the set of webservers and the set of databases. And then given that, I’m going to define how should my Palo Alto firewall be configured.” We give that configuration to Consul. So, the moment a new webserver is registered or a webserver’s de-registered or its health status changes, Consul will detect that, “Oh, this bit of Terraform code needs to be re-executed, because it has a subscription or a dependency on the set of webservers or the set of databases.”

This allows us to start with that catalog of we know all the different services. As those things come and go, we can trigger automation and use Terraform to update our firewall, our gateways, our various networking middleware devices. And as part of this network infrastructure automation, we’ve partnered closely with all of the major networking providers, Cisco, Juniper, A10, Check Point, Palo Alto. There’s a longer list available on our website.

You can see how this then enables us to operate in this hybrid mode. Where Consul’s giving us the central catalog, we can have our new greenfield applications operating on a pure service mesh. We can have that integrated into our more traditional networking environments using network infrastructure automation. It gives us a holistic way of moving towards this identity-based model of handling the machine-to-machine networking.
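The network infrastructure automation loop described above (catalog changes, configuration is re-rendered, firewall is updated) can be sketched in Python. In the transcript the re-rendering is done by Terraform code triggered by Consul; here a plain function stands in for that step. The `CatalogWatcher` class, the addresses, and port 5432 are hypothetical, and no real firewall is contacted.

```python
from typing import Dict, List, Tuple

Rule = Tuple[str, str, int]  # (source IP, destination IP, port)


def render_firewall_rules(catalog: Dict[str, List[str]],
                          source: str, destination: str, port: int) -> List[Rule]:
    """Expand one identity-level rule into the IP-level rules a firewall needs."""
    return sorted((s, d, port)
                  for s in catalog.get(source, [])
                  for d in catalog.get(destination, []))


class CatalogWatcher:
    """Toy service catalog that re-renders the rules whenever membership changes."""

    def __init__(self, catalog: Dict[str, List[str]]):
        self._catalog = {name: list(ips) for name, ips in catalog.items()}
        self.applied_rules: List[Rule] = []
        self.runs = 0  # how many times the configuration was re-executed
        self._reconcile()

    def _reconcile(self) -> None:
        # Stand-in for "re-execute the Terraform code and update the firewall".
        self.applied_rules = render_firewall_rules(
            self._catalog, "web", "database", 5432)
        self.runs += 1

    def register(self, service: str, ip: str) -> None:
        self._catalog.setdefault(service, []).append(ip)
        self._reconcile()

    def deregister(self, service: str, ip: str) -> None:
        self._catalog[service].remove(ip)
        self._reconcile()


watcher = CatalogWatcher({"web": ["10.0.1.5"], "database": ["10.0.2.9"]})
```

Calling `watcher.register("web", "10.0.1.6")` adds a second IP-level rule without anyone editing the firewall by hand; the operator only ever wrote "web may talk to database".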

»Human-to-Machine Networking

And then, as we talk about the last use case, it’s about human-to-machine. And this is where our newest product, Boundary, fits in. When we talk about human to machine, often there’s a gauntlet of steps users have to go through. They first have to connect to a VPN, or maybe they’re going through an SSH bastion that puts them on the private network. Because we don’t want them to have access to everything, there’s typically a firewall in place to restrict — if you’re coming in from a VPN, what set of network resources are you allowed to connect to. You have to manage a set of firewall rules around who can connect to what.

And then from there, if I’m connecting to — let’s say — a database, I might want to do session recording, or I need to have privileged access management to restrict who has access to database passwords. I have to log in and use a privileged access management tool. That might be imposing session recording. And then, finally, I connect to my target destination, which might be the database.

We looked at that and asked, is there a way to simplify this where you have a single point of entry? And that’s what Boundary is. The idea is I don’t have a separate set of VPN credentials, and I don’t have to have a separate set of SSH keys. I do a single sign-on via my IdP, to tie it back into this human identity we already have. And that’s how I authenticate. I don’t need a separate VPN or SSH credential.

The second piece is I don’t want to be in the business of managing several different sets of overlapping controls: my VPN plus my firewall plus my PAM plus my database. I want a logical control that says my database admins are allowed to talk to my databases, again going back to an identity-based, service-based control.

That’s how the authorization works here. I have a logical AuthZ that operates at that service level. And that’s how I specify who’s allowed to do what. The advantage is that Boundary, unlike a VPN or SSH, doesn’t give you direct network access. It won’t put you on the network. I don’t need a separate set of firewall controls. Boundary will only allow the user based on their authorization to talk directly to a target instance. And that’s happening from the Boundary gateway directly to that instance. There is no private network access. We don’t need a second level of firewall to restrict what you can do.

This eliminates multiple layers. I don’t need to first have a VPN and SSH. I talk directly to the Boundary gateway. I don’t need another layer of firewall because I don’t give you network access. And then Boundary can directly inject the credentials and — in the future — provide the session recording as well.

I don’t need a separate layer of privileged access management either. I talk from a user’s point of view directly to the Boundary gateway. It authenticates me. It authorizes me and connects directly to the target instance. And because it’s in that middle point, it can inject the credentials, as well as perform the session recording. It simplifies that end-to-end access. It’s a simple solution that then covers the end-to-end use case. Otherwise, we’d have three or four different pieces of control we’d have to interpose.
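The human-to-machine flow described in this section can be sketched as a small broker that grants access per target rather than per network. This is a hypothetical illustration, not Boundary's API or data model: the `Target` and `Session` types, the grant table, the address, and the credential string are all made up for the example.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Target:
    name: str
    address: str     # known only to the broker
    credential: str  # injected by the broker, never handed to the user


@dataclass(frozen=True)
class Session:
    user: str
    target_name: str  # the user learns the logical target, nothing else


# Logical, identity-based grants: group -> targets that group may reach.
GRANTS = {"dba": frozenset({"orders-db"})}

# Hypothetical target registry held by the broker.
TARGETS = {"orders-db": Target("orders-db", "10.0.2.9:5432", "s3cr3t-db-password")}


def open_session(user: str, groups: Iterable[str], target_name: str) -> Optional[Session]:
    """Broker a session to exactly one target, or refuse.

    `user` and `groups` are assumed to come from an already verified
    single sign-on assertion.
    """
    permitted = any(target_name in GRANTS.get(g, frozenset()) for g in groups)
    if not permitted or target_name not in TARGETS:
        return None
    # The broker would now connect to TARGETS[target_name].address using the
    # stored credential; the user only ever talks to the broker.
    return Session(user=user, target_name=target_name)
```

A database administrator gets a `Session` for `orders-db` and nothing more: there is no network range to firewall off afterwards, and the database password never appears in anything returned to the user.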

»Review of Zero Trust

Zooming out again, as we talk about zero trust, it’s a much bigger space. But it starts with this idea that I don’t want to provide a high trust assumption; I don’t trust my network. That means everything needs to be explicitly authenticated and explicitly authorized. If we’re going to hang everything off of identity, then the first starting points are: do I have an understanding of human identity? Do I have an understanding of my machine identity?

Once I have that, I can leverage those to solve the two key networking flows: app-to-app or machine-to-machine using Consul, and human-to-machine using Boundary. Hopefully, that gives you a sense of how we think about zero trust and what we need in terms of the four pillars as we’re transitioning to that. Thanks.