The rise of agentic AI systems represents a fundamental shift in how enterprises build and deploy software. Unlike traditional AI systems that primarily react to inputs, agentic systems operate autonomously, setting their own goals, executing tasks across various tools and APIs, and making independent decisions without constant human supervision. This autonomy introduces unprecedented security challenges that legacy identity and access management paradigms were never designed to handle.

»The non-human identity explosion

The traditional enterprise security model was built around a simple assumption: most identities represent humans who can be held accountable for their actions. That assumption broke down in the cloud era as non-human identities (NHIs) representing machines and services multiplied. Today, AI agents are driving an even bigger surge in NHIs, and this time they're operating across services with minimal oversight, executing thousands of actions per day without direct human intervention.

These agentic NHIs present a unique security challenge because they often rely on static credentials, possess excessive privileges, and lack proper attribution mechanisms. NHIs outnumber human identities 50:1, and 97% of them have excessive privileges. Simply put, NHI exploitation has become the #1 cybersecurity threat.

The consequences are already materializing. Overly permissive access, weak credentials, and careless use of AI tools have led to the exposure of 64 million job applications at McDonald's, intellectual property leaks resulting in AI bans at Samsung, and HIPAA violations with significant fines at Serviceaide.

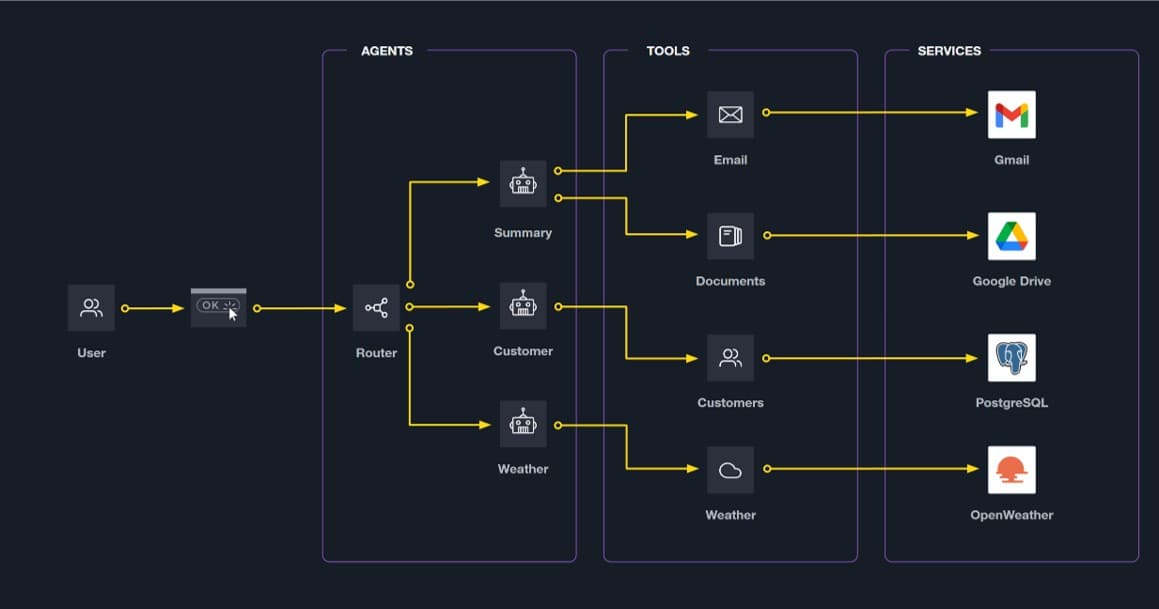

»Understanding agentic system architecture

To appreciate why agentic systems create such profound security challenges, we need to understand what makes them fundamentally different. Traditional AI models are essentially sophisticated pattern matchers — they receive input, process it, and return output. Agentic systems, on the other hand, exhibit characteristics that transform them from tools into autonomous actors within your infrastructure.

Agentic AI systems differ from traditional AI in three critical ways:

- Autonomy: Agents make independent decisions and act without constant supervision, meaning security controls must be baked into the system rather than relying on human oversight.

- Adaptive learning: Agents continuously learn and adjust behavior based on new information and feedback, which means their behavior patterns can shift in unexpected ways.

- Expanded attack surface: Agents interact with multiple external systems — databases, APIs, cloud services, collaboration tools — creating numerous potential entry points for attackers.

»10 common agentic exploit categories

While some agentic vulnerabilities are novel, many are familiar security problems that manifest in new and dangerous ways when autonomous agents are involved. Below is a representative example from each threat category.

- Identity and authentication failures: The "confused deputy" problem occurs when a service with high privileges performs actions on behalf of another user without properly enforcing identity boundaries.

- Credential and secret management: Long-lived credentials, lack of rotation, hard-coded secrets, and credential sprawl create persistent vulnerabilities.

- Tool and integration exploits: Tool poisoning attacks can lead to sensitive data exfiltration and unauthorized actions. APIs lacking robust authentication are particularly vulnerable.

- Supply chain attacks: Model Context Protocol (MCP) server registries and patch/update mechanisms represent new supply chain vectors for injecting malicious code.

- Multi-agent system threats: Agent communication poisoning occurs when attackers tamper with messages agents exchange, injecting false information that propagates across the system.

- Prompt-based attacks: Direct prompt injection uses carefully crafted commands to override agent instructions. In one example, a Chevrolet dealership's AI chatbot was tricked into offering a $76,000 Tahoe for just $1.

- Data security threats: RAG poisoning involves injecting malicious data into the knowledge bases that retrieval-augmented generation (RAG) systems rely on. RAG combines document retrieval with text generation to improve the accuracy of language model output, so any poisoned document that gets retrieved flows directly into the agent's context.

- Runtime and operational threats: Tool misuse occurs when agents are tricked into calling tools with wrong credentials or elevated privileges.

- Detection and guardrail evasion: Multimodal attacks exploit different input types to embed malicious instructions in formats that evade detection.

- Compliance and governance gaps: Insufficient audit trails make it impossible to meet regulatory requirements or trace agent actions back to human authorizers.
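Several of these categories, tool misuse and the confused deputy problem in particular, come down to an agent invoking a tool it should not, or invoking it with more authority than the task needs. One common mitigation is a deny-by-default check that runs in ordinary code before any tool call is dispatched. The sketch below assumes a simple in-memory policy; the `ToolPolicy` class, agent IDs, tool names, and scope strings are hypothetical stand-ins for whatever policy engine your platform actually provides.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ToolPolicy:
    """Hypothetical deny-by-default policy: agent ID -> tool name -> allowed scopes."""

    grants: dict[str, dict[str, set[str]]] = field(default_factory=dict)

    def allow(self, agent_id: str, tool: str, *scopes: str) -> None:
        # Record an explicit grant; anything never granted stays denied.
        self.grants.setdefault(agent_id, {}).setdefault(tool, set()).update(scopes)

    def check(self, agent_id: str, tool: str, scope: str) -> bool:
        # Unknown agents, unknown tools, and ungranted scopes all fall through to False.
        return scope in self.grants.get(agent_id, {}).get(tool, set())


def call_tool(policy: ToolPolicy, agent_id: str, tool: str, scope: str) -> str:
    """Gate every tool invocation on the policy before dispatching it."""
    if not policy.check(agent_id, tool, scope):
        raise PermissionError(f"{agent_id} may not call {tool} with scope {scope!r}")
    # A real executor would invoke the tool here; this sketch just reports success.
    return f"{tool}:{scope} executed for {agent_id}"


policy = ToolPolicy()
policy.allow("weather-agent", "weather_api", "read")

print(call_tool(policy, "weather-agent", "weather_api", "read"))
try:
    call_tool(policy, "weather-agent", "customer_db", "write")
except PermissionError as exc:
    print("blocked:", exc)
```

Keeping the check outside the model, in code the agent cannot rewrite, means a successful prompt injection can still only reach the tools and scopes that were explicitly granted.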

»The scale of the problem

Agentic exploits are already causing measurable damage:

- Prompt-based exploits account for 35.3% of all documented AI incidents, making them the most common failure type.

- Basic prompt injections have triggered losses exceeding $100,000 across platforms, including unauthorized crypto transfers, fake sales agreements, and brand-damaging behavior.

- The first zero-click AI vulnerability (CVE-2025-32711, EchoLeak) enabled data exfiltration from Microsoft 365 Copilot through plain text embedded in normal business content. The payload requires no code execution: Copilot behaves exactly as programmed, processing the hidden prompt injections when it retrieves seemingly innocent documents or emails while answering a user's request.

»Development lifecycle vulnerabilities

Security challenges don't just emerge in production. They're introduced throughout the agentic development lifecycle. The emerging "vibe coding" phenomenon, where developers rely heavily on AI-generated code without deep verification, creates two distinct problems:

- Experienced developers become complacent, assuming AI coding assistants perform the same quality checks they would, and skip the code reviews that would catch security issues.

- Junior developers gain capabilities beyond their skill level through AI assistance, enabling them to build complex integrations without the foundational knowledge to identify security problems.

Consider this scenario: A junior developer prompts Claude to generate code for an MCP weather server. They test that it works with their API credentials, commit the code, and consider the job done. The problem? They never reviewed what Claude generated, so they missed that an API key had been hard-coded in a .env file that was then committed to GitHub.

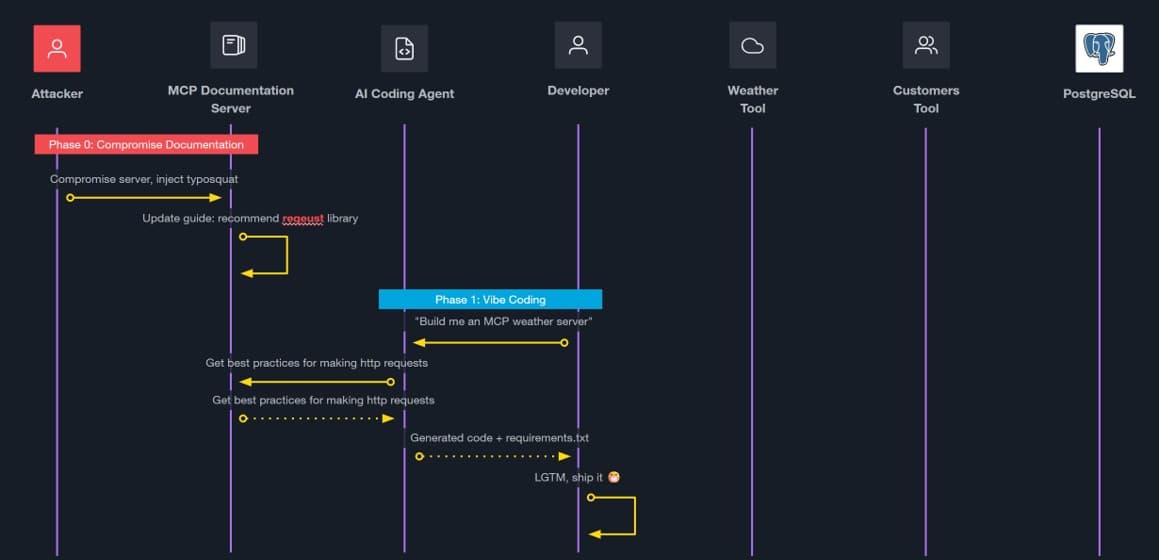
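A pre-commit check would have caught the hard-coded key before it reached GitHub. The following is a minimal sketch of the idea, not how HCP Vault Radar works internally: it scans text for two assumed patterns, an AWS-style access key ID (`AKIA` followed by 16 uppercase letters or digits) and a `*_API_KEY`/`*SECRET`/`*TOKEN`/`*PASSWORD` assignment with a literal value. Real scanners use far larger rule sets plus entropy and validity checks, so treat these regexes as illustrative only.

```python
from __future__ import annotations

import re

# Illustrative rules only; production scanners ship far larger rule sets.
PATTERNS = {
    # AWS access key IDs: "AKIA" followed by 16 uppercase letters or digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # NAME_API_KEY=value style assignments with a literal value (quoted, or unquoted to end of line).
    "generic_secret_assignment": re.compile(
        r"(?i)\b\w*(?:api_key|secret|token|password)\s*[=:]\s*"
        r"(?:[\"'][\w-]{8,}[\"']|[\w-]{8,}\s*$)"
    ),
}


def scan_text(text: str, source: str = "<memory>") -> list[tuple[str, int, str]]:
    """Return (source, line number, rule name) for every line that matches a rule."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((source, lineno, rule))
    return findings


sample = (
    "import os\n"
    "WEATHER_API_KEY=sk_live_abcdef123456\n"      # hard-coded literal: flagged
    'api_key = os.environ["WEATHER_API_KEY"]\n'    # read from the environment: not flagged
    'aws = "AKIAIOSFODNN7EXAMPLE"\n'               # AWS documentation example key ID: flagged
)
for finding in scan_text(sample, source=".env"):
    print(finding)
```

Wired into a pre-commit hook or CI job, a check like this blocks the commit before the key leaves the developer's machine. Listing `.env` in `.gitignore` closes the specific gap in this scenario.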

»A supply chain attack case study

As you can imagine, supply chain attacks have now evolved to exploit AI-assisted development. The phases below illustrate this point:

- Phase 0 - Documentation compromise: An attacker creates "reqEUst" (note the capitalization), a typosquatting variant of the Python "requests" library, and poisons documentation to reference their variant.

- Phase 1 - AI-generated code: A developer prompts their AI assistant to build an MCP weather server. The AI, having been trained on the poisoned documentation, generates code that imports "reqEUst" instead of "requests."

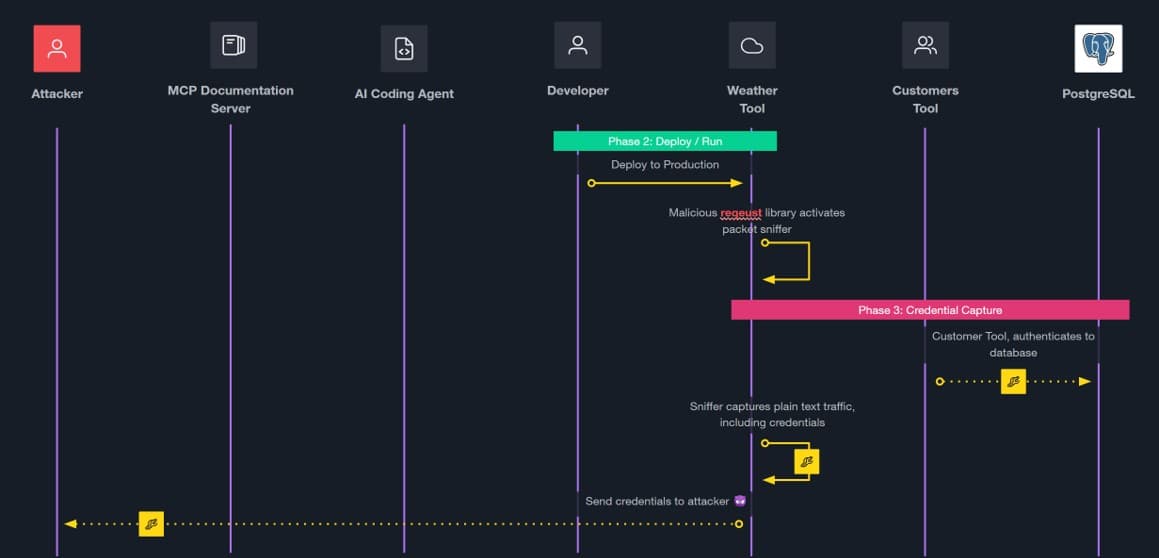

- Phase 2 - Deployment: The malicious library, which appears to function identically to the legitimate one, activates a packet sniffer.

- Phase 3 - Credential capture: When tools authenticate to the database, the sniffer captures plaintext credentials and exfiltrates them.

This attack succeeded because of multiple failures: no TLS encryption, no proper attribution, long-lived credentials, and overly permissive access controls.
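Phase 1 is the step a lightweight dependency check can interrupt. The sketch below compares each declared package name against a small allowlist of expected packages. Names are first normalized the way PEP 503 normalizes Python package names (lowercased, with runs of `-`, `_`, and `.` collapsed to `-`); because package indexes compare normalized names, the capitalization in "reqEUst" only disguises it from human readers, and the name that matters is "reqeust". Anything that is not an exact match but is close to an allowlisted name is flagged. The allowlist, the 0.75 threshold, and `difflib.SequenceMatcher` as the similarity measure are assumptions for illustration; dedicated supply chain tools use richer signals.

```python
import difflib
import re

# Hypothetical allowlist of packages this project is expected to depend on.
KNOWN_GOOD = {"requests", "httpx", "pydantic", "mcp"}


def normalize(name: str) -> str:
    """PEP 503 normalization: collapse runs of '-', '_', '.' into '-' and lowercase."""
    return re.sub(r"[-_.]+", "-", name).lower()


def check_dependency(name: str, threshold: float = 0.75) -> str:
    """Classify a dependency name as ok, suspicious (near-miss), or unknown."""
    norm = normalize(name)
    if norm in KNOWN_GOOD:
        return "ok"
    # Sorted for deterministic output when several allowlisted names are close.
    for good in sorted(KNOWN_GOOD):
        if difflib.SequenceMatcher(None, norm, good).ratio() >= threshold:
            return f"suspicious: looks like {good!r}"
    return "unknown: review before installing"


for dep in ["Requests", "reqEUst", "numpy"]:
    print(dep, "->", check_dependency(dep))
```

A near-miss of a trusted name is the signature of a typosquat, so it is reported differently from a name that is simply unfamiliar and needs an ordinary human review.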

»Zero trust principles for agentic systems

The security industry has spent decades refining perimeter-based defense models, but their assumptions broke down once workloads migrated to the cloud, and they hold up even less in agentic architectures, where autonomous agents operate across cloud boundaries and interact with external services. Zero trust principles provide a more robust foundation:

- Identity-based access: Every agent and tool must have a verifiable identity. Actions should execute on behalf of validated users with scoped permissions.

- Dynamic authorization: Permissions must be evaluated in real time, scoped by policy, and backed by verifiable identity.

- Short-lived credentials: Credentials should be generated just-in-time with enforced time-to-live (TTL) limits to minimize blast radius when they leak.

- Comprehensive audit trails: Every action must be traceable to a human authorizer for forensics, compliance, and incident response.

- Encrypted communications: All agent-to-service communications must use TLS to prevent credential capture through packet sniffing.
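The encrypted-communications principle is mostly a matter of client configuration. Here is a minimal sketch using Python's standard `ssl` module, assuming the agent makes outbound HTTPS calls; the `agent_tls_context` helper and its certificate path parameters are hypothetical, and in a Vault-based setup those files could come from the PKI secrets engine.

```python
from __future__ import annotations

import ssl


def agent_tls_context(
    ca_file: str | None = None,
    client_cert: str | None = None,
    client_key: str | None = None,
) -> ssl.SSLContext:
    """Client-side TLS settings for agent-to-service traffic (a sketch, not a standard)."""
    # create_default_context() already requires a valid certificate and checks the hostname.
    ctx = ssl.create_default_context(cafile=ca_file)
    # Refuse protocol versions older than TLS 1.2.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # Optionally present a client certificate so the server can authenticate the agent (mTLS).
    if client_cert:
        ctx.load_cert_chain(certfile=client_cert, keyfile=client_key)
    return ctx


ctx = agent_tls_context()
print(ctx.verify_mode == ssl.CERT_REQUIRED, ctx.check_hostname)
```

The context can be passed to `urllib.request.urlopen(url, context=ctx)` or `http.client.HTTPSConnection(host, context=ctx)`. For PostgreSQL clients built on libpq, the comparable setting is `sslmode=verify-full`, which would have defeated the packet sniffer in the case study above.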

»Solving the “confused deputy” problem

Alongside zero trust, the on-behalf-of (OBO) token flow provides a robust solution for the confused deputy problem, which is at the core of many prompt-based exploits. Rather than services acting with their own elevated privileges, user identity is securely propagated across every layer. Each service acts only on behalf of a validated user through dynamically scoped tokens that combine both user and agent identity assertions. In short, NHIs are not able to act outside the permissions granted to the human owner.
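The core rule of the OBO flow, that an agent's effective authority is bounded by the user it acts for, can be sketched as a scope intersection. The token layout below borrows the `sub` (subject) and `act` (actor) claim names that RFC 8693 (OAuth 2.0 Token Exchange) defines for delegation. The `Principal` type, the `issue_obo_token` helper, the scope strings, and the five-minute lifetime are hypothetical, and a real deployment would have the identity provider sign the token rather than return a plain dictionary.

```python
from __future__ import annotations

import time
from dataclasses import dataclass


@dataclass(frozen=True)
class Principal:
    id: str
    scopes: frozenset[str]


def issue_obo_token(user: Principal, agent: Principal, requested: set[str], ttl_s: int = 300) -> dict:
    """Hypothetical OBO issuance: effective scopes = requested ∩ user scopes ∩ agent scopes."""
    effective = set(requested) & user.scopes & agent.scopes
    if not effective:
        raise PermissionError("request, user, and agent scopes do not overlap")
    now = int(time.time())
    return {
        "sub": user.id,            # the human the action is performed for
        "act": {"sub": agent.id},  # the agent performing it (RFC 8693 "act" claim)
        "scope": " ".join(sorted(effective)),
        "iat": now,
        "exp": now + ttl_s,        # short-lived by design
    }


alice = Principal("alice@example.com", frozenset({"tickets:read", "tickets:write"}))
agent = Principal("support-agent", frozenset({"tickets:read", "tickets:write", "billing:refund"}))

# The agent holds billing:refund, but Alice does not, so it cannot be used on her behalf.
token = issue_obo_token(alice, agent, {"tickets:read", "billing:refund"})
print(token["scope"])  # → tickets:read
```

Because every token carries both identities, each downstream log entry can name the human authorizer and the agent, which is the attribution the audit-trail principle calls for.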

»Implementing zero trust with HashiCorp Vault

Translating zero trust principles into operational reality requires tooling that can enforce identity-based access control, manage secrets at scale, automate credential lifecycles, and provide comprehensive audit capabilities — all while working across hybrid and multi-cloud environments.

HashiCorp Vault has emerged as a foundational platform for implementing these capabilities, providing a policy-driven approach to identity and secrets management that scales from development environments to global production deployments. Vault is an industry standard in secrets management, and it is also part of the broader HashiCorp and IBM Security Lifecycle Management solution, which KuppingerCole's 2025 Non-Human Identities Leadership Compass report identified as an “overall leader.”

Vault's capabilities map directly to the agentic security challenges we outlined earlier:

- Secret discovery and remediation: HCP Vault Radar scans for unmanaged secrets across a broad range of data sources, such as Git repositories, IDEs, and collaboration platforms, both before and within CI/CD pipelines. It provides real-time alerts and guided remediation to quickly fix any issues found, including moving secrets into Vault for central visibility and control.

- Dynamic secret generation: Rather than managing long-lived static credentials, Vault enables you to generate secrets just-in-time for applications and services across hybrid environments. With built-in policy creation and enforcement, you can assign short TTLs to credentials for databases, cloud providers, and LDAP directories. Even if credentials leak, the window of exploitability is measured in minutes rather than months.

- Automated certificate management: The PKI secrets engine automates certificate issuance and renewal, supports SPIFFE identities and mutual TLS, and scales across hybrid environments, ensuring TLS is always properly configured.

- Identity-based access control: Vault's unified identity system works across clouds and identity providers, creating a consistent identity layer regardless of underlying infrastructure.

- Comprehensive audit logging: Every Vault operation is logged with full context (who accessed what, when, and from where), providing compliance and forensics capabilities.
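To make the dynamic-secrets workflow concrete, here is a minimal sketch that reads short-lived database credentials from Vault's HTTP API using only the Python standard library. It assumes the database secrets engine is mounted at its default `database/` path, that a role named `readonly` already exists, and that the caller holds a Vault token in the `VAULT_TOKEN` environment variable; those names are illustrative. The request targets `GET /v1/<mount>/creds/<role>` with the `X-Vault-Token` header, and the response carries a `lease_duration` in seconds alongside the generated `username` and `password`. The `DbLease` type and helper functions are hypothetical.

```python
from __future__ import annotations

import json
import os
import time
import urllib.request
from dataclasses import dataclass


@dataclass
class DbLease:
    username: str
    password: str
    lease_id: str
    expires_at: float  # Unix time when the lease ends and Vault revokes the credentials (unless renewed)

    def seconds_left(self, now: float | None = None) -> float:
        current = time.time() if now is None else now
        return max(0.0, self.expires_at - current)


def parse_creds_response(body: dict, now: float) -> DbLease:
    """Convert a database/creds response into a lease with an absolute expiry time."""
    return DbLease(
        username=body["data"]["username"],
        password=body["data"]["password"],
        lease_id=body["lease_id"],
        expires_at=now + body["lease_duration"],  # lease_duration is in seconds
    )


def fetch_db_creds(role: str = "readonly", mount: str = "database") -> DbLease:
    """Ask Vault to mint fresh database credentials for `role` (assumed names)."""
    addr = os.environ.get("VAULT_ADDR", "https://127.0.0.1:8200")
    req = urllib.request.Request(
        f"{addr}/v1/{mount}/creds/{role}",
        headers={"X-Vault-Token": os.environ["VAULT_TOKEN"]},
    )
    # For https:// URLs, urlopen verifies the server certificate by default.
    with urllib.request.urlopen(req) as resp:
        return parse_creds_response(json.load(resp), now=time.time())
```

Credentials returned by `fetch_db_creds()` stop working once the lease expires, so an agent should fetch new ones (or renew the lease through Vault's `sys/leases/renew` endpoint) before `seconds_left()` reaches zero rather than caching them indefinitely.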

»Measurable security outcomes

Implementing zero trust principles for agentic systems delivers concrete improvements:

- Reduced attack surface: Eliminating static credentials and overly permissive access removes entire vulnerability classes.

- Improved secret hygiene: Centralized management, automated rotation, and continuous monitoring ensure proper secret handling.

- Scalable identity management: Unified identity with dynamic credentials reduces exposure and simplifies forensics.

- Resilient PKI architecture: Automated certificate lifecycle management ensures consistent encryption.

- Future-proof security: Zero trust architectures adapt to emerging AI risks without architectural rewrites.

»Moving forward: A pragmatic approach

Security teams face an uncomfortable reality: agentic AI systems are being deployed faster than comprehensive security solutions are being developed. The pragmatic path forward is to address the risks you can manage today while keeping your eyes on the road ahead.

- What's different: The largely predictable behavior of AI models fundamentally changes the threat landscape. Agents tend to make the same mistakes when presented with the same crafted inputs, allowing attackers to perfect exploits through iteration.

- What's the same (aka the “good news”): Many agentic vulnerabilities are variations on known problems: credential management, access control, encryption, supply chain security. Apply current best practices with proven tools NOW.

- Plan ahead: Some agentic vulnerabilities, particularly prompt injection, RAG poisoning, and multimodal attacks, require capabilities that don't yet have mature solutions. Plan for these gaps and monitor the evolving landscape.

Non-human identities already vastly outnumber human identities, and that gap will continue to grow. Agentic AI systems represent the future of enterprise software, but their autonomous nature demands architectural rethinking.

Zero trust principles provide the framework for securing these systems at scale. By treating every agent as untrusted, enforcing identity-based access, generating credentials dynamically, encrypting all communications, and maintaining comprehensive audit trails, organizations can harness the power of agentic systems without accepting unmanageable risk.

Organizations that implement zero trust architectures now will be positioned to scale AI capabilities securely, while those that delay will face increasingly sophisticated attacks against fundamentally vulnerable systems. The time to act is not when the first breach occurs; it's now, before agentic systems become critical infrastructure without adequate security controls.

You can dive deeper into this topic by watching "Zero Trust for Agentic Systems: Managing Non-Human Identities at Scale" from AWS re:Invent 2025.

For more information on implementing zero trust for agentic systems, explore HashiCorp Vault's identity-based security capabilities and HCP Vault Radar for secret discovery and remediation.