Terraform Stacks are a feature designed to simplify provisioning and managing infrastructure at scale, giving you a built-in way to grow without added complexity. This post covers the challenges Terraform Stacks solve, their benefits and use cases, how they work, and their key features.

»What challenges do Terraform Stacks solve?

Building composable infrastructure from small modules and workspaces has several benefits. Splitting your Terraform code into manageable pieces helps:

- Limit the blast radius of resource changes

- Reduce run time

- Separate management responsibilities across team boundaries

- Work around multi-step use cases, such as provisioning a Kubernetes cluster

Terraform’s ability to take code, build a graph of dependencies, and turn it into infrastructure is extremely powerful. However, once you split your infrastructure across multiple Terraform configurations, the isolation between states means you must stitch together and manage dependencies yourself.

Additionally, when deploying and managing infrastructure at scale, teams usually need to provision the same infrastructure multiple times with different input values, across multiple:

- Cloud provider accounts

- Environments (dev, staging, production)

- Regions

- Landing zones

Before Terraform Stacks, Terraform had no built-in way to provision and manage the lifecycle of these instances as a single unit, so teams had to manage each infrastructure root module individually, which is difficult at scale.

We knew there was a better way to solve these challenges than wrapping Terraform in bespoke scripts and external tooling, which take heavy lifting to build and are error-prone and risky to set up and maintain.

»What are Terraform Stacks and what are their benefits?

Stacks help users automate and optimize the coordination, deployment, and lifecycle management of interdependent Terraform configurations, reducing the time and overhead of managing infrastructure. Key benefits include:

- Simplified management: Stacks eliminate the need to manually track and manage cross-configuration dependencies. Multiple Terraform modules sharing the same lifecycle can be organized and deployed together using components in a Stack.

- Improved productivity: Stacks let users create and modify consistent infrastructure setups with different inputs in a single action. Deployments in a Stack repeat the same infrastructure, and deployment groups can automate the rollout of changes across those repeated instances.

Stacks aim to be a natural next step in extending infrastructure as code to a higher layer using the same Terraform shared modules users enjoy today.

»Common use cases for Terraform Stacks

Stacks support these common use cases out of the box:

- Deploy an entire application with components like networking, storage, and compute as a single unit without worrying about dependencies. A Stack configuration describes a full unit of infrastructure as code and can be handed to users who don’t have advanced Terraform experience, allowing them to easily stand up a complex infrastructure deployment with a single action.

- Deploy across multiple regions, availability zones, and cloud provider accounts without duplicating effort or code. Deployments in a Stack let you define multiple instances of the same configuration without copying and pasting configurations or managing them separately. When a change is made to the Stack configuration, it can be rolled out across all, some, or none of the deployments in a Stack.

- Provision and manage Kubernetes workloads. Stacks streamline the provisioning and management of Kubernetes workloads by letting customers deploy Kubernetes in a single configuration instead of managing multiple, independent Terraform configurations. We often see Kubernetes deployments with too many unknown values (such as a cluster endpoint that doesn't exist until the cluster is created) for Terraform to complete a plan. With Stacks, customers can reach production faster with Kubernetes deployments at scale, without a layered approach that is hard to complete within Terraform.
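As a rough sketch of the first use case, the components file below wires three hypothetical modules together. A component can pass another component's output into its own inputs with a component.<name>.<output> reference, and that reference is how the Stack learns the dependency order, so there is nothing to stitch together by hand. The module paths, input names, and output names (private_subnet_ids, bucket_name) are illustrative assumptions, and the var.* variables and the provider.aws.this configuration would need to be declared elsewhere in the Stack.

```hcl
# components.tfcomponent.hcl (hypothetical module paths, inputs, and output names)

component "network" {
  source = "./modules/network"

  inputs = {
    cidr_block = var.cidr_block
  }

  providers = {
    aws = provider.aws.this
  }
}

component "storage" {
  source = "./modules/storage"

  inputs = {
    bucket_prefix = var.prefix
  }

  providers = {
    aws = provider.aws.this
  }
}

component "compute" {
  source = "./modules/compute"

  inputs = {
    # Referencing other components' outputs creates the dependencies, so
    # Terraform creates "network" and "storage" before "compute"
    subnet_ids  = component.network.private_subnet_ids
    bucket_name = component.storage.bucket_name
  }

  providers = {
    aws = provider.aws.this
  }
}
```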

To learn more, read the Terraform Stacks use cases documentation.

»How do I use a Terraform Stack?

Stacks introduce a new configuration layer that sits on top of Terraform modules and is written as code.

»Components

The first part of this configuration layer, written in files with a .tfcomponent.hcl extension, tells Terraform what infrastructure, or components, should be part of the Stack. Components let you compose multiple modules that share a lifecycle and deploy them together. Add a component block to the components.tfcomponent.hcl configuration for every module you'd like to include in the Stack, and specify the source module, inputs, and providers for each component.

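components.tfcomponent.hcl

```hcl
component "cluster" {
  # Reuse an existing Terraform module from a local path
  source = "./eks"

  # Values passed to the module's input variables
  inputs = {
    aws_region          = var.aws_region
    cluster_name_prefix = var.prefix
    instance_type       = "t2.medium"
  }

  # Map each provider the module requires to a provider configuration declared in the Stack
  providers = {
    aws       = provider.aws.this
    random    = provider.random.this
    tls       = provider.tls.this
    cloudinit = provider.cloudinit.this
  }
}
```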

You don’t need to rewrite any modules since components can simply leverage your existing ones.
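The component block above refers to provider configurations such as provider.aws.this, which also have to be declared in the Stack configuration. A minimal sketch of those declarations might look like the following. Stack provider blocks take two labels (the provider type and a name) and put their arguments inside a config block. The file name and the version constraints are illustrative assumptions; pin whatever versions your modules actually need.

```hcl
# providers.tfcomponent.hcl (hypothetical file name; version constraints are illustrative)

required_providers {
  aws = {
    source  = "hashicorp/aws"
    version = "~> 5.0"
  }
  random = {
    source  = "hashicorp/random"
    version = "~> 3.5"
  }
  tls = {
    source  = "hashicorp/tls"
    version = "~> 4.0"
  }
  cloudinit = {
    source  = "hashicorp/cloudinit"
    version = "~> 2.3"
  }
}

# Two labels: provider type ("aws") and configuration name ("this"),
# which together form the provider.aws.this reference used by the component
provider "aws" "this" {
  config {
    region = var.aws_region
  }
}

# These providers need no arguments in this sketch
provider "random" "this" {}
provider "tls" "this" {}
provider "cloudinit" "this" {}
```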

»Deployments

The second part of this configuration layer, written in files with a .tfdeploy.hcl extension, tells Terraform where and how many times to deploy the infrastructure in the Stack. For each instance of the infrastructure, add a deployment block with the appropriate input values, and Terraform takes care of repeating that infrastructure for you.

deployments.tfdeploy.hcl

```hcl
deployment "west-coast" {
  inputs = {
    aws_region     = "us-west-1"
    instance_count = 2
  }
}

deployment "east-coast" {
  inputs = {
    aws_region     = "us-east-1"
    instance_count = 1
  }
}
```

When a new version of the Stack configuration is available, plans are initiated for each deployment in the Stack. Once the plans are complete, you can approve the change in all, some, or none of the deployments in the Stack.
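Each key in a deployment's inputs map lines up with a variable declared in the component configuration, and in a Stack each variable declares its type. The sketch below shows declarations that would satisfy the two deployments above; the file name and the default value for prefix are hypothetical. Because neither deployment sets prefix, giving it a default keeps both deployments valid.

```hcl
# variables.tfcomponent.hcl (hypothetical file name)

variable "aws_region" {
  type = string
}

variable "instance_count" {
  type = number
}

# Not set by either deployment, so it needs a default (value is hypothetical)
variable "prefix" {
  type    = string
  default = "demo"
}
```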

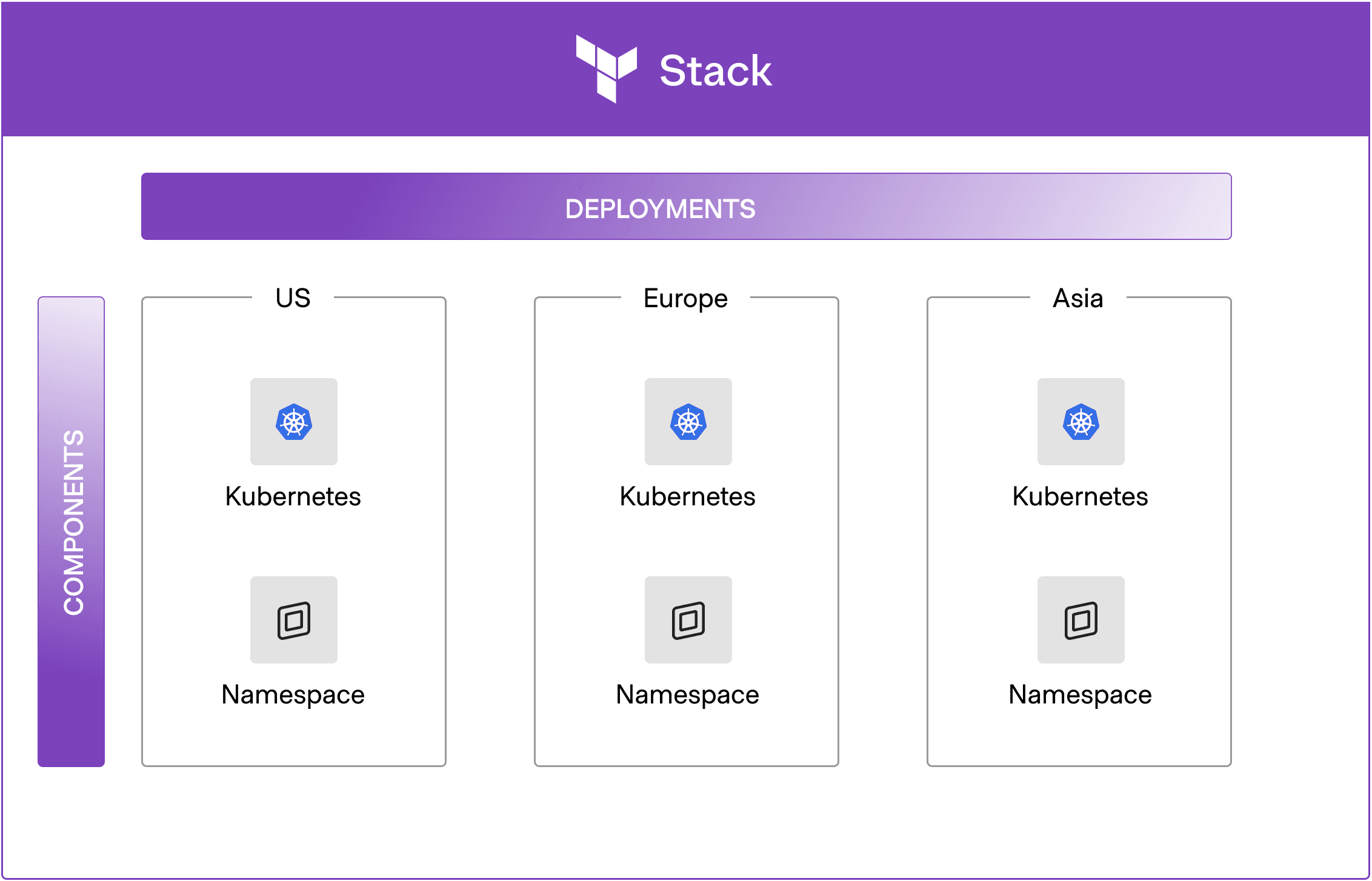

Example: The Kubernetes and namespace components are repeated across three regions using three deployments.

»Deployment group orchestration rules

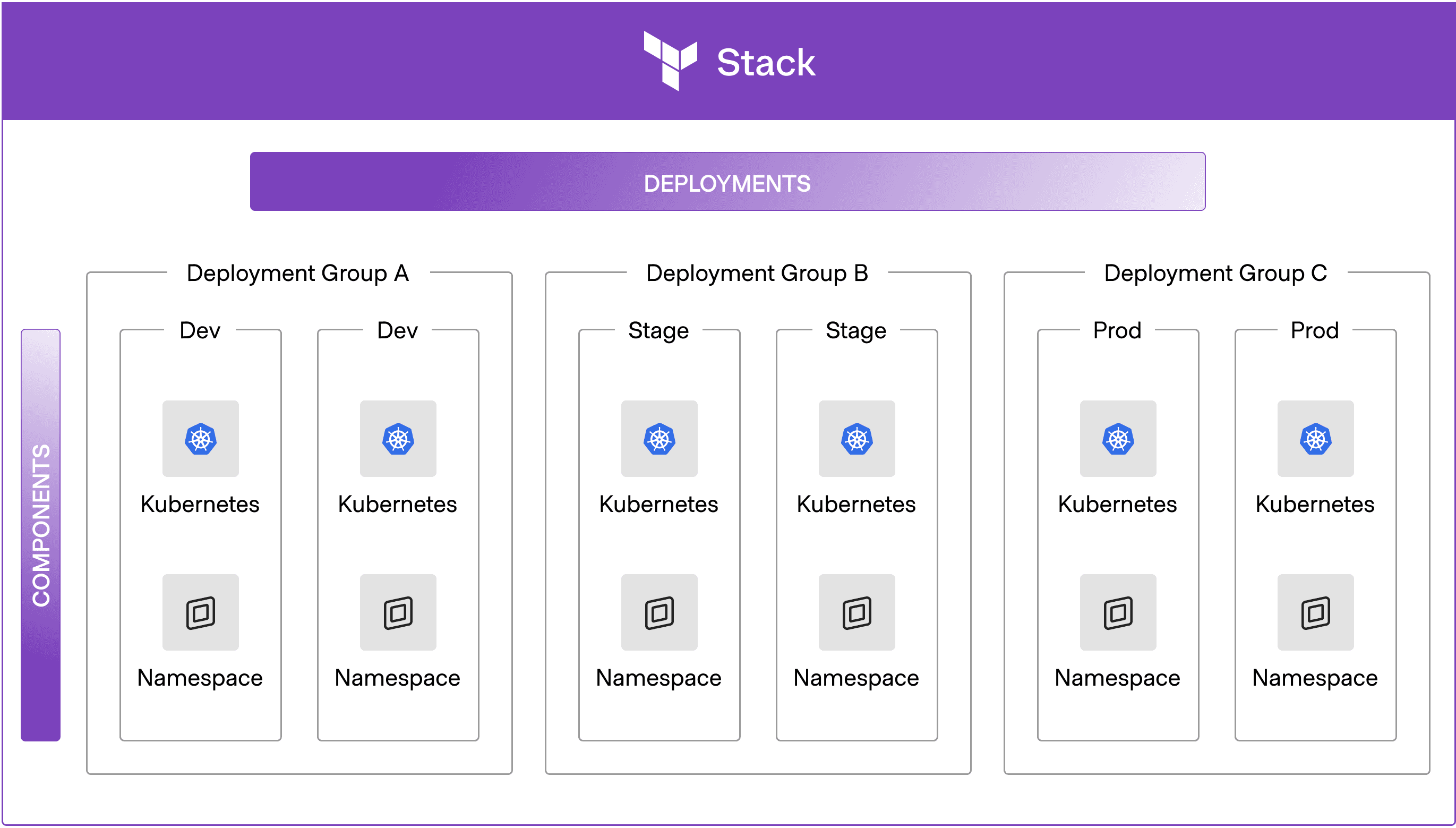

As your usage of Stacks grows, manually managing and approving every deployment can become a significant bottleneck. To manage deployments effectively at scale, Stacks provides deployment groups, which replace the orchestration rules from the public beta. Deployment groups let you logically group deployments by environment, team, or application, then assign automation rules at the group level.

Example: The Kubernetes and namespace components are repeated across three deployment groups with multiple instances for development, staging, and production environments.

Custom deployment groups also let you attach auto-approve checks: conditions that determine whether a deployment's plan can be safely approved without manual intervention. If the checks pass, the plan is approved automatically.

You could, for example, write a check that auto-approves a deployment if its plan contains no resource deletions. The example below shows what this looks like. It defines a custom deployment group called canary with an auto-approve check that ensures no resources are removed in the plan before automatically approving it.

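```hcl
# deployments.tfdeploy.hcl

# Assign this deployment to the custom "canary" deployment group
deployment "canary" {
  # inputs = { ... } omitted for brevity
  deployment_group = deployment_group.canary
}

# Plans in this group are auto-approved when the listed checks pass
deployment_group "canary" {
  auto_approve_checks = [deployment_auto_approve.no_deletes]
}

# Reusable rule: approve only when the plan removes no resources
deployment_auto_approve "no_deletes" {
  check {
    condition = context.plan.changes.remove == 0
    # Explains why the plan was not auto-approved when the condition is false
    reason    = "Plan has ${context.plan.changes.remove} resources to be removed."
  }
}
```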

Each deployment group can have its own unique set of checks, enabling tailored automation for different environments like development, staging, or production. This means you don't have to repeatedly configure the same logic for every single deployment, leading to faster, safer, and more consistent deployments across your organization.
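To illustrate per-environment checks, here is a sketch of a deployments file that applies a no-deletes rule to a development group while leaving production for manual review. The deployment names, regions, and group layout are hypothetical, the inputs are trimmed to a single value for brevity, and the sketch assumes that a deployment group with no auto_approve_checks falls back to manual approval. It is written as a standalone alternative to the canary example, not an addition to the same file, since declaring the same deployment_auto_approve name twice would conflict.

```hcl
# deployments.tfdeploy.hcl (hypothetical names; other inputs omitted)

deployment_auto_approve "no_deletes" {
  check {
    condition = context.plan.changes.remove == 0
    reason    = "Plan has ${context.plan.changes.remove} resources to be removed."
  }
}

# Development: auto-approve whenever nothing is being removed
deployment_group "dev" {
  auto_approve_checks = [deployment_auto_approve.no_deletes]
}

# Production: no auto-approve checks, so plans wait for manual approval (assumed behavior)
deployment_group "prod" {}

deployment "dev-us-east" {
  inputs           = { aws_region = "us-east-1" }
  deployment_group = deployment_group.dev
}

deployment "dev-us-west" {
  inputs           = { aws_region = "us-west-1" }
  deployment_group = deployment_group.dev
}

deployment "prod-us-east" {
  inputs           = { aws_region = "us-east-1" }
  deployment_group = deployment_group.prod
}
```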

Custom deployment groups are available in the HCP Terraform Premium plan. Default deployment groups are available in all plans. To learn more about deployment group orchestration rules, please refer to this documentation: Set conditions for deployment plans.

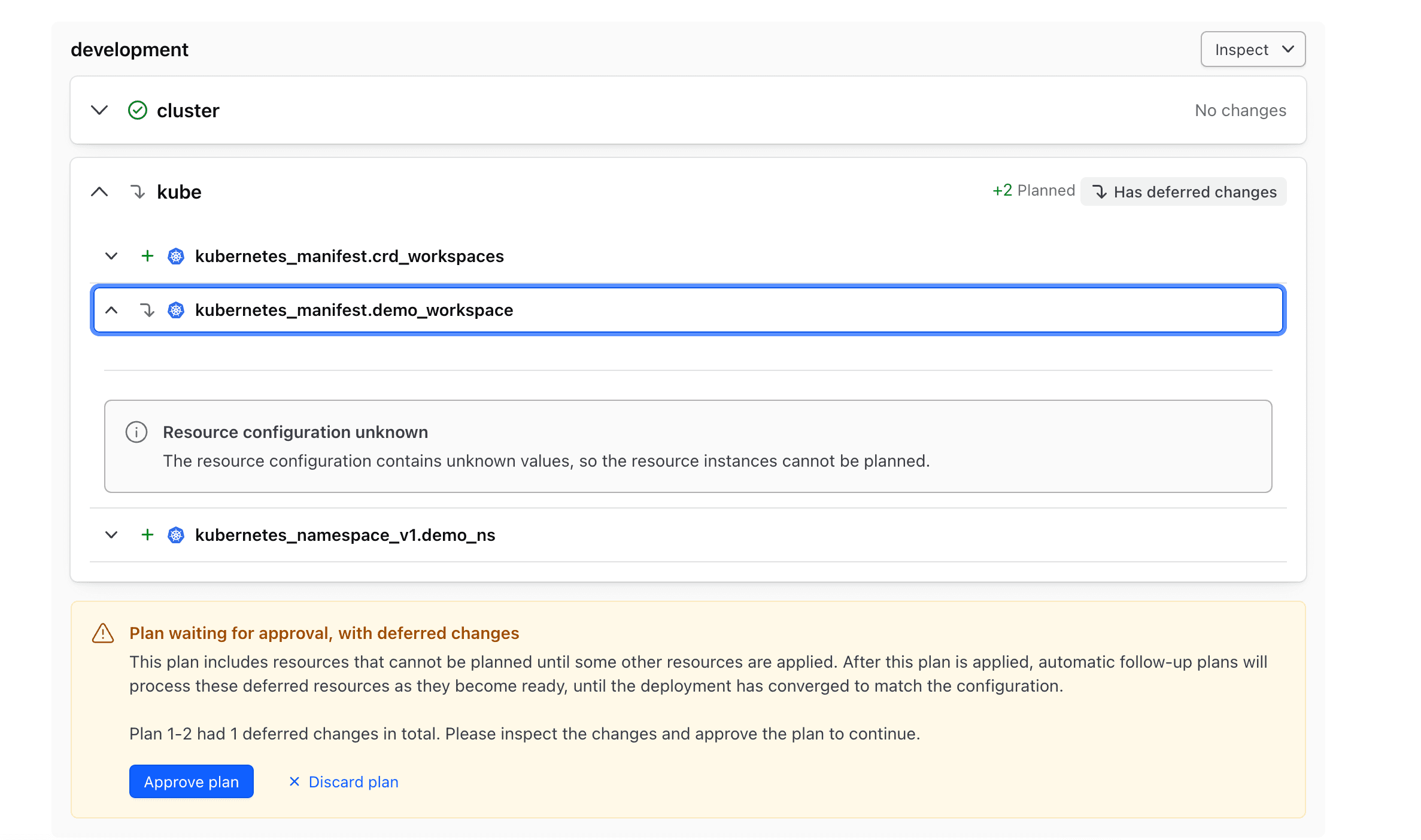

»Deferred changes

Deferred changes is a Stacks feature that lets Terraform produce a partial plan when it encounters too many unknown values, instead of halting the operation. Terraform plans and applies what it can, then plans the deferred resources in a later run once their values are known. This helps users work through these situations more easily and is what makes the Kubernetes use case described in the common use cases section above possible.

Terraform has generated a partial plan, deferring the remaining resources that cannot be planned yet.

Consider an example of deploying three Kubernetes clusters, each with one or more namespaces, into three different geographies. In a Stack, you would use one component to reference a module that deploys the Kubernetes cluster, and another component for a module that creates a namespace in it. To repeat this Kubernetes cluster across three geographies, you would define a deployment for each geography and pass in the appropriate inputs for each, such as region identifiers.
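The two-component layout described above could be sketched as follows. The key detail is the Kubernetes provider: it is configured from the cluster component's outputs, which are unknown until the cluster exists. That is the situation deferred changes handle, since Terraform can plan the cluster and defer the namespace to a later plan. The module paths, variable names, and output names (cluster_endpoint, cluster_ca_certificate, auth_token) are assumptions for illustration; your cluster module would need to expose equivalent outputs, and the AWS provider configuration is assumed to be declared elsewhere.

```hcl
# components.tfcomponent.hcl (hypothetical module paths, inputs, and output names)

component "cluster" {
  source = "./cluster"

  inputs = {
    aws_region          = var.aws_region
    cluster_name_prefix = var.prefix
  }

  providers = {
    aws = provider.aws.this
  }
}

# Configured from the cluster component's outputs, which stay unknown
# until the cluster is created; deferred changes let the namespace wait
provider "kubernetes" "this" {
  config {
    host                   = component.cluster.cluster_endpoint
    cluster_ca_certificate = component.cluster.cluster_ca_certificate
    token                  = component.cluster.auth_token
  }
}

component "namespace" {
  source = "./namespace"

  inputs = {
    namespace = var.namespace
  }

  providers = {
    kubernetes = provider.kubernetes.this
  }
}
```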

If you decided to add a new namespace to each of your Kubernetes clusters, the change would queue plans across all three geographies. To test the change before propagating it, you could approve it in the US geography first. After validating that everything works as expected, you could approve the change in Europe next, and save the plan in Asia for later. Changes in the Stack that are not yet applied in one or more deployments can still be planned.
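The three geographies map to three deployment blocks, sketched below with hypothetical deployment names and region identifiers. The sketch assumes the component configuration declares aws_region and namespace variables and gives any other variables defaults. When the namespace change lands in the Stack configuration, each of these deployments gets its own plan, so you could approve us first, then europe, and leave asia pending.

```hcl
# deployments.tfdeploy.hcl (hypothetical names and regions)

deployment "us" {
  inputs = {
    aws_region = "us-east-1"
    namespace  = "app"
  }
}

deployment "europe" {
  inputs = {
    aws_region = "eu-west-1"
    namespace  = "app"
  }
}

deployment "asia" {
  inputs = {
    aws_region = "ap-southeast-1"
    namespace  = "app"
  }
}
```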

See how Kubernetes clusters are deployed in Terraform Stacks by watching this video:

»Unified CLI experience

Developers can create, manage, and iterate on Terraform Stacks directly from the command line without a connected VCS. This streamlines local development, letting engineers run quick experiments, such as initializing a new Stack, creating starter configuration files, validating the setup, and uploading source bundles to a Stack, before integrating Stacks into standard workflows.

For platform teams, the CLI gives you greater control over your deployments. You can trigger plan and apply operations for some or all deployments in a Stack, allowing you to integrate Terraform Stacks into your CI/CD pipelines while still using VCS as your source of truth. As of GA, the standalone terraform-stacks-cli has been merged into the main Terraform CLI, unifying Stacks development workflows with the rest of Terraform. To learn more about the new terraform stacks commands, refer to the Terraform CLI documentation.

»Linked Stacks

Managing large-scale infrastructure often requires separating foundational components — like networking, identity, or landing zones — into their own Stacks. To support these kinds of architectures, linked Stacks offer a way to define and manage cross-stack dependencies directly in configuration. This feature simplifies the flow of information by automatically triggering updates in downstream stacks when upstream changes are detected.

Linked Stacks help preserve separation of concerns while keeping dependent infrastructure consistently up to date. This reduces complexity and manual overhead, allowing teams to build more modular, scalable, and automated infrastructure.
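A minimal sketch of the linked Stacks pattern, assuming a networking Stack whose network deployment exposes a vpc_id output: the upstream Stack publishes the value with a publish_output block, and the downstream Stack reads it through an upstream_input block that points at the upstream Stack's address. The organization, project, and Stack names in the source string are hypothetical, as are the deployment names; check the Pass data from one Stack to another documentation for the exact address format.

```hcl
# Upstream networking Stack: deployments.tfdeploy.hcl
# Publish the network deployment's vpc_id output for other Stacks to consume
publish_output "vpc_id" {
  description = "ID of the shared VPC."
  value       = deployment.network.vpc_id
}

# Downstream application Stack: deployments.tfdeploy.hcl
# Hypothetical address: <hostname>/<organization>/<project>/<stack>
upstream_input "network_stack" {
  type   = "stack"
  source = "app.terraform.io/example-org/example-project/networking-stack"
}

deployment "application" {
  inputs = {
    # Changes to the published value trigger updates in this Stack
    vpc_id = upstream_input.network_stack.vpc_id
  }
}
```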

For a deeper dive into linked Stacks and other recent enhancements, check out our blog post: New in HCP Terraform: Linked Stacks, enhanced tags, and module lifecycle management GA and our documentation: Pass data from one Stack to another.

»Self-hosted HCP Terraform agents

Some infrastructure must be managed within tightly controlled environments — where security, compliance, or networking constraints require all Terraform operations to run inside private networks.

To support these use cases, Stacks include full self-hosted agent support. This support is especially useful for enterprise teams that need to use Stacks across production environments where network isolation and regulatory constraints are non-negotiable. Self-hosted agent support enables:

- Execution of Stack deployments behind firewalls or in air-gapped environments

- Alignment with internal compliance and audit requirements

- Greater flexibility for hybrid and on-premises infrastructure use cases

Customers can also scope agent pools to specific Stacks, aligning execution settings so that plan and apply operations are securely run inside their own infrastructure.

Overall, Stacks use the same execution model as the rest of HCP Terraform, so organizations keep control over how and where sensitive infrastructure changes are applied.

»Expanded VCS support

Stacks support secure, enterprise-grade integration with all major VCS providers, including GitHub, GitLab, Azure DevOps Services, and Bitbucket. This includes IP allowlist support for VCS connections, so customers can ensure that their version control systems are only accessible from trusted HCP Terraform IP addresses.

»Stack component configurations in the private registry (GA)

When building Terraform Stacks at scale, users need a centralized, standardized way to share infrastructure best practices that go beyond a single module. The private registry provides this: it's a central place to store your organization's custom, approved Terraform modules and providers.

The private registry can also house approved Terraform Stack component configurations. These configurations represent the most reusable aspect of a Stack's definition, allowing platform engineers to publish versioned, composite configurations where the outputs of one component become the inputs of another.

Note that the private registry stores only the component configuration, which defines the reusable pattern itself and excludes the deployment-specific details found in the deployment configuration. This clear separation accelerates developer velocity by letting application developers source and deploy these complex, compliant patterns reliably from the registry with minimal effort.

To learn more about how to write and publish Stack component configurations, check out the Publish component config documentation.

»RUM visibility

Resources in your Stacks, along with a combined view of Stacks and workspaces, are available on the Usage tab in HCP Terraform. There, you can view two key data points:

- Billable Stacks resources: Shows all resources in your Stacks

- Billable managed resources: Combines Stacks and workspace resources for a complete view of your infrastructure usage

This ensures that Stacks aren’t a black box in your RUM visibility dashboards.

»How can I migrate my existing workspaces to Terraform Stacks?

Migrating existing Terraform workloads from workspaces to Stacks can be complex. A key challenge has been the lack of a clear, automated path to migrate existing Terraform workloads while maintaining state integrity and minimizing disruption.

To address this, the existing Terraform migrate tool includes a workspace-to-stacks migration workflow (beta as of December 2025) that enables a CLI-driven migration and automates the key steps of the transition, including:

- Extracting configuration from existing workspaces.

- Generating valid Stack configuration that reflects the workspace's settings.

- Transferring Terraform state to the new stack deployment.

- Creating and initializing the new stack.

Automating this previously manual process reduces operational overhead and lets teams take advantage of the scalability and organizational benefits of Stacks quickly, with minimal disruption.

The workflow is also safe to test: users can perform dry runs and validate the results without affecting existing workspaces, which makes for a smooth and confident migration.

To learn more, please check out this workspace-to-stacks migration documentation and tutorial.

»Get started with Terraform Stacks

Terraform Stacks are available on all HCP Terraform plans based on resources under management (RUM). Stacks are production-ready with backward-compatible APIs, so you can safely integrate them, along with the Terraform CLI, into your CI/CD pipelines.

You can adopt Stacks incrementally alongside your existing workspaces while taking advantage of features built for large-scale, multi-team environments. To see which features are currently supported and to get started, refer to the documentation and tutorials on HashiCorp Developer.

We’re excited to see how Stacks help teams simplify operations, improve collaboration, and scale infrastructure management with confidence.