We’re pleased to announce that HashiCorp Consul 1.13 is now generally available to all users. This release is yet another step forward in our effort to help organizations reduce operational complexity, run Consul efficiently at scale, and securely integrate service mesh into their application workflows.

Important new features in Consul 1.13 include a Consul on Kubernetes CNI plugin, CLI enhancements for Envoy troubleshooting, enhancements to terminating gateways, and cluster peering (in beta). Let’s run through what’s new.

»Consul on Kubernetes CNI Plugin

By default, Consul on Kubernetes injects an init container, consul-connect-inject-init, that sets up transparent proxy traffic redirection through the sidecar proxy so that applications can communicate within the mesh without any modification. Deploying this init container requires that pods have sufficient Kubernetes RBAC privileges to run containers with the CAP_NET_ADMIN Linux capability. Configuring pods with such escalated privileges is problematic for organizations with strict security standards, which makes it an obstacle to mesh adoption.

As part of the Consul 1.13 release, Consul on Kubernetes now distributes a chained CNI (Container Network Interface) plugin that configures traffic redirection during the network setup phase of the pod lifecycle. This removes the need for the init container to apply traffic redirection rules, and with it any requirement to allow CAP_NET_ADMIN privileges when deploying workloads onto the service mesh. The CNI plugin is available in Consul on Kubernetes 0.48.0 and is supported in Consul 1.13.
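As a rough illustration of what enabling the plugin might look like, the sketch below merges the CNI setting into an existing set of Helm values. It assumes the chart exposes the plugin under `connectInject.cni.enabled`; check the Helm chart reference for your consul-k8s version before relying on that key. The sample values (`global.name`, `connectInject.replicas`) are illustrative only.

```python
import copy
import json


def enable_cni(values):
    """Return a copy of the Helm values with the Consul CNI plugin turned on.

    Assumption: the chart reads the setting from connectInject.cni.enabled.
    """
    merged = copy.deepcopy(values)          # leave the caller's dict untouched
    connect = merged.setdefault("connectInject", {})
    connect["enabled"] = True               # CNI only matters when injection is on
    connect.setdefault("cni", {})["enabled"] = True
    return merged


if __name__ == "__main__":
    # Hypothetical starting values for an existing installation.
    existing = {"global": {"name": "consul"}, "connectInject": {"replicas": 2}}
    # JSON is valid YAML, so the output can be saved and passed to Helm with -f.
    print(json.dumps(enable_cni(existing), indent=2))
```

Because the function returns a new dictionary, it can be applied to values loaded from an existing installation without side effects.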

»Consul on Kubernetes CLI Enhancements for Envoy Troubleshooting

When diagnosing traffic management problems within a service mesh, users typically rely on the Envoy proxy configuration to identify and troubleshoot issues that surface when deploying applications onto the mesh. Consul on Kubernetes now provides two additional CLI commands to help troubleshoot Envoy configuration issues: consul-k8s proxy list and consul-k8s proxy read <pod name>.

The consul-k8s proxy list command lists all pods that have Envoy proxies managed by Consul. Each pod is listed along with its Type, which indicates whether the proxy is a sidecar or part of a gateway deployed by Consul.

Namespace: All Namespaces

| Namespace | Name | Type |
| --- | --- | --- |
| consul | consul-ingress-gateway-6fb5544485-br6fl | Ingress Gateway |
| consul | consul-ingress-gateway-6fb5544485-m54sp | Ingress Gateway |
| default | backend-658b679b45-d5xlb | Sidecar |
| default | client-767ccfc8f9-6f6gx | Sidecar |
| default | client-767ccfc8f9-f8nsn | Sidecar |
| default | client-767ccfc8f9-ggrtx | Sidecar |
| default | frontend-676564547c-v2mfq | Sidecar |
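For scripting around this listing, the output can be parsed into structured rows. The following is a minimal sketch that assumes the three-column, whitespace-aligned layout shown above (Namespace, Name, Type); the real command's spacing or columns may differ, and the sample text is only modeled on the listing in this post.

```python
from collections import defaultdict

# Sample text modeled on the listing above; real output may differ in spacing.
SAMPLE = """\
Namespace: All Namespaces

Namespace  Name                                     Type
consul     consul-ingress-gateway-6fb5544485-br6fl  Ingress Gateway
default    backend-658b679b45-d5xlb                 Sidecar
default    client-767ccfc8f9-6f6gx                  Sidecar
"""


def parse_proxy_list(text):
    """Return (namespace, pod, proxy_type) tuples from the three-column listing."""
    rows = []
    in_table = False
    for line in text.splitlines():
        # Split into at most three fields, since the Type column may contain spaces.
        fields = line.split(None, 2)
        if not in_table:
            # Everything before the column header line is ignored.
            in_table = fields == ["Namespace", "Name", "Type"]
            continue
        if len(fields) == 3:
            rows.append((fields[0], fields[1], fields[2].strip()))
    return rows


def pods_by_type(rows):
    """Group pods as 'namespace/pod' strings under their proxy type."""
    grouped = defaultdict(list)
    for namespace, pod, proxy_type in rows:
        grouped[proxy_type].append(f"{namespace}/{pod}")
    return dict(grouped)


if __name__ == "__main__":
    for proxy_type, pods in pods_by_type(parse_proxy_list(SAMPLE)).items():
        print(f"{proxy_type}: {len(pods)}")
```

Grouping by type makes it easy to pick out, for example, only the gateway pods before inspecting them further.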

The consul-k8s proxy read <pod name> command allows you to inspect the configuration for any Envoy running for a given pod. By default, the command lists the available Envoy clusters, listeners, endpoints, routes, and secrets that the Envoy proxy has configured, as shown below:

Envoy configuration for backend-658b679b45-d5xlb in namespace default:

==> Clusters (5)

| Name | FQDN | Endpoints | Type | Last Updated |
| --- | --- | --- | --- | --- |
| local_agent | local_agent | 192.168.79.187:8502 | STATIC | 2022-05-13T04:22:39.553Z |
| client | client.default.dc1.internal.bc3815c2-1a0f-f3ff-a2e9-20d791f08d00.consul | 192.168.18.110:20000, 192.168.52.101:20000, 192.168.65.131:20000 | EDS | 2022-08-10T12:30:32.326Z |
| frontend | frontend.default.dc1.internal.bc3815c2-1a0f-f3ff-a2e9-20d791f08d00.consul | 192.168.63.120:20000 | EDS | 2022-08-10T12:30:32.233Z |
| local_app | local_app | 127.0.0.1:8080 | STATIC | 2022-05-13T04:22:39.655Z |
| original-destination | original-destination | | ORIGINAL_DST | 2022-05-13T04:22:39.743Z |

==> Endpoints (6)

| Address:Port | Cluster | Weight | Status |
| --- | --- | --- | --- |
| 192.168.79.187:8502 | local_agent | 1.00 | HEALTHY |
| 192.168.18.110:20000 | client.default.dc1.internal.bc3815c2-1a0f-f3ff-a2e9-20d791f08d00.consul | 1.00 | HEALTHY |
| 192.168.52.101:20000 | client.default.dc1.internal.bc3815c2-1a0f-f3ff-a2e9-20d791f08d00.consul | 1.00 | HEALTHY |
| 192.168.65.131:20000 | client.default.dc1.internal.bc3815c2-1a0f-f3ff-a2e9-20d791f08d00.consul | 1.00 | HEALTHY |
| 192.168.63.120:20000 | frontend.default.dc1.internal.bc3815c2-1a0f-f3ff-a2e9-20d791f08d00.consul | 1.00 | HEALTHY |
| 127.0.0.1:8080 | local_app | 1.00 | HEALTHY |

==> Listeners (2)

| Name | Address:Port | Direction | Filter Chain Match | Filters | Last Updated |
| --- | --- | --- | --- | --- | --- |
| public_listener | 192.168.69.179:20000 | INBOUND | Any | * to local_app/ | 2022-08-10T12:30:47.142Z |
| outbound_listener | 127.0.0.1:15001 | OUTBOUND | 10.100.134.173/32, 240.0.0.3/32 | to client.default.dc1.internal.bc3815c2-1a0f-f3ff-a2e9-20d791f08d00.consul | 2022-07-18T15:31:03.246Z |
| | | | 10.100.31.2/32, 240.0.0.5/32 | to frontend.default.dc1.internal.bc3815c2-1a0f-f3ff-a2e9-20d791f08d00.consul | |
| | | | Any | to original-destination | |

==> Routes (1)

| Name | Destination Cluster | Last Updated |
| --- | --- | --- |
| public_listener | local_app/ | 2022-08-10T12:30:47.141Z |

==> Secrets (0)

| Name | Type | Last Updated |
| --- | --- | --- |

»Terminating Gateways Enhancements

Users want the ability to route connections to all external destinations (i.e., both services registered with Consul and those that are not) through the terminating gateway. The goal is to establish well-known egress points for traffic leaving the mesh, authorize connections based on defined access policies, and ensure traffic satisfies security requirements before it is allowed to egress the service mesh. In version 1.13, Consul’s terminating gateway has been enhanced to communicate with external services using transparent proxy.

Prior to Consul 1.13, downstream services could access external services via the terminating gateway either by exposing the service through a statically configured upstream, or by using transparent proxy and connecting to the service through its Consul-assigned virtual service hostname. In some scenarios it may be necessary or desirable to communicate with the external service using its true hostname (e.g. www.example.com) instead of an address that is internal to the service mesh.

Consul 1.13 enables seamless communication with external services by allowing administrators to create an allowed list of external hostnames or IP addresses that can be accessed by downstream services, and funnels those connections to the terminating gateway, which acts as a central egress point for traffic exiting the service mesh.
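The allowed list described above is expressed through configuration entries. As a hedged sketch, the snippet below builds a service-defaults entry with a Destination block and a terminating-gateway entry that references it, then prints them as JSON. The field names (`Destination`, `Addresses`, `Port`, `Services`) reflect the config entry format as we understand it for 1.13 and should be verified against the Consul documentation; the service and gateway names are hypothetical.

```python
import json


def destination_service_defaults(name, addresses, port, protocol="tcp"):
    """Build a service-defaults config entry describing an external destination."""
    if not addresses:
        raise ValueError("at least one hostname or IP address is required")
    return {
        "Kind": "service-defaults",
        "Name": name,
        "Protocol": protocol,
        # Assumed field names for the external destination block.
        "Destination": {"Addresses": list(addresses), "Port": port},
    }


def terminating_gateway(gateway_name, service_names):
    """Build a terminating-gateway config entry that fronts the named services."""
    return {
        "Kind": "terminating-gateway",
        "Name": gateway_name,
        "Services": [{"Name": service} for service in service_names],
    }


if __name__ == "__main__":
    entries = [
        # "example-external" and "terminating-gateway" are placeholder names.
        destination_service_defaults("example-external", ["www.example.com"], 443),
        terminating_gateway("terminating-gateway", ["example-external"]),
    ]
    for entry in entries:
        print(json.dumps(entry, indent=2))
```

Each printed document could be saved to a file and applied with `consul config write <file>`, which accepts JSON as well as HCL.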

»Cluster Peering (Beta)

In larger enterprises, platform teams are often tasked with providing a standard networking solution to independent teams across the organization. Often these teams have made their own choices about cloud providers and runtime platforms, but the platform team still needs to enable secure cross-team connectivity. To make it work, the organization needs a shared networking technology like Consul that it can build upon that works everywhere. Consul has enhanced its cross-team capabilities in 1.13 with a new feature called cluster peering.

»Before Cluster Peering

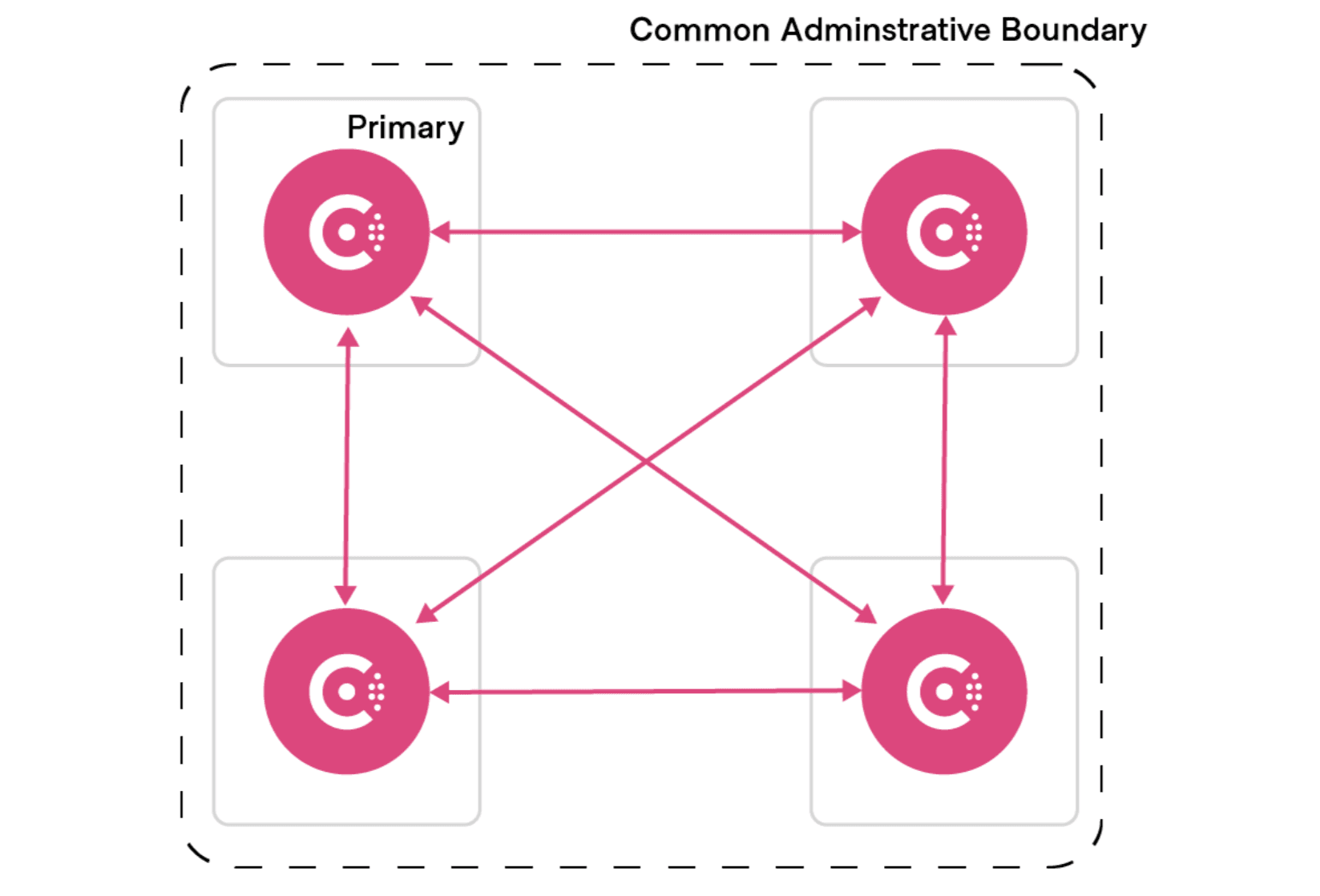

Consul’s current federation model is based on the idea that all Consul datacenters (also known as clusters) are managed by a common administrative control. Security keys, policies, and upgrade activities are assumed to be coordinated across the federation. Mesh configuration and service identities are also global, which means that service-specific configurations, routing, and intentions are assumed to be managed by the same team, with a single source of truth across all datacenters.

This model also requires a full mesh of network connectivity between datacenters, as well as relatively stable connections to remote datacenters. If that matches how you manage infrastructure, WAN federation provides a relatively simple solution: with a small amount of configuration every service can connect to every other service across all your datacenters.

The advantages of WAN federation include:

- Shared keys

- Coordinated upgrades

- Static primary datacenter

However, many organizations are deploying Consul into environments managed by independent teams across different networking boundaries. These teams often require the ability to establish service connectivity with some, but not all, clusters, while retaining the operational autonomy to define a service mesh configuration specific to their needs without conflicting with a configuration defined in other clusters across the federation. That highlights some of the limitations in the WAN federation model:

- Reliance on connectivity to a primary datacenter.

- Assumption of resource sameness across the federation.

- Difficult to support complex hub-and-spoke topologies.

»With Cluster Peering

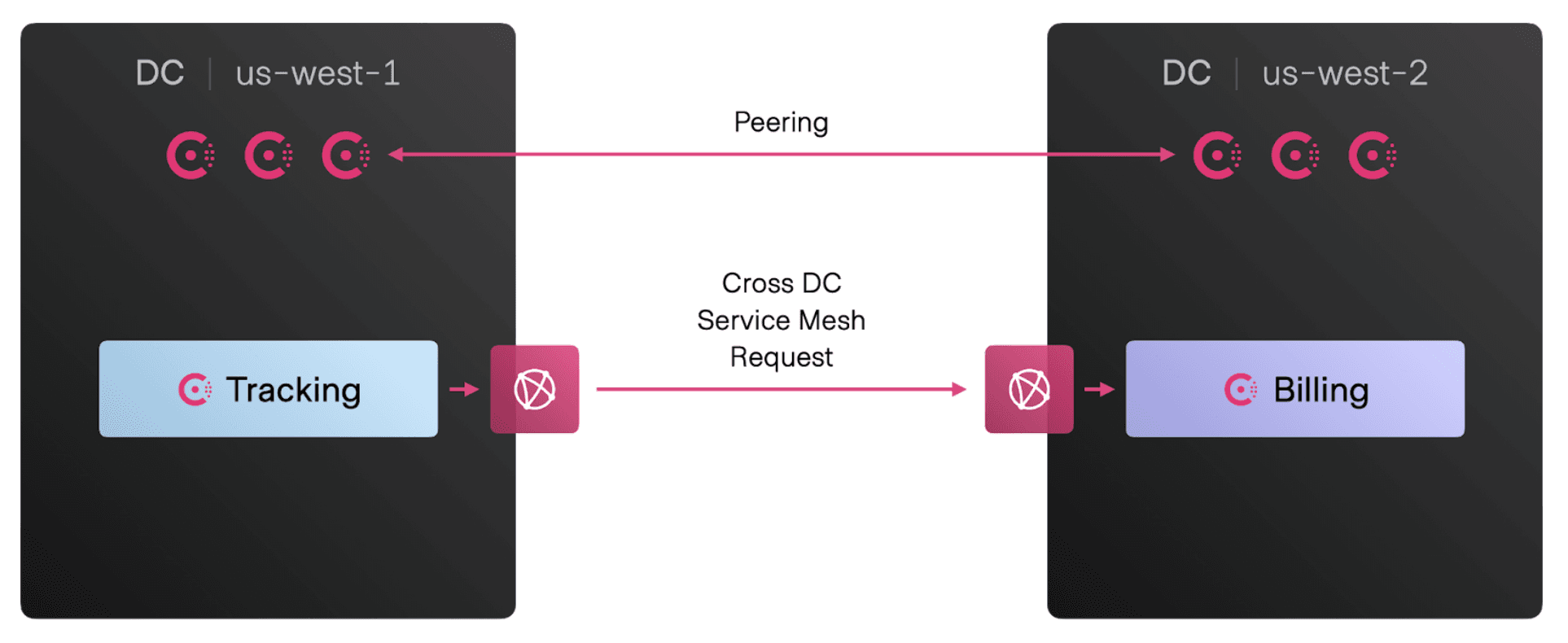

With cluster peering, each cluster is autonomous with its own keys, catalog, and access control list (ACL) information. There is no concept of a primary datacenter.

Cluster administrators explicitly establish relationships (or “peerings”) with clusters they need to connect to. Peered clusters automatically exchange relevant catalog information for the services that are explicitly exposed to other peers.
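To make the relationship between peerings and exported services concrete, here is a small toy model. It is not Consul's implementation or API; the `Cluster` class and its methods are invented purely for illustration. It captures the two rules described above: a service is shared only with clusters that have an explicit peering, and only if it has been explicitly exported to that peer.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy stand-in for an autonomous Consul cluster (illustrative only)."""
    name: str
    services: set = field(default_factory=set)   # local catalog
    peers: set = field(default_factory=set)      # names of peered clusters
    exports: dict = field(default_factory=dict)  # service -> set of peer names

    def peer_with(self, other):
        """Establish an explicit peering; both sides record the relationship."""
        self.peers.add(other.name)
        other.peers.add(self.name)

    def export(self, service, peer_name):
        """Expose one local service to one already-peered cluster."""
        if service not in self.services:
            raise KeyError(f"{service} is not registered in {self.name}")
        if peer_name not in self.peers:
            raise ValueError(f"{self.name} has no peering with {peer_name}")
        self.exports.setdefault(service, set()).add(peer_name)

    def visible_to(self, peer_name):
        """Return the services this cluster shares with the given peer."""
        return {s for s, consumers in self.exports.items() if peer_name in consumers}


if __name__ == "__main__":
    a = Cluster("cluster-01", {"frontend", "billing"})
    b = Cluster("cluster-02", {"backend"})
    a.peer_with(b)
    a.export("billing", "cluster-02")
    print(sorted(a.visible_to("cluster-02")))
```

In this model, `frontend` stays private to cluster-01 because it was never exported, and a third cluster with no peering sees nothing at all.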

In the open source version of Consul 1.13, cluster peering will enable operators to establish secure service connectivity between Consul datacenters, no matter what the network topology or team ownership looks like.

Cluster peering provides the flexibility to connect services across any combination of team, cluster, and network boundaries. The benefits include:

- Fine-grained connectivity

- Minimal coupling

- Operational autonomy

- Support for hub-and-spoke peering relationships

Cluster peering enables users to securely connect applications across internal and external organizational boundaries while maintaining the security offered by mutual TLS, and autonomy between independent service meshes.

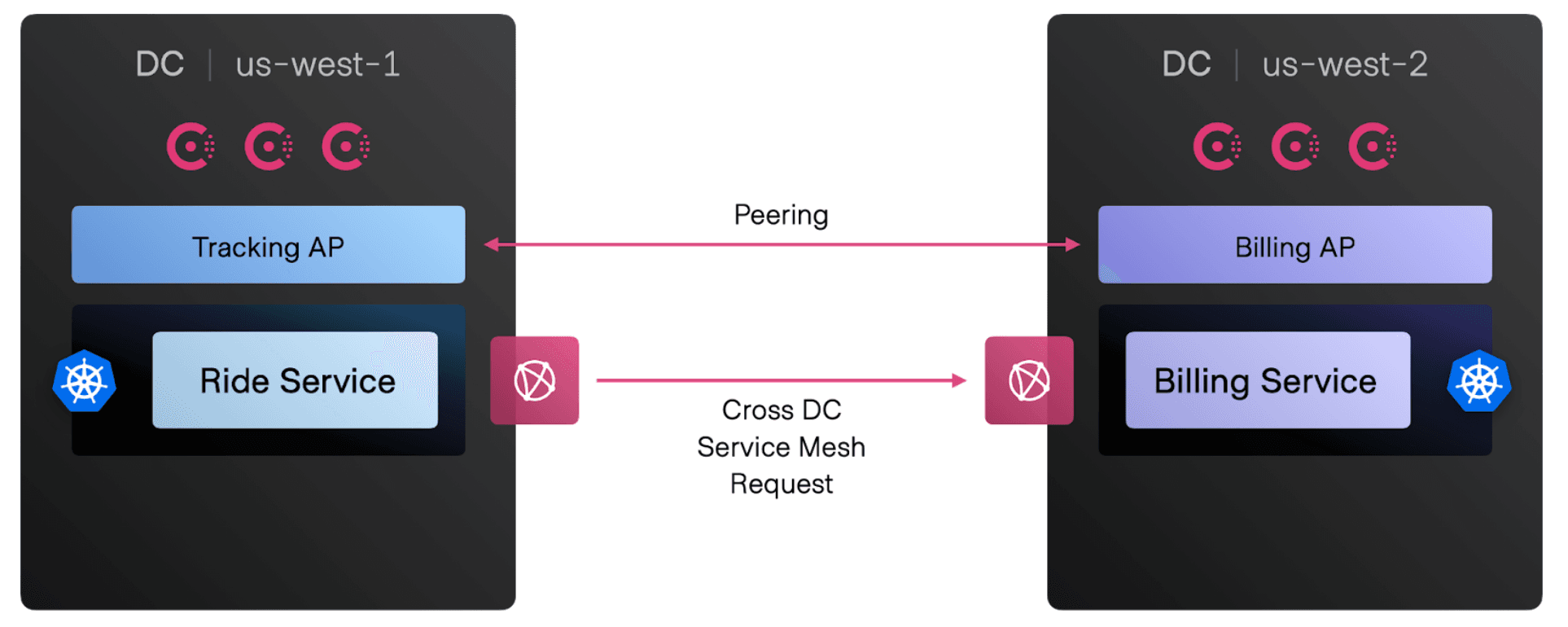

»Cluster Peering in Consul Enterprise

Admin Partitions, an enterprise feature introduced in Consul 1.11, provides improved multi-tenancy so that different teams can develop, test, and run their production services using a single, shared Consul control plane. Admin Partitions lets your platform team operate shared servers to support multiple application teams and clusters. However, Admin Partitions in Consul 1.11 supports connecting partitions only on the same servers in a single region.

Now, with cluster peering, partition owners can establish peering with clusters or partitions located in the same Consul datacenter or in different regions. Peering relationships are independent from any other team’s partitions, even if they share the same Consul servers.

Cluster peering is an exciting new addition to Consul that gives operators greater flexibility in connecting services across organizational boundaries.

Note that cluster peering is not intended to immediately replace WAN federation. Additional functionality is still needed before cluster peering reaches feature parity with WAN federation. If you are already using WAN federation, there is no immediate need to migrate your existing clusters to cluster peering.

»Next Steps

Our goal with HashiCorp Consul is to provide an enterprise-ready, consistent control plane to discover and securely connect any application. For more information, please visit the Consul documentation. To get started with Consul 1.13, please download the appropriate operating system binaries from our release page or install the latest Helm chart that supports Consul 1.13 for Kubernetes. Multi-tenancy with Admin Partitions is part of the Consul Enterprise binaries, and you can get started with a free Consul Enterprise trial.

The HashiCorp Consul team would love your feedback — please share your thoughts with the team using this Consul feedback form.