We are excited to announce the general availability of HashiCorp Nomad 1.2. Nomad is a simple and flexible orchestrator used to deploy and manage containers and non-containerized applications. Nomad works across on-premises and cloud environments. It is widely adopted and used in production by organizations such as Cloudflare, Q2, Pandora, and GitHub.

In beta since October, Nomad 1.2 introduces important new capabilities to Nomad and the Nomad ecosystem, including:

- System Batch jobs

- User interface and CLI upgrades

- Nomad Pack

»System Batch Jobs

Nomad 1.2 introduces a new type of job to Nomad called sysbatch. This is short for "System Batch". These jobs are meant for one-off, cluster-wide, short-lived tasks. System Batch jobs are an excellent option for regularly upgrading software that runs on your client nodes, triggering garbage collection or backups on a schedule, collecting client metadata, or doing one-off client maintenance tasks.

Like System jobs, System Batch jobs work without an update stanza and run on any node in the cluster that is not excluded via constraints. Unlike System jobs, System Batch jobs run only on clients that are ready at the time the job is submitted to Nomad; clients that join the cluster later do not run it.

Like Batch jobs, System Batch jobs are meant to run to completion, can be run on a scheduled basis, and support dispatch execution with per-run parameters.

If you want to run a simple sysbatch job, the job specification might look something like this:

```hcl
job "sysbatchjob" {
  datacenters = ["dc1"]
  type        = "sysbatch"

  constraint {
    attribute = "${attr.kernel.name}"
    value     = "linux"
  }

  group "sysbatch_job_group" {
    count = 1

    task "sysbatch_task" {
      driver = "docker"

      config {
        image   = "busybox:1"
        command = "/bin/sh"
        args    = ["-c", "echo hi; sleep 1"]
      }
    }
  }
}
```

This will run a short-lived Docker task on every client node in the cluster that is running Linux.

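To run this job at regular intervals, add a `periodic` stanza at the job level:

```hcl
periodic {
  cron             = "0 */2 * * *"
  prohibit_overlap = true
}
```

The stanza above instructs Nomad to re-run the sysbatch job every two hours, at the top of the hour. Setting `prohibit_overlap` keeps Nomad from launching a new run while the previous one is still running.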
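Because `periodic` is a job-level stanza, it sits alongside `type` and `datacenters` rather than inside the group or task. Here is a sketch of the earlier example with the schedule added; it assumes a standard five-field cron expression (`0 */2 * * *`, minute 0 of every second hour) and is otherwise unchanged.

```hcl
job "sysbatchjob" {
  datacenters = ["dc1"]
  type        = "sysbatch"

  # Job-level schedule: Nomad launches a new instance of this job on each
  # cron tick (minute 0 of every second hour).
  periodic {
    cron             = "0 */2 * * *"
    prohibit_overlap = true # don't launch while the previous run is still going
  }

  # Only Linux clients run the task.
  constraint {
    attribute = "${attr.kernel.name}"
    value     = "linux"
  }

  group "sysbatch_job_group" {
    task "sysbatch_task" {
      driver = "docker"

      config {
        image   = "busybox:1"
        command = "/bin/sh"
        args    = ["-c", "echo hi; sleep 1"]
      }
    }
  }
}
```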

Additionally, sysbatch jobs can be parameterized and then invoked later using the dispatch command. These specialized jobs act less like regular Nomad jobs and more like cluster-wide functions.

Adding a parameterized stanza defines the arguments that can be passed into the job. For example, a sysbatch job that upgrades Consul to a different version might have a parameterized stanza that looks like this:

```hcl
parameterized {
  payload       = "forbidden"
  meta_required = ["consul_version"]
  meta_optional = ["retry_count"]
}
```
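When the job is dispatched, each `-meta` key/value pair becomes part of the dispatched job's metadata, so the task can read it through interpolation or as a `NOMAD_META_<key>` environment variable. The sketch below shows one way a task might consume `consul_version`. The `upgrade-consul.sh` script is hypothetical, and the choice of the `raw_exec` driver (which must be enabled on clients) is an assumption made because an in-place upgrade touches the host rather than a container.

```hcl
job "upgrade_consul" {
  datacenters = ["dc1"]
  type        = "sysbatch"

  parameterized {
    payload       = "forbidden"
    meta_required = ["consul_version"]
    meta_optional = ["retry_count"]
  }

  group "upgrade" {
    task "upgrade" {
      # raw_exec runs directly on the host; it is disabled by default and
      # must be enabled in the client configuration.
      driver = "raw_exec"

      config {
        # Hypothetical script. The required meta value is interpolated into
        # its arguments; if supplied at dispatch, retry_count is visible to
        # the script as the NOMAD_META_retry_count environment variable.
        command = "/usr/local/bin/upgrade-consul.sh"
        args    = ["${NOMAD_META_consul_version}"]
      }
    }
  }
}
```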

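This sysbatch job could then be registered using the `run` command, which takes the job file, and executed using the `dispatch` command, which takes the job ID. Options such as `-meta` must come before the job ID:

```shell
$ nomad job run upgrade_consul.nomad
$ nomad job dispatch -meta consul_version=1.11.0 upgrade_consul
```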

»User Interface and CLI Upgrades

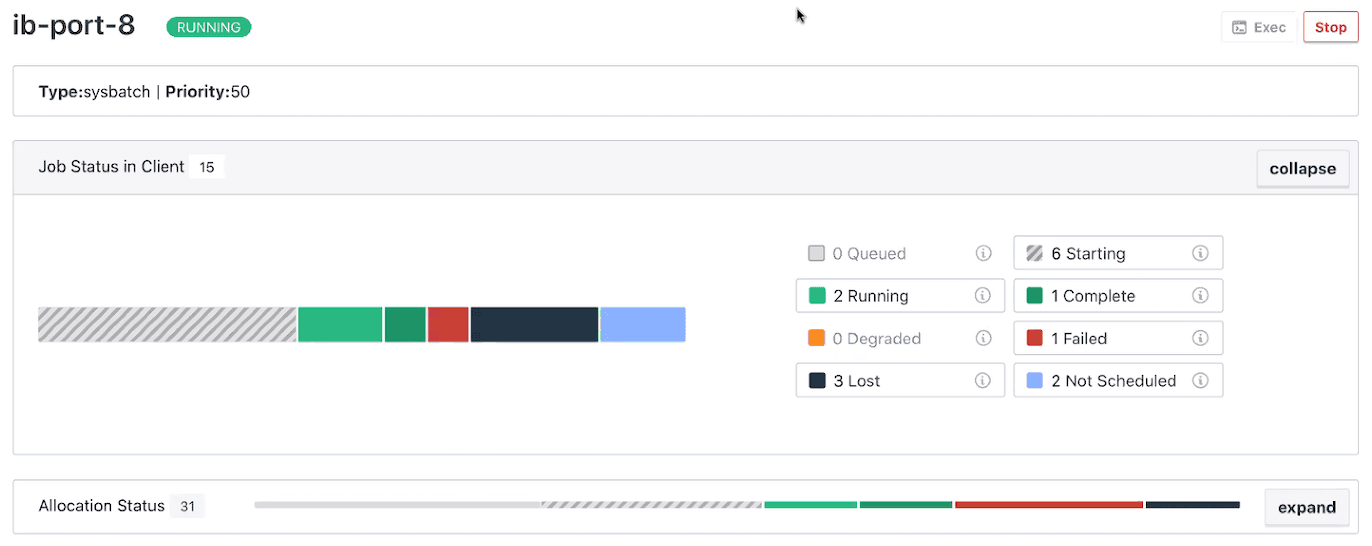

Nomad 1.2 improves job status visualization for Batch jobs and System Batch jobs in the Nomad UI. Traditional Batch jobs and System Batch jobs now include an upgraded Job Status section that includes two new statuses: Not Scheduled and Degraded.

Not Scheduled shows the client nodes that did not run a job. This could be due to a constraint that excluded the node based on its attributes, or because the node was added to the cluster after the job was run.

Degraded indicates that one or more of the job's allocations did not complete successfully.

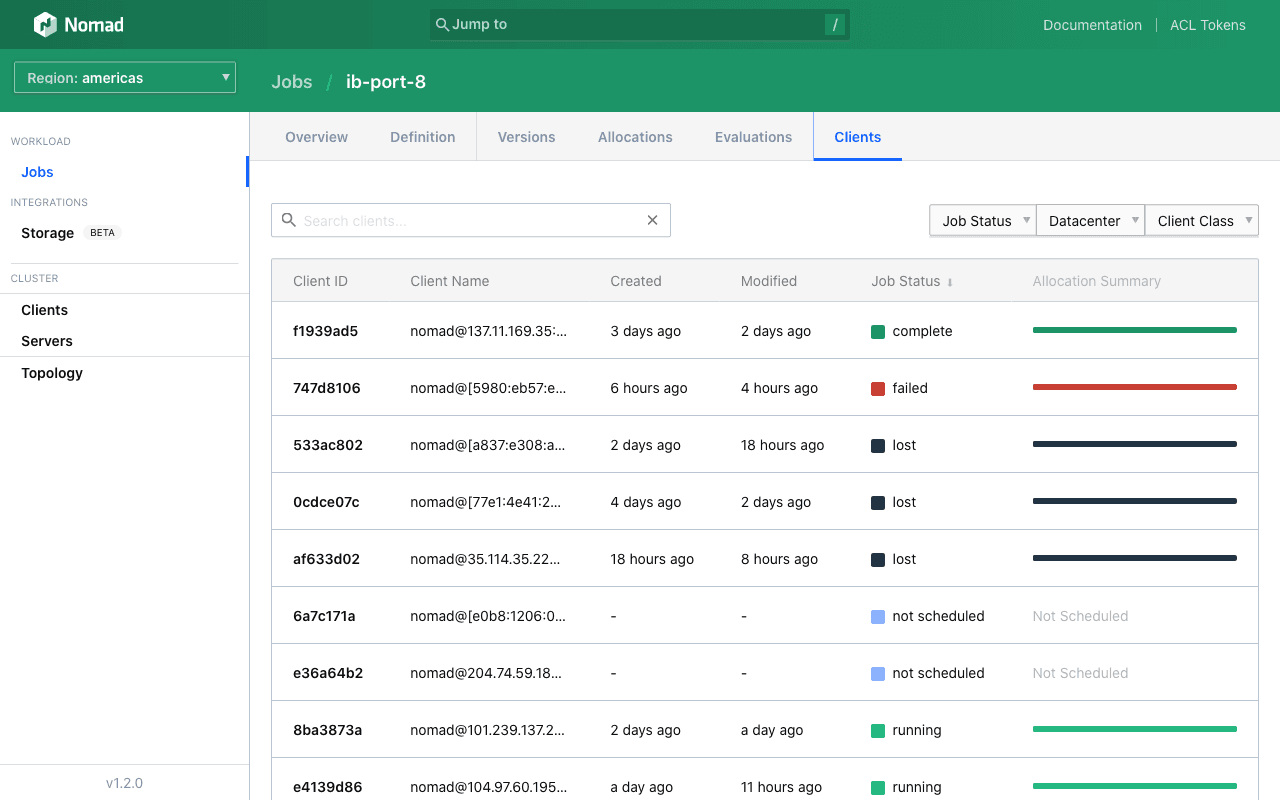

Additionally, you can now view all the client nodes that batch and sysbatch jobs run on with the new Clients tab. This allows you to quickly assess the state of each job across the cluster.

»Nomad Pack (Tech Preview)

We are also excited to announce the tech preview of Nomad Pack, a package manager for Nomad that makes it easy to define reusable application deployments. This lets you quickly spin up popular open source applications, define deployment patterns that can be reused across teams within your organization, and discover job specifications from the Nomad community. Need a quick Traefik load balancer? There’s a pack for that.

Each pack is a group of resources that are meant to be deployed to Nomad together. In the Tech Preview, these resources must be Nomad jobs, but we expect to add volumes and access control list (ACL) policies in a future release.

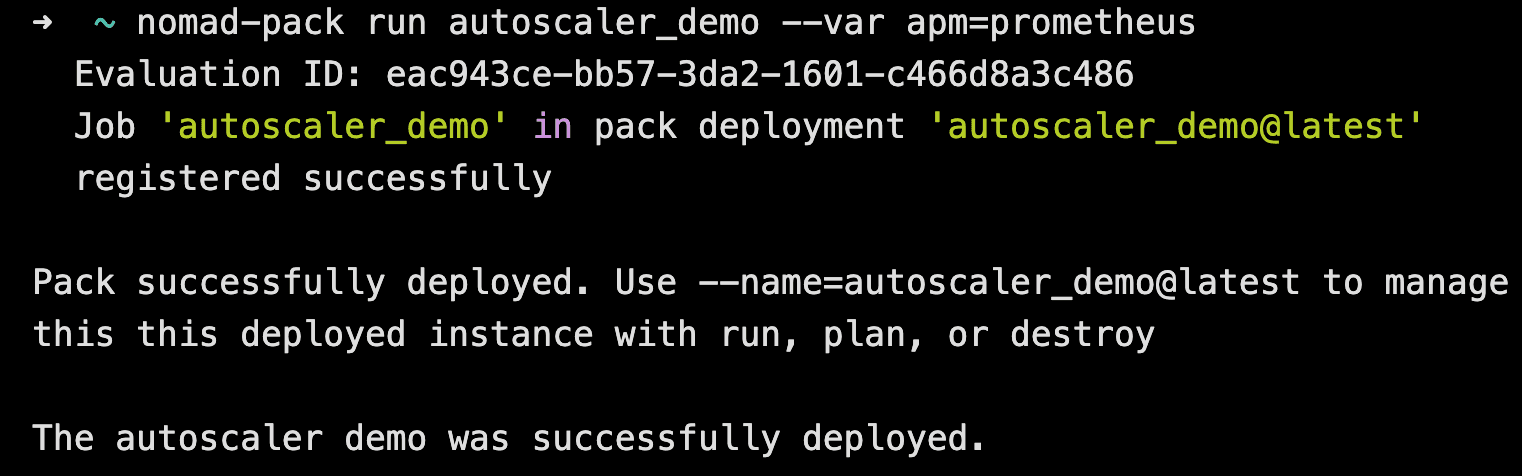

Let’s take a look at Nomad Pack, using the Nomad Autoscaler as an example:

Traditionally, users deploying the Nomad Autoscaler need to deploy and configure multiple Nomad jobs, such as Grafana, Loki, the autoscaler itself, an APM, and a load balancer.

With Nomad Pack, you can run a single `nomad-pack run` command to deploy all the necessary autoscaler resources to Nomad. Optionally, the deployment can be customized by passing in variable values.

This allows you to spend less time learning and writing Nomad job specs for each app you deploy. See the Nomad Pack repository for more details on basic usage.

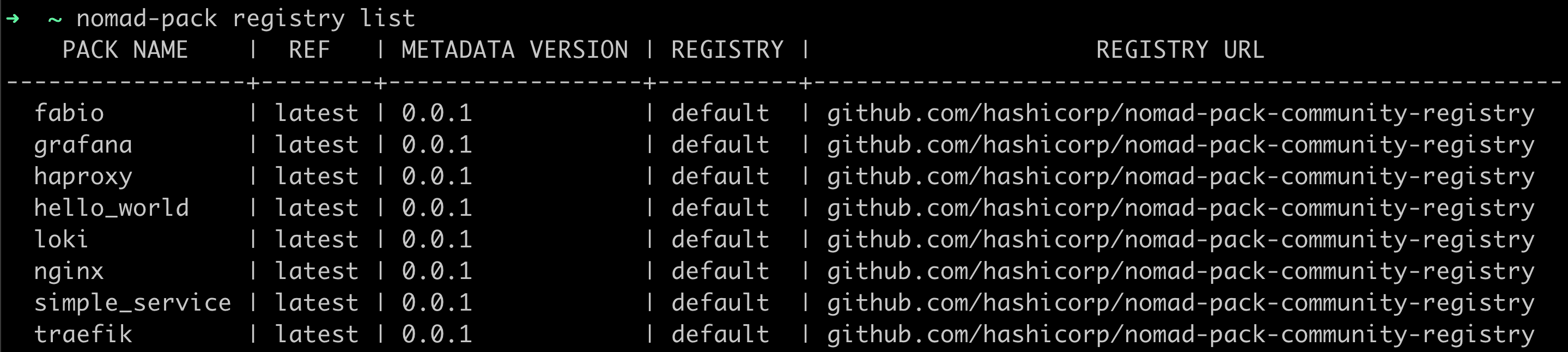

By default, Nomad Pack uses the Nomad Pack Community Registry as its source for packs. This registry provides a location for the Nomad community to share their Nomad configuration files, learn app-specific best practices, and get feedback and contributions from the broader community. Alternative registries and internal repositories can also be used with Nomad Pack. To view available packs, run the `nomad-pack registry list` command.

You can easily write and customize packs for your specific organization’s needs using Go Template, a common templating language that is simple to write but can also contain complex logic. Templates can be composed and re-used across multiple packs, which allows organizations to more easily standardize Nomad configurations, codify best practices, and make changes across multiple jobs at once.
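As an illustration, here is a minimal sketch of a pack's files, based on the tech-preview conventions in which variables are declared in `variables.hcl` and read in templates as `.<pack_name>.<variable>` between `[[ ]]` delimiters. The pack name `my_sysbatch`, its variables, and the file layout shown are hypothetical, and the exact template syntax may differ between Nomad Pack versions. The named `job_name` template in `_helpers.tpl` is the kind of piece that can be shared and composed across templates.

```hcl
# --- variables.hcl (hypothetical pack "my_sysbatch") ---
variable "job_name" {
  description = "Name to give the sysbatch job"
  type        = string
  default     = "my_sysbatch"
}

variable "image" {
  description = "Container image the task runs"
  type        = string
  default     = "busybox:1"
}

# --- templates/_helpers.tpl: a reusable named template ---
[[- define "job_name" -]]
[[ .my_sysbatch.job_name | quote ]]
[[- end -]]

# --- templates/my_sysbatch.nomad.tpl: renders to a Nomad job ---
job [[ template "job_name" . ]] {
  datacenters = ["dc1"]
  type        = "sysbatch"

  group "maintenance" {
    task "run" {
      driver = "docker"

      config {
        # The image is supplied by the pack variable, so each team can
        # override it without editing the template.
        image   = [[ .my_sysbatch.image | quote ]]
        command = "/bin/sh"
        args    = ["-c", "echo hi"]
      }
    }
  }
}
```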

»Nomad Pack for a Pack Contest

In October 2021, we started a monthly competition for Nomad users. Entering the contest is as simple as contributing a pack to the Nomad Pack Community Registry. Each month, the Nomad team reviews the contributed packs and votes on the winners. The first-place prize is a HashiCorp backpack, and up to five additional winners each month receive a Nomad t-shirt!

To learn more about writing your own packs and registries, see the Writing Custom Packs guide in the repository. Please don't hesitate to provide feedback: issues and pull requests are welcome on the GitHub repository, and pack suggestions and votes are encouraged via Community Pack Registry issues.

»What’s Next for Nomad?

There are more improvements in Nomad 1.2 that were not detailed in this post. For a complete list of changes in Nomad 1.2, please see the CHANGELOG. If you are upgrading from a previous release and use the NVIDIA device plugin, we encourage you to read the upgrade guide to learn about the required upgrade steps.

Finally, on behalf of the Nomad team, we would like to thank our amazing community. In addition to contributing a variety of packs to the Nomad Pack Community Registry, your dedication, feature requests, pull requests, and bug reports help us make Nomad better.

We are deeply grateful for your time, passion, and support.

»Next Steps for Nomad Users

- Download Nomad 1.2 from the project website.

- Learn more about Nomad with tutorials on the HashiCorp Learn site.

- Contribute to the Nomad project and participate in our community.