Nomad 0.12 includes significant networking improvements for Nomad-managed services. The two most notable are multi-interface networking and CNI plugin support. Both features can be enabled by Nomad cluster administrators and then used by Nomad job authors. Existing clusters and workloads will continue to work as they do today.

»Container Networking Interface Plugins

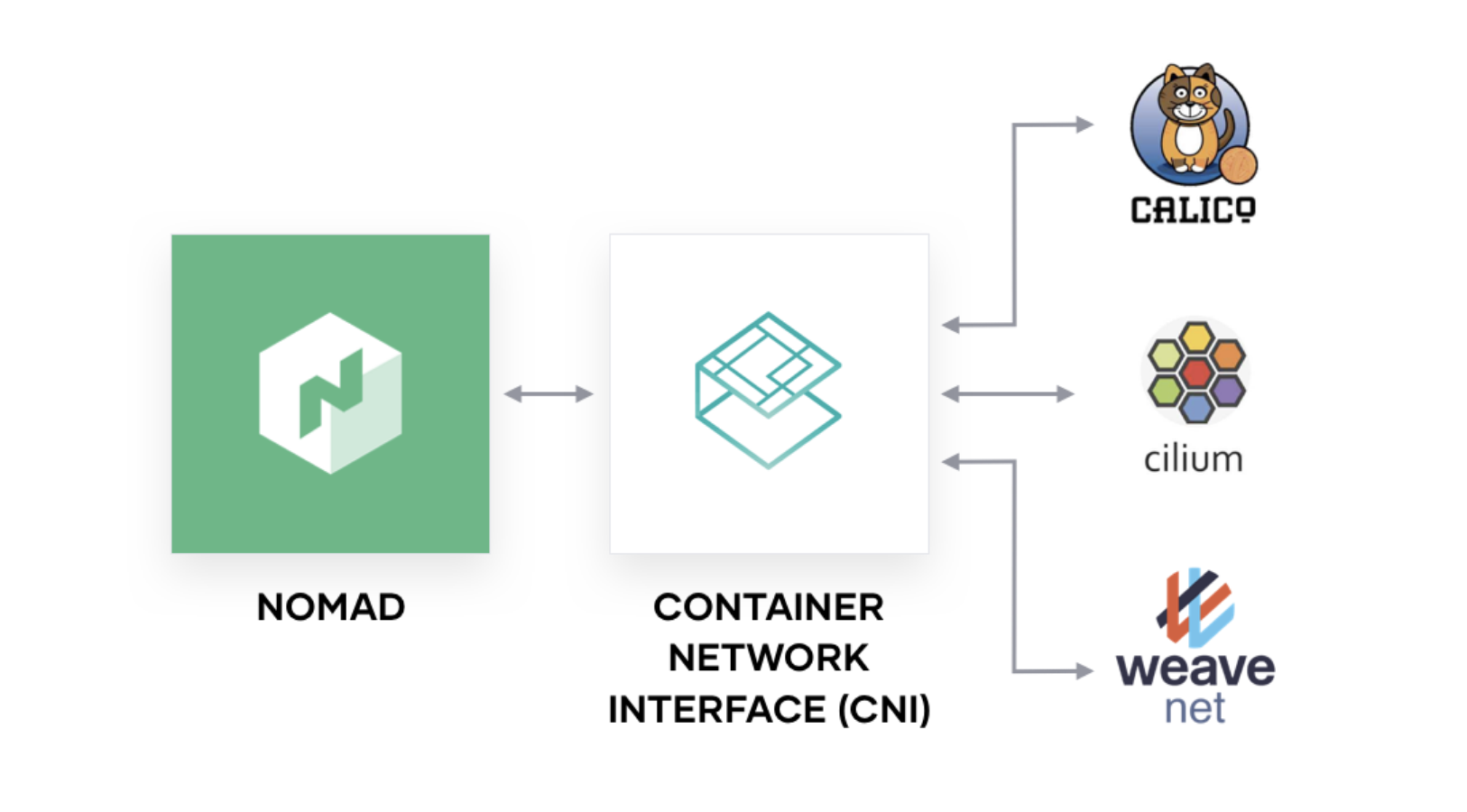

CNI, the Container Networking Interface, is a CNCF project to standardize container networking plugins. Not only do popular virtual networking products like Calico and Weave provide CNI plugins, but clouds may also expose networking functionality via CNI, such as AWS’s ECS plugin. CNI plugins are also useful for configuring Linux’s built-in networking features: Nomad started using CNI plugins in version 0.10, when bridge networking was introduced, to configure the bridge network and port forwarding.

Nomad 0.12 expands Nomad’s CNI support by allowing cluster administrators to define the CNI plugins available on a node for job authors to use. For example, macvlan is a Linux networking technology that operates like a virtual switch, allowing a single physical network interface to have multiple MAC and IP addresses. CNI plugins are configured with JSON files on Nomad client nodes. A macvlan plugin may be configured in a file named /opt/cni/config/mynet.conflist:

```json
{
  "cniVersion": "0.4.0",
  "name": "mynet",
  "plugins": [
    {
      "type": "macvlan",
      "master": "enp1s0f1",
      "ipam": {
        "type": "dhcp"
      }
    },
    {
      "type": "portmap",
      "capabilities": {
        "portMappings": true
      },
      "snat": true
    }
  ]
}
```
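A .conflist describes an ordered chain of plugins: on ADD, the CNI runtime invokes each plugin in the list in turn for the same network namespace, so here macvlan creates and addresses the interface before portmap sets up forwarding. As a rough illustration (a standalone sketch, not part of Nomad), the Go program below decodes a copy of the file above with only the standard library and reports the chain, plus whether any plugin declares the portMappings capability. The type and function names are hypothetical.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// confList mirrors the subset of the CNI .conflist format used above.
// These type names are illustrative, not taken from Nomad or libcni.
type confList struct {
	CNIVersion string       `json:"cniVersion"`
	Name       string       `json:"name"`
	Plugins    []pluginConf `json:"plugins"`
}

// pluginConf keeps only the fields this sketch inspects; other keys such
// as "master", "ipam", and "snat" are ignored by json.Unmarshal.
type pluginConf struct {
	Type         string          `json:"type"`
	Capabilities map[string]bool `json:"capabilities,omitempty"`
}

// A copy of /opt/cni/config/mynet.conflist from the example above.
const mynet = `{
  "cniVersion": "0.4.0",
  "name": "mynet",
  "plugins": [
    {"type": "macvlan", "master": "enp1s0f1", "ipam": {"type": "dhcp"}},
    {"type": "portmap", "capabilities": {"portMappings": true}, "snat": true}
  ]
}`

// pluginChain returns the network name and the plugin types in the
// order the CNI runtime would invoke them on ADD.
func pluginChain(raw string) (string, []string, error) {
	var cl confList
	if err := json.Unmarshal([]byte(raw), &cl); err != nil {
		return "", nil, err
	}
	types := make([]string, 0, len(cl.Plugins))
	for _, p := range cl.Plugins {
		types = append(types, p.Type)
	}
	return cl.Name, types, nil
}

// supportsPortMappings reports whether any plugin in the chain declares
// the portMappings capability, which is what allows a runtime to pass
// port-forwarding rules through to that plugin.
func supportsPortMappings(raw string) (bool, error) {
	var cl confList
	if err := json.Unmarshal([]byte(raw), &cl); err != nil {
		return false, err
	}
	for _, p := range cl.Plugins {
		if p.Capabilities["portMappings"] {
			return true, nil
		}
	}
	return false, nil
}

func main() {
	name, chain, err := pluginChain(mynet)
	if err != nil {
		panic(err)
	}
	ok, err := supportsPortMappings(mynet)
	if err != nil {
		panic(err)
	}
	fmt.Println(name, chain, ok) // mynet [macvlan portmap] true
}
```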

Note that both the macvlan and portmap plugins are configured above. This lets job authors use a macvlan device while still relying on Nomad’s port assignment and forwarding in their job files:

```hcl
job "api" {
  group "api" {
    network {
      mode = "cni/mynet"

      port "http" {
        to = 8080
      }
    }

    task "http" { ... }
  }
}
```

Nomad will run the plugin chain from the CNI configuration named by the group network mode (mynet) and configure the port forwarding accordingly.
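To make that hand-off more concrete, here is a hedged Go sketch of two steps a runtime might take: splitting the group network mode cni/mynet into the CNI network name, and encoding the http port as a portMappings runtime-config entry using the hostPort, containerPort, and protocol fields from the CNI conventions document. The host port 23456 is a made-up stand-in for whatever the scheduler assigns, and the function names are hypothetical; this is an illustration, not Nomad’s implementation.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strings"
)

// portMapping follows the portMappings entry shape described in the
// CNI conventions document.
type portMapping struct {
	HostPort      int    `json:"hostPort"`
	ContainerPort int    `json:"containerPort"`
	Protocol      string `json:"protocol"`
}

// cniNetworkName extracts "mynet" from a group network mode such as
// "cni/mynet". It reports false for non-CNI modes like "bridge".
func cniNetworkName(mode string) (string, bool) {
	const prefix = "cni/"
	if !strings.HasPrefix(mode, prefix) || len(mode) == len(prefix) {
		return "", false
	}
	return strings.TrimPrefix(mode, prefix), true
}

// runtimeConfig builds the JSON a runtime could pass to plugins that
// declare the portMappings capability (portmap in the example conflist).
func runtimeConfig(hostPort, toPort int) (string, error) {
	rc := map[string][]portMapping{
		"portMappings": {{HostPort: hostPort, ContainerPort: toPort, Protocol: "tcp"}},
	}
	b, err := json.Marshal(rc)
	return string(b), err
}

func main() {
	name, ok := cniNetworkName("cni/mynet")
	fmt.Println(name, ok) // mynet true

	// 23456 is a hypothetical scheduler-assigned host port; 8080 is the
	// "to" value from the http port in the job above.
	rc, err := runtimeConfig(23456, 8080)
	if err != nil {
		panic(err)
	}
	fmt.Println(rc)
}
```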

»Multi-Interface Networking

Prior to Nomad 0.12, Nomad only supported a single network interface per Nomad agent. While users could work around this limitation with host networking or task-driver-specific features, each workaround had significant downsides. Host networking presents a number of security challenges: Nomad is unable to ensure that a service uses only the IPs and ports it has been assigned. Task-driver-based workarounds, such as Docker’s network_mode, require careful host configuration and break interoperability with Nomad’s other network concepts, such as the group-level bridge network mode introduced in Nomad 0.10.

Internally, the Nomad scheduler has always supported scheduling across multiple IP addresses per node. However, job authors had no way to select which address a job required, so this scheduler capability was largely unusable.

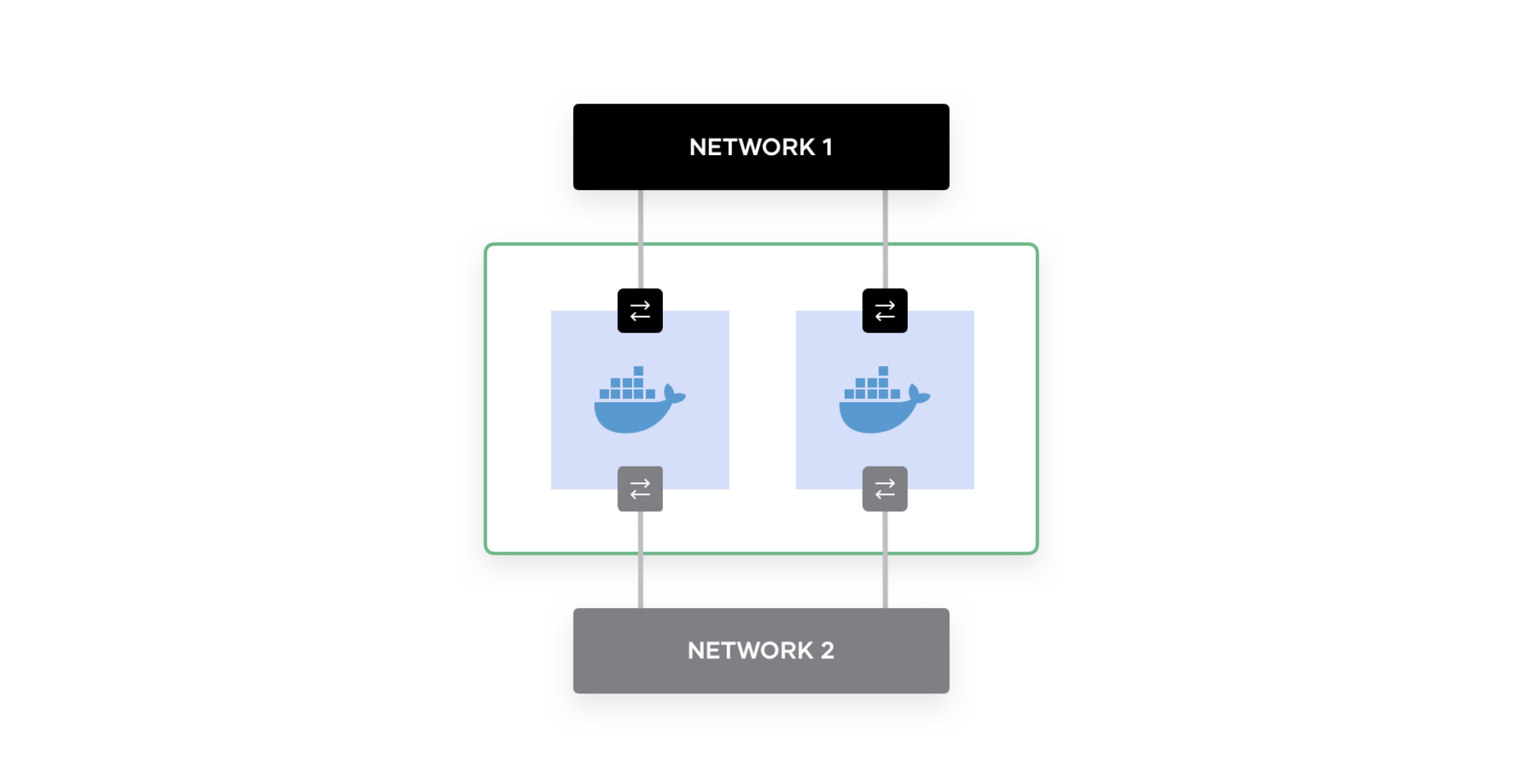

Multi-interface networking in Nomad 0.12 addresses all of these issues by letting cluster administrators configure named networks on a per-node basis and letting job authors select which network their job requires. All of this functionality is backward compatible: existing workloads will continue to work as before.

Multi-interface networking is useful in cases such as ingress services that route traffic from the public Internet to internal services. These frontend workloads (e.g., a load balancer or authenticating gateway) may have two logical network interfaces: one with a public IP address and one with a private IP address. The public interface would be specified in a Nomad 0.12 client agent’s configuration file:

```hcl
client {
  host_network "public" {
    cidr = "203.0.113.0/24"
  }
}
```
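Conceptually, the cidr attribute selects which of the client’s addresses belong to the named network. The Go sketch below shows that membership test with the standard library’s net.ParseCIDR and IPNet.Contains. The candidate addresses and the helper name are hypothetical, and Nomad’s own fingerprinting logic may differ in detail.

```go
package main

import (
	"fmt"
	"net"
)

// addrsInHostNetwork returns the addresses from candidates that fall
// inside cidr, i.e. the ones a host_network with that cidr would cover.
// Unparseable candidate addresses are skipped.
func addrsInHostNetwork(cidr string, candidates []string) ([]string, error) {
	_, ipnet, err := net.ParseCIDR(cidr)
	if err != nil {
		return nil, err
	}
	var matched []string
	for _, c := range candidates {
		ip := net.ParseIP(c)
		if ip != nil && ipnet.Contains(ip) {
			matched = append(matched, c)
		}
	}
	return matched, nil
}

func main() {
	// Hypothetical node addresses: one public (TEST-NET-3), one private.
	node := []string{"203.0.113.10", "10.0.0.5"}
	public, err := addrsInHostNetwork("203.0.113.0/24", node)
	if err != nil {
		panic(err)
	}
	fmt.Println(public) // [203.0.113.10]
}
```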

Then a job author could reference that network requirement in their ingress job specification:

```hcl
network {
  mode = "bridge"

  port "https" {
    static       = 443
    host_network = "public"
  }

  port "metrics" {
    to = 9100
  }
}
```

The ingress job would then receive public Internet traffic on port 443 of the public host network, while metrics would be reachable from the internal network on a dynamically assigned host port that Nomad forwards to port 9100 inside the network namespace. Since the service runs inside its own network namespace, it could simply hardcode ports 443 and 9100. All of the IP addresses and ports, both on the host and inside the network namespace, are also available to services as environment variables.

»Next Step

For more information on getting started with the new networking features, please refer to the Nomad documentation. To watch a live demo of these features, please sign up for the webinar.