LogicMonitor Uses Terraform, Packer & Consul for Disaster Recovery Environments

The following is a guest blog from Randall Thomson, Senior Technical Operations Engineer at LogicMonitor. When he's not daydreaming about his next snowboarding adventure, you will find him busily typing to keep the LogicMonitor SaaS platform in tip-top shape.

»Building our Platform for High Availability

LogicMonitor is a SaaS-based monitoring platform for Enterprise organizations and collects over 20 billion metrics each day from over 55,000 users. Our service needs to be available 24/7, without question. In order to ensure this happens, the LogicMonitor TechOps team uses HashiCorp Packer, Terraform, and Consul to dynamically build infrastructure for disaster recovery (DR) in a reliable and sustainable way.

The LogicMonitor SaaS platform has a cell-based architecture. All of the resources (web and database servers, DNS records, message queues, Elasticsearch clusters, etc.) required to run LogicMonitor are referred to as a pod. It is critical that every resource be provisioned consistently and that nothing is missing. To enforce this level of detail, every resource we provision in the public cloud must be created via Terraform. We made a strong effort over the past 18 months not only to provision new infrastructure using Terraform, but also to backfill (import and/or re-create) existing resources. Serendipitously, our DR plan was born along the way. We no longer needed one way to provision our production infrastructure and a different method for our DR plan. With Terraform, it’s basically the same in either case.

Even with this foolproof plan, there were still gaps in the DR process that depended on us well-intentioned, yet error-prone, humans. You may have several hundred web servers being spun up in parallel, but if they need manual intervention before they can serve your customers, those tasks must be processed serially. Worse, as soon as the automation stops, the actual recovery time climbs rapidly and puts the recovery time objective (RTO) at risk. The switch from automated, parallel actions to serial, manual tasks not only slows the process down to a crawl, it instantly increases the stress burden.

Our team discussed the results from previous DR exercises and developed two goals: make it faster; remove manual steps. Implementing these HashiCorp products (Packer, Terraform, and Consul) helped satisfy both goals. So let's continue forward and address how we overcame a variety of stumbling blocks.

»Building Blocks

We use Packer to generate a variety of pre-baked server templates. Using this approach instead of starting from a generic image offers many advantages: templates can be tagged (which helps add meaningful context to what they are used for), servers boot up with all applications installed (configuration management pre-applied, check!) and the way in which the image was built is documented and placed under version control. We also integrated Packer into our software build and deployment service to provide better visibility and consistency.

    ==> amazon-ebs: Creating AMI tags
        amazon-ebs: Adding tag: "name": "santaba-centos7.4"
        amazon-ebs: Adding tag: "approved": "true"
        amazon-ebs: Adding tag: "prebaked": "true"
        amazon-ebs: Adding tag: "LM_app": "santaba"
        amazon-ebs: Adding tag: "packer_build": "1522690645"
        amazon-ebs: Adding tag: "buildresultsurl": "https://build.logicmonitor.com/PACK"
    ==> amazon-ebs: Creating snapshot tags
    ==> amazon-ebs: Terminating the source AWS instance...
    ==> amazon-ebs: Cleaning up any extra volumes...
    ==> amazon-ebs: Destroying volume (vol-0134567890abcdef)...
    ==> amazon-ebs: Deleting temporary keypair...
    Build 'amazon-ebs' finished.

    ==> Builds finished. The artifacts of successful builds are:
    --> amazon-ebs: AMIs were created:
    eu-west-1: ami-1234567876
    us-east-1: ami-9101112131
    us-west-1: ami-4151617181

With this new process in place, one of the most significant improvements Packer provided was time savings. An individual component often used to take 45 minutes or more after boot-up before it could provide service; it now takes only 5 minutes.
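The tags in the Packer output above are what make a template selectable by code rather than by memory. The following is a minimal Python sketch of that idea, not LogicMonitor's actual tooling. It assumes the image records have already been fetched into plain dictionaries, treats `packer_build` as a Unix timestamp (it looks like one in the output, but that is an assumption), and uses a hypothetical helper name, `latest_approved_image`, plus two made-up image IDs for illustration.

```python
from typing import Optional


def latest_approved_image(images: list[dict], app: str) -> Optional[dict]:
    """Return the newest approved, pre-baked image for `app`, or None."""
    candidates = [
        img for img in images
        if img["tags"].get("approved") == "true"
        and img["tags"].get("prebaked") == "true"
        and img["tags"].get("LM_app") == app
    ]
    # Assumption: packer_build is a Unix timestamp, so the largest value
    # is the most recent build.
    return max(
        candidates,
        key=lambda img: int(img["tags"]["packer_build"]),
        default=None,
    )


# Hypothetical inventory. Only the first entry mirrors the build output above.
images = [
    {"id": "ami-1234567876",
     "tags": {"approved": "true", "prebaked": "true",
              "LM_app": "santaba", "packer_build": "1522690645"}},
    {"id": "ami-0000000001",  # older approved build (made up)
     "tags": {"approved": "true", "prebaked": "true",
              "LM_app": "santaba", "packer_build": "1520000000"}},
    {"id": "ami-0000000002",  # newer but not approved (made up)
     "tags": {"approved": "false", "prebaked": "true",
              "LM_app": "santaba", "packer_build": "1530000000"}},
]

newest = latest_approved_image(images, "santaba")
print(newest["id"] if newest else "no approved image found")
```

Filtering on `approved` before comparing build numbers means a newer but unvetted template is never picked up by accident.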

»Orchestrated Buildout

In the past, if we wanted to replicate how an existing server was built, we would have to look up the documentation (cross your fingers) and then assess whether any manual changes were made (there may not even be a co-worker’s .bash_history to comb through) or assume black magic. This led to inconsistencies between environments that should be exactly the same. The one-off, manual approach was also a big time sink for whoever had to reverse engineer a pod in order to build a new one. Often a variety of different provisioning techniques was needed as well.

As we moved all of our production infrastructure into Terraform, we realized we could use the same code for Disaster Recovery. We would have the ability to test our DR plan in a repeatable manner without a lot of stress. Terraform can tear down infrastructure just as easily as it can build it. It takes over 120 different resources to create a pod, which is a lot to remember to remove.

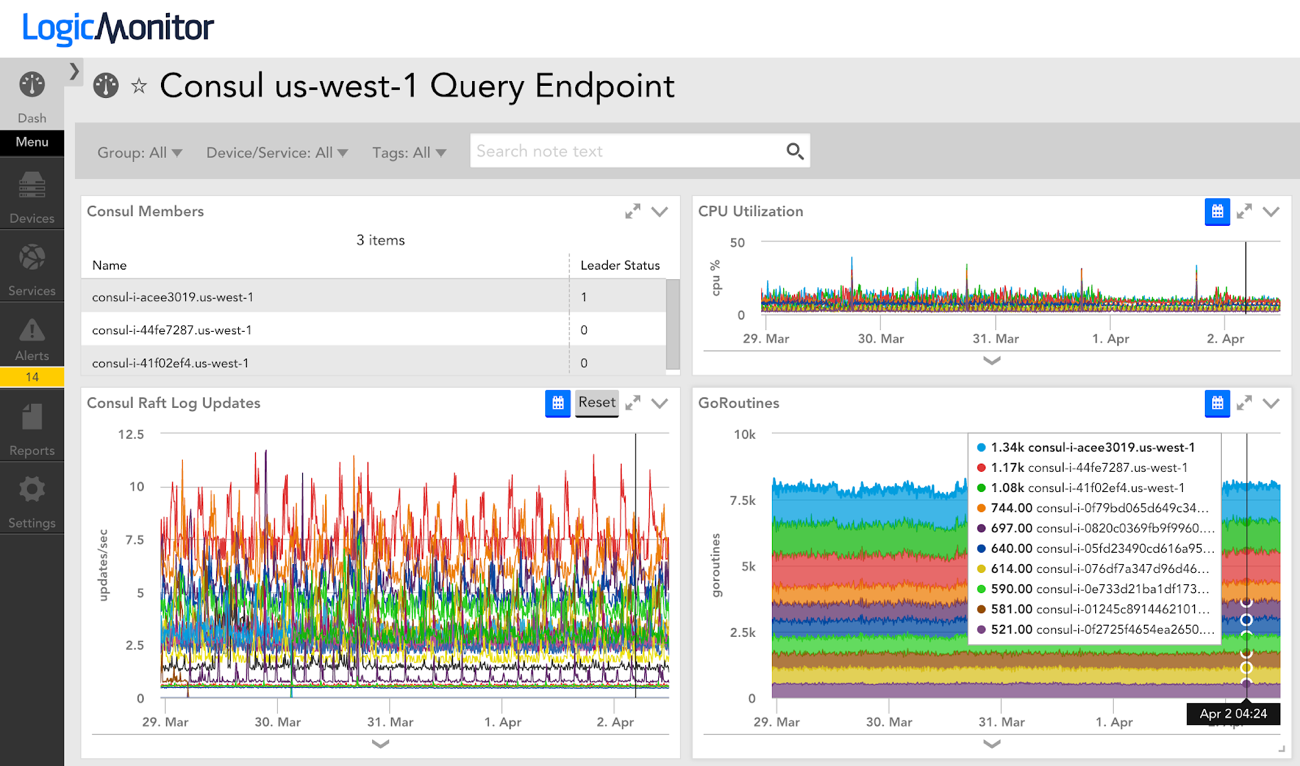

Some of the gaps requiring manual intervention in our DR process were resolved by developing our own Terraform Providers. We created two separate providers, each to achieve a unique task. Since our team uses LogicMonitor as the source of truth for outages, we needed a way to load resources created by Terraform into our LogicMonitor account. We could then monitor those resources on demand and know when they required service. If Consul health checks were failing (more about this in the next section) we wanted to be alerted right away.

The next gap to fill was application configuration management. We developed an internal-use Terraform provider to automatically populate configuration information from the resource outputs. Currently, each pod requires 130 individual configuration items, which leaves a lot of room for mistakes. Using a Terraform provider not only saved more time but also eliminated the need to copy and paste, minimizing errors (ever try loading a URL with the 'om' missing from ‘.com’?).
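The internal provider itself is not shown in this post, so the following is only a rough Python sketch of the underlying idea: read values from Terraform outputs instead of copying them by hand. It assumes the JSON shape printed by `terraform output -json` (each output wrapped in an object with a `value` field). The output names, config keys, hostnames, and the `config_items_from_outputs` helper are all hypothetical.

```python
import json


def config_items_from_outputs(outputs_json: str, mapping: dict[str, str]) -> dict[str, str]:
    """Translate Terraform outputs into application config items.

    `mapping` goes from Terraform output name to application config key.
    A missing output raises immediately instead of producing a partial config.
    """
    outputs = json.loads(outputs_json)
    items = {}
    for output_name, config_key in mapping.items():
        if output_name not in outputs:
            raise KeyError(f"Terraform output {output_name!r} is missing")
        items[config_key] = str(outputs[output_name]["value"])
    return items


# Hypothetical sample in the shape of `terraform output -json`.
sample_outputs = json.dumps({
    "db_endpoint": {"sensitive": False, "type": "string",
                    "value": "db.pod1.example.com"},
    "portal_url": {"sensitive": False, "type": "string",
                   "value": "https://pod1.example.com"},
})

# Hypothetical mapping from output names to config keys.
mapping = {"db_endpoint": "jdbc.host", "portal_url": "portal.url"}

for key, value in config_items_from_outputs(sample_outputs, mapping).items():
    print(f"{key}={value}")
```

Failing loudly on a missing output is a deliberate choice here: with 130 items per pod, a silent gap is harder to find than an early error.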

»Where is That Thing Running Again?

Registering services in Consul provided us with several benefits. We could already automatically determine the health of services with monitoring but we could not apply that information in a meaningful way to components in our pods. Using the HTTP and DNS interfaces in Consul meant less manual or static configuration in order for services to know where other services are. So far we have 17 different services registered in each pod. We even register our Zookeeper clusters, including tagging the leader, so that we have a consistent (and convenient) way to know if and where services are up. For example, a Zookeeper client can use zookeeper.service.consul as the FQDN via Consul DNS.

Here is an example Consul health check to determine if a given Zookeeper member is the current leader in the cluster:

    {
      "name": "zookeeper",
      "id": "zookeeper-leader",
      "tags": ["leader"],
      "port": 2181,
      "checks": [
        {
          "script": "timeout -s KILL 10s echo stat | nc 127.0.0.1 2181 | grep leader; if [ $? -eq 0 ]; then exit 0; else exit 2; fi",
          "interval": "30s"
        }
      ]
    }
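The shell one-liner in the check above packs several steps into one string. As a hedged illustration, here is the same logic spelled out in Python: send ZooKeeper's `stat` four-letter command, look for the `Mode:` line, and return 0 (passing) for a leader or 2 (critical) otherwise. The function names are hypothetical. Unlike the `grep leader` in the original, this sketch only inspects the `Mode:` line, which is a slight tightening and an assumption about what the check intends.

```python
import socket


def zk_mode(stat_output: str) -> str:
    """Extract the value of the 'Mode:' line from ZooKeeper `stat` output."""
    for line in stat_output.splitlines():
        if line.startswith("Mode:"):
            return line.split(":", 1)[1].strip()
    return "unknown"


def check_exit_code(stat_output: str) -> int:
    """Exit code for a script check: 0 if leader, 2 (critical) otherwise."""
    return 0 if zk_mode(stat_output) == "leader" else 2


def fetch_stat(host: str = "127.0.0.1", port: int = 2181,
               timeout: float = 10.0) -> str:
    """Send `stat` to ZooKeeper and return the raw reply as text."""
    with socket.create_connection((host, port), timeout=timeout) as conn:
        conn.sendall(b"stat")
        chunks = []
        while True:
            data = conn.recv(4096)
            if not data:  # server closes the connection after replying
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")


def main() -> int:
    """Entry point a check script could hand to sys.exit()."""
    try:
        return check_exit_code(fetch_stat())
    except OSError:
        # Connection refused or timed out: report critical.
        return 2
```

Here the 10-second timeout covers the whole network exchange, whereas in the shell version `timeout` wraps only the `echo`.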

You can get creative with what you consider a service, such as an instance of a customer account. Our proxies and load balancers use consul-template to automatically write their routing configuration files. This has reduced the chance for error and provided a scalable model so we can continue to manage more complex systems. The proxies literally configure themselves, managing hundreds or thousands of backends without ever needing human intervention. And of course the proxy itself is registered in Consul as well.
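To make the self-configuring idea concrete, here is a small Python sketch of the kind of transformation involved: take the list of healthy instances Consul reports for a service and turn it into backend lines for a proxy. This is not consul-template's template language and not the actual proxy configuration. The input follows the shape of Consul's `/v1/health/service/<name>?passing` response, while the `server ...` line format, the instance names, and the addresses are hypothetical.

```python
def render_backends(entries: list[dict]) -> str:
    """Render one backend line per healthy service instance."""
    lines = []
    for entry in entries:
        service = entry["Service"]
        # Consul leaves Service.Address empty when the service simply
        # uses the address of the node it runs on.
        address = service.get("Address") or entry["Node"]["Address"]
        lines.append(f"server {service['ID']} {address}:{service['Port']}")
    # Sort so the rendered file is stable between runs.
    return "\n".join(sorted(lines))


# Hypothetical payload in the shape of a Consul health API response.
healthy = [
    {"Node": {"Node": "node-b", "Address": "10.0.1.12"},
     "Service": {"ID": "web-2", "Service": "web", "Address": "", "Port": 8080}},
    {"Node": {"Node": "node-a", "Address": "10.0.0.11"},
     "Service": {"ID": "web-1", "Service": "web",
                 "Address": "10.0.0.11", "Port": 8080}},
]

print(render_backends(healthy))
```

Sorting the output keeps the file byte-for-byte identical when nothing has changed, which helps avoid needless proxy reloads.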

»When Disaster Strikes

The day has come. Your datacenter lost power. It’s 5am and you’ve been up half the night with your toddler. How much thinking do you want to have to do? How much thinking will you even be capable of? Likely very little.

Cue Terraform plan; Terraform apply. Copy the project file and repeat (and hope your VPN works, and that you have up-to-date server templates in the target regions along with enough instance limits...).
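For a sense of how little thinking that sequence needs once it is scripted, here is a minimal Python sketch. It is an assumption-laden illustration rather than the actual runbook: the directory layout (`pods/pod1`), the plan file name, and the helper names are hypothetical. Only standard Terraform CLI options are used (`-input=false`, `-out`). The default is a dry run that lists the commands without executing anything.

```python
import subprocess
from pathlib import Path


def terraform_commands(plan_file: str = "dr.tfplan") -> list[list[str]]:
    """The init / plan / apply sequence for one pod, non-interactive."""
    return [
        ["terraform", "init", "-input=false"],
        ["terraform", "plan", "-input=false", f"-out={plan_file}"],
        # Applying a saved plan file runs exactly what was planned.
        ["terraform", "apply", "-input=false", plan_file],
    ]


def run_pod(pod_dir: Path, dry_run: bool = True) -> list[str]:
    """Run (or, in a dry run, just list) the commands for one pod directory."""
    executed = []
    for cmd in terraform_commands():
        executed.append(" ".join(cmd))
        if not dry_run:
            # check=True stops the sequence at the first failing step.
            subprocess.run(cmd, cwd=pod_dir, check=True)
    return executed


# Hypothetical pod directory; dry run only prints the commands.
for line in run_pod(Path("pods/pod1")):
    print(line)
```

Saving the plan with `-out` and applying that file keeps the apply step from drifting away from what was reviewed, which matters most when the reviewer is tired.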

Together, HashiCorp Packer, Terraform, and Consul have eliminated the need for manual configuration and provided a resilient DR process. Using the LogicMonitor Provider also enables us to rapidly validate the results of our tests as we iterate through improvements. Exercising our disaster recovery muscles has turned processes we used to fear into near-thoughtless tasks.

If you are interested in learning more about these products, please visit the HashiCorp Product pages for [Terraform](https://www.hashicorp.com/terraform) and [Consul](https://www.hashicorp.com/consul).
