Apache Camel really is the Swiss Army Knife of application integration for any Java (or Spring) developer. With 400+ components/adapters — all open source and easily extensible — Camel fits almost any integration use case. But there’s a catch: How do you keep track of your Camel services running throughout a deployment, and how can they communicate with one another in a multi-cloud or hybrid cloud environment?

In this post, I’ll show you how to use Camel with HashiCorp Consul to address this challenge. In the walkthrough, we’ll use the Service Call Enterprise Integration Pattern (EIP) and Spring Boot.

»Background

Kubernetes is a commonly suggested solution for orchestrating containers and service registration, but it can be complex, cumbersome, and a pain to deploy and manage. HashiCorp Consul presents a much simpler solution.

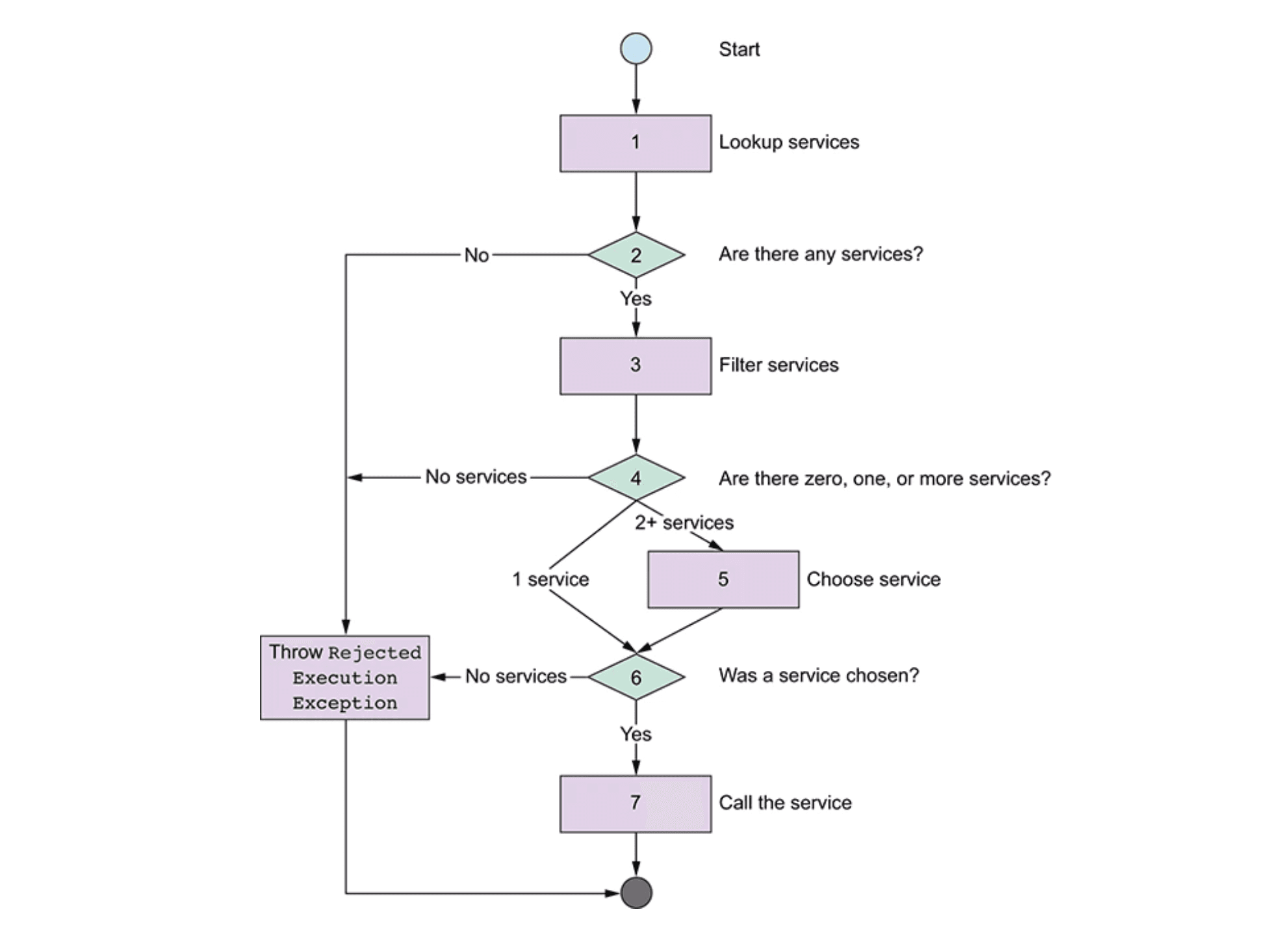

All we really need is service discovery, service health checks, and possibly a service mesh if we’re doing microservices. In Apache Camel, we tackle this with an Enterprise Integration Pattern called Service Call. Basically, it’s a simple load-balancing flow that lets a client call any available instance of a service by name: rather than hard-coding addresses, Camel communicates with an external service registry to figure out which instances are available. A service registry maintains a catalog of healthy, available services that external components can query.
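To make the flow concrete, here is a minimal, dependency-free Java sketch of the idea behind the pattern, assuming a simple round-robin choice between instances. The caller asks for a service by name, and a registry lookup turns that name into one concrete host:port. This is not how Camel implements the Service Call EIP internally: the class and method names (ServiceCallSketch, register, resolve) are hypothetical, and the in-memory map merely stands in for a real registry such as Consul.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.atomic.AtomicInteger;

// Conceptual sketch of the Service Call idea: callers ask for a service by *name*,
// and this class resolves the name to one concrete "host:port" using round-robin.
// Hypothetical names throughout; this is NOT Camel's implementation.
public class ServiceCallSketch {

    // Stand-in for an external registry such as Consul: service name -> known instances.
    private final Map<String, List<String>> registry = new HashMap<>();

    // One round-robin counter per service name.
    private final Map<String, AtomicInteger> counters = new HashMap<>();

    public void register(String serviceName, List<String> instances) {
        registry.put(serviceName, List.copyOf(instances));
        counters.putIfAbsent(serviceName, new AtomicInteger());
    }

    // Resolves a service name to a single instance, cycling through the instances on each call.
    public String resolve(String serviceName) {
        List<String> instances = registry.get(serviceName);
        if (instances == null || instances.isEmpty()) {
            throw new IllegalStateException("No instances registered for " + serviceName);
        }
        int next = counters.get(serviceName).getAndIncrement();
        return instances.get(Math.floorMod(next, instances.size()));
    }

    public static void main(String[] args) {
        ServiceCallSketch serviceCall = new ServiceCallSketch();
        serviceCall.register("service-1",
                List.of("localhost:9011", "localhost:9012", "localhost:9013"));
        for (int i = 0; i < 4; i++) {
            System.out.println("service-1 -> " + serviceCall.resolve("service-1"));
        }
    }
}
```

Running main prints localhost:9011, localhost:9012, and localhost:9013 in turn, then wraps around to localhost:9011. Keeping the lookup behind a service name is what lets the instance list change without touching the caller.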

Open source service registry options include HashiCorp Consul, etcd, ZooKeeper, and DNS. For this walkthrough, I’ve chosen HashiCorp Consul because of its wide adoption, maturity, community, and industry use cases.

»The Service Call EIP with HashiCorp Consul

To grab the code for this walkthrough, you can clone my repo: Load Balancing Apache Camel routes with Consul. Here’s a flowchart diagram of the Service Call Enterprise Integration Pattern illustrating the problem we’re trying to solve:

To execute this pattern, follow this procedure:

»Prerequisites

»Steps

1. Start up Consul’s Docker image on a development machine. We’ll refer to the Consul server as Badger:

```shell
docker pull consul

docker run -d -p 8500:8500 -p 8600:8600/udp --name=badger consul agent -server -ui -node=server-1 \
  -bootstrap-expect=1 -client=0.0.0.0
```

2. Start up the first Consul client. We’ll refer to the first Consul client as Fox:

```shell
docker run --name=fox -d -e CONSUL_BIND_INTERFACE=eth0 consul agent -node=client-1 -dev -join=172.17.0.2 -ui
```

3. Start up the second Consul client. We’ll refer to the second Consul client as Weasel:

```shell
docker run --name=weasel -d -e CONSUL_BIND_INTERFACE=eth0 consul agent -node=client-2 -dev -join=172.17.0.2 -ui
```

The steps above run Consul agents on Docker’s default bridge network. Fox and Weasel run in development (-dev) mode, which keeps all state in memory, and apart from the ports published for Badger (8500 for the HTTP API and web UI, 8600/udp for DNS), nothing is exposed on the host. This setup is useful for development but should not be used in production. Because Badger is running at the internal address 172.17.0.2, Fox and Weasel can join it with -join=172.17.0.2, giving us a three-node development cluster.

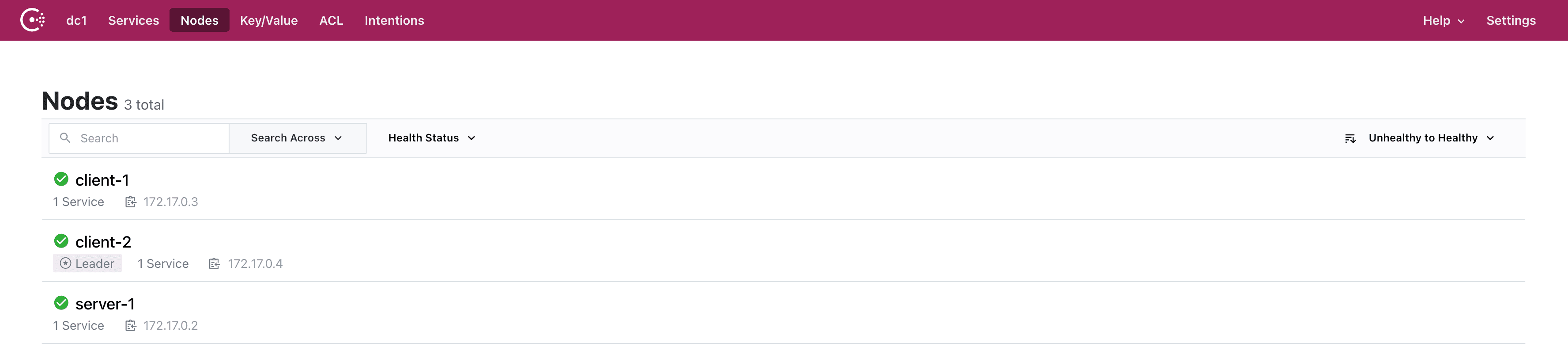

Now that we have Badger, Fox, and Weasel started, we can check their connectivity and health in the Consul web UI at http://localhost:8500/ui.

We now have three nodes that are alive and healthy: server-1, client-1, and client-2. Consul is ready to act as our service registry; all we need to do now is tell it which services to register and monitor.

Next, clone the example repository, copy its Consul service definitions to each client (Weasel and Fox), and reload each agent so it picks up the new configuration:

```shell
git clone https://github.com/sigreen/camel-spring-boot-consul
cd camel-spring-boot-consul/services/src/main/resources/consul

docker cp services.json weasel:/consul/config/services.json
docker exec weasel consul reload

docker cp services.json fox:/consul/config/services.json
docker exec fox consul reload
```

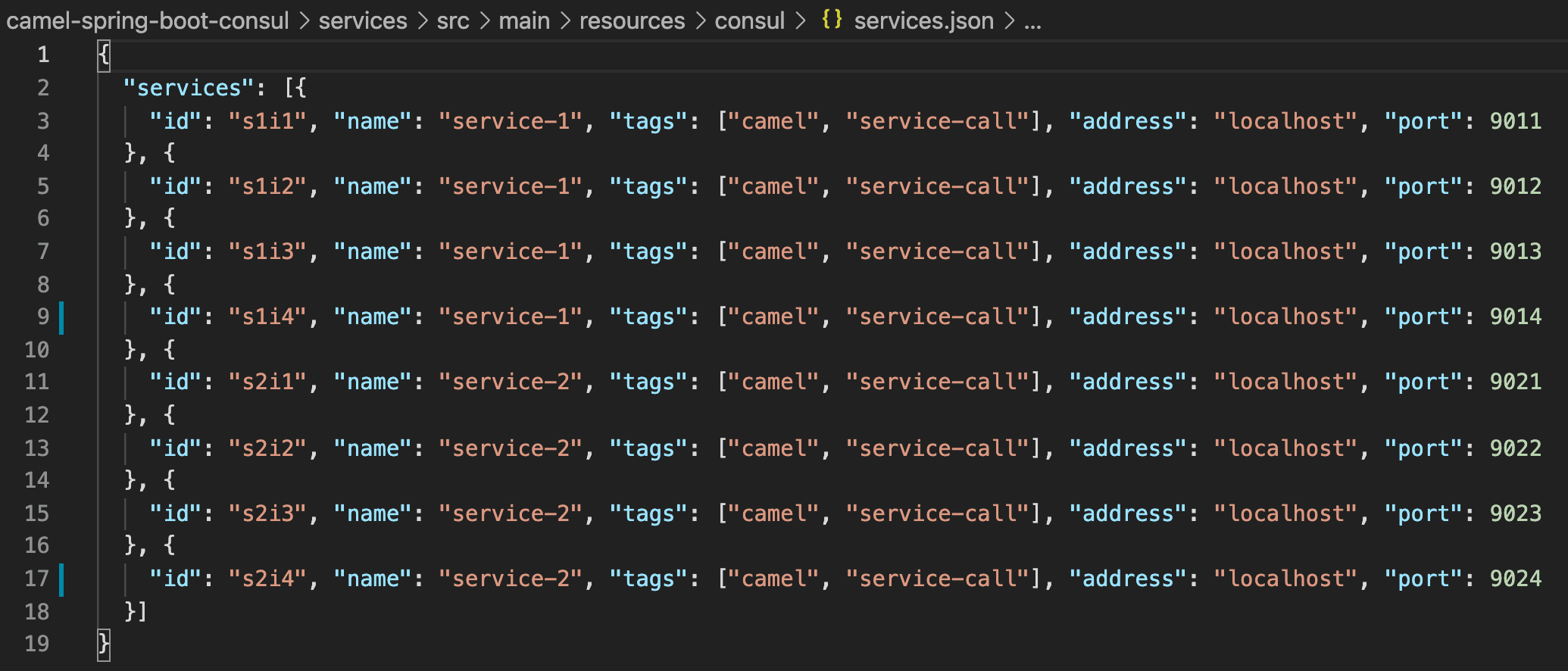

The Consul services.json file is illustrated below. Notice that it defines eight services: four for service-1 and four for service-2. Each has a unique identifier but, importantly, can be referenced by a common service name: service-1 or service-2. That way, Camel doesn’t need to track dynamic IP changes; it only cares about the service name, which stays the same.

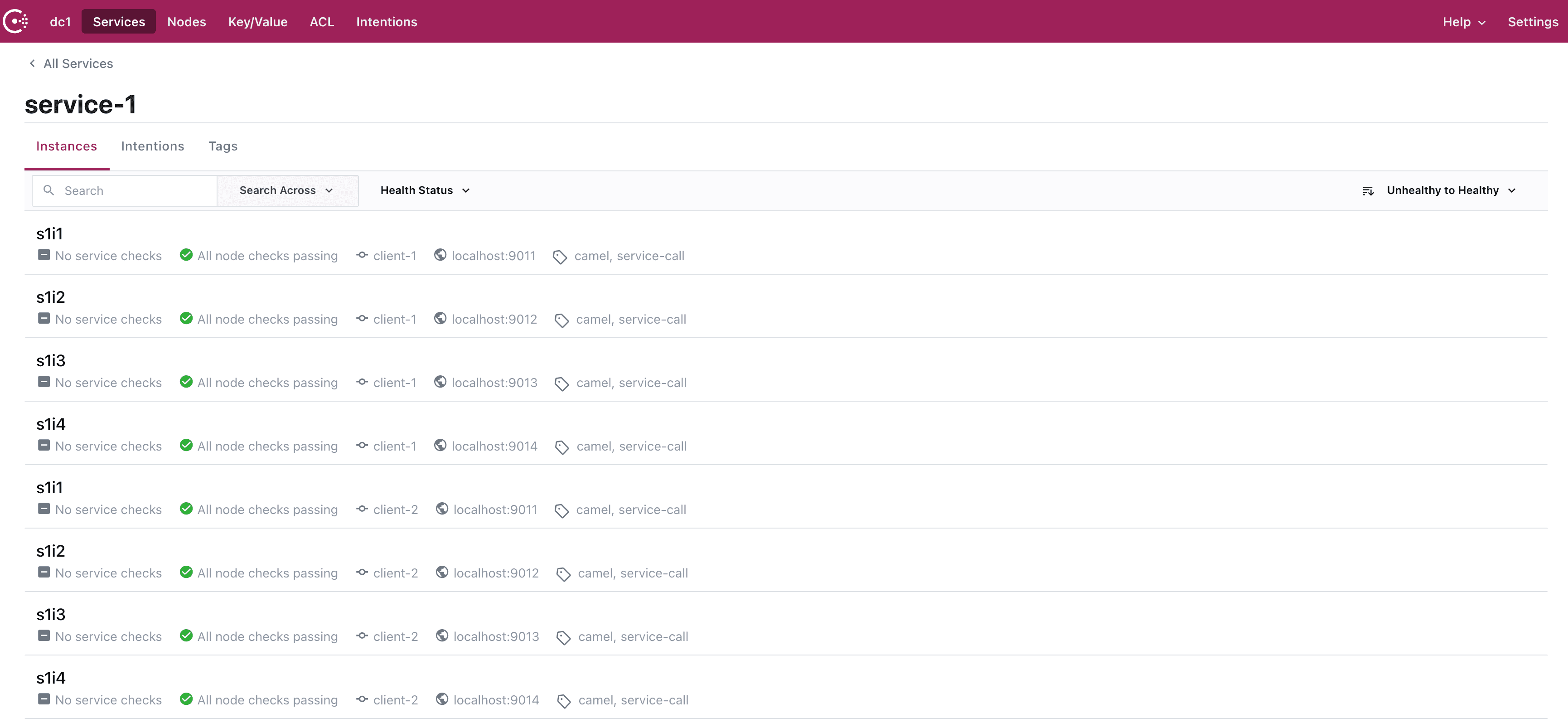
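The following Java sketch models the relationship the file expresses: several definitions, each with a unique id, collapsing into a handful of service names that callers use. The ids (service-1-1 and so on) and the localhost addresses are hypothetical assumptions; only the service names and the port ranges (9011-9014 and 9021-9024) come from this walkthrough.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical model of the eight Consul service definitions: each has a unique id,
// but all instances of the same backend share one service name.
public class ServiceDefinitions {

    static final class Definition {
        final String id;   // unique per instance (hypothetical naming scheme)
        final String name; // shared logical name that callers refer to
        final int port;

        Definition(String id, String name, int port) {
            this.id = id;
            this.name = name;
            this.port = port;
        }
    }

    // Builds four definitions per service, on ports 9011-9014 and 9021-9024.
    static List<Definition> sampleDefinitions() {
        List<Definition> defs = new ArrayList<>();
        for (int i = 1; i <= 4; i++) {
            defs.add(new Definition("service-1-" + i, "service-1", 9010 + i));
            defs.add(new Definition("service-2-" + i, "service-2", 9020 + i));
        }
        return defs;
    }

    // Groups instance addresses by service name (assumed to be localhost, as in this example).
    static Map<String, List<String>> byName(List<Definition> defs) {
        Map<String, List<String>> grouped = new TreeMap<>();
        for (Definition d : defs) {
            grouped.computeIfAbsent(d.name, k -> new ArrayList<>()).add("localhost:" + d.port);
        }
        return grouped;
    }

    public static void main(String[] args) {
        byName(sampleDefinitions())
                .forEach((name, addresses) -> System.out.println(name + " -> " + addresses));
    }
}
```

Running main should print each service name followed by its four addresses, which is the view a caller resolving service-1 or service-2 works with.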

Go back to the Consul web UI, and you’ll see that our eight Camel HTTP services are now registered with Consul.
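You can also confirm the registrations outside the UI. The Java sketch below queries the health endpoint of Consul’s HTTP API (GET /v1/health/service/ followed by the service name, with the passing parameter to keep only instances whose checks pass) using the JDK’s built-in HTTP client. It assumes Badger’s HTTP API is reachable at http://localhost:8500, as published in step 1, and returns the raw JSON rather than parsing it, to avoid third-party dependencies. In the application itself, Camel’s Consul service discovery performs this kind of lookup for you; ConsulHealthQuery and its methods are hypothetical names.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

// Sketch: list the healthy instances of a service via Consul's HTTP API.
// Assumption: the Consul agent from step 1 (Badger) is reachable at http://localhost:8500.
public class ConsulHealthQuery {

    // Builds GET /v1/health/service/<name>?passing; "passing" keeps only instances whose checks pass.
    // Assumes the service name is URL-safe, as service-1 and service-2 are.
    static URI healthyInstancesUri(String consulBaseUrl, String serviceName) {
        return URI.create(consulBaseUrl + "/v1/health/service/" + serviceName + "?passing");
    }

    // Sends the request and returns Consul's raw JSON array (no parsing, no extra libraries).
    static String fetchHealthyInstances(HttpClient client, String consulBaseUrl, String serviceName)
            throws IOException, InterruptedException {
        HttpRequest request = HttpRequest.newBuilder(healthyInstancesUri(consulBaseUrl, serviceName))
                .timeout(Duration.ofSeconds(5))
                .GET()
                .build();
        HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
        if (response.statusCode() != 200) {
            throw new IllegalStateException("Consul returned HTTP " + response.statusCode());
        }
        return response.body();
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        HttpClient client = HttpClient.newHttpClient();
        System.out.println(fetchHealthyInstances(client, "http://localhost:8500", "service-1"));
    }
}
```

Each entry in the returned array pairs a node with one service instance and its checks, so the output shows where Consul believes service-1 is currently running.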

Now all we need to do is configure our Camel consumer. Because we’re using Spring Boot, we can define allow/deny lists of services that determine which instances get called. And with Consul as the service registry, we don’t need to worry about dynamic IPs: we refer to each service by its name (service-1 or service-2), and in this local example the instances themselves all run on localhost. This configuration lives in the Spring Boot application.properties file:

```properties
# Configure service filter
camel.cloud.service-filter.blacklist[service-1] = localhost:9012

# Configure additional services
camel.cloud.service-discovery.services[service-2] = localhost:9021,localhost:9022,localhost:9023
```
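Conceptually, the two properties above narrow and extend the set of candidates Camel can call. The plain-Java sketch below assumes Consul returns all four registered service-1 instances, removes the blacklisted localhost:9012 from them, and parses the comma-separated server list configured for service-2. It illustrates the effect of these settings only; it is not Camel’s service filter or static discovery code, and ServiceFilterSketch, applyBlacklist, and parseServers are hypothetical names.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.stream.Collectors;

// Illustration (not Camel code) of what the two properties express:
//  - a blacklist that removes localhost:9012 from the candidates for service-1, and
//  - a static, comma-separated server list for service-2.
public class ServiceFilterSketch {

    // Returns the candidates minus any address blacklisted for that service, preserving order.
    static List<String> applyBlacklist(String serviceName, List<String> candidates,
                                       Map<String, Set<String>> blacklist) {
        Set<String> denied = blacklist.getOrDefault(serviceName, Set.of());
        return candidates.stream()
                .filter(address -> !denied.contains(address))
                .collect(Collectors.toList());
    }

    // Splits a value like "localhost:9021,localhost:9022" into trimmed, non-empty addresses.
    static List<String> parseServers(String csv) {
        return Arrays.stream(csv.split(","))
                .map(String::trim)
                .filter(s -> !s.isEmpty())
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        Map<String, Set<String>> blacklist = Map.of("service-1", Set.of("localhost:9012"));
        // Assumption: these are the service-1 instances Consul reports.
        List<String> discovered = List.of(
                "localhost:9011", "localhost:9012", "localhost:9013", "localhost:9014");
        System.out.println("service-1 candidates: " + applyBlacklist("service-1", discovered, blacklist));
        System.out.println("service-2 servers: "
                + parseServers("localhost:9021,localhost:9022,localhost:9023"));
    }
}
```

Running main prints [localhost:9011, localhost:9013, localhost:9014] as the service-1 candidates, followed by the three configured service-2 addresses.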

Let’s fire up our two backend services (service-1 and service-2) together with our Camel consumer. You’ll need four terminal sessions: one each for service-1, service-2, and the consumer, plus one for calling the consumer. You can use either a generic command-line interface (CLI) or an IDE like VS Code to run the following commands.

In the first terminal session:

```shell
cd camel-spring-boot-consul/services
mvn spring-boot:run -Dspring-boot.run.profiles=service-1
```

In the second terminal session:

```shell
cd camel-spring-boot-consul/services
mvn spring-boot:run -Dspring-boot.run.profiles=service-2
```

In the third terminal session:

```shell
cd camel-spring-boot-consul/consumer
mvn spring-boot:run
```

And finally, now that we have our services and consumer running, we can use curl to call our Camel consumer in the fourth terminal session:

```text
curl localhost:8080/camel/serviceCall/service1
Hi!, I'm service-1 on camel-1/route1

curl localhost:8080/camel/serviceCall/service2
Hi!, I'm service-1 on camel-1/route2
```

There is a hidden gotcha here, though. Did you notice that some calls fail with the following error?

```text
curl localhost:8080/camel/serviceCall/service2
java.net.ConnectException: Connection refused
    at java.base/sun.nio.ch.Net.pollConnect(Native Method)
    at java.base/sun.nio.ch.Net.pollConnectNow(Net.java:660)
    at java.base/sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:875)
    at org.xnio.nio.WorkerThread$ConnectHandle.handleReady(WorkerThread.java:327)
    at org.xnio.nio.WorkerThread.run(WorkerThread.java:591)
```

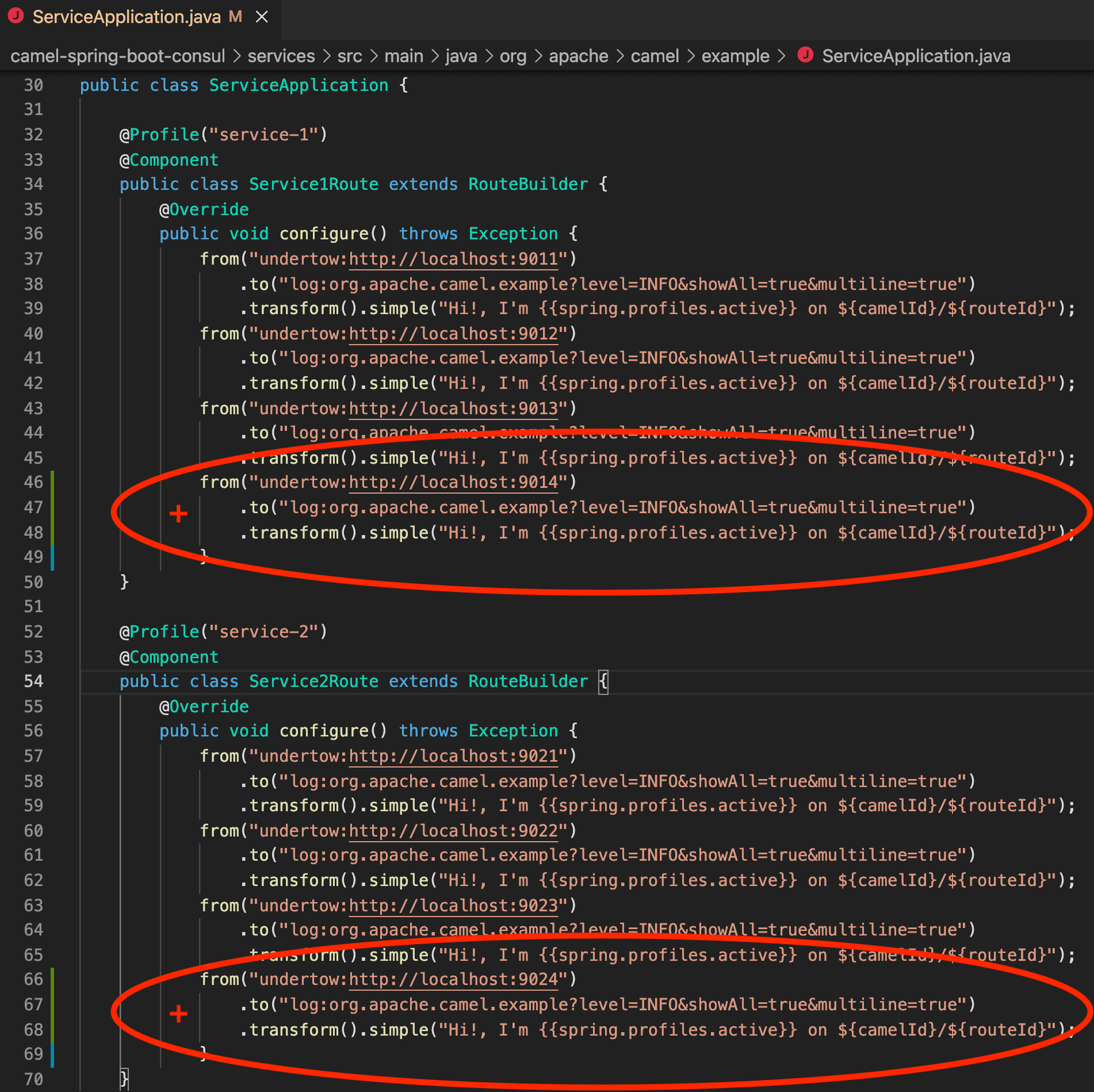

We’re getting this error on purpose, to simulate service-1/route4 and service-2/route4 being unavailable. Four services are registered in Consul for service-1 (ports 9011-9014) and four for service-2 (ports 9021-9024), but only six are implemented in Camel (service-1: 9011-9013; service-2: 9021-9023). Because those two services were left out (or are unavailable), Camel throws a Connection refused error whenever the load balancer picks one of them. To fix this, we simply add those services to our backend implementation.
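The intermittent failure follows from simple arithmetic. The Java sketch below assumes a strict round-robin choice over the four service-2 instances registered in Consul, of which only three (9021-9023) are running; every pick that lands on the missing instance stands in for one Connection refused error. RoundRobinFailureSimulation and countFailures are hypothetical names, and Camel may be configured with a different selection strategy.

```java
import java.util.List;
import java.util.Set;

// Simulation (not Camel code): round-robin over every instance registered in Consul,
// where one registered instance is not actually running. Each pick of an instance
// that isn't running stands in for a "Connection refused" error.
public class RoundRobinFailureSimulation {

    // Counts how many of `calls` round-robin picks land on an instance that isn't running.
    static int countFailures(List<String> registered, Set<String> running, int calls) {
        int failures = 0;
        for (int i = 0; i < calls; i++) {
            String target = registered.get(i % registered.size());
            if (!running.contains(target)) {
                failures++;
            }
        }
        return failures;
    }

    public static void main(String[] args) {
        List<String> registered = List.of(
                "localhost:9021", "localhost:9022", "localhost:9023", "localhost:9024");
        Set<String> running = Set.of("localhost:9021", "localhost:9022", "localhost:9023");
        int calls = 8;
        System.out.println(countFailures(registered, running, calls) + " of " + calls + " calls failed");
    }
}
```

Running main prints "2 of 8 calls failed": with one of four registered instances down, one call in four fails. In this simulation, keeping the unreachable instance out of the candidate list, for example with the health checks discussed under Further Reading, brings the failure count to zero.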

»Further Reading

Hopefully you found this example useful. For more information on this example, or to try it out yourself, visit my GitHub repo.

For another perspective, take a look at Luca Burgazzoli’s blog: A camel running in the clouds, part 2. Luca also takes things a step further and adds health checking to the mix in part 3 of his blog. With health checks, it’s easy to see which Camel endpoints are healthy and alive in Consul, adding to the service registry functionality of Consul.

Lastly, to learn more about HashiCorp Consul, try one of our hands-on exercises. Consul can be deployed to a large number of platforms, including Kubernetes, Amazon Web Services, Microsoft Azure, Google Cloud, or on-premises.