Throughout this blog series, we have explored Microsoft Azure’s managed identity feature and looked at how Packer, Terraform, and Vault work in harmony with it. In this blog post, we’ll discuss how Consul and Nomad can make use of Azure managed identities.

HashiCorp Consul offers a service mesh to facilitate service-to-service communication. A service mesh primarily addresses problems that arise in distributed software architectures such as microservices. A microservices architecture decouples application components into individually deployable units, often called services. You can package services as containers, binaries, or other executables.

Once packaged, you need an orchestrator to schedule and run these services. Orchestrators such as Kubernetes and Nomad place your workloads on infrastructure and run them for you. As orchestrator deployments scale and grow in complexity, you will likely end up with clusters in multiple datacenters, spread across regions and networks.

»Multi-Datacenter Challenges

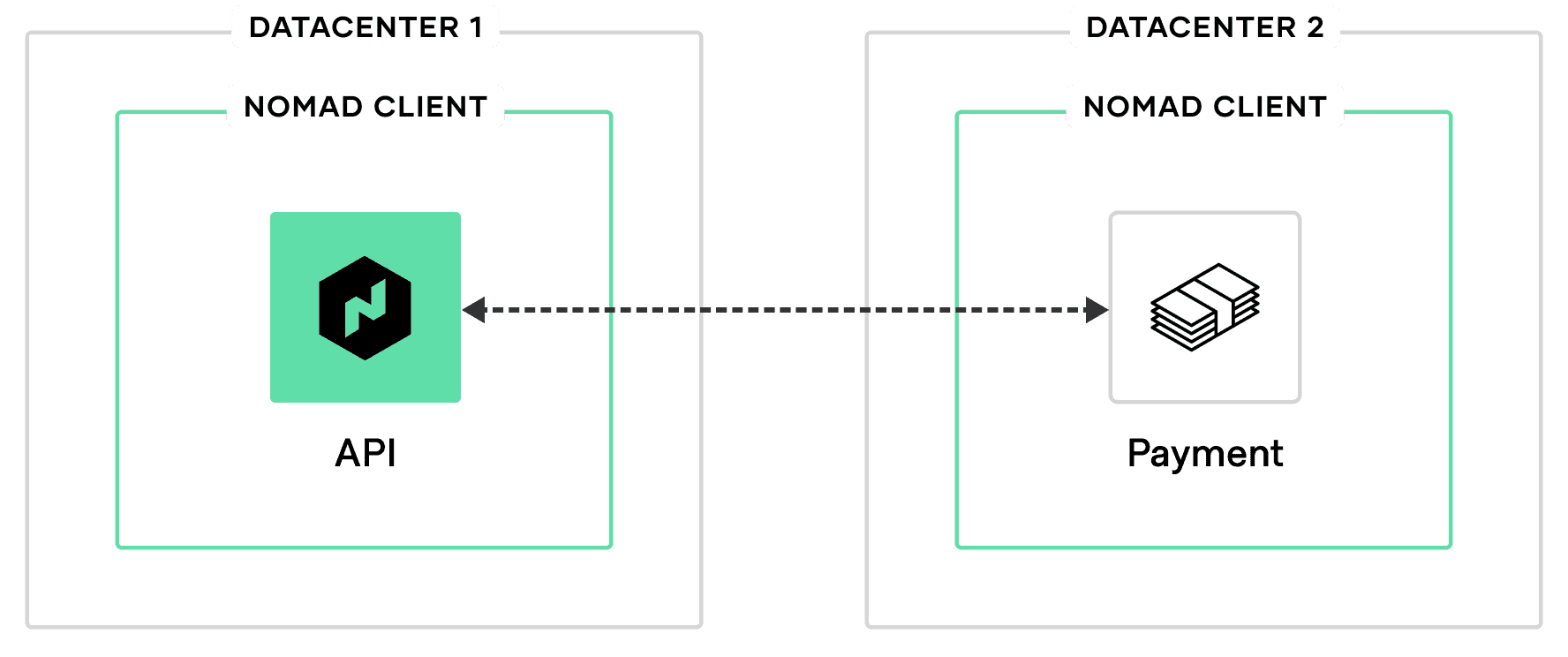

Services often need to communicate with each other. For example, an API service may need to call a payment service, so the API service must be able to reach the payment service over the network. If the API service is scheduled in one datacenter and the payment service in another, each service must know how to reach the other across different networks.

The short lifecycle of containers further complicates service-to-service communication across regions. When a container stops, the orchestrator reschedules the workload, possibly with a different IP address and port. Each time the workload is rescheduled, you must update the connectivity details in dependent services, firewalls, and other network resources.

A service mesh operates at the infrastructure layer to reduce the complexity of network automation for services. Services register with Consul’s control plane, which becomes the source of truth for up-to-date connectivity details for each service. With this information, Consul can broker communication between services.
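To make the registration step concrete, here is a minimal sketch of a Consul service definition for the API service from the earlier example. The service names, ports, and the dc2 datacenter are hypothetical, and pointing an upstream at another datacenter assumes the datacenters are already federated and connected (for example through mesh gateways), which this post does not cover. The payment service would register itself in the same way with its own agent.

```hcl
# api.hcl: hypothetical service definition loaded by the Consul agent
# that runs alongside the API service.
service {
  name = "api"
  port = 9090

  connect {
    # Register a sidecar proxy for this service with Consul.
    sidecar_service {
      proxy {
        upstreams = [
          {
            # The API service calls localhost:9191; the proxy forwards
            # the traffic to wherever "payment" is currently registered.
            destination_name = "payment"
            local_bind_port  = 9191
            # Assumption: "dc2" is the federated datacenter where the
            # payment service runs.
            datacenter = "dc2"
          }
        ]
      }
    }
  }
}
```

Because the API service only ever talks to its local proxy port, rescheduling the payment service onto a new IP address and port does not require reconfiguring the API service; Consul updates the proxy with the new location.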

Because Consul brokers communication between services, its availability directly affects application availability and uptime, so Consul itself must be highly available. To achieve this, Consul servers run as a cluster, typically of three or five nodes and at most seven, so that the cluster keeps working when individual nodes fail.
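Consul servers replicate their state using the Raft consensus protocol, which needs a quorum (a majority) of servers to be available. The standard majority arithmetic shows why odd cluster sizes are used, where $n$ is the number of servers and $f$ the number of server failures the cluster can tolerate:

$$
\text{quorum}(n) = \left\lfloor \frac{n}{2} \right\rfloor + 1, \qquad f(n) = n - \text{quorum}(n) = \left\lfloor \frac{n-1}{2} \right\rfloor
$$

$$
f(3) = 1, \qquad f(4) = 1, \qquad f(5) = 2, \qquad f(7) = 3
$$

Adding a fourth server raises the quorum from two to three without improving fault tolerance, so even-sized clusters add cost without adding resilience.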

»Cloud Auto-Join

The Consul configuration file can contain a retry_join parameter, in which you list the IP addresses or DNS names of other nodes in the cluster.

```hcl
# Statically list the addresses of other Consul servers to join.
retry_join = [
  "172.16.0.11",
  "172.16.0.12",
  "172.16.0.13"
]
```

Rather than statically defining the IP addresses or DNS names of other nodes, you can use a Consul feature called cloud auto-join to discover them. On Azure, cloud auto-join reads the tags on network interface cards (NICs) and automatically joins the instances whose NICs carry the specified tag name and value to the cluster.

Without a managed identity, Consul nodes in Azure need service principal credentials to authenticate against Azure and read the NIC tags. As with Vault in the previous blog post, placing these credentials in the configuration risks exposing them. Consul can instead use a managed identity to authenticate against Azure and read the tags, which eliminates the need for hard-coded service principal credentials.
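For comparison, a retry_join entry that authenticates with a service principal has to carry the credentials itself. This sketch follows the parameter names used by Consul's Azure cloud auto-join (tenant_id, client_id, secret_access_key); all values are elided placeholders. Anyone who can read this configuration file can also read the client secret.

```hcl
# Service principal authentication: the client secret is stored in
# plain text in the agent configuration. Values are placeholders.
retry_join = [
  "provider=azure tag_name=... tag_value=... tenant_id=... client_id=... subscription_id=... secret_access_key=..."
]
```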

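When you use a managed identity, the retry_join string needs only the Azure provider, the NIC tag name and value, and the subscription ID. You leave out the tenant ID, client ID, and secret key, and Consul authenticates with the virtual machine’s managed identity instead.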

```json
{
  "retry_join": [
    "provider=azure tag_name=... tag_value=... subscription_id=..."
  ]
}
```

Alternatively, you can omit subscription_id from the string and set the ARM_SUBSCRIPTION_ID environment variable for the Consul agent.
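Putting these pieces together, a Consul server configuration in HCL that relies on the VM's managed identity might look like the following minimal sketch. It assumes ARM_SUBSCRIPTION_ID is set in the environment of the Consul process and that the managed identity is allowed to list the network interfaces in the subscription. The tag name consul-cluster, the tag value prod, and the other settings are hypothetical examples.

```hcl
# consul.hcl: hypothetical Consul server configuration using cloud
# auto-join with an Azure managed identity.
datacenter       = "dc1"
data_dir         = "/opt/consul"
server           = true
bootstrap_expect = 3

# No tenant_id, client_id, or secret_access_key: with no explicit
# credentials, the Azure provider falls back to the VM's managed identity.
# subscription_id is omitted because ARM_SUBSCRIPTION_ID is assumed to be
# set in the Consul process environment.
retry_join = ["provider=azure tag_name=consul-cluster tag_value=prod"]
```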

Nomad supports cloud auto-join as well and can also take advantage of managed identities. You configure cloud auto-join in the server_join block of the Nomad configuration file, using the same retry_join string format as Consul.
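A minimal Nomad server sketch follows. It assumes the Nomad servers' NICs carry a hypothetical nomad-cluster tag with the value prod and that the VMs' managed identities can list the network interfaces in the subscription; subscription_id is left as a placeholder.

```hcl
# nomad.hcl: hypothetical Nomad server configuration.
data_dir = "/opt/nomad"

server {
  enabled          = true
  bootstrap_expect = 3

  server_join {
    # Same cloud auto-join syntax as Consul; no credentials are given,
    # so authentication relies on the VM's managed identity.
    retry_join = ["provider=azure tag_name=nomad-cluster tag_value=prod subscription_id=..."]
  }
}
```

Nomad clients can use the same server_join block inside their client block to discover the servers.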

»Conclusion

In this blog post, we explored how Consul and Nomad can use Azure managed identities to dynamically join a cluster. We also covered the fundamentals of service mesh and the problems it solves.

For more information on how to use Azure managed identities with Terraform and Packer, read the first post in this series. You can learn more about managed identities with Vault in the second part of this series.

If you have any questions, reach out to us on our forum.

_Read parts one and two to learn how managed identities can be used with Terraform, Packer, and Vault._