Consul: Service Mesh for Kubernetes and Beyond

This talk will introduce the new Kubernetes support in Consul and show how to enable seamless service connectivity between workloads inside and outside Kubernetes.

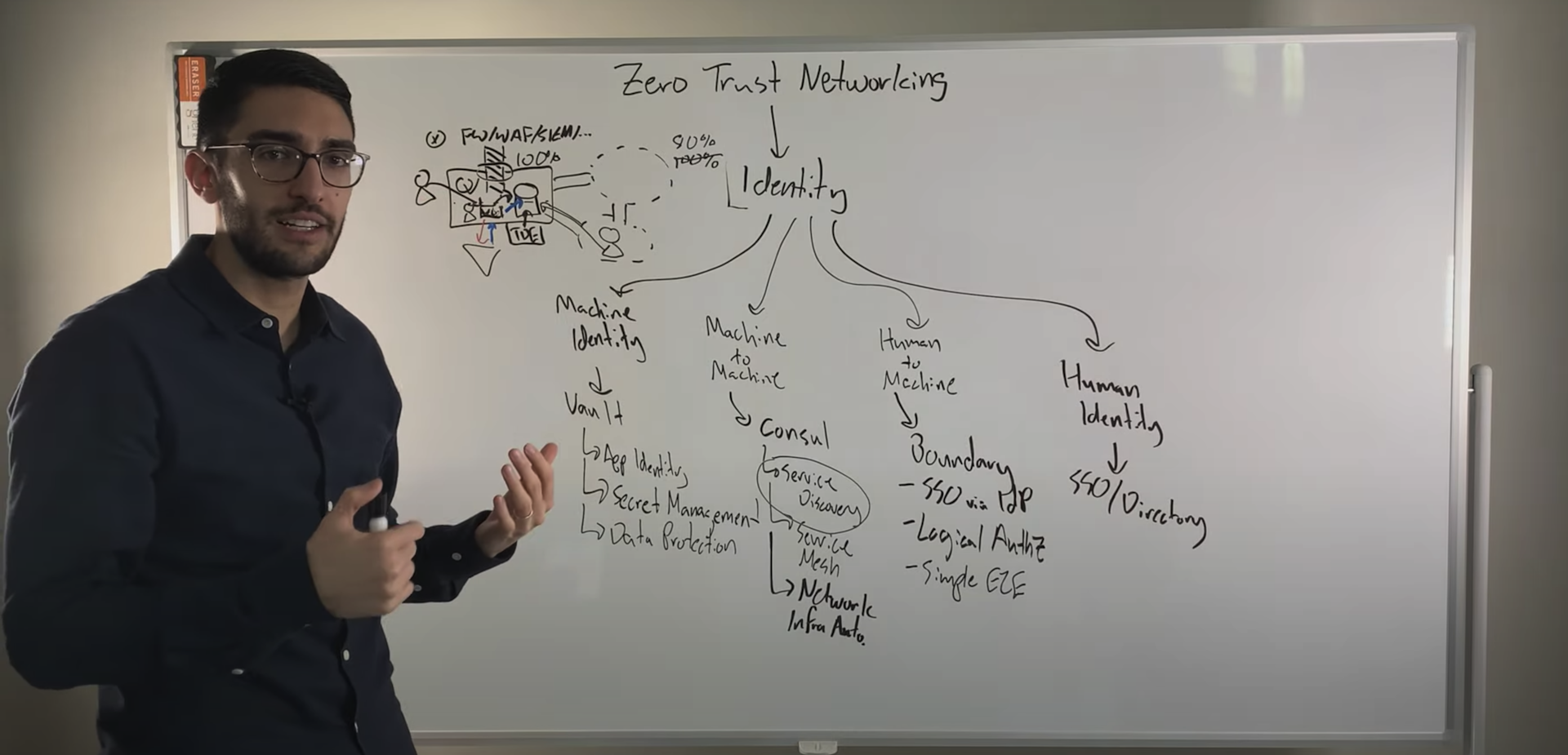

HashiCorp's Consul is now capable of providing the full control plane for a service mesh. Service meshes have become popular in the last couple of years because of how they simplify network topologies in a service-oriented architecture that's attempting to create a zero-trust network.

By providing service discovery, centralized service configuration, and (most importantly for the zero-trust network) service segmentation, Consul is able to provide an enterprise-scale service mesh when paired with a good data plane/sidecar proxy such as Envoy or HAProxy.

In this talk, Paul Banks, a software engineer at HashiCorp who builds Consul, will cover:

- An introduction to service meshes

- Integrating Consul with Kubernetes clusters

- Integrating Consul with legacy applications

Speakers

Paul Banks, Software Engineer, HashiCorp

Transcript

Good morning. I'm talking today about Consul, connecting your services together with Consul. I'm talking about doing that inside Kubernetes and outside.

It's going to be fun. Most of what I'm going to show you today is going to be a live demo, so let's see how that goes. It's going to involve both Kubernetes and cloud providers. Hopefully that's going to be good fun and going to go well, but just before I jump into that stuff, I just want to give a little overview of the problem space and what it is we're talking about here.

Mitchell has set the stage really well in the keynote talking about the problems of service mesh and what Connect was to solve, but I want to define what this new term "service mesh" means more crisply.

Service mesh defined

To take a step back, it's about infrastructure becoming more dynamic. For service communication, the big problem with that is, IP addresses are not static, long-lived things that you can insert into firewall rules, that you can hard-code DNS entries for.

You have these 3 problems that we talked about before in the keynote where, how do you discover where these more ephemeral services are running? How do you secure them when your traditional networking firewalls can't keep up with the dynamism you need, and how do you manage configuration amongst lots of distributed instances? How do you have them all see the same consistent state of the world for how they should be behaving?

All 3 of these things are a configuration problem. You have a source of truth about what should be running, where it should be running, what should be allowed to talk to what and how your application should behave. The challenge is: How do you have this update programmatically and how do you distribute those updates efficiently through a large cluster?

Spoiler alert: This is Consul's core feature set, so this is what we're going to be talking about this morning. We want to hone in a little bit more and define what this thing called service mesh is. Service mesh is one particular class of solution to this set of problems that we've just discussed at a high level. There are other solutions, and Consul itself supports a wider range of solutions than just this definition, but I want to hone in on what we mean when we talk about a service mesh. Specifically, we're talking about an infrastructure layer which sits above your network and below your application and it solves this connectivity problem at that level.

There are solutions like gRPC, Apache Thrift, many other frameworks for building services that solve some of the discovery, they solve some of the reliability and communicating and load balancing, and they do that in the application. You build it into your app, so that's an alternative solution, and on the other hand there are software-defined networking solutions which solve some parts of this problem space too, like securing different workloads right in your network.

The service mesh is an approach where you pull it out of both of those: You're agnostic to the network below you, so you're not concerned about how bytes or packets get from one host to another, but you're also outside of the application. The way that works is that you deploy a service mesh typically as a proxy; it's a separate process that's co-located alongside each application process.

These two can talk to each other over loopback interface which, as Mitchell mentioned, is generally very performant, but the benefit you get here is that all of that logic about how to discover services, how to route them, configure themselves, et cetera, is separate from your application and can be deployed as a separate unit. Typically you have a separate infrastructure team who are responsible for deploying your service mesh and they can deploy a new proxy and update that without having to coordinate with application teams to get a new build-out and all that sort of thing. That's the real benefit of this external proxy approach.

The proxy abstracts a lot of patterns around resilient communication between your services, so the obvious one is load balancing but also things like retries on error and circuit breakers when you do have errors to route around failure. These can all be handled in your proxy, and that's logic that you don't have to either code libraries for, for all of your service languages, or rely on something else to re-implement everywhere.
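To make the retry and circuit-breaker patterns concrete, here is a minimal Python sketch of the kind of logic a proxy takes off your hands. It is an illustration only, not how Envoy or Consul implement it; the class and function names, thresholds, and timings are all assumptions made up for this example.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker is open and calls are rejected without being tried."""


class CircuitBreaker:
    """Hypothetical breaker: opens after `max_failures` consecutive errors."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after  # seconds to wait before a trial call
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; failing fast")
            # Cool-down elapsed: allow one trial call (half-open state).
            # A single failure here re-opens the circuit.
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        self.failures = 0  # any success closes the circuit again
        return result


def with_retries(func, attempts=3):
    """Call func up to `attempts` times and re-raise the last error."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for _ in range(attempts):
        try:
            return func()
        except CircuitOpenError:
            raise  # retrying against an open circuit is pointless
        except Exception as exc:
            last_error = exc
    raise last_error
```

With a sidecar proxy, none of this has to live in the application: the same behavior is configured once, in one place, for services written in any language.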

The other benefit you get with this approach is that you can instrument the proxies themselves and you can have a consistent way to see request rates, error rates, latencies, who's talking to who across your entire infrastructure without having to coordinate between different application teams to instrument all their various applications in a consistent way.

The final thing to note, there are two distinct components within the service mesh. The control plane is the source of truth. This is the distributed system that worries about what should be happening and it distributes those updates through your cluster, and then the proxy itself is the data plane, so all your application traffic flows through that proxy. It's important to note that the service mesh solution as a whole should be resilient, so if the control plane has an outage, so if all your Consul servers go down, you don't want all of your application traffic to stop. It won't, in this case, because it's only the proxies themselves that are forwarding that traffic.

That's service mesh defined in a nutshell. I want to move on and talk about Connect. Consul and Connect and Kubernetes all together. Mitchell's already set out a lot of the case for this but our goal with Consul is to solve these service-connectivity problems but for any platform that you're running on. So this isn't us saying, "Kubernetes is the new way to run Consul, you should all do that." This is us saying, "If you're using Consul, Kubernetes should work really well for you in that environment as well as other environments." I'm going to demo this, we're going to show some of the work over the last couple of months that just got announced for integrating with Kubernetes.

A live demo of integrating Consul with Kubernetes

The first question that might come up is "Doesn't Kubernetes kind of solve all these problems already? Why would we need to use Consul as well?" The answer is, it mostly does, yes. The discovery and configuration are built in, you get those out of the box, and it has network policies for security. You do need a separate controller layer, something like Calico that will enforce those policies by configuring iptables, for example. But the key thing here is that these solutions only work for your Kubernetes workloads.

We've just heard about all the many reasons that we see customers, even the ones who are kind of all in on Kubernetes, their reality is not 100% Kubernetes, and even with best intentions there are usually many reasons for having some workloads that are never going to make sense to run in Kubernetes. We want to build a tool that is going to work great there, but it's going to also work great in all your other environments that you have to run things. Even better, it will work seamlessly across the 2, so we're going to show a bit of that today.

We're going to get right into some demos in a minute, and this is the moment of truth. But just before we do that, just before the fireworks begin, I want to show you, visually, what we're going to be installing here.

The first thing we want to do is install Consul on a Kubernetes cluster. If you're not super familiar with Kubernetes then that's cool, hopefully you'll take something away from this demo. I will mention a few details, just for those who are a bit more familiar, but I hope the overall story is clear. Please excuse me for not explaining every detail as we go through.

About a month ago we released the official Consul Helm Chart. Helm is like the package manager for Kubernetes. It packages up complicated applications into the resources needed to run them on Kubernetes. We're going to use that to install Consul. What it's going to look like, because it helps to have this in your mind, once we start looking at the UIs, is this. We're going to have a 5-node Kubernetes cluster and the Helm Chart is going to install a Consul client onto every one of those nodes and then it's going to install 3 servers. We use 3 because they store state in a strongly consistent way with the Raft consensus protocol so you need 3 to be tolerant of one failing.
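The "3 servers to tolerate one failure" point follows from the majority quorum that Raft needs. A small Python sketch of the arithmetic (the function names are just for illustration):

```python
def quorum(servers: int) -> int:
    """Smallest majority of a cluster with `servers` voting members."""
    return servers // 2 + 1


def failures_tolerated(servers: int) -> int:
    """How many servers can fail while a majority is still reachable."""
    return servers - quorum(servers)


for n in (1, 2, 3, 5):
    print(f"{n} servers: quorum={quorum(n)}, tolerates {failures_tolerated(n)} failure(s)")
```

Note that 2 servers tolerate no failures at all, which is why 3 is the smallest useful cluster size, and why 5 is the next step up if you need to survive two failures.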

They're going to end up on any 3 random nodes, I don't know where. A slight quirk is that we're going to end up with a client and a server on the same host. That isn't typical. If you deploy by hand in a data center, you generally wouldn't do that because the servers are also clients, but Kubernetes doesn't currently give you a way to express "I want to run on every machine except the machines that these 3 things are running on." It doesn't matter, Consul doesn't mind, so that's how it's going to look.

The key thing I want to note here is that the client agents are installed per host, they're not inserted into every pod or every network namespace container. They are per host. If you want to talk to them from your app to consume the API, you can do that using the host IP, and Kubernetes can make that available to your pod.
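As a rough sketch of what "talk to the agent on the host IP" could look like from inside a pod: assume the pod spec exposes the node's IP to the container as an environment variable (here hypothetically named `HOST_IP`, populated from the pod's `status.hostIP` field) and that the agent listens on Consul's default HTTP port, 8500.

```python
import os


def consul_http_addr(env=os.environ, port=8500):
    """Build the address of the node-local Consul agent.

    HOST_IP is an assumed variable name; it has to be injected into the
    pod by the deployment, it is not set automatically.
    """
    host_ip = env.get("HOST_IP")
    if not host_ip:
        raise RuntimeError("HOST_IP is not set; expose status.hostIP to the pod")
    return f"http://{host_ip}:{port}"
```

An application would then point its Consul client at the returned address instead of at localhost, since the agent runs on the node rather than inside the pod.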

That's what we've got, who's ready for some demos? I might need some encouragement if this doesn't work. If you've not seen this before, this is the Google Cloud platform. We're doing this on a real cloud, guys, please don't start downloading things, using up all the interwebs. Let's just check I've got internet, here we go, it's looking good. We already have our Kubernetes cluster set up. Of course, that was just a single Terraform command, I could have done that live too but it takes a couple minutes, so I didn't.

We have 5 nodes, that's what we wanted, and then if we look here, there are no workloads, so nothing is running on this cluster and there are no services to find, so far. It's an empty Kubernetes cluster right now. The first thing we're going to do is install Consul, and I just want to show you a little bit of config with my fancy cat alternative, here we go.

The Helm Chart itself, by default, with no config changes, will get you the Consul cluster we just looked at. But there are just a couple features and options that we want to enable for this demo, and I'll show you really quickly. One is we're going to have a cloud load balancer just to make it easier for me to connect to the UI without setting up port forwarding and things. You probably don't want to do that in production, and just expose your Consul UI to the public.

Next, we're going to enable catalog sync, and this is cool, but I'll come back to that later. Next thing, we're going to enable Consul connectInject. This is the thing that's going to inject Connect Envoy proxies into any pod that requests it. You can have it do it by default, but we're going to have to opt in here. Last, this is just a bit of housekeeping, Envoy requires a gRPC API and so we just have to enable that, because it's not on by default.
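The values file itself is not reproduced in this transcript, so the following is only a reconstruction of the four options just described. The key names are assumptions based on the Consul Helm chart and should be checked against the `values.yaml` of the chart version you install. The sketch emits JSON, which is also valid YAML, so the output could be saved to a file and passed to `helm install` with `-f`.

```python
import json


def demo_helm_values():
    """Overrides matching the four options described in the talk (assumed key names)."""
    return {
        # Expose the UI through a cloud load balancer (demo convenience only).
        "ui": {"service": {"type": "LoadBalancer"}},
        # Sync services between the Kubernetes and Consul catalogs.
        "syncCatalog": {"enabled": True},
        # Run the webhook that injects Connect proxies into pods that opt in.
        "connectInject": {"enabled": True},
        # Enable the agents' gRPC API, which Envoy needs for its configuration.
        "client": {"grpc": True},
    }


print(json.dumps(demo_helm_values(), indent=2))
```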

I should be able to do helm install and then those values. I'm going to give this a name, I'm just going to call it Consul. Helm picks a random name if you don't give it one. Our official Consul Helm Chart will be adopted by the official central Helm repository and so you'll be able to do this by name and it will know where to go and download it. Right now they're working through some issues, so I'm just going to do this from the Helm Chart that I've got checked out right here on my machine. Here we go, let's see if it works.

There we go, a bunch of stuff happened. We'll go and see what it set up. These are the workloads in our cluster. It's done something, we have a couple of warnings. Things are still coming up and getting healthy but we'll talk through that. What we can see is Consul, this is the client agents and they're running as a DaemonSet, so that means 1 per node in your cluster and there are 5 of those, like we expect. We have the servers here, we have 3 of them, they're actually a StatefulSet because Consul servers store state.

You can see we've got 2 other services running here already, 2 other workloads. One of those is the webhook that enables that connectInject feature I was talking about, and this bottom one is the catalog sync, the mysterious catalog sync, and again I will come back to that in a minute and show you what it's doing instead of just telling you.

Finally, we've got some services, and because we added that load balancer thing to our config, it's created a load balancer and it's already got an IP address. This is the thing that can sometimes take a while, and so you can see 3 healthy Consul nodes and the UI is available to us and we're all good.

The other cool thing we can see is that Consul UI service, who registered that? This is the mysterious catalog sync, that is the Kubernetes service that we created to expose this UI through a load balancer and because it's an externally accessible service, the catalog sync service has automatically registered that in Consul for us, and that means that anything outside of Kubernetes that is using the same Consul cluster can discover and access that service just using Consul's regular service discovery.

Running a demo app with Consul

I want to run a demo app to show you how Connect works, but before we jump straight to connecting it up, we're going to install it on the Kubernetes cluster without Connect first. Our app is called Emojify, we'll see what it does in a minute, it's quite fun. This is the architecture we're deploying, we have 4 different services. The ingress service here is an NGINX container, and it's going to do basic path-based load balancing and routing, it's routing slash to the web service, I think it's actually called website in Kubernetes.

And slash API is going to API service. The API service is a Go HTTP service, and it's going to consume a mysterious backend called Facebox. If I say "Facebook" by mistake, please ignore me. This is what we want to set up, and we're going to come right over here and see that.
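The path-based routing the ingress performs can be pictured with a few lines of Python. This is a simplified longest-prefix match on whole path segments, not NGINX's actual matching rules, and the service names `website` and `api` are taken loosely from the description above.

```python
ROUTES = {"/": "website", "/api": "api"}


def route(path, routes=ROUTES):
    """Return the backend for `path` using the longest matching prefix."""
    matches = [
        prefix
        for prefix in routes
        if path == prefix or path.startswith(prefix.rstrip("/") + "/")
    ]
    if not matches:
        raise ValueError(f"no route for {path!r}")
    return routes[max(matches, key=len)]
```

So a request for `/api/anything` goes to the API service, while everything else falls through to the website.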

I have all the YAML so these 4 files contain all of the Kubernetes resources we need to set up this app. I'm not going to show them to you right now, we will take a look in a minute, but let's just see, all I have to do is kubectl apply and give it a directory and all of the resources and all of the YAML files in that directory are just going to get created in the cluster for me.

If we come back here, we should see a bunch of new workloads. These 4 Emojify workloads have turned up, and they are okay already and we should see some new services. Here we've exposed another load balancer so we should be able to load up our app as soon as this load balancer is provisioned. This is the bit that can often take a while. Google Cloud takes sometimes a few seconds, sometimes 30 seconds or so to get the load balancer up, so we're going to wait for that.

There it is. Hopefully it's going to load straight away. We have our app and it's up. Let me just show you this app because this is pretty fun. This is the website, it's a single-page React app and it's being served by that website service, and when we enter an image in here, it's going to submit that to the API service. I'm going to grab a picture of my favorite band, here's a test for anyone who grew up in Britain in the '90s, and we're going to submit that. After just a few seconds of loading we should find that all of the faces in the image have been detected by this Facebox service, which is using some machine learning models to detect faces, and then our backend has replaced them all with a random emoji.

That's pretty cool, that's working, but just to recap, this is all just using standard Kubernetes service discovery. Our challenge now is, how do we use Connect to connect these services together? All of these services are exposed to the whole data center at the moment.

I have an alternative set of YAML files here, don't you just love YAML? You can't get enough of it if you work with Kubernetes. I'm not going to show you the diff for the whole lot because it's kind of repetitive, but I'll just show you the API service, the changes to that. This is the one sitting in the middle, remember: NGINX is talking to the API and then the API is talking to the Facebox service.

The first change here is, we don't have a Kubernetes service definition anymore. This is because we don't need Kubernetes service discovery for the app. I could have left it in, it doesn't make much difference, but we're going to remove it and we'll see why in just a second.

This is all the rest of the changes to this service here. You can see we've added a couple of annotations. The first one is just opting us in, it's saying, "Please inject an Envoy proxy into this pod so I can use Connect." This next one is config for the proxy itself, it's saying, "The local app's running on 9090." And that's the same thing that you see down here, this is the argument we're passing to the binary to listen, on 9090.

This last one is saying to the proxy, "We need to speak to the Facebox service to consume that." That's here. Then we want that Facebox service to be mounted locally on our loopback interface on port 8002. This is pretty much a random number. The only thing that cares about this is this line down here, so this is only accessible inside the pod, inside the network namespace that's running this service. As long as these two things match, we're good.

You can see that the URL that we used to be giving for Facebox was using KubeDNS, Kubernetes' service-discovery mechanism, but we don't need that anymore. We just need to talk to localhost:8002, and we no longer need to bind to all interfaces. We only bind the actual service to localhost as well, so nothing is going to be able to access this service unless it's going to come through that Connect proxy, and the Connect proxy is going to enforce mutual TLS and authorization.
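The diff is shown on screen rather than in this transcript, so here is a hedged reconstruction of the three annotations just described, plus what they mean for the application. The annotation keys are what I believe the Consul Kubernetes integration uses; treat them as assumptions and check them against the documentation for your version. The helper names are invented for this sketch.

```python
def connect_annotations(service_port, upstreams):
    """Pod annotations for opting in to Connect (assumed key names)."""
    return {
        # Opt this pod in to proxy injection.
        "consul.hashicorp.com/connect-inject": "true",
        # Port the local application listens on.
        "consul.hashicorp.com/connect-service-port": str(service_port),
        # Upstream services and the local port each is exposed on.
        "consul.hashicorp.com/connect-service-upstreams": ",".join(
            f"{name}:{port}" for name, port in upstreams.items()
        ),
    }


def local_upstream_addrs(annotation_value):
    """Map each upstream to the loopback address the app should dial."""
    addrs = {}
    for item in annotation_value.split(","):
        name, port = item.strip().split(":")
        addrs[name] = f"localhost:{int(port)}"
    return addrs


annotations = connect_annotations(9090, {"facebox": 8002})
print(local_upstream_addrs(annotations["consul.hashicorp.com/connect-service-upstreams"]))
```

The only coupling between the annotation and the application is the port number: the app is configured to call `localhost:8002`, and the proxy takes care of finding and authenticating a Facebox instance.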

I'm going to apply this, then we can watch the workloads all restart. They're usually pretty quick, the ingress takes the longest because there are a couple of them and it does rolling restarts. We just need to wait until the old one's gone. This pod's still initializing, we can see the revisions here. This will still work, by the way. The app is still up, I just want to make sure I'm definitely hitting the new thing that's using Connect before we move on. We can see, if we reload the app, it should still be working. So we're good.

I could carry on here and we get a 50/50 chance, right? We have one ingress that's running the old version without Connect, and one that's running the new one right now. Should we play roulette? See if the rest of it works? I'm gonna talk through the next bit and then we'll see if it works.

This is probably now running using Connect. But you can't see any difference. Maybe that's because we're hitting the instance that's not using Connect yet, but maybe that's because Connect's actually working and it is allowing all by default. We can demo that it does work (this is back in the Consul UI) by creating an intention that explicitly denies all traffic going between the ingress, the NGINX, and the website. If we create that, then instantly you get a 502. This is an expected error, guys. You should be happy about this one.

This is NGINX attempting to connect to a website instance and yet the website -- the proxy in the website instance -- is saying, "Sorry, you can't connect. The authorization call to Consul is failing." So it's just dropping the connection. NGINX can't get a connection to any of the backends for the website, so it's giving you a 502 back. This is showing us that Consul's intentions are working and as soon as we delete that intention, everything comes back up and we're working again. This is showing you that Connect is now securing and controlling access to every one of those 4 services and they are all now encrypted on the network.
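The behavior just demonstrated (allow by default, one explicit deny, then allow again once the deny is deleted) can be modeled in a few lines. This is a simplified sketch of the idea, not Consul's implementation: real intentions have more precedence rules, and the default depends on how ACLs are configured. The service names are placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intention:
    source: str       # service name or "*"
    destination: str  # service name or "*"
    action: str       # "allow" or "deny"


def is_allowed(source, destination, intentions, default_allow=True):
    """Decide whether `source` may connect to `destination`."""
    matching = [
        i
        for i in intentions
        if i.source in (source, "*") and i.destination in (destination, "*")
    ]
    if not matching:
        return default_allow
    # Prefer the most specific rule: exact destination first, then exact source.
    best = max(matching, key=lambda i: (i.destination != "*", i.source != "*"))
    return best.action == "allow"


rules = [Intention("ingress", "website", "deny")]
print(is_allowed("ingress", "website", rules))  # the demo's 502 case
print(is_allowed("ingress", "website", []))     # after deleting the intention
```

In the demo, the destination's proxy makes this decision by asking Consul, which is why the change takes effect as soon as the intention is created or deleted.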

We've seen how to add Connect to an existing Kubernetes app. It was alright, wasn't it? Just a couple of lines have changed to inject that proxy in to configure it. But this whole theme we've had going through the keynote is about "How do we have a great experience on Kubernetes, but also a great experience on all the other platforms as well?"

For the final part of this demo, we're gonna integrate some legacy. The story goes like this. You've built Emojify in your spare time and you take it to show your boss and he is really excited. He looks at you with a gleam in his eye and says, "How do we make some money from this thing?" Alright? "I've got it," he says. "We're gonna sell prints of these wonderful artistic creations."

Your heart sinks at this idea. Why? Not because prints are a bad idea, 'cause clearly everybody wants prints of emojified faces. Your heart sinks because that means you have to talk to the legacy payments API, and the payment service is this box in your data center. It's attached to your merchant's point-of-sale device with a serial cable. There's no way that thing's ever gonna make it into Kubernetes.

Even worse, last time you had to integrate something with payments, it took you 3 months and 5 Jira tickets just to get them to open your firewall port to that box. That was just for one IP address. You're now asking them to open the IP for every IP in the whole range that any pod in Kubernetes could ever have. Because you don't know where this is gonna end up landing. We're gonna see how Consul can maybe help you with this. Maybe it can't help you deal with Jira tickets, but we'll show you the rest.

This is what the new architecture's gonna look like. We're gonna have the same app running in Kubernetes, but now the API has to talk to a payment service which is outside. Playing the part of this legacy payment service is this VM I set up over here.

Ladies and gentlemen, this is SSH on a real VM in a Kubernetes demo. This isn't really legacy. This is an Ubuntu box that's using systemd. You have to use your imagination a little bit, but just to help you along, we've built a genuine Java Spring Boot API for this payment gateway. This is what we have to talk to and I'm just gonna start a couple things off here. And we will see what that is.

What I've just started there are another couple of systemd units. One of them is gonna run a Consul agent on this VM that's outside of Kubernetes, but it needs to join the Consul cluster that's inside of Kubernetes. The one we installed just now with our Helm Chart. Instead of trying to figure out what the IP addresses are gonna be for that thing, we can just use the new Kubernetes discovery built into Consul. We've given read-only access credentials for the Kubernetes API and dropped them on this box. All you have to do is—you need the Kubernetes provider—specify which label you need. It's gonna select all pods that have app=consul and component=server and it's gonna find the IPs of those. Then it's just gonna connect to that automatically.
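The join configuration is not shown in the transcript. As far as I know, Consul's cloud auto-join takes a single provider string passed to the agent's `-retry-join` option, so the sketch below just assembles that string from the labels mentioned above. The exact parameter names (`label_selector`, `kubeconfig`) are my understanding of the Kubernetes provider and should be verified; the kubeconfig path in the usage line is hypothetical.

```python
def k8s_retry_join(labels, kubeconfig=None):
    """Build an auto-join string selecting Consul server pods by label."""
    selector = ",".join(f"{key}={value}" for key, value in labels.items())
    parts = ["provider=k8s"]
    if kubeconfig:
        # Path to the read-only Kubernetes credentials dropped on the VM.
        parts.append(f"kubeconfig={kubeconfig}")
    parts.append(f'label_selector="{selector}"')
    return " ".join(parts)


print(k8s_retry_join({"app": "consul", "component": "server"},
                     kubeconfig="/etc/consul/kubeconfig"))
```

The agent resolves that string to the current pod IPs each time it tries to join, so nothing on the VM needs updating when the server pods are rescheduled.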

The other thing we ran was a Consul Connect proxy, and the fun thing to note here is that this is the Consul binary. This is the built-in proxy. This is not gonna be Envoy, but it's gonna be talking to Envoy on the other end. So this is showing you that it's just mutual TLS. As long as they are built the right way, they can talk to each other. And we're using the built-in one 'cause it has a couple of nice features. You can just tell it what service you want to represent. You can tell it where you wanna listen. Then you can even have it register in Consul for you, which just makes this demo a little bit easier.
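"Just mutual TLS" means that any two proxies can interoperate as long as both present certificates the other side trusts. As a generic illustration using Python's standard `ssl` module (this is not Consul's code, and the file arguments are placeholders), a server side that insists on a client certificate looks roughly like this:

```python
import ssl


def mutual_tls_server_context(cert_file=None, key_file=None, ca_file=None):
    """TLS server context that rejects clients without a trusted certificate."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # The "mutual" part: the client must present a certificate too.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if cert_file and key_file:
        # This service's own identity.
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if ca_file:
        # The CA whose client certificates we trust.
        ctx.load_verify_locations(cafile=ca_file)
    return ctx
```

In Connect, the certificates and CA roots are handed to the proxies by Consul, and the authorization check against intentions happens on top of the TLS handshake.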

We now see both the payment service and its proxy registered in our Consul. Remember this is the Consul cluster running inside Kubernetes and our workloads outside of Kubernetes have just been able to discover and register.

Even more fun than that, do you remember that catalog sync feature we turned on? It works the other way, too. You can see down at the bottom here, the payment service and the payment proxy are now showing up in Kubernetes. And they have this consul true label. That means that anything running inside your Kubernetes cluster can now use native KubeDNS discovery to discover the IP of the thing that's running outside of Kubernetes.

We have our payment service. We now need to change our app to use it. I'm just gonna once again show you the diff on the API config. The only change is, we're adding a new upstream. We're now saying, "We also need to be able to talk to the payment service," and we're just picking another port, 8004. The line has wrapped on screen, unfortunately. But, there we go. The only other thing is, we're configuring the API itself. Your payment service is now on localhost:8004. It's worth noting here that this is no different. In your config, connecting to something outside Kubernetes looks no different from connecting to something inside. It's all in Consul and it's all the same.

It's gone almost too well so far. Let's see if this last bit can work for us. We are restarting that Kubernetes pod and that may or may not be a good error. We can come back here and see when it reloads.

I'm gonna go check out this error. It's a new one on me. I didn't want to learn more, thank you. You often see errors like this. This is the old one that's erroring, so I think we're good. We should be good in just a second. We're waiting for the config map for the website service to update, which can take a while. It looks like I could be out of time to show you, which is unfortunate.

Our payment service sadly is not there yet. Once it is, we should see the upgrade to Emojify Enterprise and we should be able to go through this whole flow and then buy a print at the end. Sadly, I'm out of time. I'd love to finish this demo if anyone wants to drop by the Consul booth, but let me just wrap up quickly.

What we did here, we installed Consul clients and servers on an empty Kubernetes cluster. We deployed an app containing 4 services on it. We upgraded those so they can use Consul Connect to secure all their traffic. And then we—you didn't quite see it, but honestly, we did—used Connect to secure that legacy service.

Thank you very much.