When making changes to infrastructure managed by Terraform, we want to assess, test, and appropriately limit their impact in production. In this post, we demonstrate some approaches to feature toggling, blue-green deployment, and canary testing of Terraform resources to mitigate impact to production infrastructure.

The application of these approaches differs based on the infrastructure resource and its upstream dependencies. For example, network changes can be particularly disruptive for applications hosted on the network and often require a combination of feature toggles and blue-green deployment. This allows us to test changes to infrastructure in production without fully recreating the system in a staging environment.

»Feature Toggles

Feature toggles, also called feature flags, turn system functionality on or off with a boolean value. In software development, we use feature toggles to turn features on or off for users in order to test functionality in production. Similarly, infrastructure feature toggles can be used to deploy separate infrastructure that supports upstream dependencies. When we do not have enough resources to reproduce a production system, we can use feature toggles to isolate and test an infrastructure resource before releasing it for use.

In Terraform, we can create a feature toggle with a conditional expression. By adding the count meta-argument to the resource, we can toggle the creation of a specific resource.

variable "enable_new_ami" {
  default = false
  type    = bool
}

resource "aws_instance" "example" {
  instance_type          = "t2.micro"
  ami                    = data.aws_ami.ubuntu.id
  vpc_security_group_ids = [aws_security_group.instances.id]
  subnet_id              = aws_subnet.public.id

  tags = {
    Has_Toggle = var.enable_new_ami
  }
}

resource "aws_instance" "example_bionic" {
  count                  = var.enable_new_ami ? 1 : 0
  instance_type          = "t2.micro"
  ami                    = data.aws_ami.ubuntu_bionic.id
  vpc_security_group_ids = [aws_security_group.instances.id]
  subnet_id              = aws_subnet.public.id

  tags = {
    Has_Toggle = var.enable_new_ami
  }
}

The aws_instance.example_bionic virtual machine is created only when var.enable_new_ami is set to true. The toggle can facilitate a blue-green deployment approach, which runs two resources in parallel before switching completely to the newest one.
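Other parts of the configuration may need to refer to the toggled instance, which only exists while count is 1. As a minimal sketch, assuming a hypothetical output named example_bionic_id that is not part of the original configuration, the reference can be guarded with the same variable:

```hcl
# Hypothetical output: the name "example_bionic_id" is an assumption
# made for illustration and does not appear in the original post.
# aws_instance.example_bionic uses count, so it must be referenced by
# index, and index 0 only exists while the toggle is on.
output "example_bionic_id" {
  description = "ID of the toggled instance, or null while the toggle is off"
  value       = var.enable_new_ami ? aws_instance.example_bionic[0].id : null
}
```

Guarding the reference with the toggle variable keeps terraform plan from failing on a missing index while the toggle is off.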

If an in-place upgrade has sufficiently low impact, we can toggle a parameter on a resource, such as a virtual machine image.

variable "enable_new_ami" {
  default = false
  type    = bool
}

resource "aws_instance" "example" {
  instance_type          = "t2.micro"
  ami                    = var.enable_new_ami ? data.aws_ami.ubuntu_bionic.id : data.aws_ami.ubuntu.id
  vpc_security_group_ids = [aws_security_group.instances.0.id]
  subnet_id              = aws_subnet.public.0.id

  tags = {
    Has_Toggle = var.enable_new_ami
  }
}

In both use cases, we ensure the toggle defaults to false until we want to test and use the resource. We also add a Has_Toggle tag to each resource that depends on a toggle, so that we can find the resource later and remove the toggle, preventing the accumulation of technical debt.

»Blue-Green Deployments

For most infrastructure changes, we want to mitigate the risk of a change by creating a duplicate set of resources with new configuration for initial testing in production. We can apply this technique, called blue-green deployment, to a variety of resources including networking, virtual machines, and even account credentials. We often refer to the live infrastructure resources as “blue” and the new infrastructure resources as “green”. Before we release the “green” resources, we want to ensure the system works as a whole.

For example, suppose we have an application linked to a load balancer and a DNS entry, which we can access at application.test.in.production.

resource "aws_instance" "application" {
  instance_type          = "t2.micro"
  ami                    = data.aws_ami.nginx.id
  vpc_security_group_ids = [aws_security_group.instances.0.id]
  subnet_id              = aws_subnet.public.0.id

  tags = {
    Name = "${var.prefix}-application"
  }
}

resource "aws_elb" "application" {
  name    = "${var.prefix}-elb"
  subnets = [aws_subnet.public.0.id]

  listener {
    instance_port     = 80
    instance_protocol = "http"
    lb_port           = 80
    lb_protocol       = "http"
  }

  health_check {
    healthy_threshold   = 2
    unhealthy_threshold = 2
    timeout             = 3
    target              = "HTTP:80/"
    interval            = 30
  }

  instances = [aws_instance.application.id]
}

resource "aws_route53_record" "application" {
  zone_id = aws_route53_zone.private.zone_id
  name    = "application.${aws_route53_zone.private.name}"
  type    = "A"

  alias {
    name                   = aws_elb.application.dns_name
    zone_id                = aws_elb.application.zone_id
    evaluate_target_health = true
  }

  weighted_routing_policy {
    weight = 10
  }

  set_identifier = "blue"
}

Imagine we have a new version of the application. We want to ensure the new version works before releasing it to users. We create a “green” set of infrastructure with the new application version for testing.

variable "enable_green_application" {
  default = false
  type    = bool
}

resource "aws_instance" "application_green" {
  count                  = var.enable_green_application ? 1 : 0
  instance_type          = "t2.micro"
  ami                    = data.aws_ami.nginx_green.id
  vpc_security_group_ids = aws_security_group.instances.*.id
  subnet_id              = aws_subnet.public.0.id

  tags = {
    Has_Toggle = var.enable_green_application
  }
}

resource "aws_elb" "application_green" {
  count   = var.enable_green_application ? 1 : 0
  name    = "${var.prefix}-green-elb"
  subnets = aws_subnet.public.*.id

  listener {
    instance_port     = 80
    instance_protocol = "http"
    lb_port           = 80
    lb_protocol       = "http"
  }

  health_check {
    healthy_threshold   = 2
    unhealthy_threshold = 2
    timeout             = 3
    target              = "HTTP:80/"
    interval            = 30
  }

  instances = aws_instance.application_green.*.id

  tags = {
    Has_Toggle = var.enable_green_application
  }
}

resource "aws_route53_record" "application_green" {
  count   = var.enable_green_application ? 1 : 0
  zone_id = aws_route53_zone.private.zone_id
  name    = "application.${aws_route53_zone.private.name}"
  type    = "A"

  alias {
    name                   = aws_elb.application_green.0.dns_name
    zone_id                = aws_elb.application_green.0.zone_id
    evaluate_target_health = true
  }

  weighted_routing_policy {
    weight = 0
  }

  set_identifier = "green"
}

When we insert the green set of infrastructure into our Terraform configuration, we want to ensure that none of the existing “blue” infrastructure changes. Using a feature toggle, we can disable the “green” infrastructure until we are ready to test. As a result, the output of terraform plan shows infrastructure is up-to-date.

> terraform plan
Refreshing Terraform state in-memory prior to plan...
...
No changes. Infrastructure is up-to-date.

This means that Terraform did not detect any differences between your
configuration and real physical resources that exist. As a result, no
actions need to be performed.

When we toggle on the new application, the output of terraform plan adds the new “green” resources.

> terraform plan -var=enable_green_application=true
...
Terraform will perform the following actions:

  # aws_elb.application_green[0] will be created
...
Plan: 3 to add, 0 to change, 0 to destroy.
...

In the example, we update the weight of the DNS record in order to gradually route traffic to the “green” resources. When 100% of traffic flows through the new infrastructure resources, we can remove the “blue” infrastructure from Terraform configuration and eliminate the feature toggles.
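One way to shift traffic gradually is to drive both record weights from input variables instead of editing literal values. The following is only a sketch: the variable names blue_weight and green_weight are assumptions introduced for illustration, and they stand in for the fixed weights of 10 and 0 in the two records defined earlier.

```hcl
# Assumed variables (not in the original configuration) that control how
# Route 53 splits traffic between the two weighted records.
variable "blue_weight" {
  type    = number
  default = 10
}

variable "green_weight" {
  type    = number
  default = 0
}

# The "blue" record from the post, with its weight taken from a variable.
resource "aws_route53_record" "application" {
  zone_id = aws_route53_zone.private.zone_id
  name    = "application.${aws_route53_zone.private.name}"
  type    = "A"

  alias {
    name                   = aws_elb.application.dns_name
    zone_id                = aws_elb.application.zone_id
    evaluate_target_health = true
  }

  weighted_routing_policy {
    weight = var.blue_weight
  }

  set_identifier = "blue"
}

# The "green" record from the post, still guarded by the feature toggle.
resource "aws_route53_record" "application_green" {
  count   = var.enable_green_application ? 1 : 0
  zone_id = aws_route53_zone.private.zone_id
  name    = "application.${aws_route53_zone.private.name}"
  type    = "A"

  alias {
    name                   = aws_elb.application_green[0].dns_name
    zone_id                = aws_elb.application_green[0].zone_id
    evaluate_target_health = true
  }

  weighted_routing_policy {
    weight = var.green_weight
  }

  set_identifier = "green"
}
```

Route 53 answers queries for each record in proportion to its weight divided by the sum of all weights. For example, applying with blue_weight set to 9 and green_weight set to 1 should send roughly a tenth of DNS responses to the “green” load balancer, subject to DNS caching by clients.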

resource "aws_instance" "application_green" {
  instance_type          = "t2.micro"
  ami                    = data.aws_ami.nginx_green.id
  vpc_security_group_ids = aws_security_group.instances.*.id
  subnet_id              = aws_subnet.public.0.id

  tags = {
    Has_Toggle = var.enable_green_application
  }
}

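When we remove count from the resource, Terraform automatically maps the previously indexed instance, aws_instance.application_green[0], to the single unindexed resource in state. As a result, the resource itself does not change. Because we no longer set the toggle, var.enable_green_application falls back to its default of false, so the Has_Toggle tag for the virtual machine will be updated in-place without affecting the virtual machine.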

> terraform plan
...
  # aws_instance.application_green will be updated in-place
  ~ resource "aws_instance" "application_green" {
        ## Omitted for clarity
      ~ tags = {
          ~ "Has_Toggle" = "true" -> "false"
        }
...
Plan: 0 to add, 2 to change, 3 to destroy

When we preserve the name of resources, we do not need to re-import an existing resource under a new name. By removing the “blue” infrastructure configuration, Terraform notes that the “blue” resources will be destroyed. In the next update of the application, we can name the application aws_instance.application_blue and repeat the pattern we established.
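If a resource name does have to change, newer Terraform releases (1.1 and later, so not the 0.12 release this post refers to) support a moved block that records the rename in configuration. A hedged sketch, in which aws_instance.application_current is a purely hypothetical new name:

```hcl
# Requires Terraform 1.1 or later. The target address is hypothetical
# and chosen only for illustration.
# The block tells Terraform to track the existing state entry under the
# new address instead of planning a destroy and a create.
moved {
  from = aws_instance.application_green
  to   = aws_instance.application_current
}
```

The resource block itself would also need to be renamed to match the to address. After applying, terraform plan should report the move rather than a replacement.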

»Canary Tests

In order to determine if we can use our new infrastructure resources, we execute a canary test to quickly confirm proper configuration. We often apply this to blue-green deployments as confirmation before increasing traffic to the new resources.

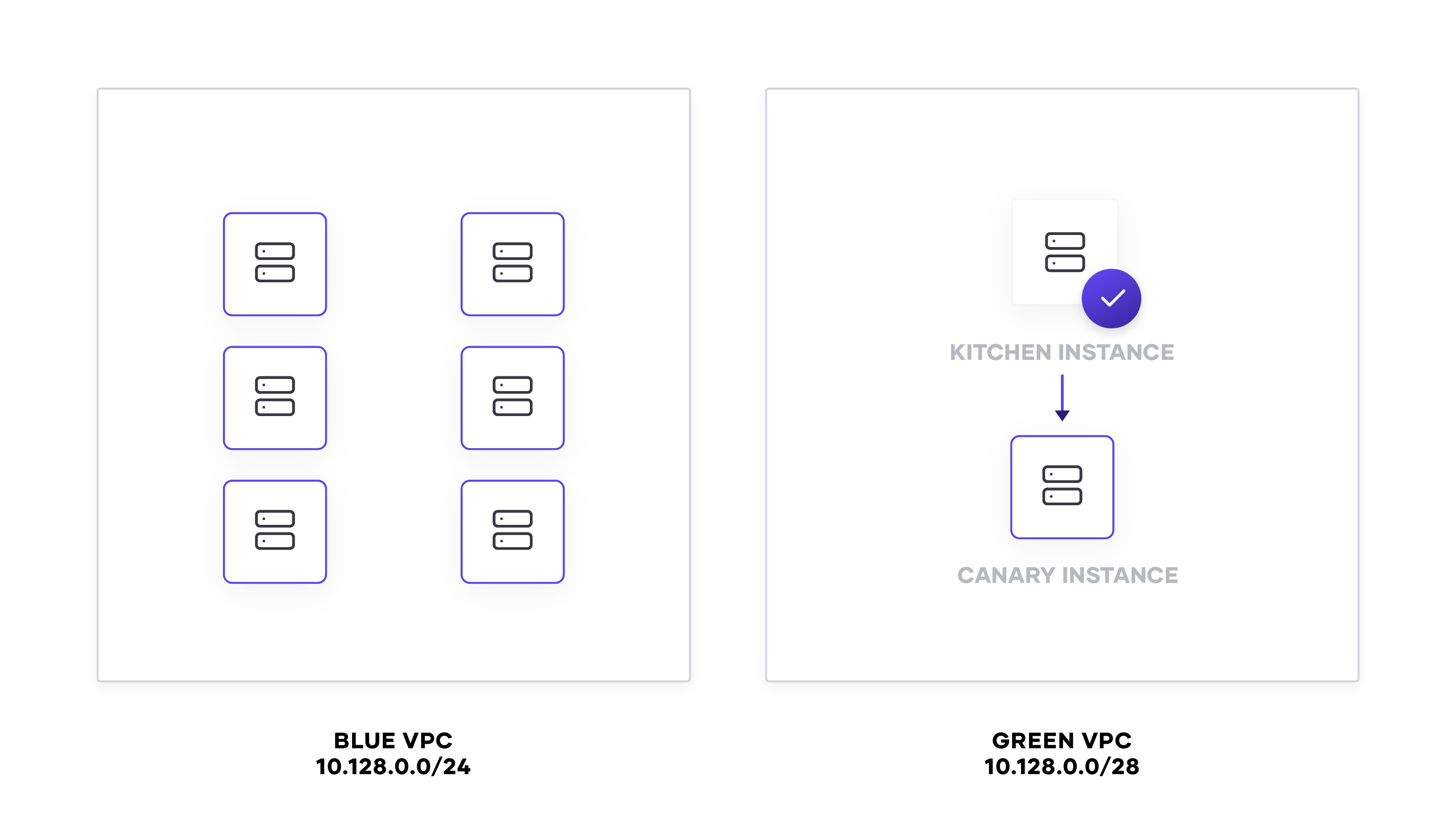

In the case of networking, we might want a canary test to check for network peering and routing configured with Terraform. Changes to networking potentially affect routing configurations, security groups, and applications. To ensure that our new subnet has the correct routing and configuration, we deploy a virtual machine that serves as the “canary” instance.

resource "aws_instance" "canary" {
  count                  = var.enable_new_network ? 1 : 0
  instance_type          = "t2.micro"
  ami                    = data.aws_ami.ubuntu.id
  vpc_security_group_ids = [aws_security_group.instances[1].id]
  subnet_id              = aws_subnet.public[1].id

  tags = {
    Name = "${var.prefix}-canary"
  }
}

We can use this instance to test routing from an external source to the new network, within the network, and between other networks, using tools such as kitchen-ec2 or Goss.
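The canary resource references var.enable_new_network, whose declaration is not shown in this post. The sketch below assumes it mirrors the earlier toggles, and adds two hypothetical outputs (the names canary_private_ip and canary_public_ip are illustrative) that a test tool could read to locate the instance:

```hcl
# Assumed declaration: the post uses var.enable_new_network without
# showing it, so this mirrors the other toggles and defaults to false.
variable "enable_new_network" {
  type    = bool
  default = false
}

# Hypothetical outputs for test tooling. Both are null while the toggle
# is off, because aws_instance.canary[0] does not exist in that case.
output "canary_private_ip" {
  description = "Private address for tests within and between networks"
  value       = var.enable_new_network ? aws_instance.canary[0].private_ip : null
}

output "canary_public_ip" {
  description = "Public address for tests from an external source, if one is assigned"
  value       = var.enable_new_network ? aws_instance.canary[0].public_ip : null
}
```

A test run could then pick up an address with terraform output canary_private_ip after applying with the toggle enabled.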

When our tests pass and we’ve confirmed the subnet works, we can destroy the canary instance and begin deploying new instances onto the “green” network.

»Conclusion

Using Terraform meta-arguments and its planning capability, we can feature toggle resources, deploy infrastructure in a blue-green approach, and test canary infrastructure in production. We demonstrated the use of the count meta-argument as a feature toggle and how to manage the toggle’s lifecycle. We applied the feature toggle to a blue-green deployment, allowing us to deploy similar infrastructure to test new configuration. Finally, we examined a canary test for a blue-green deployment to confirm its production readiness.

Testing-in-production approaches for infrastructure allow us to mitigate change impact, highlight dependencies, and reduce the overall cost of development environments. While we focus on infrastructure-related feature toggles in this post, we can use Consul KV or the LaunchDarkly Terraform provider to store and manage feature toggles for application uses.

To learn more about testing-in-production techniques and approaches, refer to this blog by Cindy Sridharan. For more information about Terraform 0.12 and its configuration language, see the official documentation. Learn more about Terraform at HashiCorp Learn.

Try the Use Application Load Balancers for Blue-Green and Canary Deployments tutorial on HashiCorp Learn.

Post questions about this blog to our community forum!