You can use retrieval augmented generation (RAG) to refine and improve the output of a large language model (LLM) without retraining the model. However, many data sources include sensitive information, such as personal identifiable information (PII), that the LLM and its applications should not require or disclose — but sometimes they do. Sensitive information disclosure is one of the OWASP 2025 Top 10 Risks & Mitigations for LLMs and Gen AI Apps. To mitigate this issue, OWASP recommends data sanitization, access control, and encryption.

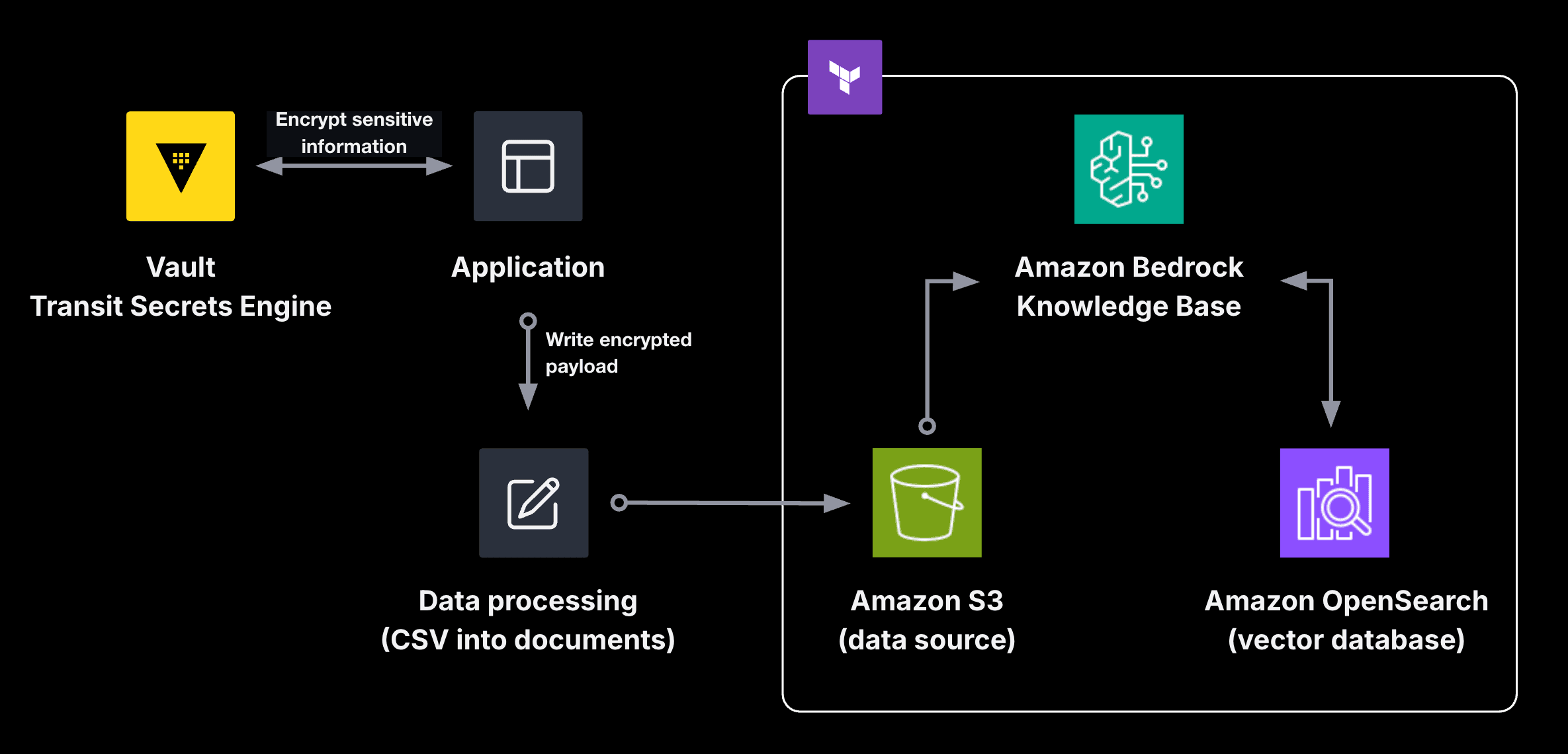

This post shows how HashiCorp Vault’s transit secrets engine can be configured to encrypt and protect sensitive data before sending it to an Amazon Bedrock Knowledge Base created by Terraform.

The sample dataset contains a list of vacation rentals from Airbnb, which includes the names of the hosts. Not all applications require the names of the hosts, so the original application collecting the information encrypts the field using Vault before storing a rental listing in the database. As a result, running queries against the Amazon Bedrock Knowledge Base outputs the encrypted name and prevents the leakage of PII.

»Encrypt and upload data

The demo uses an HCP Vault cluster with the transit secrets engine enabled. The host name for each rental gets encrypted by a key named listings with convergent encryption enabled. Convergent encryption ensures that a plaintext host name results in the same ciphertext. This allows the LLM to analyze each rental listing for similarities between hosts without knowing the actual host name.

resource "hcp_hvn" "rental" {

hvn_id = var.name

cloud_provider = "aws"

region = var.region

cidr_block = var.cidr_block

}

resource "hcp_vault_cluster" "rental" {

cluster_id = var.name

hvn_id = hcp_hvn.rental.hvn_id

tier = "plus_small"

public_endpoint = true

}

resource "hcp_vault_cluster_admin_token" "rental" {

cluster_id = hcp_vault_cluster.rental.cluster_id

}

resource "vault_mount" "transit_rental" {

path = var.name

type = "transit"

description = "Key ring for rental information"

default_lease_ttl_seconds = 3600

max_lease_ttl_seconds = 86400

}

resource "vault_transit_secret_backend_key" "listings" {

backend = vault_mount.transit_rental.path

name = "listings"

derived = true

convergent_encryption = true

deletion_allowed = true

}

To process a CSV file with a list of vacation rentals in New York City from January 2025, you would encrypt the name of the host as if it is sensitive data. Create a local script that uses the HVAC Python client for HashiCorp Vault to access the encryption API endpoint in Vault.

import base64

import json

import logging

import os

import boto3

import hvac

import pandas

from botocore.exceptions import ClientError

from langchain_community.document_loaders import CSVLoader

from vardata import S3_BUCKET_NAME

LISTINGS_FILE = "./data/raw/listings.csv"

ENCRYPTED_LISTINGS_FILE = "./data/listings.csv"

MOUNT_POINT = "rentals"

KEY_NAME = "listings"

CONTEXT = json.dumps({"location": "New York City", "field": "host_name"})

client = hvac.Client(

url=os.environ["VAULT_ADDR"],

token=os.environ["VAULT_TOKEN"],

namespace=os.getenv("VAULT_NAMESPACE"),

)

def encrypt_payload(payload):

try:

encrypt_data_response = client.secrets.transit.encrypt_data(

mount_point=MOUNT_POINT,

name=KEY_NAME,

plaintext=base64.b64encode(payload.encode()).decode(),

context=base64.b64encode(CONTEXT.encode()).decode(),

)

ciphertext = encrypt_data_response["data"]["ciphertext"]

return ciphertext

except AttributeError:

return ""

def encrypt_hostnames():

dataframe = pandas.read_csv(LISTINGS_FILE)

dataframe["host_name"] = dataframe["host_name"].apply(lambda x: encrypt_payload(x))

dataframe.to_csv(ENCRYPTED_LISTINGS_FILE, index=False)

# omitted for clarity

This example data would usually exist in a database. A separate application that adds the listing would encrypt and store the data in the database before it gets exported to CSV for reporting. While you could upload the CSV file in its entirety as a document, for this demo, continue processing the rental listings by uploading each record as its own document to debug and improve the LLM’s responses. For more information on how to process semi-structured data for Amazon Bedrock, review the AWS samples repository.

Each entry in the CSV file gets written as its own document to the S3 bucket. This example uses LangChain to convert each CSV record to a text file and upload it to S3.

import base64

import json

import logging

import os

import boto3

import hvac

import pandas

from botocore.exceptions import ClientError

from langchain_community.document_loaders import CSVLoader

from vardata import S3_BUCKET_NAME

LISTINGS_FILE = "./data/raw/listings.csv"

ENCRYPTED_LISTINGS_FILE = "./data/listings.csv"

MOUNT_POINT = "rentals"

KEY_NAME = "listings"

# omitted for clarity

def create_documents():

loader = CSVLoader(ENCRYPTED_LISTINGS_FILE)

data = loader.load()

return data

def upload_file(body, bucket, object):

s3_client = boto3.client("s3")

try:

s3_client.put_object(Body=body, Bucket=bucket, Key=object)

except ClientError as e:

logging.error(e)

return False

return True

def main():

encrypt_hostnames()

docs = create_documents()

for i, doc in enumerate(docs):

upload_file(doc.page_content, S3_BUCKET_NAME, f"listings/{i}")

if __name__ == "__main__":

main()

This script is intended for educational and testing purposes only. In a production use case, you can process data using AWS Sagemaker.

The script creates a text file with the name of the host in ciphertext. All other non-sensitive attributes, such as room type and listing ID, remain in plaintext.

id: 1284789

name: Light-filled Brownstone Triplex with Roof Deck

host_id: 5768571

host_name: vault:v1:ocqgeegsqMeo2PawbxsK8lQ1Sqp5/VVGqftD4DUbx1iKMw==

neighbourhood_group: Brooklyn

neighbourhood: Prospect Heights

latitude: 40.67506

longitude: -73.96423

room_type: Entire home/apt

price:

minimum_nights: 30

number_of_reviews: 4

last_review: 2023-07-28

reviews_per_month: 0.06

calculated_host_listings_count: 1

availability_365: 0

number_of_reviews_ltm: 0

license:

After processing the data, set up an Amazon Bedrock Knowledge Base to ingest the documents from S3 as a data source. If you stored the data in Amazon Aurora, Amazon Redshift, or Glue Data Catalog, you can set up a knowledge base to ingest from a structured data source.

»Set up a vector store

Amazon Bedrock Knowledge Bases allow you to add proprietary information into applications using RAG. To ingest a data source from S3, Bedrock Knowledge Bases requires the following:

- Sufficient IAM policies to connect to S3

- A supported vector store for embeddings.

This demo uses Amazon OpenSearch Serverless to provision an OpenSearch cluster for vector embeddings. The cluster requires additional IAM policies to access collections and indexes that store the vector embeddings.

Below are two security policies: one for the encryption of the collection with embeddings and a second for network access to the collection and dashboard. The security policy for network access to the collection and dashboard ensures that Terraform can properly create an index.

resource "aws_opensearchserverless_security_policy" "rentals_encryption" {

name = var.name

type = "encryption"

policy = jsonencode({

"Rules" = [

{

"Resource" = [

"collection/${var.name}"

],

"ResourceType" = "collection"

},

],

"AWSOwnedKey" = true

})

}

resource "aws_opensearchserverless_security_policy" "rentals_network" {

name = var.name

type = "network"

policy = jsonencode([{

"Rules" = [

{

"Resource" = [

"collection/${var.name}"

],

"ResourceType" = "collection"

},

{

"Resource" = [

"collection/${var.name}"

],

"ResourceType" = "dashboard"

},

],

"AllowFromPublic" = true,

}])

}

The configuration below generates an access policy to allow Amazon Bedrock and your current AWS credentials in Terraform to read and write to the index with the collection of the embeddings.

data "aws_caller_identity" "current" {}

resource "aws_opensearchserverless_access_policy" "rentals" {

name = var.name

type = "data"

description = "read and write permissions"

policy = jsonencode([

{

Rules = [

{

ResourceType = "index",

Resource = [

"index/${var.name}/*"

],

Permission = [

"aoss:*"

]

},

{

ResourceType = "collection",

Resource = [

"collection/${var.name}"

],

Permission = [

"aoss:*"

]

}

],

Principal = [

aws_iam_role.bedrock.arn,

data.aws_caller_identity.current.arn

]

}

])

}

After creating these policies, Terraform can now build the collection. For Amazon Bedrock Knowledge Bases, set the type to VECTORSEARCH.

resource "aws_opensearchserverless_collection" "rentals" {

name = var.name

type = "VECTORSEARCH"

depends_on = [

aws_opensearchserverless_security_policy.rentals_encryption,

aws_opensearchserverless_security_policy.rentals_network

]

}

Finally, you need to create an index for the vector embeddings collection in the OpenSearch cluster. Based on prerequisites for bringing your own vector store, configure an index using the OpenSearch Provider for Terraform. This example uses Amazon Titan 2.0 embeddings, which have 1024 dimensions in the vector.

provider "opensearch" {

url = aws_opensearchserverless_collection.rentals.collection_endpoint

healthcheck = false

}

locals {

vector_field = "bedrock-knowledge-base-default-vector"

metadata_field = "AMAZON_BEDROCK_METADATA"

text_field = "AMAZON_BEDROCK_TEXT_CHUNK"

}

resource "opensearch_index" "bedrock_knowledge_base" {

name = "bedrock-knowledge-base-default-index"

number_of_shards = "2"

number_of_replicas = "0"

index_knn = true

index_knn_algo_param_ef_search = "512"

mappings = <<-EOF

{

"properties": {

"${local.vector_field}": {

"type": "knn_vector",

"dimension": 1024,

"method": {

"name": "hnsw",

"engine": "faiss",

"parameters": {

"m": 16,

"ef_construction": 512

},

"space_type": "l2"

}

},

"${local.metadata_field}": {

"type": "text",

"index": "false"

},

"${local.text_field}": {

"type": "text",

"index": "true"

}

}

}

EOF

}

After configuring the vector store, you can create an Amazon Bedrock Knowledge Base for the S3 bucket with rental listings.

»Configure RAG in Amazon Bedrock

In order to augment the responses from an LLM with rental listing information, you need to create an Amazon Bedrock Knowledge Base to facilitate RAG. The knowledge base requires sufficient IAM access to the S3 bucket with rental listings and the OpenSearch collection. In addition to the data source and collection access, Amazon Bedrock’s IAM role also needs permissions to invoke the model used for embeddings.

resource "aws_iam_policy" "bedrock" {

name = "bedrock-${var.name}-s3"

path = "/"

description = "Allow Bedrock Knowledge Base to access S3 bucket with rentals"

policy = jsonencode({

Version = "2012-10-17"

Statement = [

{

Action = [

"s3:ListBucket",

],

Effect = "Allow",

Resource = aws_s3_bucket.rentals.arn,

Condition = {

StringEquals = {

"aws:ResourceAccount" = data.aws_caller_identity.current.account_id

}

}

},

{

Action = [

"s3:GetObject",

],

Effect = "Allow",

Resource = "${aws_s3_bucket.rentals.arn}/*",

Condition = {

StringEquals = {

"aws:ResourceAccount" = data.aws_caller_identity.current.account_id

}

}

},

{

Action = [

"aoss:APIAccessAll"

],

Effect = "Allow",

Resource = aws_opensearchserverless_collection.rentals.arn,

},

{

Action = [

"bedrock:InvokeModel"

],

Effect = "Allow",

Resource = data.aws_bedrock_foundation_model.embedding.model_arn,

}

]

})

}

resource "aws_iam_role" "bedrock" {

name_prefix = "bedrock-${var.name}-"

assume_role_policy = jsonencode({

Version = "2012-10-17"

Statement = [

{

Action = "sts:AssumeRole"

Effect = "Allow"

Principal = {

Service = "bedrock.amazonaws.com"

},

Condition = {

StringEquals = {

"aws:SourceAccount" = data.aws_caller_identity.current.account_id

},

}

},

]

})

}

resource "aws_iam_role_policy_attachment" "bedrock" {

role = aws_iam_role.bedrock.name

policy_arn = aws_iam_policy.bedrock.arn

}

Configure the Amazon Bedrock knowledge base in Terraform. It uses the IAM role with permissions to the S3 bucket, OpenSearch collection, and embedding model. It also defines the field mappings defined in the OpenSearch index for the vector embeddings.

resource "aws_bedrockagent_knowledge_base" "rentals" {

name = var.name

role_arn = aws_iam_role.bedrock.arn

knowledge_base_configuration {

vector_knowledge_base_configuration {

embedding_model_arn = data.aws_bedrock_foundation_model.embedding.model_arn

}

type = "VECTOR"

}

storage_configuration {

type = "OPENSEARCH_SERVERLESS"

opensearch_serverless_configuration {

collection_arn = aws_opensearchserverless_collection.rentals.arn

vector_index_name = opensearch_index.bedrock_knowledge_base.name

field_mapping {

vector_field = local.vector_field

text_field = local.text_field

metadata_field = local.metadata_field

}

}

}

}

Create a data source for the knowledge base linked to the Amazon S3 bucket with rental listings.

resource "aws_bedrockagent_data_source" "listings" {

knowledge_base_id = aws_bedrockagent_knowledge_base.rentals.id

name = "listings"

data_source_configuration {

type = "S3"

s3_configuration {

bucket_arn = aws_s3_bucket.rentals.arn

}

}

}

After deploying this in Terraform, you can test the knowledge base with some requests.

»Test the knowledge base

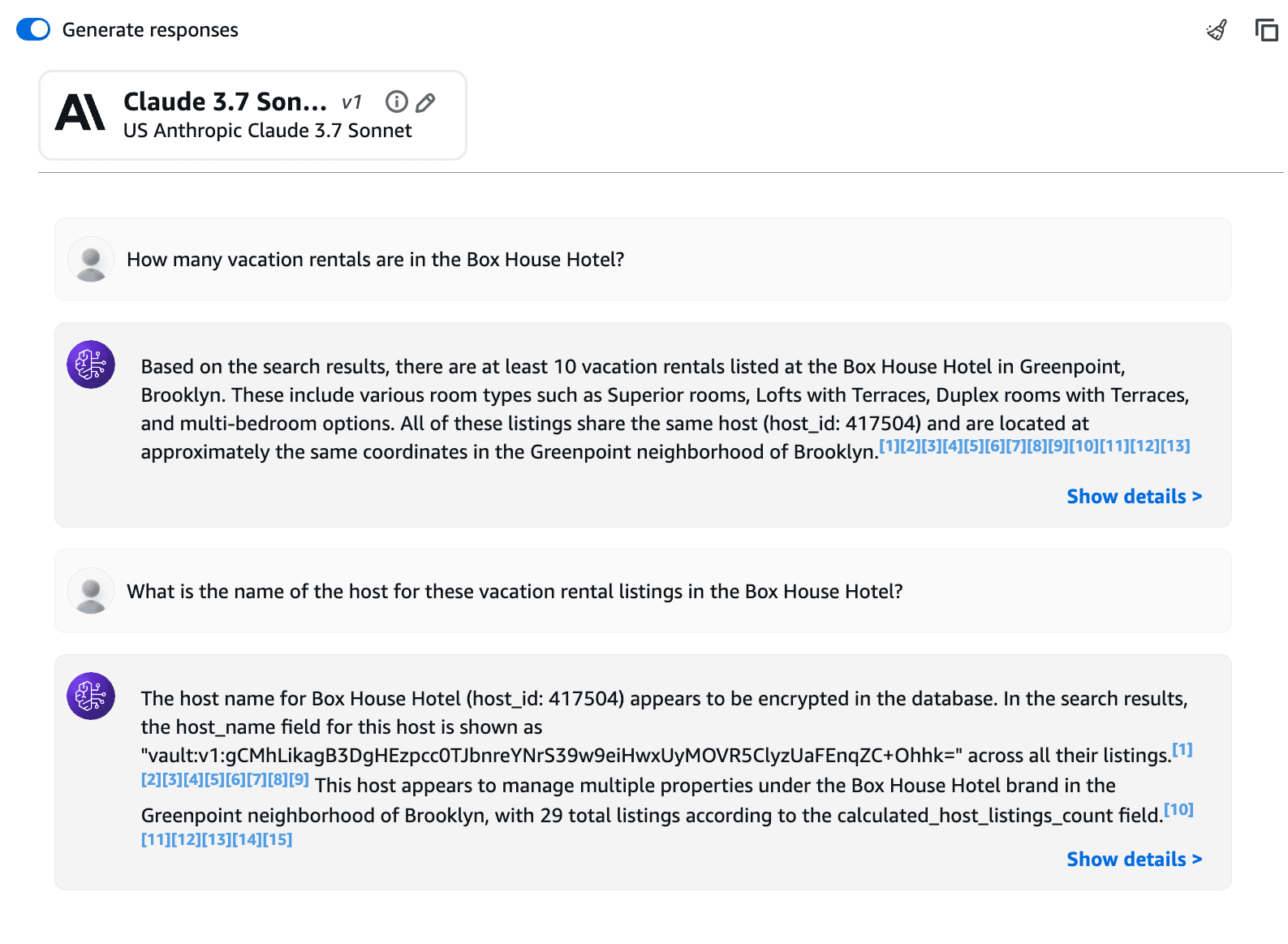

When you ask questions to the knowledge base with information about rental listings, you should get responses with additional detail based on the listings.

This example asks Anthropic Claude 3.7 Sonnet how many vacation rentals are in the Box House Hotel. It returned a response indicating that it searched the rental listings and found at least ten of them.

Input:

How many vacation rentals are in the Box House Hotel?

Output:

Based on the search results, there are at least 10 vacation rentals listed at

the Box House Hotel in Greenpoint, Brooklyn. These include various room types

such as Superior rooms, Lofts with Terraces, Duplex rooms with Terraces, and

multi-bedroom options. All of these listings share the same host

(host_id: 417504) and are located at approximately the same coordinates in the

Greenpoint neighborhood of Brooklyn.

Loading data into Amazon Bedrock improved the response by adding new information that the model did not have for training. Since you took additional steps to encrypt the names of the hosts and protect them as sensitive data, the model should not return information with the plaintext names of the hosts.

The example below asks an additional question about the name of the host who owns these vacation rental listings. After some searching, the model responds that the host name is encrypted and it found 29 other listings with the same ciphertext.

Input:

What is the name of the host for these vacation rental listings in the Box House Hotel?

Output:

The host name for Box House Hotel (host_id: 417504) appears to be encrypted in

the database. In the search results, the host_name field for this host is shown

as "vault:v1:gCMhLikagB3DgHEzpcc0TJbnreYNrS39w9eiHwxUyMOVR5ClyzUaFEnqZC+Ohhk="

across all their listings.

This host appears to manage multiple properties under the Box House Hotel brand in the Greenpoint neighborhood of Brooklyn, with 29 total listings according to the calculated_host_listings_count field.

Convergent encryption ensures the same names of hosts result in the same ciphertext, which allows the LLM to still analyze the data and identify that the same host offers 29 rental properties. If an LLM application needs to respond with the plaintext name of the host, it can use the Vault transit secrets engine to decrypt the ciphertext before returning the response.

»Learn more

By encrypting sensitive data before augmenting a LLM with RAG, you can protect access to the data and prevent leakage of sensitive information. In this demo, an LLM in testing and other applications by default do not need access to the plaintext name of the host for the vacation rental. They can still analyze and provide other information without leaking the name of the host for each vacation rental listing.

For applications that need access to the plaintext name of the host, they can implement additional code to decrypt the payload with Vault transit secrets engine. This ensures that only authorized applications have access to Vault’s decryption endpoint to reveal sensitive data. Vault offers additional advanced data protection techniques such as format-preserving encryption, masking, and data tokenization using the transform secrets engine. The transform secrets engine further masks or sanitizes sensitive information while allowing the LLM and its applications to process data.

To learn more about Amazon Bedrock Knowledge Bases, check out its documentation. The OWASP Top 10 Risks & Mitigations for LLMs and Gen AI Apps includes a list of risks and mitigations for LLMs and Gen AI Apps, including some recommendations to prevent sensitive data disclosure.