Network segmentation is a highly effective strategy to limit the impact of network intrusion. However, in modern environments such as a cluster scheduler, applications are started and restarted often without operator intervention. This dynamic provisioning results in constantly changing IP addresses and application ingress ports. Segmenting these dynamic environments using traditional firewalls and routing can be technically challenging.

In this post, we look at this complexity and at how a service mesh is a potential solution for securing network traffic in modern dynamic environments.

»Dynamic Environments

Before we continue, let's define what we mean by dynamic environments. In the simplest terms, a dynamic environment is one where applications and infrastructure are subject to frequent changes, either manually through regular deployments and infrastructure changes, or automatically through autoscaling or automated instance replacement. Operating a scheduler like HashiCorp Nomad or Kubernetes exhibits this behaviour, as does leveraging the automated redundancy of autoscaling groups provided by many cloud providers. The effect, however, is not limited to cloud environments. Any platform configured in a highly available mode, such as vSphere, can also be classified as a dynamic environment.

»Network Segmentation

We also need to clarify why network segmentation is valuable to network security. Traditionally, network segmentation was primarily implemented with a perimeter firewall. The problem with this approach is that the trusted zone is flat. It only requires a single intrusion to gain widespread access to a network, and the larger the network, the lower the chance of detecting the intruder.

With fine-grained network segmentation a network is partitioned into many smaller networks with the intention of reducing the blast radius should an intrusion occur. This approach involves developing and enforcing a ruleset to control the communications between specific hosts and services.

Each host and network should be segmented and segregated at the lowest level that can be practically managed. Routers or layer 3 switches divide a network into separate smaller networks using measures such as Virtual LANs (VLANs) or Access Control Lists (ACLs). Network firewalls are implemented to filter network traffic between segments, and host-based firewalls filter traffic from the local network, adding a further layer of security.

If you are operating in a cloud-based environment, network segmentation is achieved through the use of Virtual Private Clouds (VPCs) and Security Groups. While the switches are virtualized, the approach of configuring ingress rules and ACLs to segment networks is mostly the same as with physical infrastructure.

»Service Segmentation

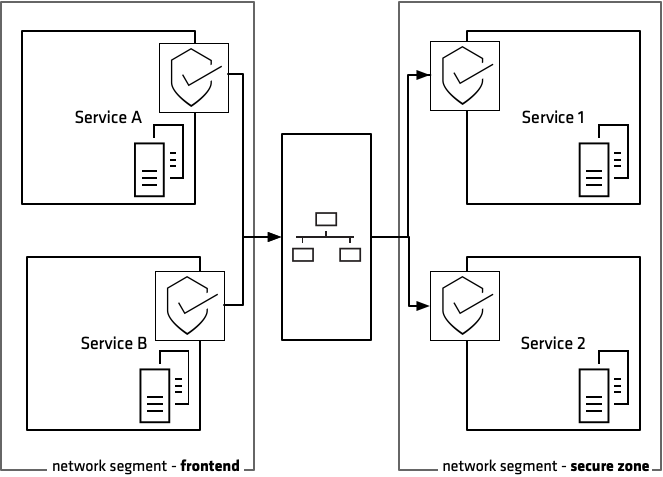

Where network segmentation is concerned with securing traffic between zones, service segmentation secures traffic between services in the same zone. Service segmentation is a more granular approach and is particularly relevant to multi-tenanted environments such as schedulers where multiple applications are running on a single node.

Implementing service segmentation depends on your operating environment and application infrastructure. Service segments are often applied through the configuration of software firewalls, software defined networks such as the overlay networks used by application schedulers, and more recently by leveraging a service mesh.

As with network segmentation, the principle of least privilege is applied: service to service communication is only permitted where there is an explicit intention to allow this traffic.

»Problems with Network Segmentation and Dynamic Environments

When we attempt to implement this approach in a dynamic environment there are a number of problems:

»Application deployment is disconnected from the network configuration

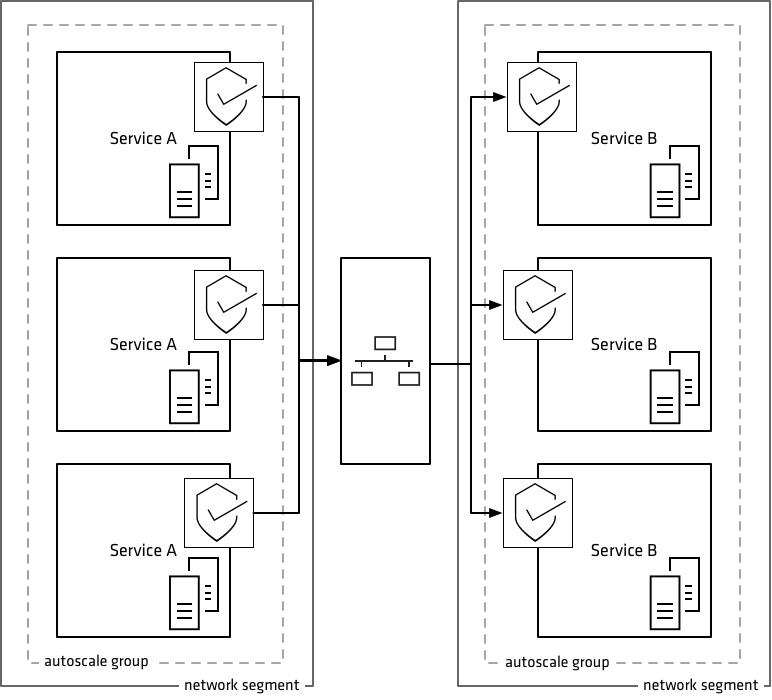

When applications are deployed using an autoscaling group, and a new instance is created, it is generally dynamically assigned an IP address from a pre-configured block. This particular application is rarely going to be running in isolation and needs to access services running inside the same network segment, and potentially another network segment. If we had taken a hardened approach to our network security, then there would be strict routing rules between the two segments which only allow traffic on a predefined list. In addition to this, we would have host level firewalls configured on the upstream service which again would only allow specific traffic.

In a static world, this was simpler to solve as the application is deployed to a known location. The routing and firewall rules could be updated at deploy time to enable the required access.

In a dynamic world, there is a disconnect. The application is deployed independently of the network security configuration, and the allocated IP address, and potentially even the ports, are dynamic. Typically there is a manual process for updating the network security rules, which can slow down deployments.

»Applications are scheduled in a modern scheduler

The goal of network segmentation is to logically partition the network to reduce the blast radius of a compromise. Taken to the limit, the network segment would only include a pair of services that need to communicate. This leads to the idea of service segmentation, where we manage access at the service layer. With service level segmentation we are concerned with interactions between services, regardless of where they are on the network. If you are running your application on a scheduler like Kubernetes or Nomad, leveraging an overlay network is a common approach to configuring service segmentation. Configuring an overlay can be simpler than managing multiple network routes and firewall rules, because the overlay is often automatically reconfigured when application locations change. However, we need to consider that a scheduler is often part of a larger infrastructure deployment.

In this case, we also need to consider how applications inside the scheduler communicate with other network services. For example, suppose you have a one hundred node cluster and one of your application instances needs to talk to a service in another network segment. The application running in the scheduler could be running on any of the one hundred nodes, which makes it challenging to determine which IP should be whitelisted. A scheduler often moves applications between nodes dynamically, so routing and firewall rules constantly need to be updated.

A modern environment is composed of VMs, containers, serverless functions, cloud data stores, and much more. This complex infrastructure poses a significant challenge to managing network security.

Configuring network level security for firewall and routing rules, and also scheduler level security for the overlay network, helps to increase the security of your system. However, we have to manage security configuration in two separate areas. Operationally, we also need two distinctly different approaches to implement the required configuration. These two requirements greatly increase the systemic complexity and the operational overhead of managing application security.

One of the unfortunate side effects of this complexity is that security is often relaxed. Network segmentation is reduced to routing rules for blocks of addresses rather than absolute addresses, and entire clusters are allowed routing rather than just the nodes that are running specific applications. From a service perspective, too much trust is placed in the local network segment. This is suboptimal and increases the application's attack surface. There needs to be a consistent and centralized way to manage and understand network and service segmentation.
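To get a feel for how much extra access a block rule grants, here is a minimal Python sketch. The subnet and node addresses are hypothetical, chosen only for illustration; it compares the number of addresses admitted by a single block rule with the number of nodes that actually run the application.

```python
import ipaddress

# Hypothetical values: a block rule covering the whole cluster subnet,
# and the three nodes that currently run the application.
cluster_block = ipaddress.ip_network("10.0.1.0/24")
app_nodes = [ipaddress.ip_address(a) for a in ("10.0.1.10", "10.0.1.11", "10.0.1.12")]

# Every application node is covered by the block rule...
covered = all(node in cluster_block for node in app_nodes)

# ...but so is every other address in the block.
excess = cluster_block.num_addresses - len(app_nodes)

print(covered)                      # True
print(cluster_block.num_addresses)  # 256
print(excess)                       # 253
```

In this example the block rule admits 253 addresses that have no need to reach the upstream service, and each of them is a potential path for an intruder.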

»Service Segmentation with Intention-based security

To reduce this complexity and strengthen our network security, we can remove the need to configure location-based security rules and move to an Intention-based model. Intention-based security builds rules on identity rather than location. For example, we can define an intention which states that the front-end service needs to communicate with the payment service.

Defining network segmentation through Intentions alleviates the complexity of traditional network segmentation and allows tighter control of network security rules. With Intentions, you describe security at an application level, not a network location level.

For example, consider an infrastructure where 'Service A' and 'Service B' run in two separate network segments.

To enable ‘Service A’ to ‘Service B’ communication, we need to create routing rules between the two segments. Firewall rules must be created to allow access from the ‘Service A’ instances.

This configuration requires a total of 9 rules:

- 9x firewall rules between Service A and Service B

Consider the impact when a ‘Service A’ instance is replaced, and remember this is not just due to failure but also continuous integration. These rules would again need to be updated: the details of the removed instance would need to be deleted and the details of the new instance added.
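The rule count and the churn caused by a replacement can be sketched in a few lines of Python. This is only an illustration under our own assumptions: the post does not state the instance counts, so we assume three instances of each service (which yields the 9 rules mentioned above), and the IP addresses and port are hypothetical.

```python
from itertools import product

def firewall_rules(sources, destinations, port):
    """Return one allow rule per (source IP, destination IP) pair."""
    return {(src, dst, port) for src, dst in product(sources, destinations)}

# Assumption: three instances of each service, with hypothetical addresses.
service_a = ["10.0.1.10", "10.0.1.11", "10.0.1.12"]
service_b = ["10.0.2.20", "10.0.2.21", "10.0.2.22"]

before = firewall_rules(service_a, service_b, 8080)
print(len(before))  # 9

# One Service A instance is replaced and receives a new address.
service_a_replaced = ["10.0.1.10", "10.0.1.11", "10.0.1.37"]
after = firewall_rules(service_a_replaced, service_b, 8080)

stale = before - after  # rules that must be deleted
new = after - before    # rules that must be added
print(len(stale), len(new))  # 3 3
```

Under these assumptions, replacing a single instance touches six rules, and the number grows with the instance count on either side.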

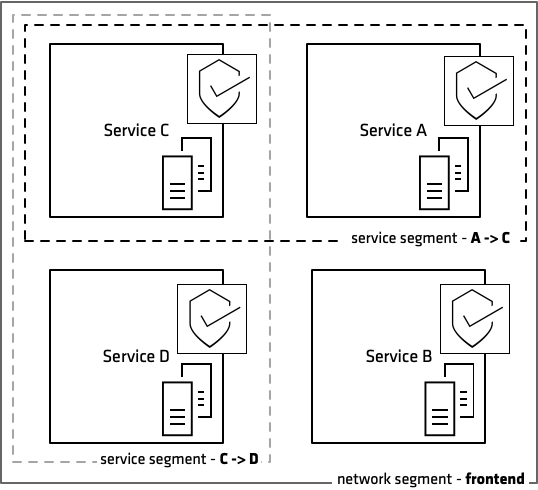

Now consider the approach where the routing is controlled through Intention-based security.

This configuration results in a single Intention:

- 'Service A' -> 'Service B'

Changing the scale or location of running instances does not change that simple rule.

Intention-based security can be implemented by using a service mesh. The service mesh consists of a central control plane, which allows you to configure network and service segmentation centrally through Intentions rather than network location, and a data plane, which provides routing and service discovery. Together these two components can almost completely replace the requirement for complex routing and firewall rules.

Using identity-based authorization, the data plane only allows communication between services defined by these Intentions, and because Intentions are managed centrally by the control plane, a single update re-configures the entire network.
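The following Python sketch illustrates the idea. It is a toy model rather than Consul's API: the `ControlPlane` and `Proxy` classes, the service names, and the addresses are all hypothetical, and real intentions support more than a simple allow set. It shows that authorization depends only on service identity, and that one central change affects every proxy.

```python
class ControlPlane:
    """Hypothetical central store of intentions, keyed by service identity."""

    def __init__(self):
        self._allowed = set()

    def allow(self, source, destination):
        self._allowed.add((source, destination))

    def deny(self, source, destination):
        self._allowed.discard((source, destination))

    def is_allowed(self, source, destination):
        # Default deny: traffic passes only if an intention exists.
        return (source, destination) in self._allowed


class Proxy:
    """Hypothetical data plane proxy in front of one service instance."""

    def __init__(self, service, address, control_plane):
        self.service = service
        self.address = address  # network location, never used for authorization
        self.control_plane = control_plane

    def accept(self, source_service):
        return self.control_plane.is_allowed(source_service, self.service)


control_plane = ControlPlane()
control_plane.allow("service-a", "service-b")  # the single intention

# Three Service B instances at different (hypothetical) addresses.
proxies = [Proxy("service-b", f"10.0.2.{n}", control_plane) for n in (20, 21, 22)]

print(all(p.accept("service-a") for p in proxies))  # True
print(any(p.accept("service-c") for p in proxies))  # False

# A single central update changes the behaviour of every proxy.
control_plane.deny("service-a", "service-b")
print(any(p.accept("service-a") for p in proxies))  # False
```

Adding, removing, or moving a `Proxy` in this model never requires touching the intention itself.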

»Benefits of Intentions over Traditional Network Segmentation

Service Intentions dramatically simplify the approach to securing service to service communication. We can also take a centralized approach whether our application is running in a virtual machine, on a scheduler, or is a cloud-managed data store.

Since all traffic in a service mesh is proxied, network routing rules are dramatically simplified. The ports through which the traffic flows can be restricted to a single port, or a very narrow range, and the host level firewall only needs to be configured to allow ingress on the known proxy port.

In a service mesh, connections are authenticated using mutual TLS, so even if a rogue application gained access to the network, without a valid identity and authentication it would not be able to communicate with the open proxy port running on the host.
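As a rough illustration of the mutual TLS step, the Python sketch below uses the standard `ssl` module to build a server-side context that rejects clients without a valid certificate, and reads a service identity from the verified peer certificate. This is our own simplified sketch and not how any particular mesh implements its proxies: the helper names are hypothetical, and encoding the identity as a URI subject alternative name with this layout is an assumption made for the example.

```python
import ssl

def require_client_certs(ca_file=None):
    """Build a server-side TLS context that requires a valid client certificate.

    A real proxy would also call load_cert_chain() with its own certificate
    and pass the mesh's CA bundle as ca_file.
    """
    context = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH, cafile=ca_file)
    context.verify_mode = ssl.CERT_REQUIRED  # handshake fails without a client cert
    return context

def service_identity(peer_cert):
    """Return the first URI subjectAltName from a dict shaped like
    the one returned by SSLSocket.getpeercert(), or None if absent."""
    for kind, value in peer_cert.get("subjectAltName", ()):
        if kind == "URI":
            return value
    return None

context = require_client_certs()
print(context.verify_mode == ssl.CERT_REQUIRED)  # True

# Hypothetical certificate contents for a caller identified as service-a.
cert = {"subjectAltName": (("URI", "spiffe://example.internal/svc/service-a"),)}
print(service_identity(cert))  # spiffe://example.internal/svc/service-a
```

The identity recovered this way, rather than the caller's IP address, is what gets checked against the Intentions.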

Finally, defining service to service security with a single centralized rule is simpler to conceptualize and far more straightforward to implement.

We believe that service segmentation is an important step in securing the datacenter, particularly in modern cloud-based environments. We recently introduced “Consul Connect”, which adds segmentation capability to Consul to make it a full service mesh. You can read more about that announcement at https://www.hashicorp.com/blog/consul-1-2-service-mesh, and learn more about Consul at https://www.consul.io/.