How to Build Network Infrastructure Automation (NIA) with HashiCorp Terraform, Consul, and A10 ADC

Get a walkthrough of the code and workflow for setting up NIA with Consul-Terraform-Sync (CTS) and A10 ADC.

This guest post was written by Taka Mitsuhata, Senior Manager of Technical Marketing at A10 Networks, a provider of secure application solutions for on-premises, multi-cloud, and edge-cloud environments.

As cloud adoption continues to grow, many organizations are moving toward hybrid cloud environments, gradually transferring some of their application services to public cloud platforms while keeping their existing on-premises datacenters. Hybrid cloud environments increase complexity when designing and deploying application services globally, and they complicate the operations workflow by requiring collaboration among different groups (application, server, networking, and security teams) and across different platforms (cloud providers and datacenters). This complexity motivates organizations to adopt DevOps approaches and tools that simplify and streamline the process, enabling self-service models and automating operational tasks.

A new outlook on network automation involves the creation of automation flows between your service networking control plane, your infrastructure provisioning platform, and network devices. This article demonstrates step-by-step how you can automate the configuration update process for an application delivery controller (ADC) using open source DevOps tools.

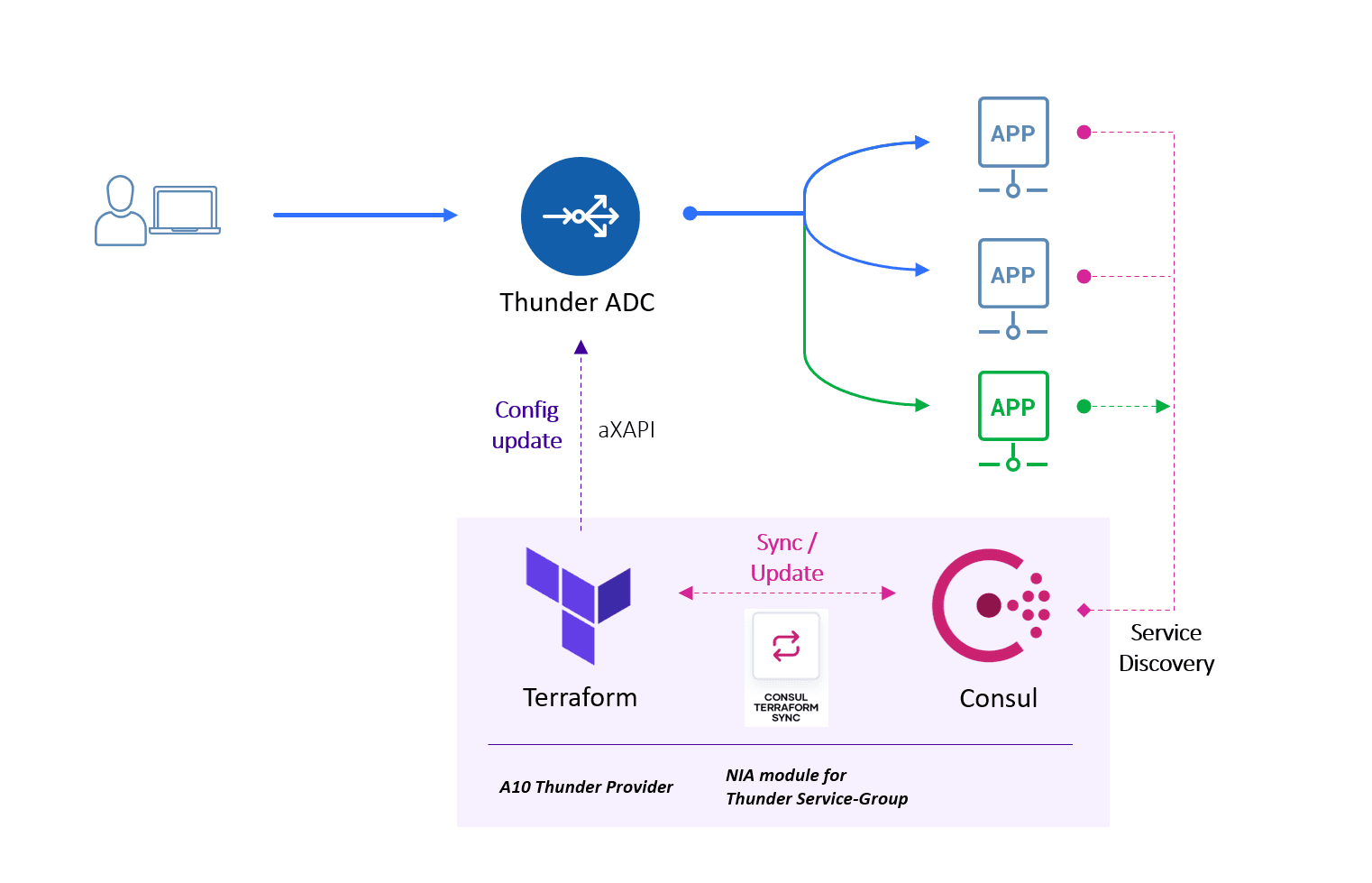

HashiCorp and A10 Networks recently collaborated on a strategy like this called “Network Infrastructure Automation (NIA).” NIA consists of four components: HashiCorp Terraform for infrastructure as code, HashiCorp Consul for service networking and service mesh, Consul-Terraform-Sync (CTS) for automation between those two products, and integrations provided by network devices such as A10 Thunder ADC. In this article, we’re going to show you how this workflow looks so that you can see if it makes sense as a new pattern to simplify and accelerate your own networking infrastructure management.

»The Ingredients of NIA

Before we show you NIA in action, let’s first take a brief look at the components.

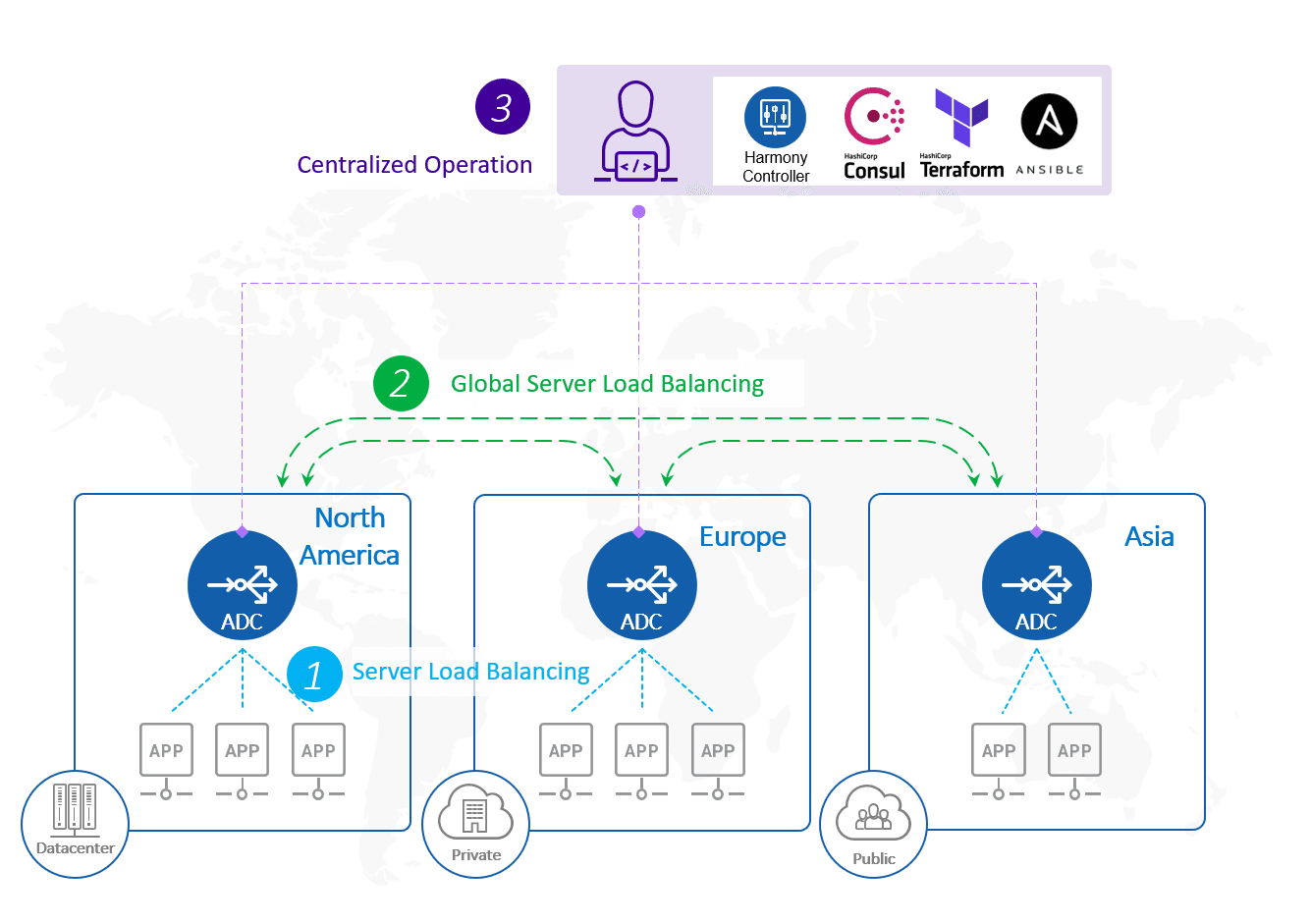

This is a basic architecture of an NIA setup.

»Network Device - A10 Thunder Application Delivery Controller (ADC)

First, there are network devices that need to be automated for provisioning workflows. In this article, we’ll be using A10 Thunder ADC as an example, but this workflow can also work with other vendors that have built NIA integrations.

One of an ADC's main functions is to act as a reverse proxy for application servers. This increases service resiliency through server load balancing, application acceleration, and security features, and it enables agile traffic control, including support for CI/CD operations. Furthermore, global server load balancing (GSLB), which comes with A10 ADC by default, can intelligently distribute traffic across available global sites based on region and location, content and language localization policy, or each site's load and health condition, regardless of cloud or platform type. In that role, the ADC can work as a cloud selector and make effective use of the resources in hybrid and multi-cloud environments.

»Terraform

Terraform is widely adopted and a de facto standard for creating and managing cross-cloud infrastructure. The A10 Terraform provider supports provisioning and configuration updates for A10 Thunder ADC in any form factor and on any underlying cloud infrastructure (GitHub repository).

Below is an example Terraform configuration that shows the minimal server load balancing (SLB) configuration required for A10 ADC and NIA integration. It defines a service group named web80 and a virtual server named vip-web80. The application server resources are automatically added or deleted via an NIA workflow process that I’ll explain in a moment.

provider "thunder" {}

resource "thunder_virtual_server" "vip-web" {
  name       = "vip-web80"
  ip_address = "10.64.4.111"

  port_list {
    auto          = 1
    port_number   = 80
    protocol      = "http"
    service_group = thunder_service_group.sg1.name
    snat_on_vip   = 1
  }
}

resource "thunder_service_group" "sg1" {
  name     = "web80"
  protocol = "TCP"
}

Note: It is assumed that the system, interface, and routing settings are already configured on the Thunder ADC during the installation process.

»Consul

In NIA, application servers and their services’ statuses are monitored via Consul. Consul builds a service catalog by communicating with each server running as a Consul agent.

$ consul members
Node                 Address             Status  Type    Build  Protocol  DC   Segment
devops1.a10tme-demo  192.168.0.201:8301  alive   server  1.8.4  2         dc1  <all>
s1.a10tme-demo       192.168.0.10:8301   alive   client  1.8.4  2         dc1  <default>
s2.a10tme-demo       192.168.0.11:8301   alive   client  1.8.4  2         dc1  <default>
s3.a10tme-demo       192.168.0.12:8301   alive   client  1.8.4  2         dc1  <default>

Once Consul membership is formed with agents (e.g. web servers), it is time to prepare Consul-Terraform-Sync (CTS).

»Consul-Terraform-Sync

CTS is a tool that uses Consul’s service catalog as a source of truth for all applications running in a given environment. When CTS detects changes to these applications (e.g., a service node is added to or removed from the service list), it dynamically applies the necessary changes to your network infrastructure using Terraform.

Example NIA workflow with Terraform, Consul, CTS, and Thunder ADC.
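The idea of converging a network device on the service catalog can be illustrated with a small reconciliation sketch. This is a conceptual illustration only, not how CTS is implemented: CTS passes the catalog data to Terraform, which computes the actual plan. The function name `plan_member_changes` and the stale member 192.168.0.13 are hypothetical.

```python
def plan_member_changes(desired, current):
    """Return (to_add, to_remove) so that `current` converges on `desired`.

    Both arguments are iterables of (address, port) pairs.
    """
    desired_set, current_set = set(desired), set(current)
    to_add = sorted(desired_set - current_set)
    to_remove = sorted(current_set - desired_set)
    return to_add, to_remove


# Instances listed in the service catalog (the source of truth).
catalog = [("192.168.0.10", 80), ("192.168.0.11", 80), ("192.168.0.12", 80)]

# Members currently configured on the load balancer (hypothetical state).
adc_members = [("192.168.0.10", 80), ("192.168.0.13", 80)]

to_add, to_remove = plan_member_changes(catalog, adc_members)
print("add:", to_add)
print("remove:", to_remove)
```

Treating the catalog as the desired state and the device as the current state is what makes the workflow declarative: nobody has to describe the individual add or delete steps.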

You will define a set of tasks for CTS to execute whenever a service is registered or removed on Consul. The CTS configuration (in HCL format) below contains several blocks:

$ cat tasks.hcl
log_level = "info"

driver "terraform" {
  log = true
  required_providers {
    thunder = {
      source  = "a10networks/thunder"
      version = "0.4.14"
    }
  }
}

terraform_provider "thunder" {
  address  = "10.64.4.104"
  username = "{{ env \"THUNDER_USER\" }}"
  password = "{{ env \"THUNDER_PASSWORD\" }}"
  alias    = "adc-1"
}

consul {
  address = "192.168.0.201:8500"
}

task {
  name           = "slb_auto_config"
  description    = "Automate SLB Config on A10 Thunder"
  source         = "a10networks/service-group-sync-nia/thunder"
  providers      = ["thunder.adc-1"]
  services       = ["web80"]
  variable_files = []
}

The driver block defines all Terraform providers required to execute the task. In this case, source = "a10networks/thunder" is listed. The terraform_provider block specifies the options and variables used to interface with network infrastructure such as an ADC. The example above includes the IP address of an A10 Thunder ADC, an alias, and login credentials.

Note: For security’s sake, you may want to keep login credentials out of the config file and load them dynamically from the shell environment, Consul KV, or HashiCorp Vault.

The task block identifies a task to run as automation for the selected services. The task named slb_auto_config includes, under services, a list of logical service names that should match the service name(s) registered in the Consul catalog. providers lists the network infrastructure (e.g., Thunder ADC) with aliases (if applicable). source specifies a path to the Thunder Terraform module defined for CTS, which allows CTS to dynamically manage the ADC configuration (e.g., SLB servers and SLB service groups) for the services monitored in the Consul catalog.
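Because the example config reads the credentials from THUNDER_USER and THUNDER_PASSWORD, a quick pre-flight check before launching CTS can catch an unset variable early. The following is a minimal sketch, not part of CTS; the helper name `missing_credentials` is hypothetical.

```python
import os

# Environment variables referenced by the terraform_provider block above.
REQUIRED_VARS = ("THUNDER_USER", "THUNDER_PASSWORD")


def missing_credentials(environ=None):
    """Return the names of required variables that are unset or empty."""
    environ = os.environ if environ is None else environ
    return [name for name in REQUIRED_VARS if not environ.get(name)]


missing = missing_credentials()
if missing:
    print("Set these variables before starting CTS:", ", ".join(missing))
else:
    print("Thunder credentials found in the environment.")
```

Passing a plain dictionary instead of the real environment makes the check easy to exercise in isolation.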

Note: For more details about the configuration, refer to the Consul NIA Configuration. For more details on the Terraform NIA module for A10 Thunder, refer to the Terraform Registry or GitHub.

Once CTS is started by running $ consul-terraform-sync -config-file=tasks.hcl, it will download and install Terraform providers and modules according to your HCL config file, then create Terraform files for the tasks defined and connect to Consul.

Here’s what that looks like:

$ consul-terraform-sync -config-file=tasks.hcl
2021/04/10 06:40:12.364475 [INFO] v0.1.0 (354ce7a)
2021/04/10 06:40:12.365844 [INFO] (driver.terraform) installing terraform to path '/root/nia'
2021/04/10 06:40:16.009060 [INFO] (driver.terraform) successfully installed terraform
2021/04/10 06:40:16.012513 [INFO] (templates.hcltmpl) evaluating dynamic configuration for "thunder"
:
2021/04/10 06:40:17.258628 [INFO] running Terraform command: /root/nia/terraform init -no-color -force-copy -input=false -lock-timeout=0s -backend=true -get=true -upgrade=false -lock=true -get-plugins=true -verify-plugins=true
Initializing modules...
Downloading a10networks/service-group-sync-nia/thunder 0.1.6 for slb_auto_config...
- slb_auto_config in .terraform/modules/slb_auto_config
Initializing the backend...
Successfully configured the backend "consul"! Terraform will automatically
use this backend unless the backend configuration changes.
:
Terraform has been successfully initialized!
2021/04/10 06:40:20.158072 [INFO] running Terraform command: /root/nia/terraform workspace new -no-color slb_auto_config
Workspace "slb_auto_config" already exists
2021/04/10 06:40:20.512676 [INFO] running Terraform command: /root/nia/terraform workspace select -no-color slb_auto_config
Switched to workspace "slb_auto_config".
2021/04/10 06:40:20.853882 [INFO] running Terraform command: /root/nia/terraform apply -no-color -auto-approve -input=false -var-file=terraform.tfvars -var-file=providers.tfvars -lock=true -parallelism=10 -refresh=true
module.slb_auto_config.thunder_service_group.service-group["web80"]: Refreshing state... [id=web80]
Apply complete! Resources: 0 added, 0 changed, 0 destroyed.
2021/04/10 06:40:22.636456 [INFO] (ctrl) task completed slb_auto_config
2021/04/10 06:40:22.637694 [INFO] (ctrl) all tasks completed once
2021/04/10 06:40:22.637722 [INFO] (cli) running controller in daemon mode
2021/04/10 06:40:22.638563 [INFO] (api) starting server at '8558'

»See NIA in Action

Let’s get started by registering services with the Consul service catalog. On each server, you will define service details (IP, port, etc.) and health check information as shown in the Consul config file below. Please note that the service name should match the service-group name defined on the A10 ADC.

$ cat /etc/consul.d/web.json
{
  "service": {
    "name": "web80",
    "id": "web80-s1",
    "tags": ["web-p80"],
    "port": 80,
    "address": "192.168.0.10",
    "check": {
      "http": "http://localhost:80/",
      "interval": "15s"
    }
  }
}
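Since each server needs its own copy of this file, the definitions can be generated rather than edited by hand. The sketch below assumes the three web servers from the `consul members` output and assumes that the `web80-<node>` ID pattern shown for s1 extends to the other nodes; the helper name `service_definition` is hypothetical.

```python
import json


def service_definition(node, address, name="web80", port=80, tags=("web-p80",)):
    """Build a Consul service definition matching the web.json layout above."""
    return {
        "service": {
            "name": name,  # must match the service-group name on the ADC
            "id": f"{name}-{node}",
            "tags": list(tags),
            "port": port,
            "address": address,
            "check": {
                "http": f"http://localhost:{port}/",
                "interval": "15s",
            },
        }
    }


# Node short names and addresses taken from the `consul members` output.
servers = {"s1": "192.168.0.10", "s2": "192.168.0.11", "s3": "192.168.0.12"}

for node, address in servers.items():
    print(f"# web.json for {node}")
    print(json.dumps(service_definition(node, address), indent=2))
```

Only the ID and address differ between servers, so generating the files keeps the shared service name consistent with the service group on the ADC.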

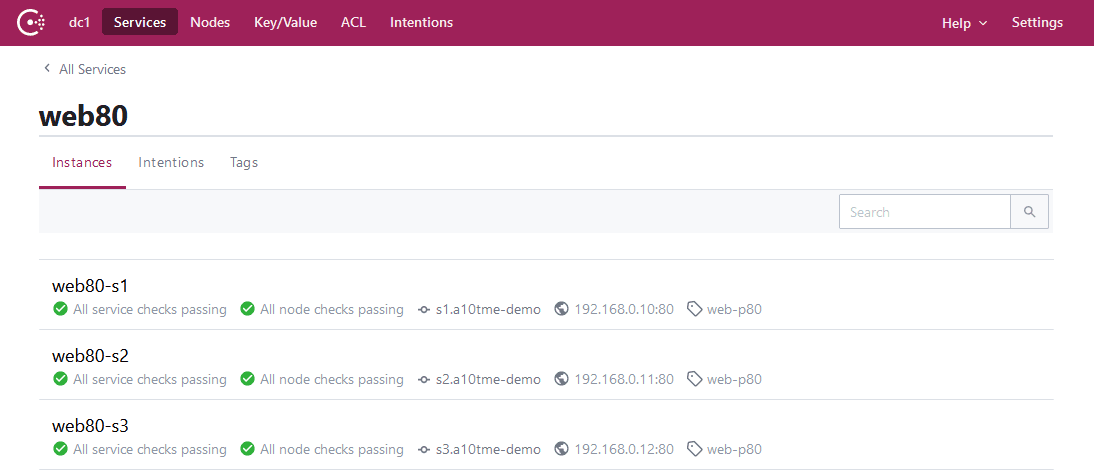

Once you place the config file onto the Consul home directory (e.g. /etc/consul.d/) and run the $ consul reload command, the Consul catalog will show the application servers available:
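The same information can be read programmatically from Consul's HTTP health endpoint. The following is a rough sketch rather than part of the original setup: it assumes the Consul HTTP API is reachable at the server address used in tasks.hcl, and the helper names `parse_backends` and `fetch_passing` are hypothetical.

```python
import json
import urllib.request

# Consul server address from the CTS config above; adjust for your environment.
CONSUL_ADDR = "http://192.168.0.201:8500"


def parse_backends(health_entries):
    """Turn /v1/health/service/<name> entries into sorted (address, port) pairs."""
    backends = []
    for entry in health_entries:
        service = entry["Service"]
        # Service.Address may be empty, in which case the node address applies.
        address = service.get("Address") or entry["Node"]["Address"]
        backends.append((address, service["Port"]))
    return sorted(backends)


def fetch_passing(service_name, consul_addr=CONSUL_ADDR):
    """Fetch the instances of a service whose health checks are passing."""
    url = f"{consul_addr}/v1/health/service/{service_name}?passing"
    with urllib.request.urlopen(url, timeout=5) as resp:
        return parse_backends(json.load(resp))
```

In the environment described here, calling `fetch_passing("web80")` should list the registered web servers once their health checks pass.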

On CTS, the change is picked up and the task slb_auto_config is executed automatically. In this process, CTS uses Terraform to create new SLB server entries on the Thunder ADC and add them to the service group named web80.

:
2021/04/19 23:26:47.770352 [INFO] (ctrl) executing task slb_auto_config
2021/04/19 23:26:47.770494 [INFO] running Terraform command: /root/nia/terraform apply -no-color -auto-approve -input=false -var-file=terraform.tfvars -var-file=providers.tfvars -lock=true -parallelism=10 -refresh=true
Apply complete! Resources: 0 added, 0 changed, 0 destroyed.
2021/04/19 23:26:49.450869 [INFO] (ctrl) task completed slb_auto_config
2021/04/19 23:26:59.549362 [INFO] (ctrl) executing task slb_auto_config
2021/04/19 23:26:59.549466 [INFO] running Terraform command: /root/nia/terraform apply -no-color -auto-approve -input=false -var-file=terraform.tfvars -var-file=providers.tfvars -lock=true -parallelism=10 -refresh=true
module.slb_auto_config.thunder_service_group.service-group["web80"]: Creating...
module.slb_auto_config.thunder_service_group.service-group["web80"]: Creation complete after 0s [id=web80]
Apply complete! Resources: 1 added, 0 changed, 0 destroyed.
2021/04/19 23:27:01.380723 [INFO] (ctrl) task completed slb_auto_config

On vThunder ADC, all three servers were successfully added by CTS, and the service (VIP with service port 80) is now up and running.

vThunder#show slb virtual-server bind
Total Number of Virtual Services configured: 1
---------------------------------------------------------------------------------
*Virtual Server :vip-web80 10.64.4.111 All Up
  +port 80 http ====>web80 State :All Up
    +192.168.0.10:80 192.168.0.10 State :Up
    +192.168.0.11:80 192.168.0.11 State :Up
    +192.168.0.12:80 192.168.0.12 State :Up

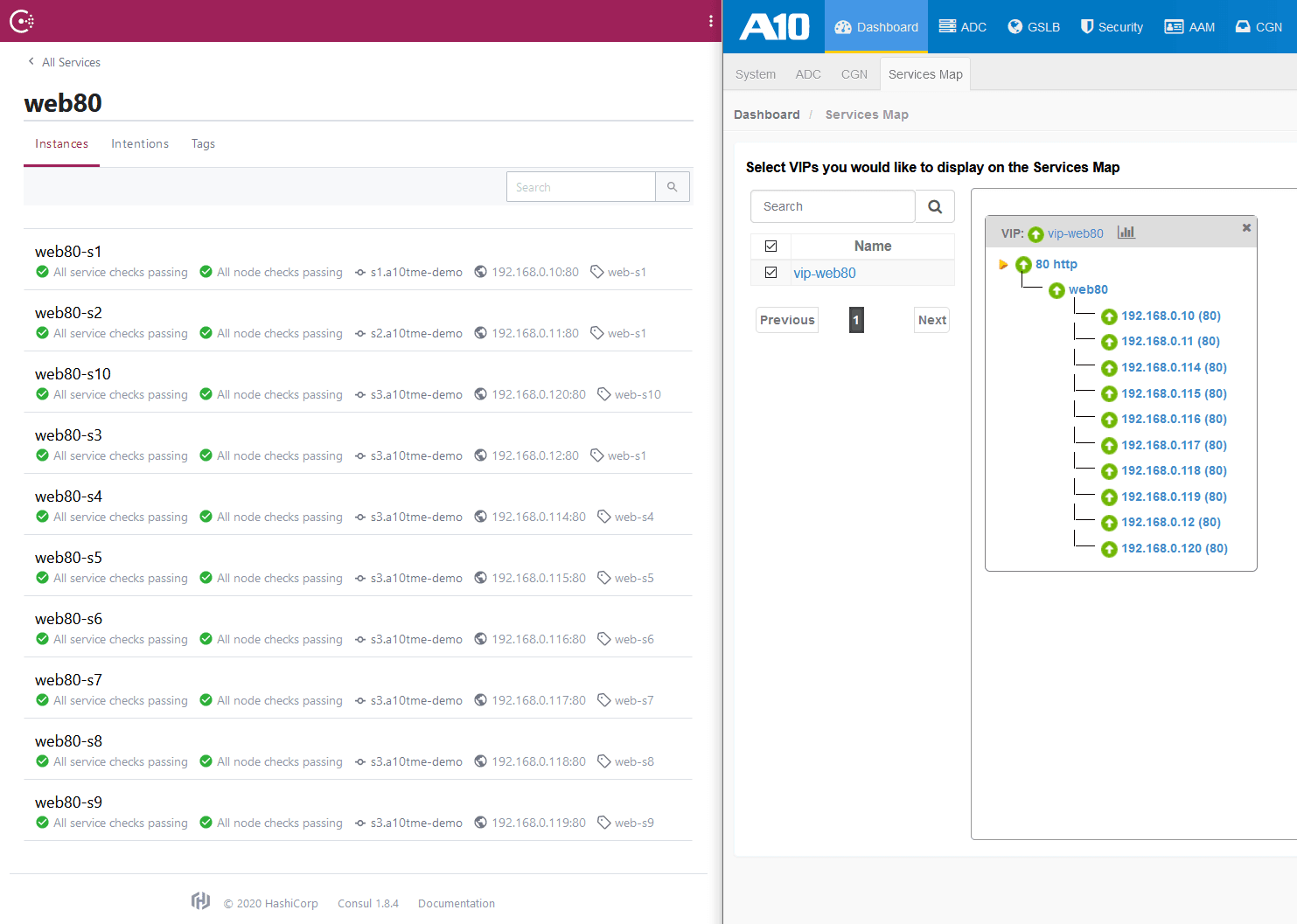

Even if many more servers are added to the service as shown below, Thunder ADC’s SLB configurations are automatically updated in near-real time by CTS.

»Next Steps

The NIA solution using CTS is a powerful network automation enabler. It keeps A10 Thunder ADC in sync whenever service nodes are added, deleted, or moved, or when a server fails unexpectedly. Furthermore, you can extend this NIA solution to support CI/CD operations, including blue-green deployment, by leveraging Terraform and service tags on Consul.
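The role service tags could play in a blue-green rollout can be pictured with a short selection sketch. This only illustrates the selection logic and is not a CTS feature walkthrough: the "blue" and "green" tags, the sample entries, and the helper name `backends_with_tag` are all hypothetical.

```python
def backends_with_tag(health_entries, tag):
    """Return sorted (address, port) pairs for instances carrying `tag`."""
    selected = []
    for entry in health_entries:
        service = entry["Service"]
        # Consul may return null for Tags when none are set.
        if tag in (service.get("Tags") or []):
            selected.append((service["Address"], service["Port"]))
    return sorted(selected)


# Hypothetical sample data shaped like /v1/health/service/web80 entries.
sample_entries = [
    {"Service": {"Address": "192.168.0.10", "Port": 80, "Tags": ["web-p80", "blue"]}},
    {"Service": {"Address": "192.168.0.11", "Port": 80, "Tags": ["web-p80", "blue"]}},
    {"Service": {"Address": "192.168.0.12", "Port": 80, "Tags": ["web-p80", "green"]}},
]

active_color = "green"  # switch back to "blue" to roll back
print(backends_with_tag(sample_entries, active_color))
```

Switching the active tag changes which set of instances is eligible for the service group without touching the instances themselves.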

The strength of this NIA solution is that it can be implemented on any type of platform, because Consul is a cloud-agnostic DevOps tool and A10 Thunder can run on various clouds, hypervisors, and containers.

For more resources on how to try NIA out yourself, see this list:

- Consul documentation

- Try A10 Thunder free for 30 days

- Get the Terraform NIA module for A10 Thunder

- Webinar: Automating Network Infrastructure Tasks with A10 and HashiCorp
