HCP Vault now supports shipping metrics and audit logs to the Datadog, Grafana Cloud, and Splunk SaaS data platforms. To add this feature, we considered building our own integration system from scratch, and we also evaluated third-party systems we could build on instead. We eventually chose Datadog’s vendor-agnostic Vector data pipeline. Here is how we approached this data-shipping challenge and why we settled on Vector for the system we have now.

»Starting with the Problem

While HashiCorp manages the infrastructure of HCP Vault, customers need security, business, and capacity-planning insights into Vault usage that are tailored to their use cases. Many customers already run industry-standard observability platforms and prefer to analyze HCP Vault audit logs and metrics with those existing tools. We therefore needed a way to ship Vault metrics and audit logs to the customer's observability platform of choice.

»Design Requirements

Every major SaaS offering for security information and event management (SIEM) uses a different format to ingest data. Since data ingestion varies from one platform to another, it was important to remove that complexity for the user, unifying workflows where we could. Whether we built a new piece of technology from scratch or picked up an existing one, the design requirements included:

- A unified solution to handle both metrics and logs export. This was essential to keep the design simple.

- A pluggable/extensible ecosystem. The design should be flexible enough to accommodate more SaaS integrations down the line without changing the underlying setup.

- Tolerance of and robustness to network partitions. As with most distributed systems, we needed to ensure that no data was missed or dropped during an outage in which the external observability platform was unreachable.

- A translation layer to add or drop data as needed. This would let us tag events with additional data or remove data before export.

- A light compute footprint. As many resources as possible should remain available to the customer.

- Handling of audit log rotation. Since Vault audit logs are files on the compute instances, we needed to handle file rotation cases.

»Discovering Vector

While evaluating many technologies, our team stumbled upon Datadog’s Vector:

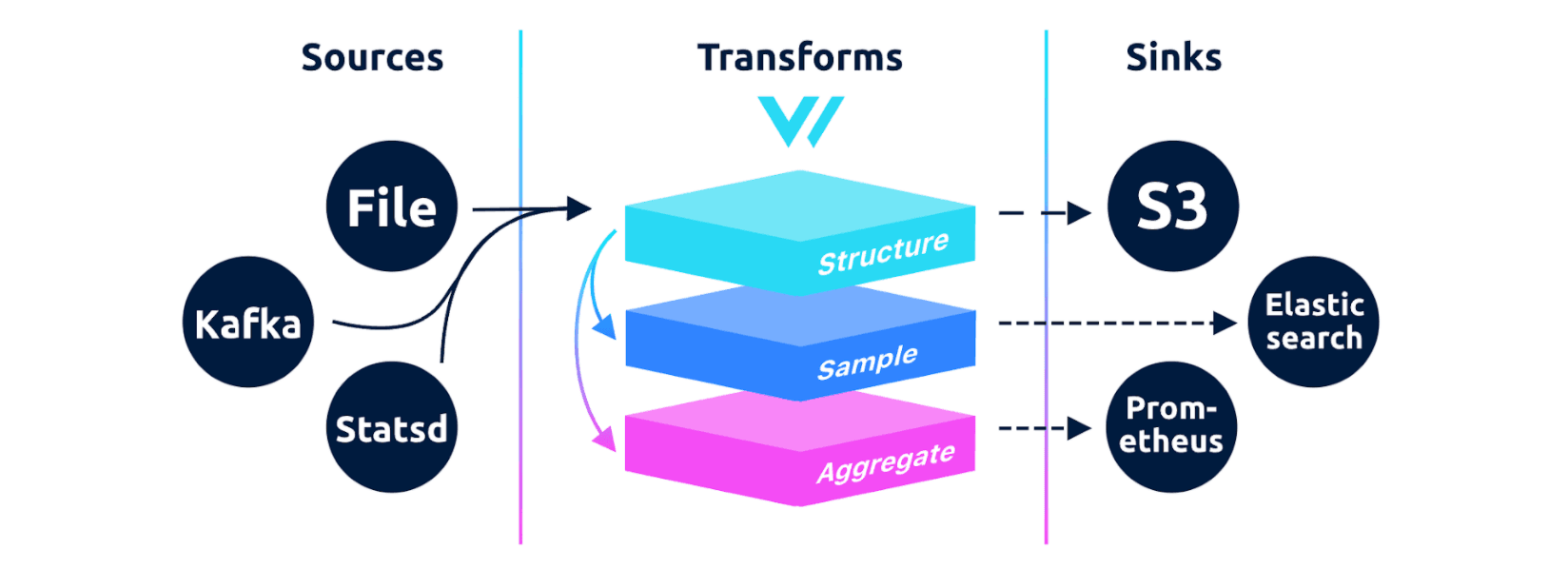

Vector is a vendor-agnostic data platform that enables users to collect, enrich, and transform logs, metrics, and traces from on-premises and cloud environments, and to route them to the destinations of their choice.

It looked interesting to us from the outset. HashiCorp has a phenomenal partnership with Datadog, so we started asking them about Vector’s capabilities. Much to our surprise, Vector was trying to offer a unified solution to a problem similar to ours.

Vector’s vendor-agnostic design made it a great contender for what we were hoping to build, but we also wanted to see how flexible the data transformation layer offerings were, since the ability to add and drop data at will was a key requirement. We found out that Vector’s VRL (Vector Remap Language) would give us that flexibility.

VRL is an expression-oriented language designed to transform data in a safe and performant manner. The design of Vector is simple: define a source to collect data, transform the data with VRL, and route it to your desired sinks (destination data platforms), all with one tool.
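To make the source, transform, and sink model concrete, here is a minimal Python sketch of the same three stages. It is only an illustration of the idea, not how Vector or VRL is implemented. The function names and the `cluster_id` and `debug` fields are hypothetical and do not come from the Vault audit log schema.

```python
import json
from typing import Dict, Iterable, Iterator, List

Event = Dict[str, object]


def source(lines: Iterable[str]) -> Iterator[Event]:
    """Source stage: parse raw JSON log lines into structured events."""
    for line in lines:
        yield json.loads(line)


def transform(events: Iterable[Event], cluster_id: str) -> Iterator[Event]:
    """Transform stage: add a tag and drop a field, as a remap step might."""
    for event in events:
        event = dict(event)               # copy so the input is not mutated
        event["cluster_id"] = cluster_id  # add data (hypothetical tag)
        event.pop("debug", None)          # drop data (hypothetical field)
        yield event


def sink(events: Iterable[Event]) -> List[str]:
    """Sink stage: serialize events for delivery to a destination."""
    return [json.dumps(event, sort_keys=True) for event in events]


def run_pipeline(lines: Iterable[str], cluster_id: str) -> List[str]:
    """Chain the three stages: source -> transform -> sink."""
    return sink(transform(source(lines), cluster_id))
```

In Vector itself, the transform stage would be written in VRL rather than Python, and each stage would be declared in configuration instead of called as a function.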

We found VRL to be flexible, easy to understand, and extremely powerful. Vector also covered various network-partition edge cases that we wanted to address as part of our design. During our evaluation, its resource footprint was minimal (less than 2% CPU utilization for our use case). Vector was easy to integrate into our CI/CD testing as well.

We worked with the Datadog team to ship new features (like cgroup metric support, among others) that we thought would be valuable additions to the product and community. We are really grateful to have such great support from the Datadog team.

»How We’re Using Vector Today — and Looking Ahead

After testing Vector and confirming that it fulfilled all of our requirements, we integrated it into HCP Vault to export metrics and logs to external data platforms such as Datadog, Grafana Cloud, and Splunk. It was easy to create a common framework that generates Vector configuration based on the requested sink (SIEM platform) and rolls it out to the underlying infrastructure in an immutable way, so that Vector can start exporting. The whole mindset of building distributed workflows in Vector (capturing data sources, applying transformations, and forwarding to sinks) simplifies the overall design and leaves room for future enhancements, such as supporting multiple data platforms at once and integrating new ones.
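As a rough illustration of generating Vector configuration from a requested sink, the sketch below builds a configuration as a Python dictionary that can be serialized to JSON, one of the formats Vector accepts. This is not HCP Vault's actual framework. The function names, component IDs, credential keys, log path, and VRL snippet are all hypothetical. The sink types and option names follow Vector's documented `datadog_logs`, `splunk_hec_logs`, and `loki` sinks as best we understand them and should be checked against the Vector version in use. Only logs are covered here; metrics would need their own sources and sinks.

```python
import json
from typing import Any, Dict


def build_sink(platform: str, creds: Dict[str, str]) -> Dict[str, Any]:
    """Return platform-specific sink settings (option names are assumptions)."""
    if platform == "datadog":
        return {"type": "datadog_logs", "default_api_key": creds["api_key"]}
    if platform == "splunk":
        return {
            "type": "splunk_hec_logs",
            "endpoint": creds["endpoint"],
            "default_token": creds["token"],
            "encoding": {"codec": "json"},
        }
    if platform == "grafana":
        return {
            "type": "loki",
            "endpoint": creds["endpoint"],
            "auth": {
                "strategy": "basic",
                "user": creds["user"],
                "password": creds["password"],
            },
            "labels": {"source": "hcp_vault"},
            "encoding": {"codec": "json"},
        }
    raise ValueError(f"unsupported platform: {platform}")


def build_config(platform: str, creds: Dict[str, str], log_glob: str) -> Dict[str, Any]:
    """Assemble a source -> remap transform -> sink pipeline for one platform."""
    sink = build_sink(platform, creds)
    sink["inputs"] = ["tag_events"]
    # A disk buffer lets events queue locally while the platform is unreachable.
    # The size is illustrative only.
    sink["buffer"] = {"type": "disk", "max_size": 268435488, "when_full": "block"}
    return {
        "sources": {"audit_logs": {"type": "file", "include": [log_glob]}},
        "transforms": {
            "tag_events": {
                "type": "remap",
                "inputs": ["audit_logs"],
                "source": '.service = "hcp-vault"',  # hypothetical VRL tag
            }
        },
        "sinks": {f"{platform}_out": sink},
    }
```

Calling `json.dumps(build_config("splunk", creds, "/var/log/vault/audit*.log"), indent=2)` with suitable credentials would produce a file that could be handed to Vector. Because only `build_sink` varies per platform, adding another integration would mean adding one more branch while the source and transform stay unchanged.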

»Try HCP Vault

As a fully managed service, HCP Vault makes it easier to secure secrets and application data such as API keys, passwords, and certificates. For more information about HCP Vault and pricing, please visit the HCP Vault product page or sign up today through the HCP portal.