We are pleased to announce the public availability of HashiCorp Nomad 0.9.

Nomad is a flexible workload orchestrator that can be used to easily deploy both containerized and legacy applications across multiple regions or cloud providers. Nomad is easy to operate and scale, and integrates seamlessly with HashiCorp Consul for service discovery and HashiCorp Vault for secrets management.

Nomad 0.9 adds new scheduling features that enable greater control over how Nomad places applications across the available infrastructure. It also lays the groundwork for a plugin-based feature strategy that will enable users to more easily integrate Nomad with a wide range of ecosystem tools and technologies. The major new features in Nomad 0.9 include:

- Spread: Enable job authors to distribute workloads based on specific attributes or client metadata.
- Affinities: Enable job authors to express preferred placement for a workload.
- Preemption for System Jobs: Enable system jobs to displace lower-priority workloads.
- Task Driver Plugins: Enable community members to easily contribute and maintain new task drivers that support specific runtimes.
- Device Plugins: Enable Nomad to provision workloads that require specialized hardware such as GPUs.
- Web UI: Support job submission and improved resource visualizations.

This release also includes a number of additional improvements to the Web UI, the CLI, the Docker driver, and other Nomad components. The CHANGELOG provides a full list of Nomad 0.9 features, enhancements, and bug fixes.

»Spread

Nomad uses bin packing by default when making job placement decisions. Bin packing seeks to fully utilize the resources on a subset of the available client nodes before moving on to utilize additional nodes. While this behavior generally leads to smaller fleet sizes and cost savings, it often makes sense for the instances of a given workload to be spread out across the available infrastructure to optimize for high availability or performance.

Nomad 0.9 adds a new spread stanza that allows a job author to specify how a job's allocations are distributed across the values of a specific attribute, usually a failure domain such as datacenter or rack:

```hcl
spread {
  attribute = "${node.datacenter}"
  weight    = 20

  target "us-east1" {
    percent = 50
  }

  target "us-east2" {
    percent = 30
  }

  target "us-west1" {
    percent = 20
  }
}
```

The spread stanza includes a target parameter that allows a user to specify the distribution ratio across a set of target values for the specified attribute. A weight parameter can also be used to assign relative priority across a series of spread and affinity stanzas.
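As a sketch of how these two parameters combine, the hypothetical job below runs ten instances; the job, group, and task names, the `meta.rack` client metadata key, and the Docker image are all illustrative assumptions. The first `spread` stanza asks for roughly a 50/30/20 split across datacenters, which with `count = 10` works out to about 5, 3, and 2 allocations. The second lists no targets, so Nomad attempts an even distribution across whatever rack values the eligible clients report. Because spread influences scoring rather than acting as a hard constraint, actual placement can deviate from these numbers when capacity is tight.

```hcl
# Hypothetical job: names, the "rack" client meta key, and the image are illustrative.
job "web" {
  datacenters = ["us-east1", "us-east2", "us-west1"]

  group "frontend" {
    count = 10

    # Higher weight: the datacenter split matters most.
    spread {
      attribute = "${node.datacenter}"
      weight    = 70

      # With count = 10 this aims for roughly 5 / 3 / 2 allocations.
      target "us-east1" {
        percent = 50
      }

      target "us-east2" {
        percent = 30
      }

      target "us-west1" {
        percent = 20
      }
    }

    # Lower weight and no targets: spread evenly across all rack values.
    spread {
      attribute = "${meta.rack}"
      weight    = 30
    }

    task "server" {
      driver = "docker"

      config {
        image = "nginx:1.15"
      }
    }
  }
}
```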

See the Increasing Failure Tolerance with Spread guide for more details.

»Affinities and Anti-affinities

Nomad 0.9 adds scheduler support for affinity and anti-affinity. Affinity refers to the ability of a user to express a preferred placement for a given workload based on the current state of the runtime environment. This contrasts with a constraint, which Nomad already supports and which imposes a hard requirement.

A new affinity stanza has been added that enables a job author to express a preference for placement based on any node property that Nomad clients are aware of:

```hcl
affinity {
  attribute = "${meta.node_class}"
  operator  = "="
  value     = "m5"
  weight    = 10
}
```

As with spread, the weight parameter can be used to assign relative priority if multiple stanzas have been defined for a single task group. A negative weight can be used to preferentially avoid placement with respect to a given node property (anti-affinity):

```hcl
affinity {
  attribute = "${meta.node_class}"
  operator  = "="
  value     = "m5"
  weight    = -10
}
```
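To contrast the two mechanisms, the hypothetical task group below (group and task names, image, and attribute values are illustrative) pairs a `constraint`, which makes non-Linux nodes ineligible outright, with two affinities that only adjust node scores: one favoring `m5` nodes and a negative one steering allocations away from a datacenter. The `operator` is omitted because `=` is the default. If no node satisfies the affinities, Nomad still places the allocations on other eligible nodes.

```hcl
group "cache" {
  count = 3

  # Hard requirement: nodes that fail this check are never considered.
  constraint {
    attribute = "${attr.kernel.name}"
    value     = "linux"
  }

  # Soft preference: nodes whose node_class metadata is "m5" score higher.
  affinity {
    attribute = "${meta.node_class}"
    value     = "m5"
    weight    = 50
  }

  # Anti-affinity: nodes in us-east2 score lower but remain eligible.
  affinity {
    attribute = "${node.datacenter}"
    value     = "us-east2"
    weight    = -25
  }

  task "redis" {
    driver = "docker"

    config {
      image = "redis:3.2"
    }
  }
}
```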

See the Expressing Job Placement Preferences with Affinities guide or the Spread and Affinities blog post for more details.

»Preemption for System Jobs

Preemption refers to the ability of a scheduler to evict lower-priority work in favor of higher-priority work when the targeted nodes lack sufficient capacity for placement. Nomad already lets a job author assign a priority to a job, but its impact is limited to scheduling order: the evaluation and planning queues are sorted from highest to lowest priority. Priority is set per job:

```hcl
job "myjob" {
  region      = "north-america"
  datacenters = ["us-east-1"]
  priority    = 80

  group "mygroup" {
    task "mytask" {
      # ...
    }
  }
}
```

When a targeted set of nodes is fully utilized, any allocations from a newly scheduled job remain pending until sufficient resources become available, regardless of the job's priority. This can lead to priority inversion, where running low-priority workloads prevent high-priority workloads from being scheduled.

Preemption support in Nomad 0.9 will initially enable Nomad to evict lower priority allocations as necessary to ensure that system jobs can run on every node in the cluster. Preemption for service and batch jobs will be included in the Enterprise version of Nomad in a subsequent 0.9.x point release.
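For illustration, a system job like the hypothetical one below (job name, datacenter, image, and resource figures are assumptions) is the kind of workload this targets. With `type = "system"`, Nomad tries to run one instance on every eligible client; with preemption in 0.9, a node that is already full of lower-priority allocations no longer has to leave that instance pending, because Nomad can evict some of those allocations to make room. Job priority ranges from 1 to 100, with 50 as the default.

```hcl
# Hypothetical system job; names, image, and resource figures are illustrative.
job "node-exporter" {
  datacenters = ["us-east-1"]
  type        = "system"

  # Valid priorities are 1-100 (default 50).
  priority = 90

  group "monitoring" {
    task "exporter" {
      driver = "docker"

      config {
        image = "prom/node-exporter"
      }

      resources {
        cpu    = 100 # MHz
        memory = 64  # MB
      }
    }
  }
}
```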

»Plugins

The Nomad client was refactored in Nomad 0.9 to enable plugin-based support for a range of features. Plugins lower the barrier for ecosystem technology integrations by enabling community members to develop and maintain third-party integrations outside of the standard code review and release process that Nomad core requires. The 0.9 release adds support for Task Driver Plugins and Device Plugins (see below). Upcoming releases will add plugin-based features for networking and storage that are compatible with the CNI and CSI specifications, respectively.

»Task Driver Plugins

Nomad's task driver subsystem allows it to support a wide range of workload types. Operators can use Nomad's native task drivers to bring benefits like deployment automation, self-service, high resource utilization, and automatic secret injection to non-containerized, legacy, or Windows workloads, in addition to their Docker-based workloads. The task driver subsystem has been refactored in Nomad 0.9 to support external plugins, which will make it easier for the community to contribute new task drivers or create modified versions of existing ones. The LXC task driver has been externalized in Nomad 0.9 and illustrates how the new plugin system works. It is configured through a plugin stanza in the Nomad client's agent configuration:

```hcl
plugin "nomad-driver-lxc" {
  config {
    volumes_enabled = true
    lxc_path        = "/var/lib/lxc"
  }
}
```
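Once the plugin binary is installed in the client's plugin directory (set with the agent's `plugin_dir` option, which defaults to a `plugins` subdirectory of `data_dir`), a job can select it by name just like a built-in driver. The task below is a sketch: the task name, resource figures, and the busybox template path are assumptions about the host, and the `config` options shown are those documented for the LXC driver plugin around this release, so check the plugin's README for the version you install.

```hcl
task "busybox" {
  # Selects the external nomad-driver-lxc plugin.
  driver = "lxc"

  config {
    log_level = "trace"
    verbosity = "verbose"
    # Assumes LXC templates are installed at this path on the client.
    template = "/usr/share/lxc/templates/lxc-busybox"
  }

  resources {
    cpu    = 500 # MHz
    memory = 256 # MB
  }
}
```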

»Device Plugins

Nomad 0.9 introduces a device plugin system in addition to the new task driver plugin system. Device plugins will enable Nomad to support specialized hardware devices like GPUs, TPUs, and FPGAs. GPUs (graphics processing units) have become a standard means of accelerating computational workloads including machine learning, image processing, and financial modeling. To support these and other use cases out of the box, Nomad 0.9 includes a native Nvidia GPU device plugin.

The device plugin system will enable the Nomad client to detect a device, fingerprint its capabilities, and make it available to the scheduler for reservation by job authors:

```hcl
resources {
  device "nvidia/gpu" {
    count = 2

    # Require a GPU with at least 4 GiB of memory
    constraint {
      attribute = "${device.attr.memory}"
      operator  = ">="
      value     = "4 GiB"
    }

    # Prefer a GPU from the Tesla model family
    affinity {
      attribute = "${device.model}"
      operator  = "regexp"
      value     = "Tesla"
    }
  }
}
```
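The device name in the stanza can be as broad or as narrow as needed: `gpu` matches a GPU from any vendor, `nvidia/gpu` restricts the request to Nvidia, and `nvidia/gpu/<model>` pins a specific model. The hypothetical batch job below (job and task names, datacenter, and the image tag are assumptions) requests one Nvidia GPU of any model and runs `nvidia-smi` to report what the container sees. It assumes the client host has the Nvidia driver and container runtime installed so that the built-in plugin can fingerprint the card.

```hcl
# Hypothetical batch job; names, datacenter, and image tag are illustrative.
job "gpu-test" {
  datacenters = ["us-east-1"]
  type        = "batch"

  group "smi" {
    task "smi" {
      driver = "docker"

      config {
        image   = "nvidia/cuda:9.0-base"
        command = "nvidia-smi"
      }

      resources {
        # Any Nvidia GPU; use "gpu" for any vendor or
        # "nvidia/gpu/<model>" to pin a specific model.
        device "nvidia/gpu" {
          count = 1
        }
      }
    }
  }
}
```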

»Web UI

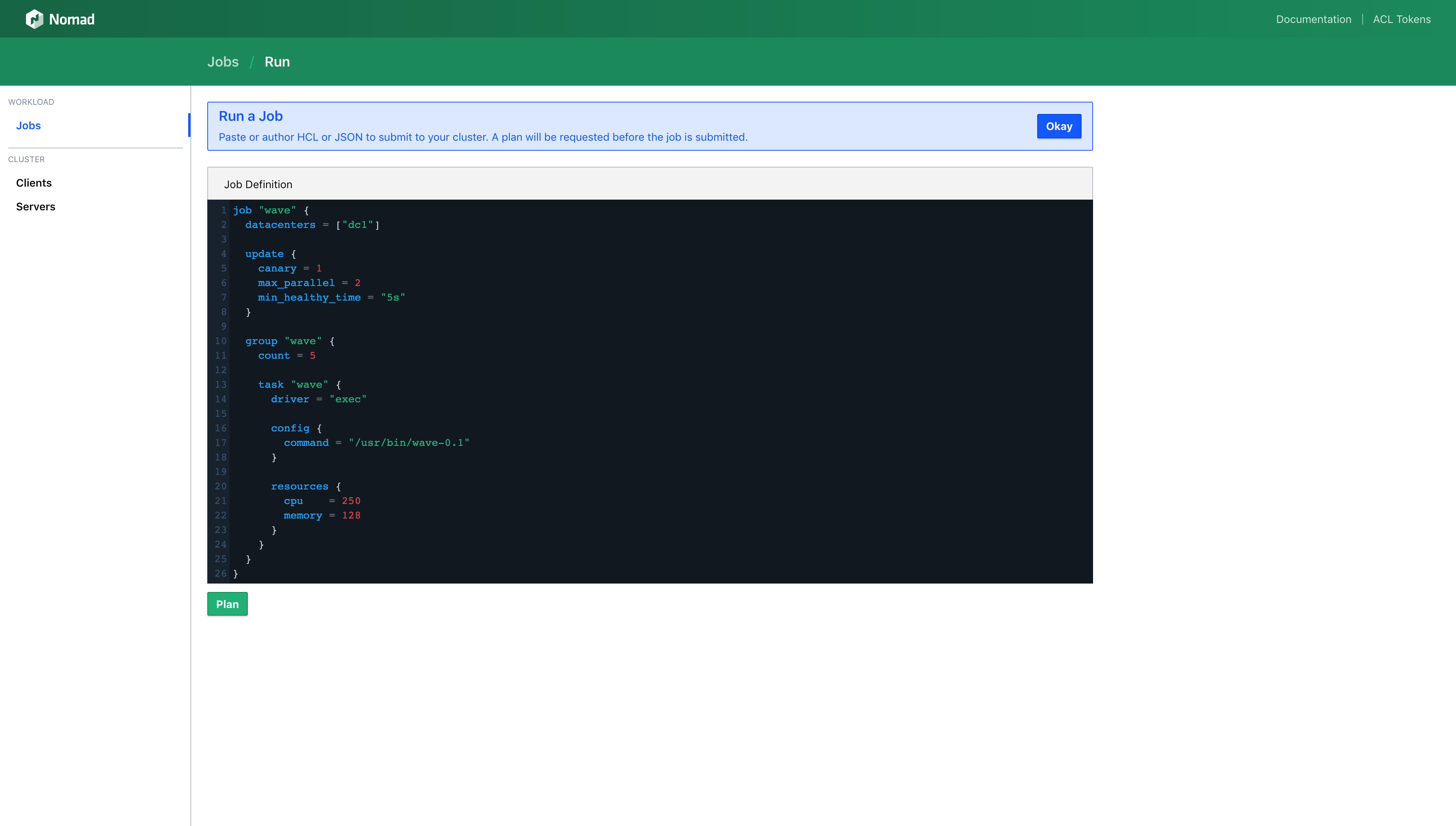

Nomad 0.9 enhances the Web UI to support job submission and modification. While a CI/CD and version-control-based deployment approach is still recommended, job submission through the Web UI enables easy experimentation for new users, quick iteration during dev/test cycles, and a way for operators to make minor changes quickly when resolving production service outages. Job submission through the Web UI requires authorization through the Nomad ACL subsystem (via the coarse-grained write policy or the submit-job capability).
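As a sketch, an ACL policy along the lines below would let a token holder browse and submit jobs from the Web UI in one namespace without granting the broader coarse-grained `write` policy. The choice of the `default` namespace and this particular capability set are illustrative assumptions; the commented-out rule shows the coarse-grained alternative.

```hcl
# Fine-grained: allow listing, reading, and submitting jobs in the default namespace.
namespace "default" {
  capabilities = ["list-jobs", "read-job", "submit-job"]
}

# Coarse-grained alternative (grants all write capabilities in the namespace):
# namespace "default" {
#   policy = "write"
# }
```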

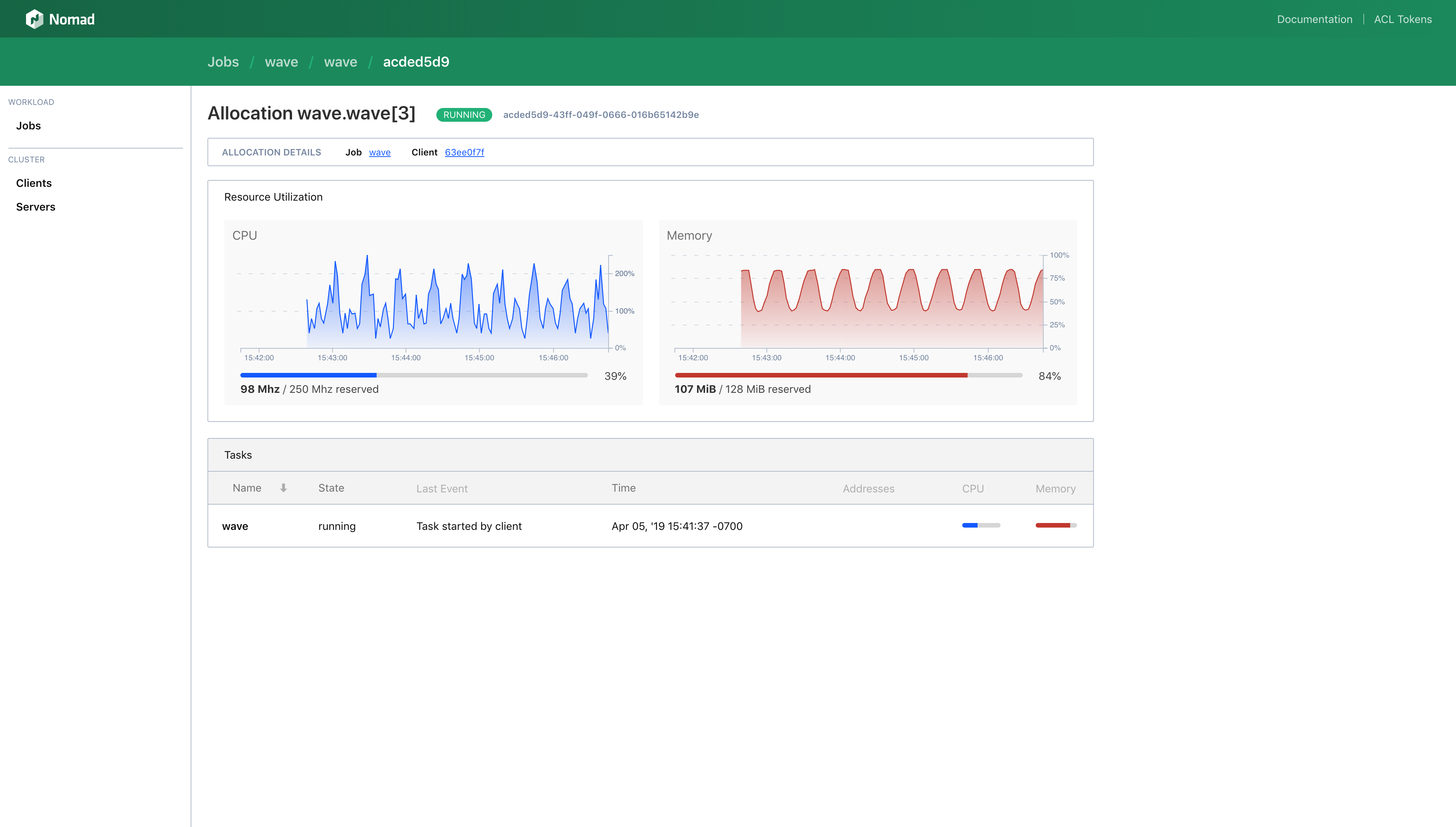

The 0.9 release also adds improved CPU and memory usage charts to the Web UI. On the node detail, allocation detail, and task detail pages, CPU and memory metrics are now plotted over time as line charts for the duration of the current session.

»Additional Features in Nomad 0.9

Web UI

- Support region switching

- Support canary promotion

- Support restart of stopped jobs

ACLs

- Support the use of globs in namespace definitions

Logs

- Support JSON log output

- Support logs when using Docker for Mac

Docker Task Driver

- Support specification of storage_opt

- Support specification of bind and tmpfs mounts

- Support stats collection on Windows
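Several of the Docker driver additions listed above are plain task `config` options. The fragment below is a sketch based on the driver documentation around this release: the task name, image, host and container paths, and sizes are placeholders, and `storage_opt` only takes effect with Docker storage drivers that support per-container size limits.

```hcl
task "redis" {
  driver = "docker"

  config {
    image = "redis:3.2"

    # Per-container storage options passed through to Docker.
    storage_opt = {
      size = "40G"
    }

    mounts = [
      # Bind-mount a host directory into the container, read-only.
      {
        type     = "bind"
        source   = "/srv/redis-conf"
        target   = "/usr/local/etc/redis"
        readonly = true
      },
      # In-memory scratch space; size is in bytes.
      {
        type   = "tmpfs"
        target = "/scratch"
        tmpfs_options {
          size = 100000000
        }
      },
    ]
  }
}
```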

»Conclusion

We are excited to share this release with our users. Visit the Nomad website to learn more.