We are pleased to announce the release of HashiCorp Nomad 0.7. Nomad is HashiCorp's lightweight and flexible cluster scheduler designed to easily integrate into your existing workflows. Nomad can run both microservice and batch workloads, and has first-class support for Docker as well as non-containerized applications.

Highlights of the Nomad 0.7 release for open source include:

- Access Control System

- Web UI

Nomad 0.7 is also the premiere of Nomad Enterprise. Nomad Enterprise extends the open source version of Nomad with features related to collaboration, operations and governance. The Nomad Enterprise features in the 0.7 release include:

- Namespaces

- Resource Quotas

- Sentinel Policies

»Access Control System

Nomad 0.7 introduces an Access Control List (ACL) system which can be used to limit access to data and APIs. The ACL system is capability-based, relying on tokens which are associated with policies to determine which rules should be applied at runtime. Nomad's ACL system is similar to the access control systems used by HashiCorp Consul and HashiCorp Vault.

The following sections describe the main components of the ACL system in more detail:

»Policies

An ACL policy is a named set of rules that specifies the capabilities granted to any token associated with the policy. Capabilities can be defined for the following top-level policy rules:

- namespace - Job-related operations by namespace

- agent - Utility operations in the Agent API

- node - Node-level catalog operations

- operator - Cluster-level operations in the Operator API

- quota - Operations related to quota specifications

The HashiCorp Configuration Language (HCL) is used to specify rules.

»Capabilities

Capabilities represent the explicit set of actions that the policy authorizes, such as submitting jobs, reading logs and querying nodes. The sample policy below uses both fine-grained capabilities and the coarse-grained policy dispositions (“read”, “write” and “deny”):

    # Allow read only access to the default namespace
    namespace "default" {
      # Equivalent to 'policy = "read"'
      capabilities = ["list-jobs", "read-job"]
    }

    # Allow writing to the `foo` namespace
    namespace "foo" {
      # Equivalent to 'capabilities = ["list-jobs", "read-job", "submit-job", "read-logs", "read-fs"]'
      policy = "write"
    }

    agent {
      policy = "read"
    }

    node {
      policy = "read"
    }
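
The relationship between the coarse-grained dispositions and the fine-grained capabilities can be sketched in a few lines of Python. This is an illustrative model, not Nomad's implementation: the `read` and `write` expansions are taken from the comments in the sample policy above, while the names `expand_rule` and `is_allowed` and the treatment of `deny` as an empty capability set are assumptions made for the example.

```python
# Illustrative model only; not Nomad's implementation.
# The "read" and "write" expansions come from the sample policy comments.
POLICY_CAPABILITIES = {
    "read": {"list-jobs", "read-job"},
    "write": {"list-jobs", "read-job", "submit-job", "read-logs", "read-fs"},
    "deny": set(),  # assumption: deny grants nothing
}


def expand_rule(policy=None, capabilities=()):
    """Return the set of capabilities granted by one namespace rule."""
    granted = set(capabilities)
    if policy is not None:
        granted |= POLICY_CAPABILITIES[policy]
    return granted


def is_allowed(granted, capability):
    """Check whether a capability is in the granted set."""
    return capability in granted


default_ns = expand_rule(capabilities=["list-jobs", "read-job"])
foo_ns = expand_rule(policy="write")

print(default_ns == expand_rule(policy="read"))  # True
print(is_allowed(foo_ns, "submit-job"))          # True
print(is_allowed(default_ns, "submit-job"))      # False
```

In this model a token restricted to the `default` namespace rule can list and read jobs but cannot submit them, which matches the intent of the sample policy.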

»Tokens

ACL tokens are used to authenticate requests and determine if the caller is authorized to perform an action. Each ACL token has a public Accessor ID which is used to identify the token and a Secret ID which is used to make requests to Nomad.
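
As a minimal sketch of how a Secret ID accompanies a request, the Python snippet below builds a call to the jobs endpoint of the HTTP API and passes the token in the `X-Nomad-Token` header. The agent address, the helper name `build_request` and the all-zero Secret ID are placeholders chosen for the example, not values from this release.

```python
import urllib.request

# Assumption: a local agent listening on the default HTTP port.
NOMAD_ADDR = "http://127.0.0.1:4646"


def build_request(path, secret_id, addr=NOMAD_ADDR):
    """Build an HTTP API request that carries the token's Secret ID."""
    return urllib.request.Request(
        addr + path,
        headers={"X-Nomad-Token": secret_id},
    )


# Placeholder value; a real Secret ID is returned when a token is created.
req = build_request("/v1/jobs", "00000000-0000-0000-0000-000000000000")
print(req.full_url)

# Sending the request requires a reachable Nomad agent:
# with urllib.request.urlopen(req) as resp:
#     print(resp.read().decode())
```

Only the Secret ID is sent with requests. The Accessor ID identifies the token and is not used for authentication.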

»Multi-Region Configuration

Nomad supports multi-datacenter and multi-region configurations. ACL policies are replicated from the authoritative region to all federated regions when they are created. This allows policies to be administered centrally and for enforcement to be local to each region for low latency. ACL tokens can be optionally replicated to secondary regions to enable secure cross-region requests as necessary.

See the ACL Guide for a comprehensive overview.

»Web UI

Nomad 0.7 adds a Web UI that enables a user to easily investigate and monitor jobs, allocations, deployments, clients, and servers. The Web UI is embedded in the Nomad binary and is served from the same address and port as the HTTP API (under /ui). The Web UI can be used in conjunction with the Nomad ACL system to restrict access to jobs, nodes, and other details appropriately. Below are a few screen captures that demonstrate the look-and-feel of the Nomad Web UI.

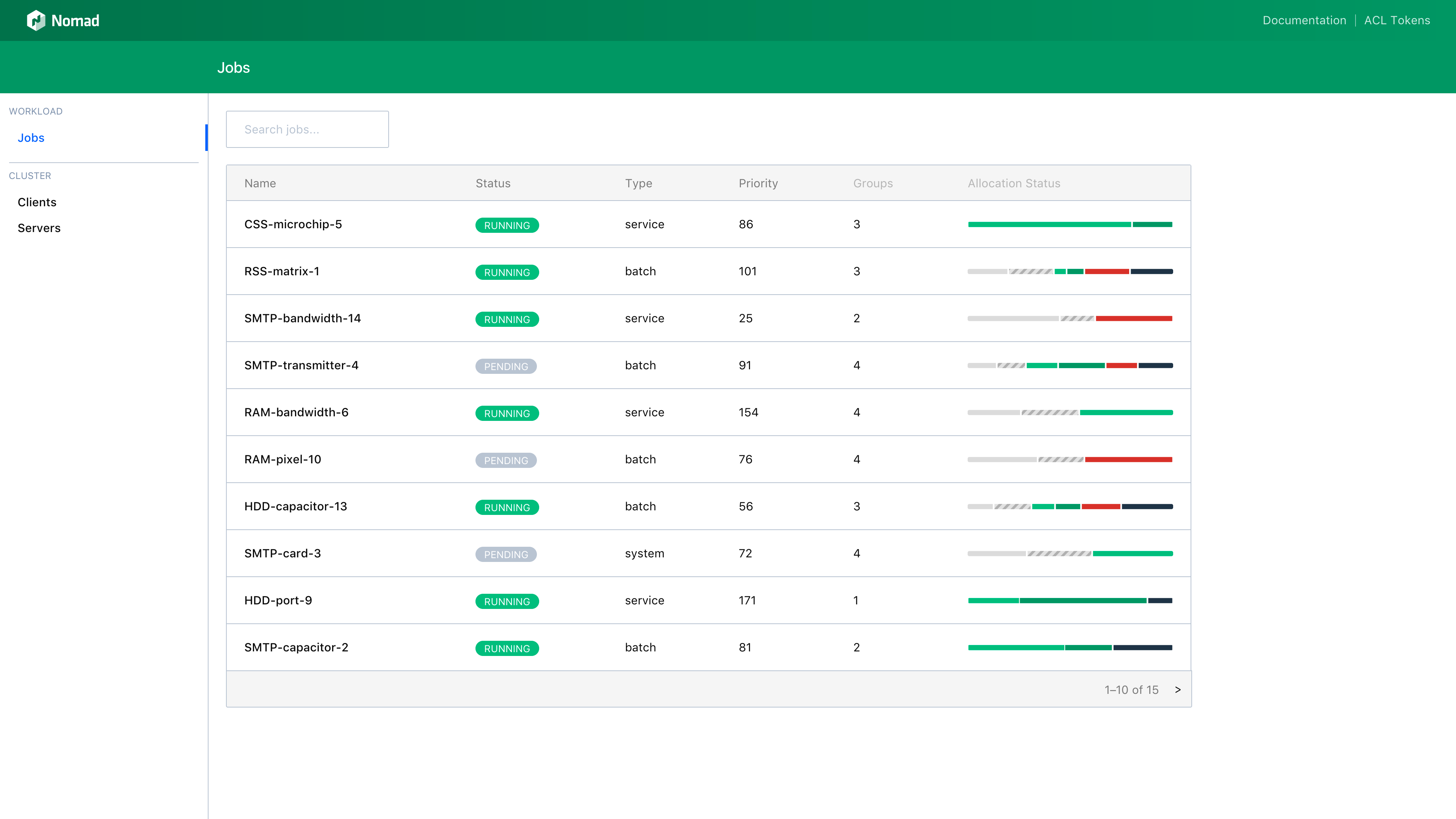

The Jobs page has a searchable table of all jobs in the cluster:

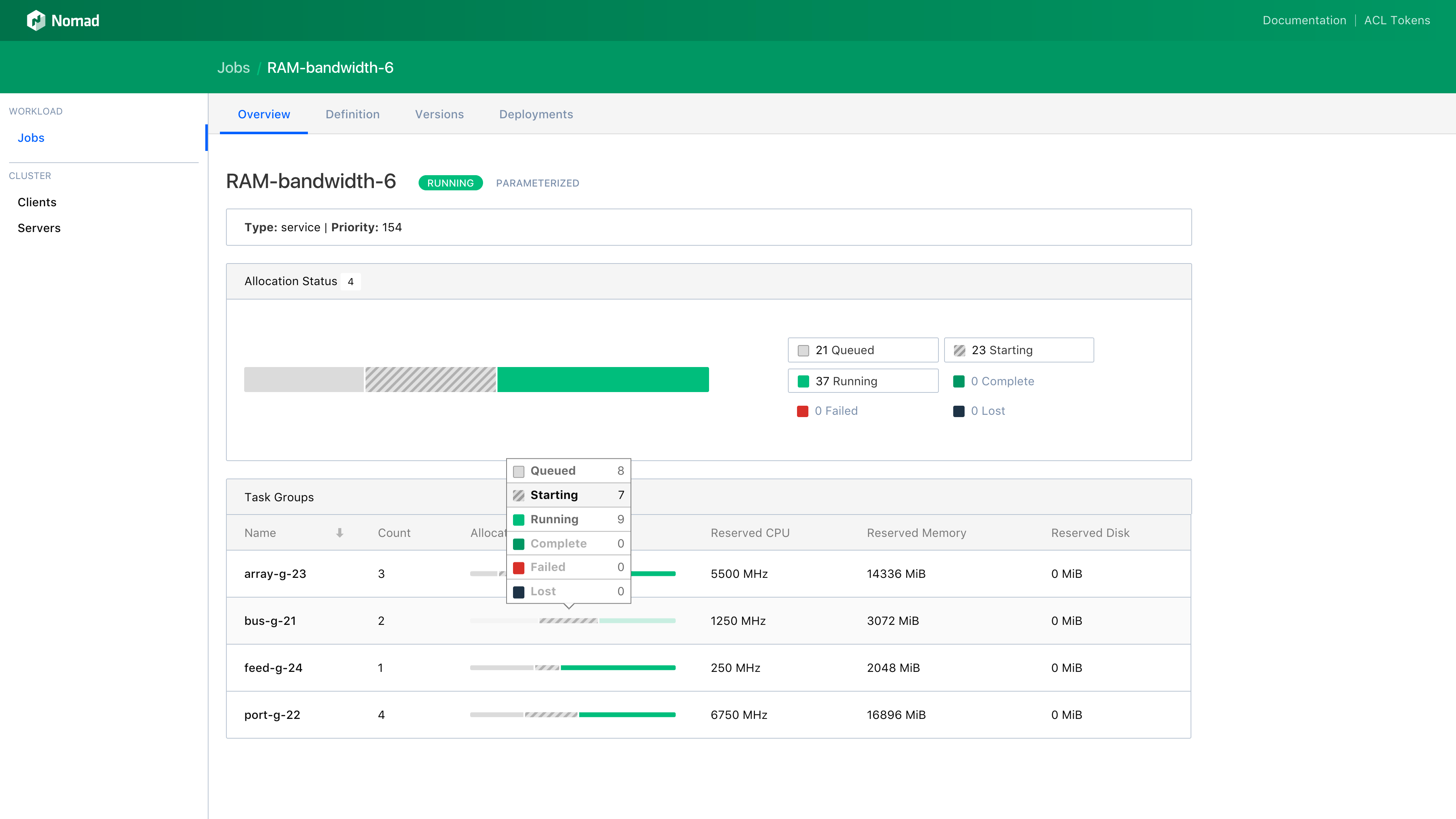

The Job Detail page lists the task groups for the job as well as the status of the job's allocations. This page also has tabs for the job definition, past versions and deployments:

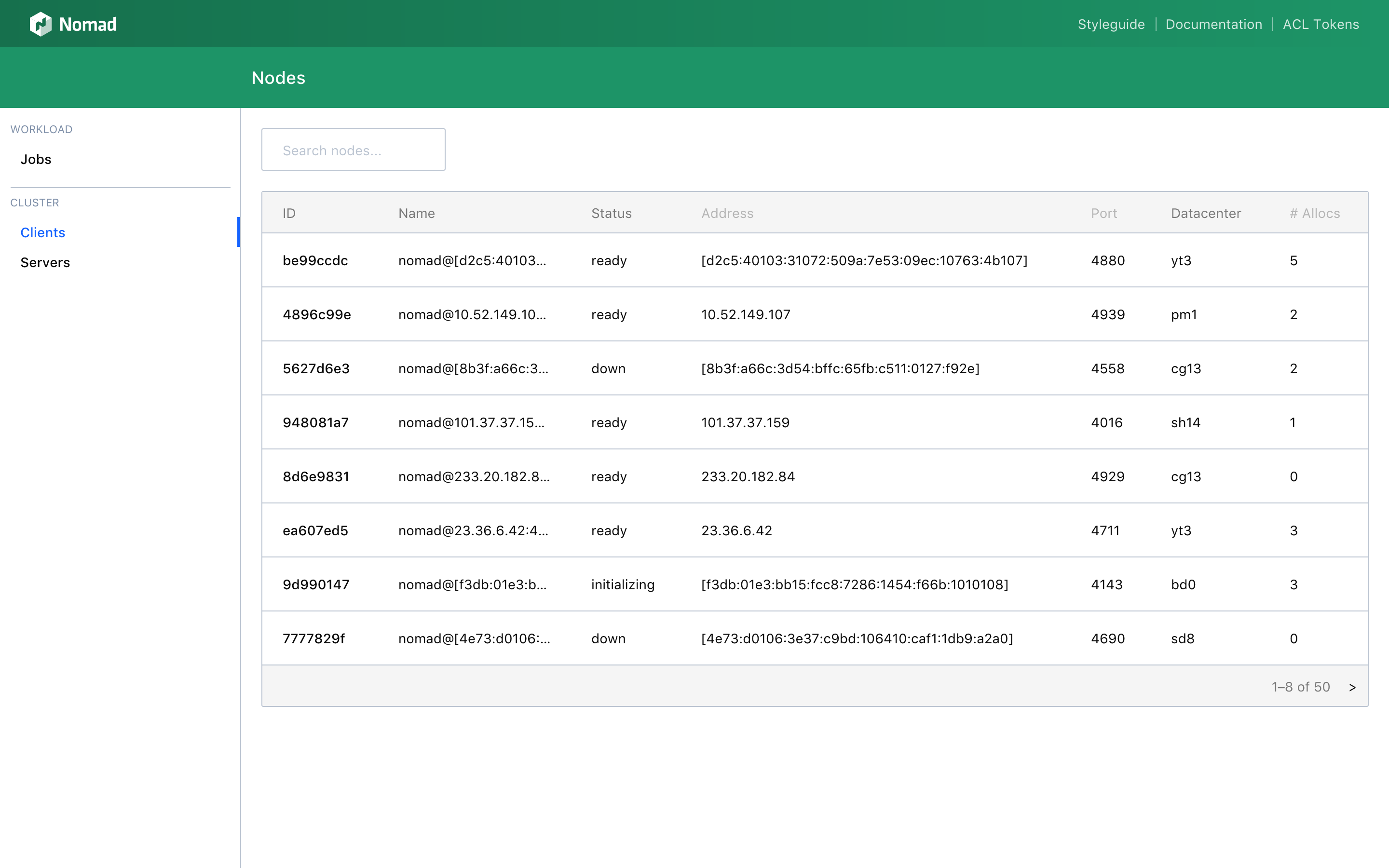

The Clients page has a searchable table of all clients in the cluster:

The Nomad Web UI also includes pages and features related to browsing and monitoring job deployments, inspecting individual clients and servers, and inspecting task groups and allocations.

See the Web UI guide for a thorough walkthrough or visit demo.nomadproject.io to take the Web UI for a test drive.

»Namespaces (Enterprise)

Nomad 0.7 introduces support for multi-tenancy through Namespaces. Namespaces allow many teams and projects to share a single cluster, with the guarantee that job names will not conflict. Administrators can use the ACL system to restrict access to namespaces and use Resource Quotas to limit resource consumption on a per-namespace basis. Similar to ACL policies, namespaces are replicated globally and managed by a single authoritative region.

An example workflow follows.

The namespace apply command will create a new namespace:

    $ nomad namespace apply -description "QA instances of web servers" web-qa
    Successfully applied namespace "web-qa"!

The following ACL policy authorizes read vs. write access across multiple namespaces:

    # Allow read only access to the production namespace
    namespace "web-prod" {
      policy = "read"
    }

    # Allow writing to the QA namespace
    namespace "web-qa" {
      policy = "write"
    }

To run a job in a specific namespace, simply annotate the job with the namespace parameter:

    job "rails-www" {
      # Run in the QA namespace
      namespace = "web-qa"

      # Only run in one datacenter when QAing
      datacenters = ["us-west1"]

      ...
    }

Include the namespace parameter to run a CLI command within the context of a given namespace:

    $ nomad job status -namespace=web-qa
    ID         Type     Priority  Status   Submit Date
    rails-www  service  50        running  09/17/17 19:17:46 UTC

See the Namespaces Guide for further detail.

»Resource Quotas (Enterprise)

Nomad 0.7 adds support for Resource Quotas. Resource quotas let operators limit CPU and memory consumption across namespaces so that no single tenant can dominate usage. When a quota is attached to a namespace, the resources reserved for all existing and future jobs count toward the defined limits. A single quota can also be attached to multiple namespaces if higher-level accounting across a subset of namespaces is desired. Like ACL policies and namespaces, resource quotas are replicated globally and managed by a single authoritative region.

When a resource quota is exhausted, Nomad will queue incoming work to be scheduled when resources become available, either because jobs have completed or the quota has been increased. This behavior provides a higher Quality of Service (QoS) without forcing clients to implement their own queuing on top of Nomad.

Resource quota limits are defined on a per-region basis. The quota definition below limits CPU consumption to 2500 MHz and memory consumption to 1000 MB for the global region:

    name        = "web-qa-quota"
    description = "Limit the web-qa namespace"

    # Create a limit for the global region. Additional limits may
    # be specified in order to limit other regions.
    limit {
      region = "global"
      region_limit {
        cpu    = 2500
        memory = 1000
      }
    }

The following command will create a new quota:

    $ nomad quota apply web-qa-quota.hcl
    Successfully applied quota specification "web-qa-quota"!

To apply the quota to the web-qa namespace, use:

    $ nomad namespace apply -quota web-qa-quota web-qa
    Successfully applied namespace "web-qa"!

The quota status CLI command returns the current accounting for the quota:

    $ nomad quota status web-qa-quota
    Name        = web-qa-quota
    Description = Limit the web-qa namespace
    Limits      = 1

    Quota Limits
    Region  CPU Usage   Memory Usage
    global  500 / 2500  256 / 1000
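
The accounting behind this output can be illustrated with a small Python sketch. It is a simplified model under stated assumptions, not Nomad's scheduler logic: the limit and usage figures come from the quota definition and status output above, while the `Resources` type, the `fits` helper and the two job requests are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Resources:
    cpu: int     # same units as the quota's cpu limit
    memory: int  # same units as the quota's memory limit


def fits(usage, limit, request):
    """Return True if the request fits within the remaining quota."""
    return (usage.cpu + request.cpu <= limit.cpu
            and usage.memory + request.memory <= limit.memory)


limit = Resources(cpu=2500, memory=1000)  # from web-qa-quota
usage = Resources(cpu=500, memory=256)    # from the status output

small_job = Resources(cpu=1000, memory=512)   # hypothetical request
large_job = Resources(cpu=1000, memory=1024)  # hypothetical request

print(fits(usage, limit, small_job))  # True
print(fits(usage, limit, large_job))  # False
```

In the second case the memory request would exceed the limit, so, as described earlier, Nomad would queue the work until resources are freed or the quota is raised.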

Access to resource quotas can be restricted using the ACL system:

# Allow read only access to quotas.

    quota {
      policy = "read"
    }

See the Resource Quotas Guide for more information.

»Sentinel Policies (Enterprise)

Nomad 0.7 integrates with HashiCorp Sentinel to enable fine-grained policy enforcement on job submission and job updates. Sentinel is an embedded policy-as-code framework that enables fine-grained policy decisions, optionally using external information. This integration extends the capabilities of Nomad ACLs, which are limited to the authorization of coarse-grained capabilities across Nomad namespaces and APIs. Sentinel enables a team to manage policy “as code” using the same practices, such as version control, iteration, testing and automation, that characterize the management of application code and infrastructure code today.

Sentinel policies are specified in the Sentinel Language, which has been optimized for ease of use and performance. Nomad currently supports Sentinel policy enforcement for job submission only. All fields represented in the JSON job specification are implicitly available.

The following Sentinel policy would restrict jobs to only allow Docker workloads:

    allowed_drivers = ["docker"]

    main = rule {
      all job.task_groups as tg {
        all tg.tasks as t { t.driver in allowed_drivers }
      }
    }
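
For readers unfamiliar with the Sentinel Language, the nested `all` expressions above behave like the following Python analogue. This is only a sketch for illustration, not Sentinel: the dictionary layout is a simplified, hypothetical stand-in for the job specification, and `main_rule` is a name chosen for the example.

```python
ALLOWED_DRIVERS = ["docker"]


def main_rule(job):
    """Python analogue of the rule: every task must use an allowed driver."""
    return all(
        task["driver"] in ALLOWED_DRIVERS
        for tg in job["task_groups"]
        for task in tg["tasks"]
    )


# Hypothetical, heavily simplified job structures.
docker_job = {"task_groups": [{"tasks": [{"driver": "docker"}]}]}
mixed_job = {
    "task_groups": [{"tasks": [{"driver": "docker"}, {"driver": "exec"}]}]
}

print(main_rule(docker_job))  # True
print(main_rule(mixed_job))   # False
```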

This policy would limit each job to a total of 8 GB (8000 MB) of memory:

    func sum_tasks(tg) {
      count = 0
      for tg.tasks as t { count += t.resources.memory else 0 }
      return count
    }

    func sum_job(job) {
      count = 0
      for job.task_groups as tg {
        if tg.count < 0 {
          fail("unreasonable count value: %d", tg.count)
        }
        count += sum_tasks(tg) * tg.count
      }
      return count
    }

    main = rule { sum_job(job) <= 8000 }
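
To make the arithmetic concrete, here is a Python analogue of the same calculation applied to a sample job. It mirrors the structure of the policy but is not Sentinel code; the job dictionary and its values are hypothetical.

```python
def sum_tasks(tg):
    """Total memory requested by one instance of a task group."""
    return sum(t.get("resources", {}).get("memory", 0) for t in tg["tasks"])


def sum_job(job):
    """Total memory across all task groups, scaled by each group's count."""
    total = 0
    for tg in job["task_groups"]:
        if tg["count"] < 0:
            raise ValueError("unreasonable count value: %d" % tg["count"])
        total += sum_tasks(tg) * tg["count"]
    return total


# Hypothetical job: 3 x (1024 + 256) + 2 x 2048 = 7936
job = {
    "task_groups": [
        {"count": 3, "tasks": [{"resources": {"memory": 1024}},
                               {"resources": {"memory": 256}}]},
        {"count": 2, "tasks": [{"resources": {"memory": 2048}}]},
    ]
}

print(sum_job(job))          # 7936
print(sum_job(job) <= 8000)  # True
```

Raising the count of the second task group to 3 would bring the total to 9984, and the rule would then reject the job.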

The nomad sentinel CLI command can be used to create and manage Sentinel policies. The following command adds a new policy at the advisory enforcement level:

    $ nomad sentinel apply -level=advisory test-policy test.sentinel
    Successfully wrote "test-policy" Sentinel policy!

Soft and hard mandatory enforcement levels are also available. See the Sentinel Policies Guide for more details.

»Additional New Features and Bug Fixes

Nomad 0.7 also adds support for additional key features including tagged metrics and automatic restart of unhealthy tasks based on health check status. There are many bug fixes and other improvements as well. Please review the Nomad CHANGELOG for a detailed list of changes and be sure to read the upgrade guide.

»Nomad Talks at HashiConf 2017

- Operating Jobs at Scale with Nomad

- End to End Production Nomad at Citadel

- Containing Elsevier's World: A Nomad Framework

- Backend Batch Processing with Nomad

- Jet.com discussing Nomad in HashiConf 2017 Keynote

»Getting Started

To get started with Nomad open source, download the latest version at https://www.nomadproject.io/. To find out more about Nomad Enterprise, visit https://www.hashicorp.com/products/nomad.