We are pleased to announce the general availability of Consul-Terraform-Sync (CTS) 0.7. This release marks another step in the maturity of our larger Network Infrastructure Automation (NIA) solution.

CTS combines the functionality of HashiCorp Terraform and HashiCorp Consul to eliminate manual, ticket-based systems across on-premises and cloud environments. Its capabilities can be broken down into two parts. For Day 0 and Day 1, teams use Terraform to quickly deploy network devices and infrastructure in a consistent and reproducible manner. Once that infrastructure is established, teams manage Day 2 networking tasks with CTS, which integrates with Consul’s service catalog, where services are registered. Whenever a change is recorded in the service catalog, CTS triggers a Terraform run that uses partner ecosystem integrations to automate updates and deployments for load balancers, firewall policies, and other service-defined networking components.
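To make the publish/subscribe model concrete, here is a minimal Python sketch of the trigger logic described above. It is not how CTS is implemented: the catalog is modeled as a hypothetical dictionary of service names to instance addresses, and the run_task callback merely stands in for a Terraform run.

```python
from typing import Callable, Dict, List, Set

# Hypothetical catalog snapshot: service name -> set of instance addresses.
Catalog = Dict[str, Set[str]]


def changed_services(old: Catalog, new: Catalog) -> List[str]:
    """Names of services whose instances were added, removed, or changed."""
    names = set(old) | set(new)
    return sorted(n for n in names if old.get(n, set()) != new.get(n, set()))


def on_catalog_update(
    old: Catalog, new: Catalog, run_task: Callable[[List[str]], None]
) -> bool:
    """Publish/subscribe trigger: call run_task only if the catalog changed."""
    changed = changed_services(old, new)
    if not changed:
        return False
    run_task(changed)  # stands in for a Terraform run
    return True


# Usage: a new "web" instance and a new "api" service trigger one run.
runs: List[List[str]] = []
before: Catalog = {"web": {"10.0.0.1"}}
after: Catalog = {"web": {"10.0.0.1", "10.0.0.2"}, "api": {"10.0.1.5"}}
on_catalog_update(before, after, runs.append)
on_catalog_update(after, after, runs.append)  # no change, so no run
```

Diffing snapshots keeps the trigger cheap: nothing runs unless at least one service actually changed.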

This post covers the evolution of CTS and highlights the new features in CTS 0.7.

»Consul-Terraform-Sync 0.1 Through 0.6

- CTS 0.1 and CTS 0.2 enabled a publisher-subscriber (pub-sub) paradigm for updating your network infrastructure based on changes in the HashiCorp Consul catalog, leveraging the Terraform CLI on the local node. These releases focused on establishing core networking use cases for practitioners, including applying firewall policies, updating load balancer member pools, and more.

- The 0.3 release of CTS marked a major milestone by adding support for Consul-Terraform-Sync Enterprise. This release featured regex support for service triggers and Terraform Enterprise integration.

- CTS 0.4 enabled integration with Terraform Cloud and added support for triggering a Terraform workflow based on Consul key-value (KV) changes. The integration with Terraform Enterprise and Terraform Cloud allowed organizations to dynamically manage the networking delivery lifecycle of their Day 2 applications and oversee infrastructure with governance and security functionality.

- The CTS 0.5 release in February of 2022 improved task-lifecycle APIs and CLIs to reduce friction while streamlining configuration management workflows.

- CTS 0.6, released in May, added Terraform Cloud Agent and HCP Consul support.

»High Availability for CTS (Enterprise Only)

Prior to this release, CTS did not natively support high availability and depended on a third-party system or orchestrator to recover from failures. Given that keeping network infrastructure up to date is mission critical, we wanted to make CTS highly available and redundant; release 0.7 does exactly that.

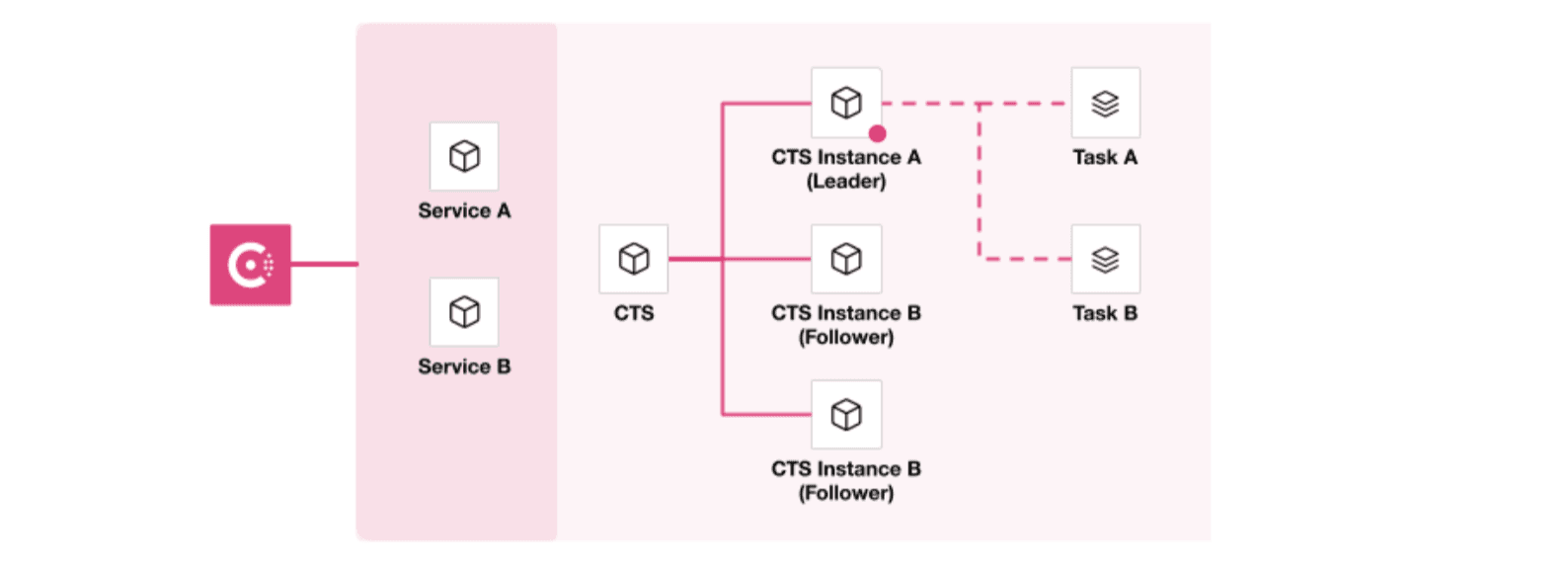

CTS 0.7 achieves high availability through a leader/follower model. There is one leader and any number of followers (we recommend at least two followers for redundancy). The leader is responsible for monitoring and running tasks. Followers are standby instances that provide redundancy in case the leader fails.
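The division of labor between leader and followers can be modeled in a few lines of Python. This is a hypothetical sketch (CtsInstance, tick, and current_leader are illustrative names, not part of CTS) showing the invariant the HA design maintains: exactly one instance executes tasks while the rest stay idle.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class CtsInstance:
    """Hypothetical model of one instance in a CTS HA cluster."""

    instance_id: str
    is_leader: bool = False
    executed: List[str] = field(default_factory=list)

    def tick(self, tasks: List[str]) -> None:
        # Only the leader monitors and runs tasks; followers stay on standby.
        if self.is_leader:
            self.executed.extend(tasks)


def current_leader(cluster: List[CtsInstance]) -> CtsInstance:
    """Return the single leader, failing loudly if the invariant is broken."""
    leaders = [i for i in cluster if i.is_leader]
    if len(leaders) != 1:
        raise RuntimeError(f"expected exactly one leader, found {len(leaders)}")
    return leaders[0]


# One leader plus two followers, as recommended for redundancy.
cluster = [
    CtsInstance("cts-ha-01", is_leader=True),
    CtsInstance("cts-ha-02"),
    CtsInstance("cts-ha-03"),
]
for inst in cluster:
    inst.tick(["task-a", "task-b"])
```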

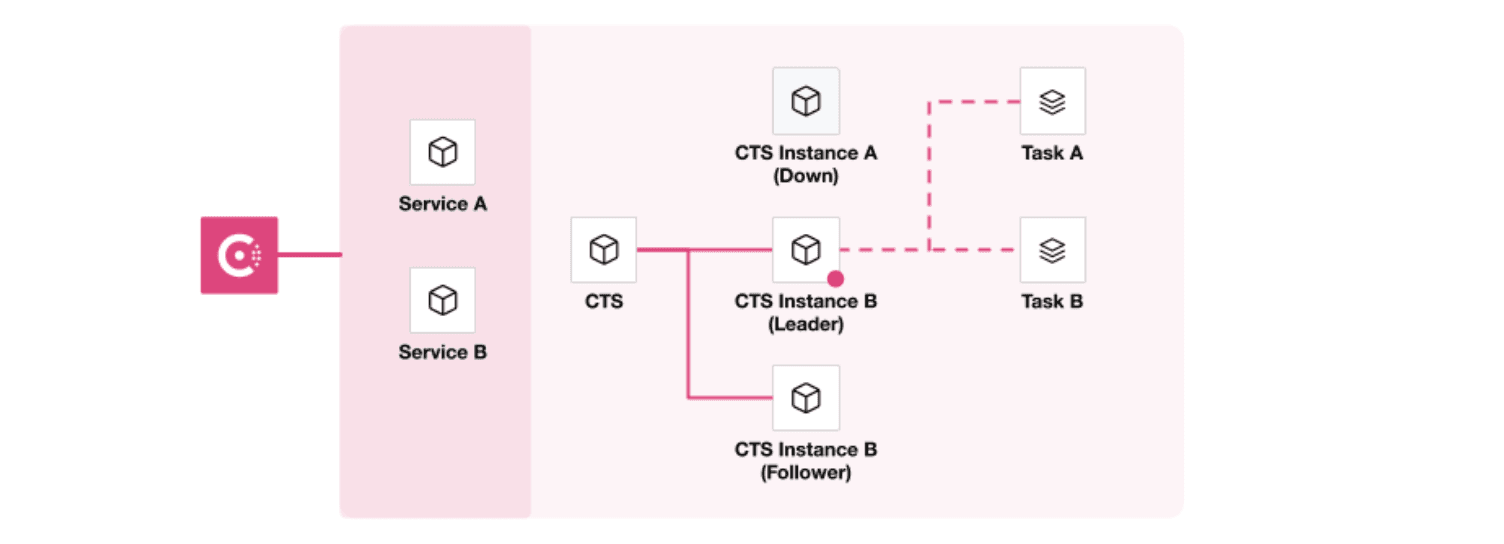

The diagrams below illustrate the high availability and redundancy capabilities of CTS 0.7:

CTS leader instance executing tasks with follower instance on standby.

Follower instance becoming the leader and executing task on leader failure.

Consul has long supported client-side leader election for service instances through sessions. CTS now uses this mechanism to build an HA cluster and to handle failures gracefully.
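Consul’s session-based locking works roughly like this: an instance creates a session with a TTL, uses it to acquire a KV key, and must keep renewing the session; if it stops renewing, the session expires and the lock becomes available to others. The Python sketch below simulates that with an in-memory store. FakeConsulKV and its methods are hypothetical, not the Consul API, and the sketch omits real Consul details such as lock-delay. The lock key matches the lock_path that appears in the CTS logs later in this post.

```python
import time
from typing import Callable, Dict


class FakeConsulKV:
    """In-memory stand-in for Consul sessions plus KV lock acquisition.

    Illustrative only: real Consul also applies a lock-delay after a session
    is invalidated, which this sketch omits.
    """

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self.clock = clock
        self.sessions: Dict[str, float] = {}  # session ID -> expiry time
        self.locks: Dict[str, str] = {}  # KV key -> holding session ID

    def create_session(self, session_id: str, ttl: float) -> None:
        self.sessions[session_id] = self.clock() + ttl

    def renew(self, session_id: str, ttl: float) -> bool:
        """Extend a live session; an expired session cannot be renewed."""
        if not self.alive(session_id):
            return False
        self.sessions[session_id] = self.clock() + ttl
        return True

    def alive(self, session_id: str) -> bool:
        expiry = self.sessions.get(session_id)
        return expiry is not None and expiry > self.clock()

    def acquire(self, key: str, session_id: str) -> bool:
        """Succeed if the key is free, already ours, or held by an expired session."""
        if not self.alive(session_id):
            return False
        holder = self.locks.get(key)
        if holder is None or holder == session_id or not self.alive(holder):
            self.locks[key] = session_id
            return True
        return False


# Usage with a manual clock so the TTL behavior is deterministic.
now = [0.0]
kv = FakeConsulKV(clock=lambda: now[0])
LOCK = "cts/nia-ha-hrs-cluster/leader"
kv.create_session("leader-session", ttl=30)
kv.create_session("follower-session", ttl=30)
first = kv.acquire(LOCK, "leader-session")  # leader takes the lock
blocked = kv.acquire(LOCK, "follower-session")  # follower is refused
now[0] = 20
kv.renew("follower-session", ttl=30)  # follower keeps its session alive
now[0] = 31  # leader stopped renewing, so its session has expired
took_over = kv.acquire(LOCK, "follower-session")
```

The TTL is the trade-off knob: a shorter TTL detects a dead leader sooner, at the cost of more frequent renewals.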

»Enabling High Availability and Failover Behavior

Enabling high availability for CTS is straightforward. Here is the relevant configuration snippet:

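```hcl
# Unique ID for this CTS instance (introduced in CTS 0.6)
id = "cts-ha-01"

high_availability {
  cluster {
    # Every instance in the same HA cluster uses the same cluster name
    name = "nia-ha-hrs-cluster"

    # HA state, including the leader lock, is stored in Consul
    storage "consul" {
      parent_path = "cts"
      # TTL of the Consul session that holds the leader lock
      session_ttl = "30s"
    }
  }

  instance {
    # Address of this instance (IP address or DNS name)
    address = "10.1.5.238"
  }
}
```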

As the snippet shows, all you need to provide is a unique ID (introduced in CTS 0.6) along with high_availability.cluster and high_availability.instance blocks containing the details above.

Note: The instance address could be an IP address or a DNS name. For further details and configuration reference, please see the CTS 0.7 docs.
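The configuration ties instances together through shared values. As a hypothetical pre-flight check (not a CTS feature), the Python sketch below verifies that a set of flattened instance configs uses unique IDs, a common cluster name and parent_path, and non-empty addresses, then derives the leader lock key. The <parent_path>/<cluster name>/leader pattern is inferred from the lock_path value in the CTS logs below, not from the documentation; the second instance’s DNS name is made up.

```python
from typing import Dict, List


def leader_lock_path(parent_path: str, cluster_name: str) -> str:
    """Leader lock key, following the pattern seen in the CTS logs."""
    return f"{parent_path.rstrip('/')}/{cluster_name}/leader"


def check_ha_cluster(instances: List[Dict[str, str]]) -> str:
    """Hypothetical consistency check; returns the shared leader lock path."""
    ids = [inst["id"] for inst in instances]
    if len(ids) != len(set(ids)):
        raise ValueError("each CTS instance needs a unique id")
    shared = {(inst["cluster_name"], inst["parent_path"]) for inst in instances}
    if len(shared) != 1:
        raise ValueError("instances must share a cluster name and parent_path")
    if not all(inst.get("address") for inst in instances):
        raise ValueError("every instance needs an address (IP or DNS name)")
    cluster_name, parent_path = next(iter(shared))
    return leader_lock_path(parent_path, cluster_name)


configs = [
    {"id": "cts-ha-01", "cluster_name": "nia-ha-hrs-cluster",
     "parent_path": "cts", "address": "10.1.5.238"},
    {"id": "cts-ha-02", "cluster_name": "nia-ha-hrs-cluster",
     "parent_path": "cts", "address": "cts-ha-02.example.internal"},
]
lock_path = check_ha_cluster(configs)
```

Two instances that disagree on the cluster name or parent_path would end up contending for different keys, which is why the sketch treats that as an error.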

Once the instances are configured and started, the first instance to come up becomes the leader and logs the following:

```text
2022-08-26T23:40:04.918Z [INFO] ha: attempting to acquire leadership lock: lock_path=cts/nia-ha-hrs-cluster/leader
2022-08-26T23:40:04.926Z [INFO] ha: acquired leadership lock: id=cts-ha-01
2022-08-26T23:40:04.942Z [INFO] ctrl: executing all tasks once through
```

Instances brought up afterward log the following. Because they never log a message saying they “acquired” the lock, you can tell they are followers.

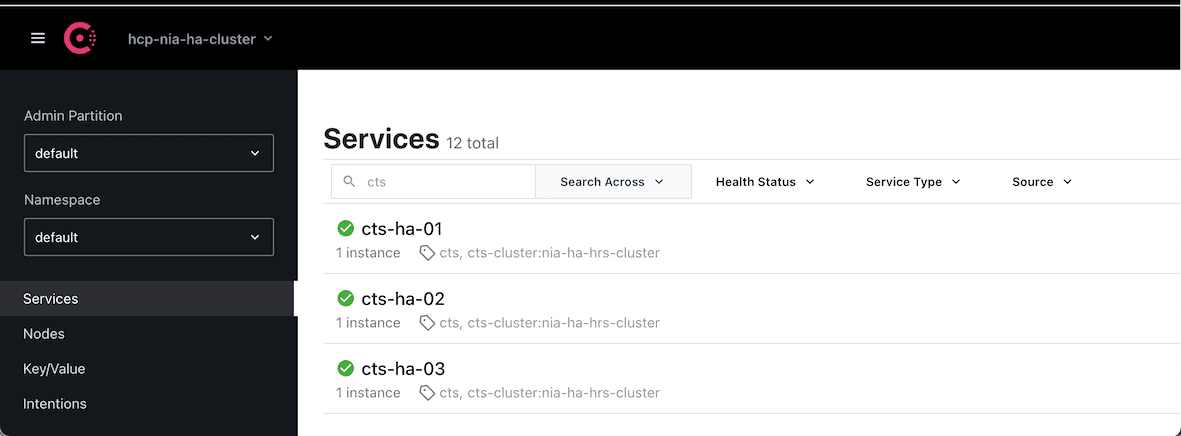

```text
2022-08-26T23:42:13.304Z [INFO] registration: to view registered services, navigate to the Services section in the Consul UI: id=cts-ha-02 service_name=cts-ha-02
2022-08-26T23:42:13.419Z [INFO] ha: attempting to acquire leadership lock: lock_path=cts/nia-ha-hrs-cluster/leader
```

Here’s a snippet from the Consul service catalog once the services are registered:

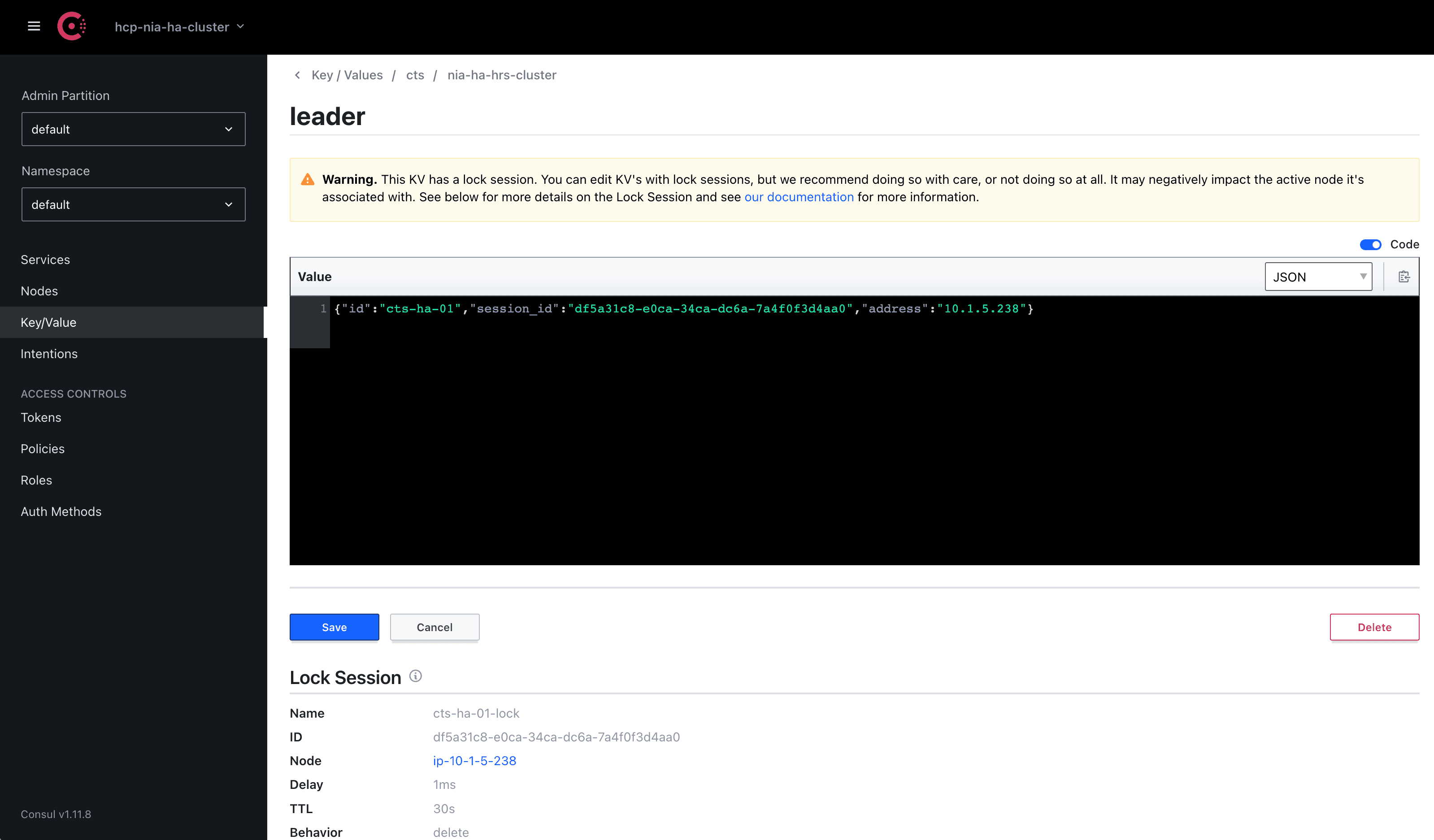

As noted above, CTS relies on Consul sessions and KV for leader election. Here is a snippet of the lock held by the current leader’s session:

Existing CTS APIs have been updated so that users and services can find out which instance is the leader.

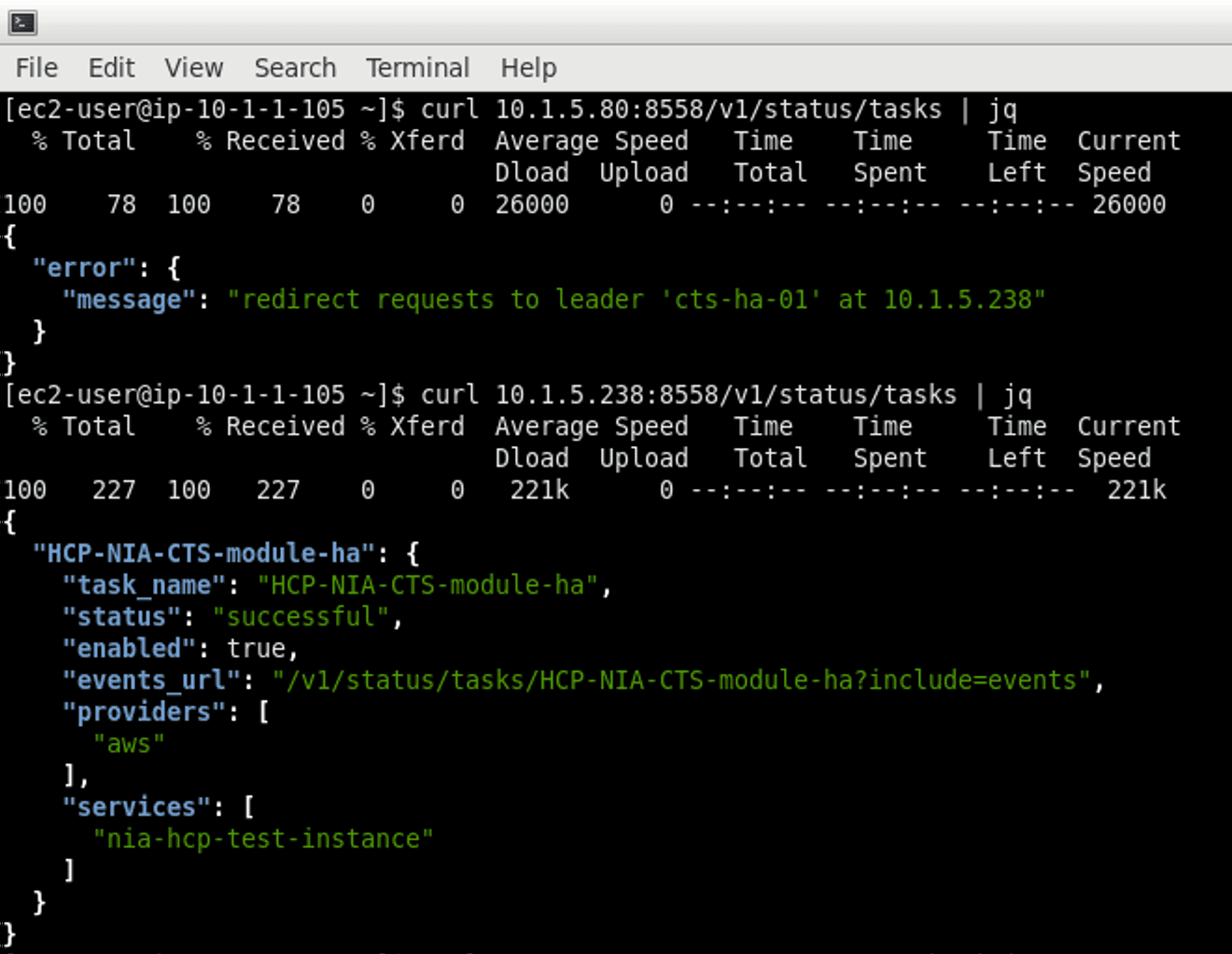

The example below shows a user or service querying a follower for task statuses and being redirected to query the correct instance, the current leader.
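To illustrate the redirect idea, here is a hypothetical Python sketch in which a follower answers a status query by pointing to the leader’s address, and a client follows that pointer once. CtsNode, query_task_status, the second address, and the status value are all assumptions for illustration; consult the CTS API documentation for the actual response format.

```python
from typing import Dict, Tuple, Union

# Hypothetical reply shape: ("ok", statuses) or ("redirect", leader_address).
Reply = Tuple[str, Union[str, Dict[str, str]]]


class CtsNode:
    """Hypothetical instance that serves task status only while it leads."""

    def __init__(self, address: str) -> None:
        self.address = address
        self.leader_address = ""  # learned from the shared leader lock
        self.statuses: Dict[str, str] = {}

    def task_status(self) -> Reply:
        if self.address == self.leader_address:
            return ("ok", dict(self.statuses))
        # A follower doesn't answer; it tells the caller where the leader is.
        return ("redirect", self.leader_address)


def query_task_status(nodes: Dict[str, CtsNode], address: str) -> Dict[str, str]:
    """Ask any instance; follow at most one redirect to the leader."""
    kind, payload = nodes[address].task_status()
    if kind == "redirect" and isinstance(payload, str):
        kind, payload = nodes[payload].task_status()
    if kind != "ok" or not isinstance(payload, dict):
        raise RuntimeError("leader could not be reached")
    return payload


leader, follower = CtsNode("10.1.5.238"), CtsNode("10.1.5.239")
nodes = {n.address: n for n in (leader, follower)}
for n in nodes.values():
    n.leader_address = leader.address
leader.statuses = {"HCP-NIA-CTS-module-ha": "successful"}
```

Limiting the client to one redirect avoids loops if instances briefly disagree about who the leader is during a failover.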

»CTS High Availability Failover Behavior

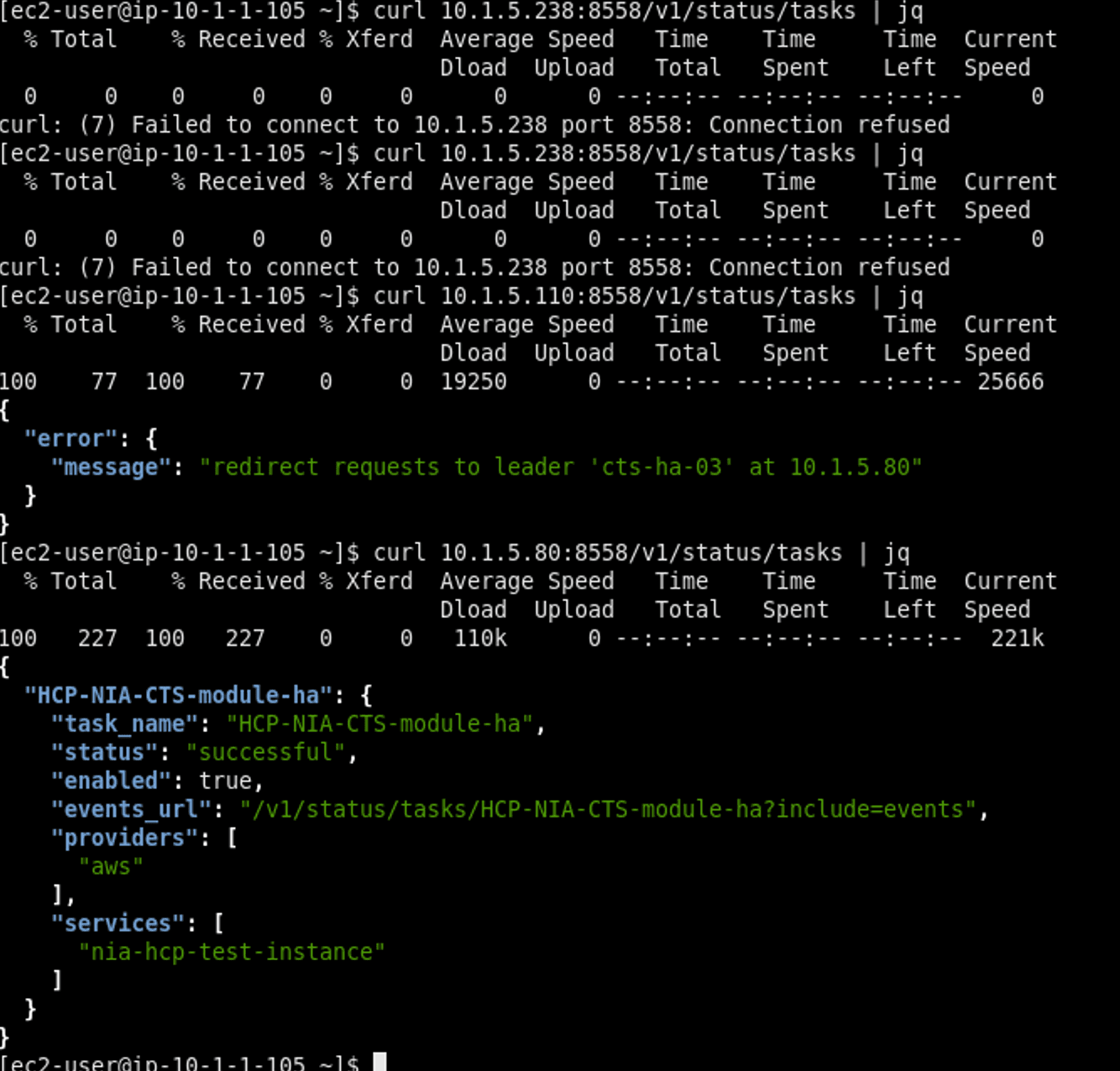

When a leader instance loses leadership (e.g., the instance goes down), a failover occurs. All follower instances in the HA cluster then compete equally for leadership by trying to acquire the lock. The first follower to acquire it becomes the new leader; the others remain followers.
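The failover race can be simulated with threads. In this hypothetical Python sketch, LeaderLock stands in for the Consul KV leader key, releasing it models the old leader’s session expiring, and a barrier starts every follower at the same instant. Because try_acquire is an atomic check-and-set, exactly one follower wins regardless of scheduling order.

```python
import threading
from typing import Dict, List, Optional


class LeaderLock:
    """Thread-safe stand-in for the Consul KV leader key (illustrative only)."""

    def __init__(self) -> None:
        self._mutex = threading.Lock()
        self.holder: Optional[str] = None

    def try_acquire(self, instance_id: str) -> bool:
        # Atomic check-and-set: succeeds only while nobody holds the lock.
        with self._mutex:
            if self.holder is None:
                self.holder = instance_id
                return True
            return False

    def release(self) -> None:
        with self._mutex:
            self.holder = None


def failover(lock: LeaderLock, followers: List[str]) -> Dict[str, bool]:
    """Free the failed leader's lock and let all followers race for it."""
    lock.release()  # models the old leader's session expiring
    if not followers:
        return {}
    results: Dict[str, bool] = {}
    start = threading.Barrier(len(followers))

    def compete(instance_id: str) -> None:
        start.wait()  # every follower tries at the same moment
        results[instance_id] = lock.try_acquire(instance_id)

    threads = [threading.Thread(target=compete, args=(f,)) for f in followers]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


lock = LeaderLock()
lock.try_acquire("cts-ha-01")  # original leader
results = failover(lock, ["cts-ha-02", "cts-ha-03"])
rejoined = lock.try_acquire("cts-ha-01")  # recovered instance stays a follower
```

The last line mirrors the recovery behavior described at the end of this section: a returning instance finds the lock held and does not displace the new leader.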

Here is a sample of what that looks like from the outside: the same user or service now gets an error when querying the failed leader, but querying one of the other instances returns the expected response:

Here is what happens behind the scenes. The new leader, cts-ha-03, logs the following, indicating that it is now the leader:

```text
2022-08-26T23:43:55.911Z [INFO] ha: attempting to acquire leadership lock: lock_path=cts/nia-ha-hrs-cluster/leader
2022-08-27T00:06:19.751Z [INFO] ha: acquired leadership lock: id=cts-ha-03
```

It then runs through all tasks in once-mode, logs any errors, and continues to operate as the cluster’s leader:

```text
2022-08-27T00:06:19.754Z [INFO] ctrl: executing all tasks once through
2022-08-27T00:06:19.754Z [INFO] ctrl: running task once: task_name=HCP-NIA-CTS-module-ha
2022-08-27T00:06:19.791Z [INFO] driver.terraform: retrieved 0 Terraform handlers for task: task_name=HCP-NIA-CTS-module-ha
2022-08-27T00:06:20.078Z [INFO] driver.tfc: uploading new configuration version for workspace: workspace_name=HCP-NIA-CTS-module-ha
2022-08-27T00:06:21.261Z [INFO] driver.tfc: uploaded new configuration version for workspace: config_version_id=cv-roNh1eucYw24SWga workspace_name=HCP-NIA-CTS-module-ha
```
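Once-mode, as the logs above suggest, means running every task one time before resuming normal monitoring. The Python sketch below is a hypothetical illustration of the error-handling choice described in the text: a failing task is logged and recorded, but the loop continues so the remaining tasks still run. The first two log messages mirror the CTS log lines above; the failure message and the task name hypothetical-broken-task are made up.

```python
import logging
from typing import Callable, Dict, List

logging.basicConfig(level=logging.INFO, format="[%(levelname)s] %(name)s: %(message)s")
log = logging.getLogger("ctrl")


def run_all_tasks_once(tasks: Dict[str, Callable[[], None]]) -> List[str]:
    """Run every task one time, logging failures instead of stopping.

    Returns the names of tasks that raised, so the caller can report them
    and still carry on as leader.
    """
    log.info("executing all tasks once through")
    failed: List[str] = []
    for name, task in tasks.items():
        log.info("running task once: task_name=%s", name)
        try:
            task()
        except Exception:  # one bad task must not block the others
            log.exception("task failed: task_name=%s", name)
            failed.append(name)
    return failed


def broken_task() -> None:
    raise RuntimeError("hypothetical failure")


ran: List[str] = []
failed = run_all_tasks_once({
    "hypothetical-broken-task": broken_task,
    "HCP-NIA-CTS-module-ha": lambda: ran.append("HCP-NIA-CTS-module-ha"),
})
```

Because the broken task runs first here, the usage also shows that a failure does not prevent later tasks from running.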

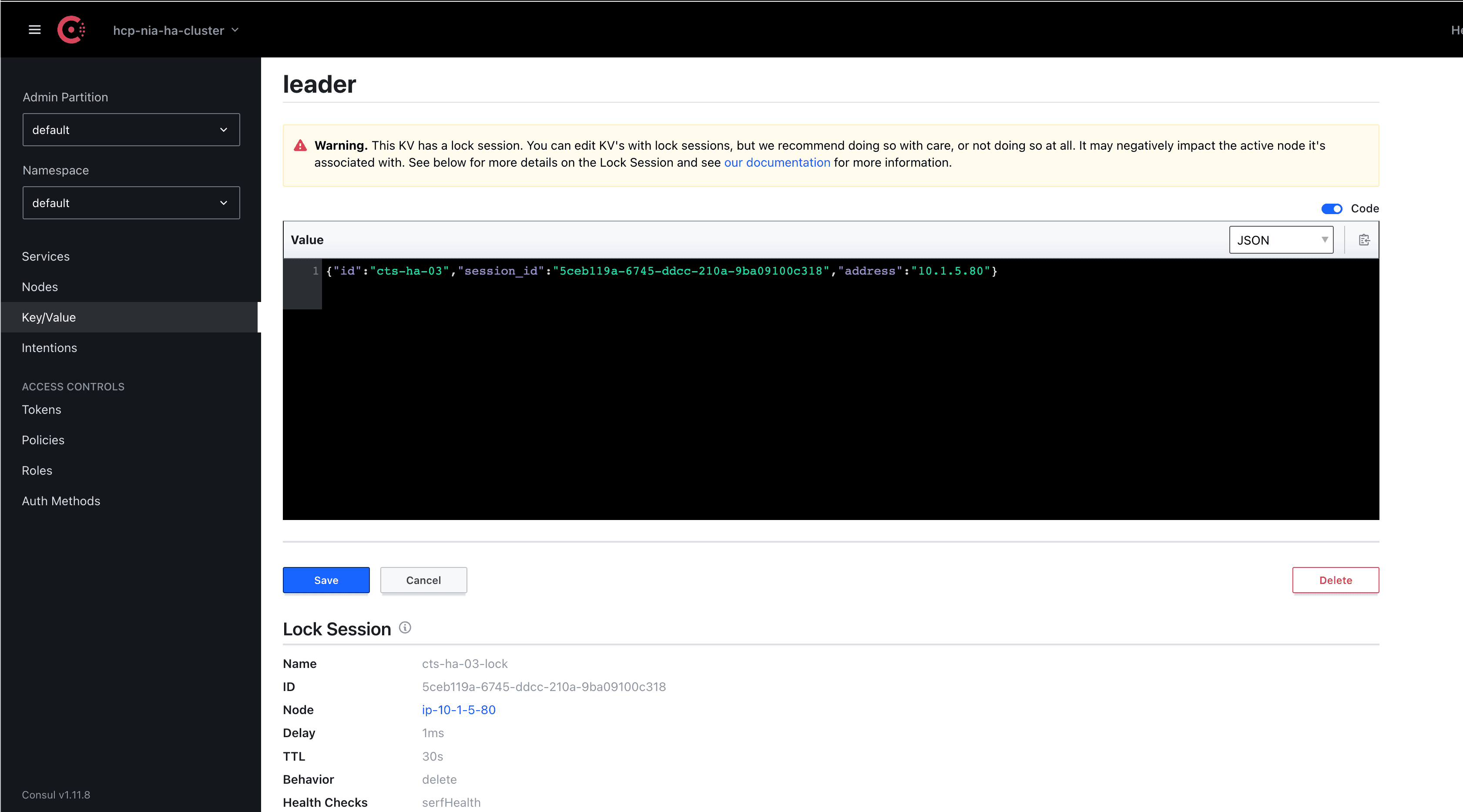

Consul KV also reflects the leader change:

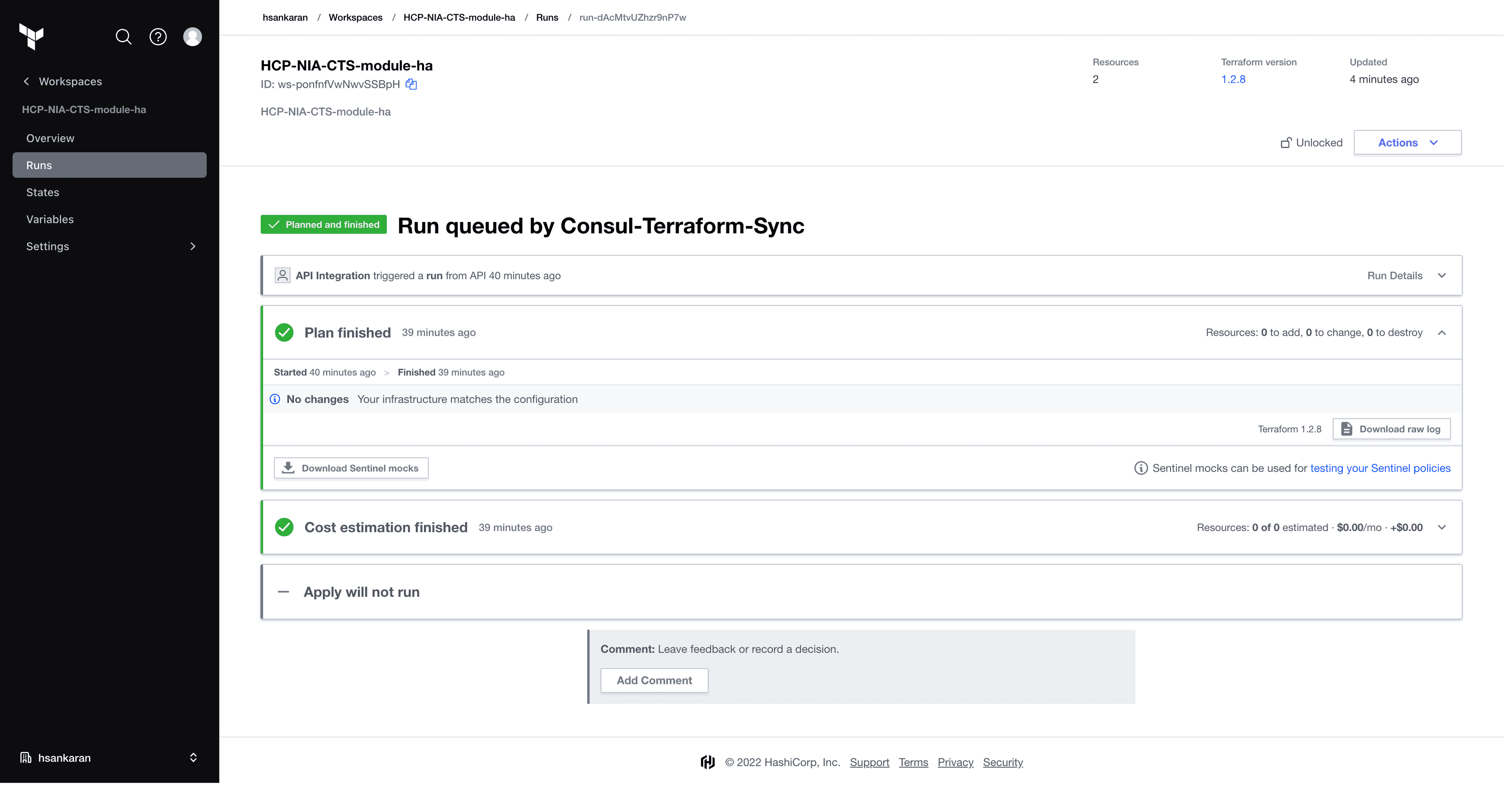

Existing tasks that were executed prior to leader failure should not be affected, and the new leader, as indicated above, will run them all in once-mode to check state. If you are using Terraform Enterprise or Terraform Cloud, you should see a NO-OP (no changes) for these runs as shown below:

Lastly, when the failed instance recovers, it does not attempt to take leadership back from the current leader; instead, it continues to operate as a follower.
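The NO-OP runs mentioned above follow from the way Terraform compares the desired configuration against its recorded state and plans only the differences. The following toy Python model of that comparison is not Terraform’s planner, and the resource names and values are hypothetical. It shows why re-running a task after failover changes nothing when the Consul catalog has not changed in the meantime.

```python
from typing import Dict, List, Tuple

# Hypothetical state: resource name -> configured value.
State = Dict[str, str]


def plan(current: State, desired: State) -> List[Tuple[str, str]]:
    """Actions needed to move current -> desired (empty list means NO-OP)."""
    actions: List[Tuple[str, str]] = []
    for name in sorted(set(current) | set(desired)):
        if name not in current:
            actions.append(("create", name))
        elif name not in desired:
            actions.append(("delete", name))
        elif current[name] != desired[name]:
            actions.append(("update", name))
    return actions


# State recorded after the old leader's last successful run.
applied: State = {"pool-member-web-1": "10.0.0.1:80", "pool-member-web-2": "10.0.0.2:80"}
# The new leader rebuilds desired state from an unchanged catalog.
rerun = plan(applied, dict(applied))
# Had the catalog changed, the same comparison would produce real work.
changed = plan(applied, {**applied, "pool-member-web-3": "10.0.0.3:80"})
```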

»Further Reading on High Availability

Please refer to our documentation on high availability for more information on storage requirements, instance compatibility, and how to view status and troubleshoot.

»Getting Started with CTS

Check out these HashiCorp Learn tutorials to help you get started with CTS:

- Network Infrastructure Automation with Consul-Terraform-Sync Intro

- Secure Consul-Terraform-Sync for Production

- Consul-Terraform-Sync and Terraform Enterprise/Cloud Integration

- Build a Custom Consul-Terraform-Sync Module

You can find more CTS tutorials in the CTS tutorial collection at learn.hashicorp.com.

We invite you to try out CTS 0.7 and give us feedback in the issue tracker. You can also stay up to date on CTS by following our public roadmap or checking the release notes. For more information about Consul, please visit the Consul product page. Finally, if you are using or evaluating CTS, we would love to get your feedback through this short survey.