At HashiConf EU 2019, we announced native Consul Connect integration in Nomad, available in a technology preview release. A beta release candidate for Nomad 0.10 that includes Consul Connect integration is now available. This blog post presents an overview of service segmentation and shows how to use features in Nomad to enable end-to-end mTLS between services through Consul Connect.

»Background

The transition to cloud environments and a microservices architecture represents a generational challenge for IT. This transition means shifting from largely dedicated servers in a private datacenter to a pool of compute capacity available on demand. The networking layer transitions from being heavily dependent on the physical location and IP address of services and applications to using a dynamic registry of services for discovery, segmentation, and composition. An enterprise IT team does not have the same control over the network or the physical locations of compute resources and must think about service-based connectivity. The runtime layer shifts from deploying artifacts to a static application server to deploying applications to a cluster of resources that are provisioned on-demand.

HashiCorp Nomad’s focus on ease of use, flexibility, and performance enables operators to deploy a mix of microservice, batch, containerized, and non-containerized applications in a cloud-native environment. Nomad already integrates with HashiCorp Consul to provide dynamic service registration and service configuration capabilities.

Another core challenge is service segmentation. East-West firewalls use IP-based rules to secure ingress and egress traffic. But in a dynamic world where services move across machines and machines are frequently created and destroyed, this perimeter-based approach is difficult to scale as it results in complex network topologies and a sprawl of short-lived firewall rules and proxy configurations.

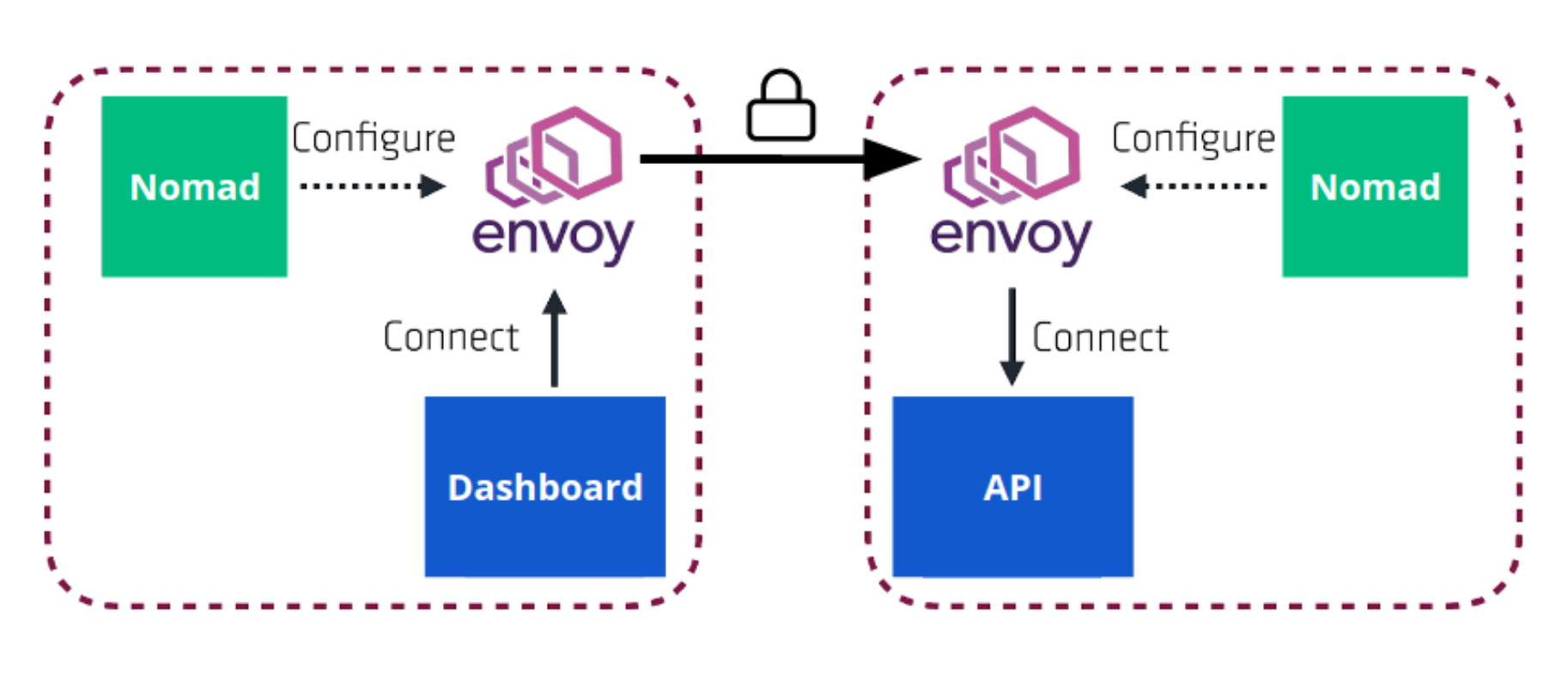

Consul Connect provides service-to-service connection authorization and encryption using mutual Transport Layer Security (mTLS). Applications can use sidecar proxies in a service mesh configuration to automatically establish TLS connections for inbound and outbound connections without being aware of Connect at all. From the application’s point of view, it uses a localhost connection to send outbound traffic, and the details of TLS termination and forwarding to the right destination service are handled by Connect.

Nomad 0.10 will extend Nomad’s Consul integration capabilities to include native Connect integration. This enables services managed by Nomad to easily opt into mTLS between services without additional code changes to the application. Developers of microservices can continue to focus on their core business logic while operating in a cloud-native environment and realizing the security benefits of service segmentation. Prior to Nomad 0.10, job specification authors had to run and manage Connect proxies themselves and did not get network-level isolation between tasks.

Nomad 0.10 introduces two new stanzas to Nomad’s job specification: connect and sidecar_service. The rest of this blog post shows how to leverage Consul Connect with an example dashboard application that communicates with an API service.

»Prerequisites

»Consul

Connect integration with Nomad requires Consul 1.6 or later. The Consul agent can be run in dev mode with the following command:

$ consul agent -dev

»Nomad

Nomad must schedule onto a routable interface in order for the proxies to connect to each other. The following command starts a Nomad dev agent configured for Connect:

$ sudo nomad agent -dev-connect

»CNI Plugins

Nomad uses CNI plugins to configure task group networks. These plugins must be downloaded to /opt/cni/bin on the Nomad client nodes.
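For agents that are not started in dev mode, the plugin location can also be stated explicitly in the client configuration. The fragment below is a minimal sketch rather than a complete agent configuration. The file name is hypothetical, and it assumes the client stanza's cni_path option, whose default is assumed to be the /opt/cni/bin directory mentioned above.

```hcl
# client.hcl: hypothetical file name for a Nomad client agent configuration.
client {
  enabled = true

  # Directory the client searches for CNI plugin binaries.
  # Assumption: this matches the default, so setting it is only
  # needed when the plugins live somewhere else.
  cni_path = "/opt/cni/bin"
}
```

If the plugins are installed in the default directory, this setting should not be required at all.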

»Envoy

Nomad launches and manages Envoy, which runs alongside applications that opt into Connect integration. Envoy acts as a proxy to provide secure communication with other applications in the cluster. Nomad launches Envoy using its official Docker container.

Also, note that the Connect integration in 0.10 works only in Linux environments.

»Example Overview

The example in this blog post enables secure communication between a web application and an API service. The web application and the API service are run and managed by Nomad. Nomad additionally configures Envoy proxies to run alongside these applications. The API service is a simple microservice that increments a count every time it is invoked. It then returns the current count as JSON. The web application is a dashboard that displays the value of the count.

»Architecture Diagram

The following Nomad architecture diagram illustrates the flow of network traffic between the dashboard web application and the API microservice. As shown below, traffic originating from the dashboard to the API is proxied through Envoy and secured via mTLS.

»Networking Model

Prior to Nomad 0.10, Nomad’s networking model optimized for simplicity by running all applications in host networking mode. This means that applications running on the same host could see each other and communicate with each other over localhost.

In order to support security features in Consul Connect, Nomad 0.10 introduces network namespace support. This is a new network model within Nomad where each task group is a single network endpoint and its tasks share a network namespace. This is analogous to a Kubernetes Pod. In this model, tasks launched in the same task group share a network stack that is isolated from the host where possible. This means the local IP of the task will be different from the IP of the client node. Users can also configure a port map to expose ports through the host if they wish.

»Configuring Network Stanza

Nomad’s network stanza is now valid at the task group level in addition to the resources stanza of a task. The network stanza also gains a mode option, which tells the client what network mode to run in. The following network modes are available:

- “none” - Task group will have an isolated network without any network interfaces.

- “bridge” - Task group will have an isolated network namespace with an interface that is bridged with the host.

- “host” - Each task will join the host network namespace and a shared network namespace is not created. This matches the behavior in Nomad 0.9.

Additionally, Nomad’s port stanza now includes a new “to” field. This field configures which port inside the allocation or task the host port maps to. With bridge networking mode, and the network stanza at the task group level, all tasks in the same task group share the network stack, including interfaces, routes, and firewall rules. This allows Connect-enabled applications to bind only to localhost within the shared network stack, and use the proxy for ingress and egress traffic.
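As a sketch of the “to” field on its own, the fragment below omits the static value, so the host port would be chosen by Nomad rather than reserved in advance. The label "http" and port 9002 are illustrative values borrowed from the dashboard example in this post, not requirements.

```hcl
network {
  mode = "bridge"

  # No "static" value: Nomad assigns a free host port when the
  # allocation is placed.
  port "http" {
    # Traffic arriving on the assigned host port is forwarded to
    # port 9002 inside the task group's network namespace.
    to = 9002
  }
}
```

A dynamic host port avoids collisions when several allocations land on the same client, at the cost of the address not being known ahead of time.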

The following is a minimal network stanza for the API service in order to opt into Connect.

    network {
      mode = "bridge"
    }

The following is the network stanza for the web dashboard application, illustrating the use of port mapping.

    network {
      mode = "bridge"

      port "http" {
        static = 9002
        to     = 9002
      }
    }

»Configuring Connect in the API service

In order to enable Connect in the API service, we need to specify a network stanza at the group level and use the connect stanza inside the service definition. The following snippet illustrates this.

    group "api" {
      network {
        mode = "bridge"
      }

      service {
        name = "count-api"
        port = "9001"

        # An empty sidecar_service block opts this service into Connect.
        connect {
          sidecar_service {}
        }
      }

      task "web" {
        driver = "docker"

        config {
          image = "hashicorpnomad/counter-api:v1"
        }
      }
    }

Nomad will run Envoy in the same network namespace as the API service, and register it as a proxy with Consul Connect.

»Configuring Upstreams

In order to enable Connect in the web application, we will need to configure the network stanza at the task group level. We will also need to provide details about upstream services it communicates with, which is the API service. More generally, upstreams should be configured for any other service that this application depends on.

The following snippet illustrates this.

    group "dashboard" {
      network {
        mode = "bridge"

        port "http" {
          static = 9002
          to     = 9002
        }
      }

      service {
        name = "count-dashboard"
        port = "9002"

        connect {
          sidecar_service {
            proxy {
              upstreams {
                destination_name = "count-api"
                local_bind_port  = 8080
              }
            }
          }
        }
      }

      task "dashboard" {
        driver = "docker"

        env {
          COUNTING_SERVICE_URL = "http://${NOMAD_UPSTREAM_ADDR_count_api}"
        }

        config {
          image = "hashicorpnomad/counter-dashboard:v1"
        }
      }
    }

In the above example, the static = 9002 parameter asks the Nomad scheduler to reserve port 9002 on a host network interface. The to = 9002 parameter forwards that host port to port 9002 inside the network namespace. This allows you to connect to the web frontend in a browser by visiting http://<host_ip>:9002.

The web frontend connects to the API service via Consul Connect. The upstreams stanza defines the remote service to access (count-api) and the port on which to expose that service inside the network namespace (8080). The web frontend is configured to communicate with the API service through an environment variable, $COUNTING_SERVICE_URL. The upstream's address is interpolated into that environment variable from ${NOMAD_UPSTREAM_ADDR_count_api}. In this example, $COUNTING_SERVICE_URL will point at the proxy's listener on localhost port 8080, prefixed with http://.

With this setup, the dashboard application communicates over localhost to the proxy’s upstream local bind port in order to communicate with the API service. The proxy handles mTLS communication using Consul to route traffic to the correct destination IP where the API service runs. The Envoy proxy on the other end terminates TLS and forwards traffic to the API service listening on localhost.
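An application that depends on more than one service would declare one upstreams block per dependency, each with its own local bind port. The fragment below is a sketch of the dashboard's service stanza under that assumption. The second service name, "count-cache", and port 8081 are hypothetical and are not part of the example in this post.

```hcl
service {
  name = "count-dashboard"
  port = "9002"

  connect {
    sidecar_service {
      proxy {
        # Upstream used by the example in this post.
        upstreams {
          destination_name = "count-api"
          local_bind_port  = 8080
        }

        # Hypothetical second dependency, shown only to illustrate
        # repeating the block. Each upstream needs a distinct
        # local_bind_port inside the shared network namespace.
        upstreams {
          destination_name = "count-cache"
          local_bind_port  = 8081
        }
      }
    }
  }
}
```

Following the naming pattern of NOMAD_UPSTREAM_ADDR_count_api, the second upstream's address would presumably be available to tasks as NOMAD_UPSTREAM_ADDR_count_cache.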

»Job Specification

The following job specification contains both the API service and the web dashboard. Save the contents to a file named connect.nomad, then run it with nomad run connect.nomad.

    job "countdash" {
      datacenters = ["dc1"]

      group "api" {
        network {
          mode = "bridge"
        }

        service {
          name = "count-api"
          port = "9001"

          connect {
            sidecar_service {}
          }
        }

        task "web" {
          driver = "docker"

          config {
            image = "hashicorpnomad/counter-api:v1"
          }
        }
      }

      group "dashboard" {
        network {
          mode = "bridge"

          port "http" {
            static = 9002
            to     = 9002
          }
        }

        service {
          name = "count-dashboard"
          port = "9002"

          connect {
            sidecar_service {
              proxy {
                upstreams {
                  destination_name = "count-api"
                  local_bind_port  = 8080
                }
              }
            }
          }
        }

        task "dashboard" {
          driver = "docker"

          env {
            COUNTING_SERVICE_URL = "http://${NOMAD_UPSTREAM_ADDR_count_api}"
          }

          config {
            image = "hashicorpnomad/counter-dashboard:v1"
          }
        }
      }
    }

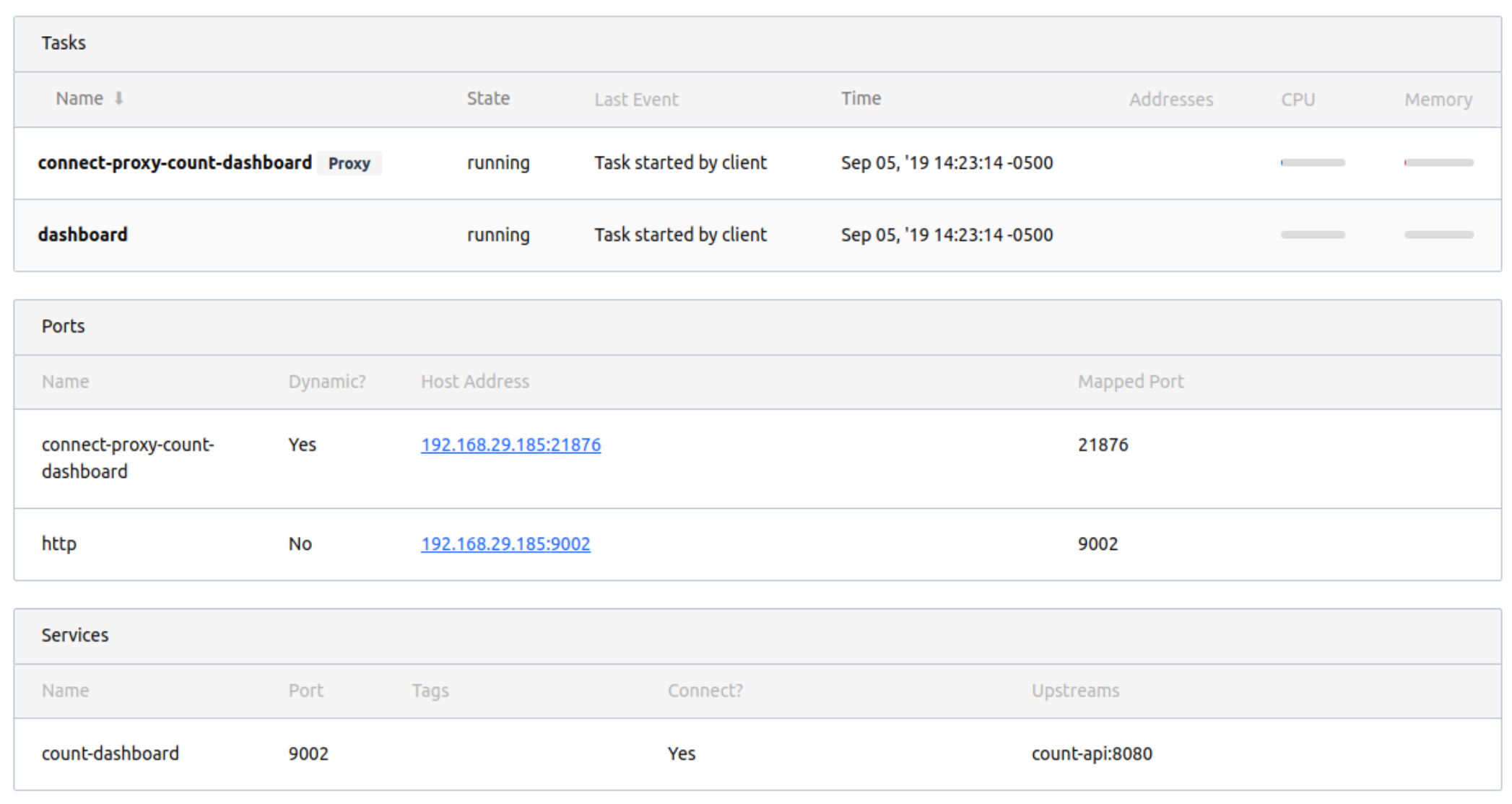

»UI

The web UI in Nomad 0.10 shows details relevant to Connect integration whenever applicable. The allocation details page now shows information about each service that is proxied through Connect.

In the above screenshot from the allocation details page for the dashboard application, the UI shows the Envoy proxy task. It also shows the service (count-dashboard) as well as the name of the upstream (count-api).

»Limitations

- The Consul binary must be present in Nomad's $PATH to run the Envoy proxy sidecar on client nodes.

- Consul Connect Native is not yet supported.

- Consul Connect HTTP and gRPC checks are not yet supported.

- Consul ACLs are not yet supported.

- Only the Docker, exec, and raw_exec drivers support network namespaces and Connect.

- Variable interpolation for group services and checks is not yet supported.

»Conclusion

In this blog post, we shared an overview of native Consul Connect integration in Nomad. This enables job specification authors to easily opt in to mTLS across services. For more information, see the Consul Connect guide.