Today at HashiConf Global, we introduced a number of significant enhancements for HashiCorp Consul, our service networking solution that helps users discover and securely connect any application. These updates simplify deployment, boost flexibility, and improve operational efficiency:

- Consul 1.14 (beta)

  - Consul dataplane: a new simplified deployment model

  - Service mesh traffic management across cluster peers

  - Service failover enhancements

- Consul mesh service support for AWS Lambda

  - Consul mesh services invoking AWS Lambda functions (GA)

  - AWS Lambda functions accessing Consul mesh services (beta)

- Consul service mesh on Windows virtual machines (beta)

- Global visibility and controls in HCP Consul for self-managed and HCP-managed Consul deployments (covered in "Introducing a New HCP-Based Management Plane for Consul")

Let’s take a closer look at all these announcements:

»Consul 1.14 (Beta)

The latest beta release of Consul, version 1.14, adds a variety of enhancements designed to improve operational efficiency, scalability, and resiliency.

»Simplified Deployments of Consul Service Mesh with Consul Dataplane

Consul 1.14 introduces a simplified deployment architecture that eliminates the need to deploy node-level Consul clients on Kubernetes. Instead, a new Consul dataplane component is injected as a sidecar into each Kubernetes workload pod. The dataplane container image packages both Envoy and the Consul dataplane binary.

»Before Consul Dataplane

Consul's original architecture relies on deploying many Consul clients along with Consul servers. In a more traditional environment where a user has VM-centric workloads, a client agent is deployed to each VM and all the services running on that VM are registered with the client agent. The client agent sees itself as the source of truth for the services running on its node and ensures the server's catalog is kept in sync with its registry.

The client agents and servers all join a single gossip pool, which allows quick detection of node failures. When a node fails, the Consul control plane can return only the remaining healthy services in response to service discovery requests, or dynamically alter service mesh routing rules to steer traffic away from the services on the unhealthy node.

This architecture worked well in the past, but as new deployment patterns for Consul have emerged, it has become a source of friction for deploying Consul successfully.

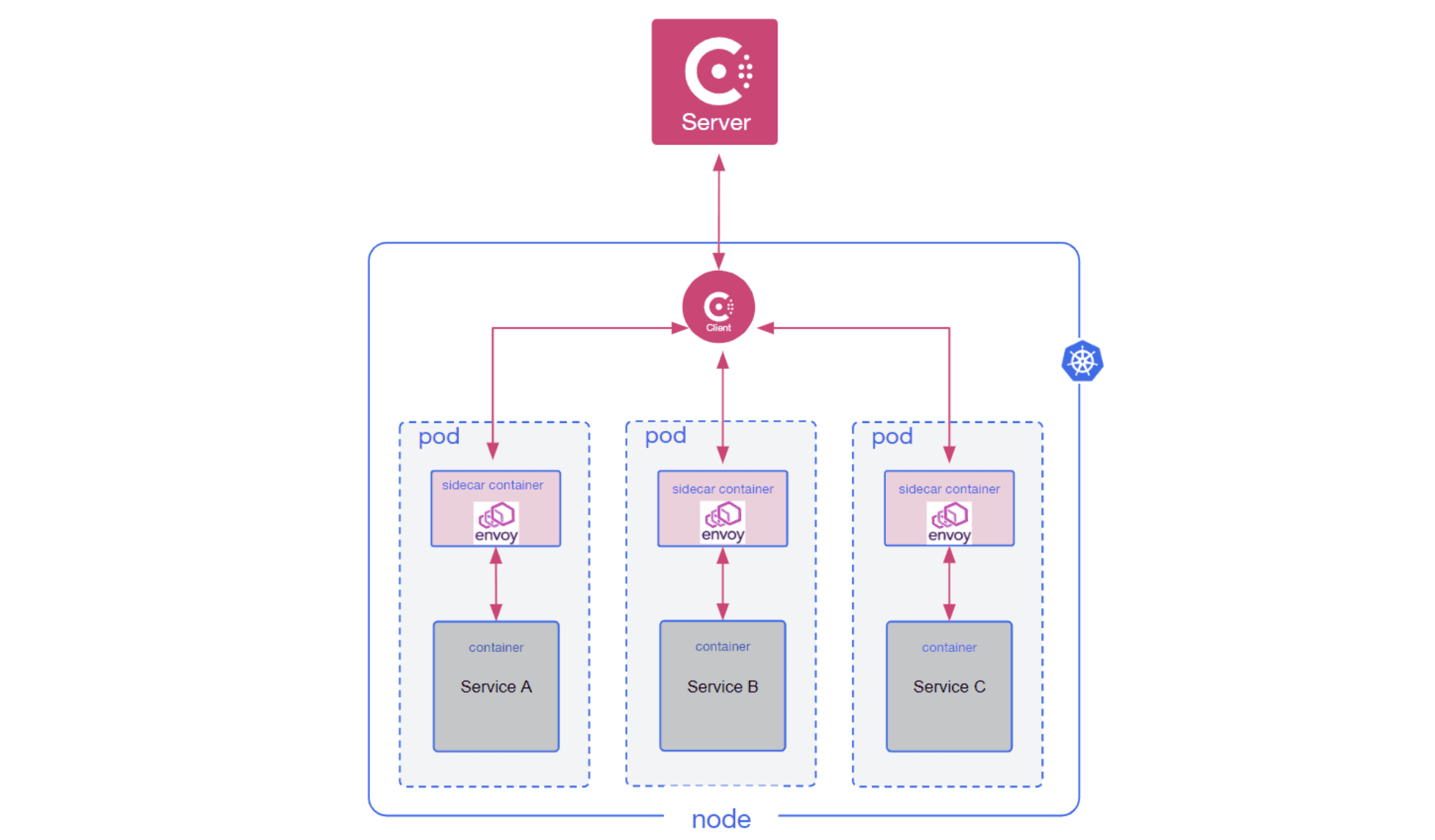

A high-level architectural diagram of Consul on Kubernetes prior to Consul 1.14.

On Kubernetes, a Consul client is deployed on each node and is responsible for registering services and the pods that back each service. As part of service registration, Envoy sidecars are injected into pods at deployment time to enable mTLS and traffic management capabilities for the mesh. Although running a client per node works well for most use cases, users occasionally encountered friction, either because restrictions on their Kubernetes clusters made it difficult to run clients reliably on all nodes, or because the gossip protocol requires low latency between members of a gossip pool.

»After Consul Dataplane

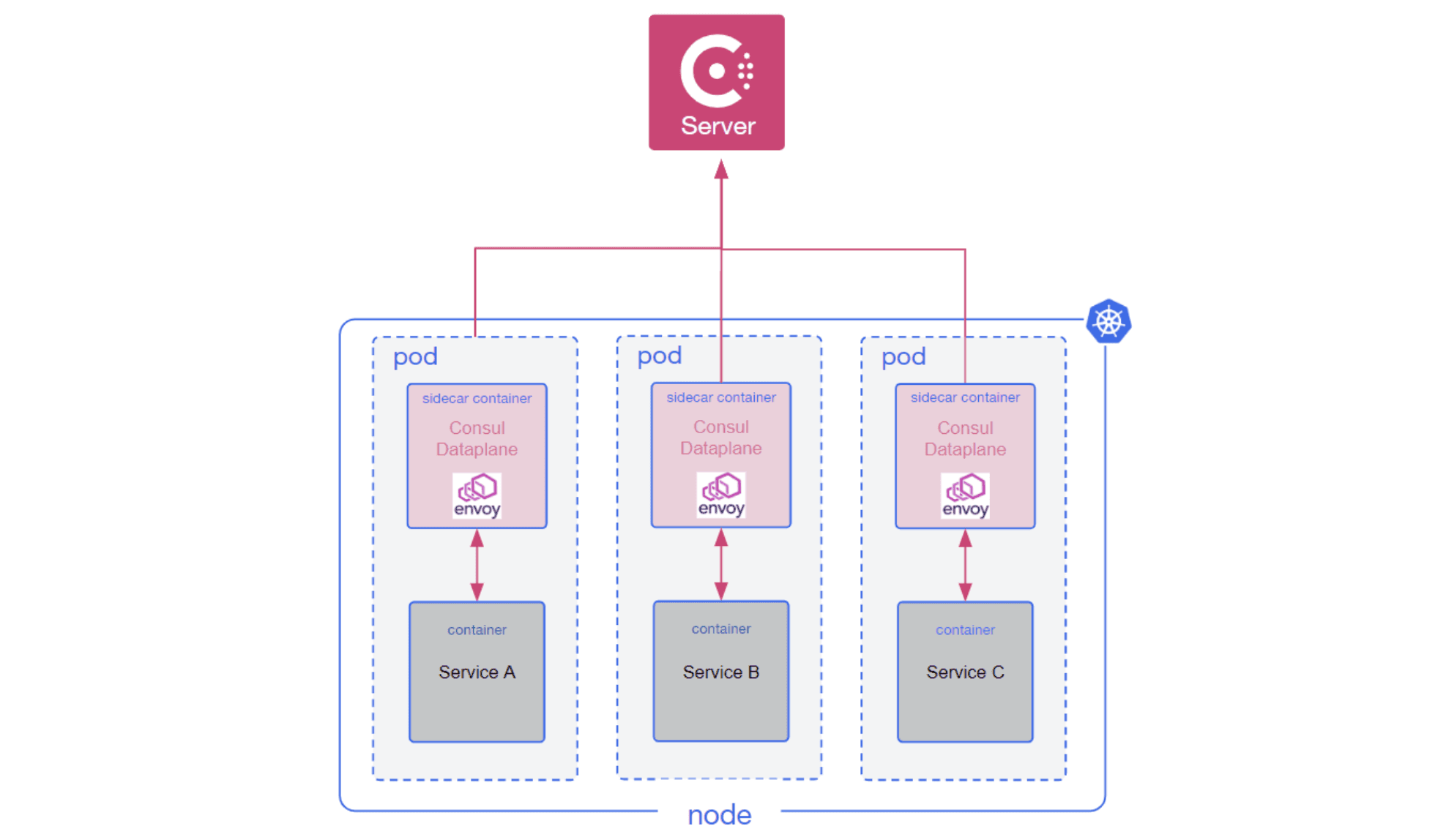

Starting with Consul 1.14, Consul on Kubernetes will deploy Consul dataplane as a sidecar container alongside your workload’s pods, removing the need for running Consul clients using a DaemonSet. The Consul dataplane component will primarily be responsible for discovering and watching the Consul servers that are available to the pod and managing the initial Envoy bootstrap configuration and execution of the process.

Consul dataplane's design removes the need to run Consul client agents. Removing Consul client agents brings multiple benefits:

- Fewer network requirements: Consul client agents use multiple network protocols and require bi-directional communication between Consul client and server agents for the gossip protocol. Consul dataplane does not use gossip and instead uses a single gRPC connection out to the Consul servers. This means Consul doesn’t need peer networks between the Consul servers and the runtimes running the workloads, improving the overall time to value for users deploying Consul service mesh when Consul servers are managed by HCP Consul.

- Simplified setup: Consul dataplane does not need to be configured with a gossip encryption key, and operators do not need to distribute separate access control list (ACL) tokens for Consul client agents.

- Additional runtime support: Consul dataplane runs as a sidecar alongside your workload, making it easier to support various runtimes. For example, it runs on serverless platforms that do not provide direct access to a host machine for deploying a Consul client. On Kubernetes, this allows you to run Consul on Kubernetes clusters such as Google Kubernetes Engine (GKE) Autopilot and Amazon Elastic Kubernetes Service (Amazon EKS) on AWS Fargate.

- Easier upgrades: Deploying new Consul versions no longer requires upgrading Consul client agents. Consul dataplane provides better compatibility across Consul server versions, so you only need to upgrade the Consul servers to take advantage of new Consul features.

This new architecture simplifies overall deployment and removes the Consul client agent from the default deployment of Consul on Kubernetes. Notably, it also removes the requirement to use hostPort on Kubernetes, which was needed to reliably communicate across Consul clients. For deployments outside of Kubernetes, Consul clients will continue to be supported for the foreseeable future for both service discovery and service mesh use cases.

A high-level architectural diagram of Consul on Kubernetes in Consul 1.14.

»Mesh Traffic Management Across Cluster Peers

Consul service mesh allows operators to configure advanced traffic management capabilities such as canary or blue/green deployments, A/B testing, and service failover for high availability. These capabilities are configured using service splitters, routers, and resolvers. Consul supports these traffic management capabilities across WAN-federated datacenters, but we also saw a desire for a more flexible support model.
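As a rough illustration of a canary rollout, a service-splitter configuration entry might look like the sketch below. The service name web and the subsets v1 and v2 are hypothetical, and the sketch assumes that those subsets are already defined in a matching service-resolver entry and that the service uses an HTTP-based protocol.

```hcl
# Hypothetical canary split for a service named "web".
# Assumes subsets "v1" and "v2" are defined in a service-resolver for "web".
Kind = "service-splitter"
Name = "web"
Splits = [
  {
    Weight        = 90 # percent of traffic kept on the current version
    ServiceSubset = "v1"
  },
  {
    Weight        = 10 # percent of traffic sent to the canary
    ServiceSubset = "v2"
  },
]
```

If the entry were saved as web-splitter.hcl, it could be applied with consul config write web-splitter.hcl. The weights must add up to 100.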

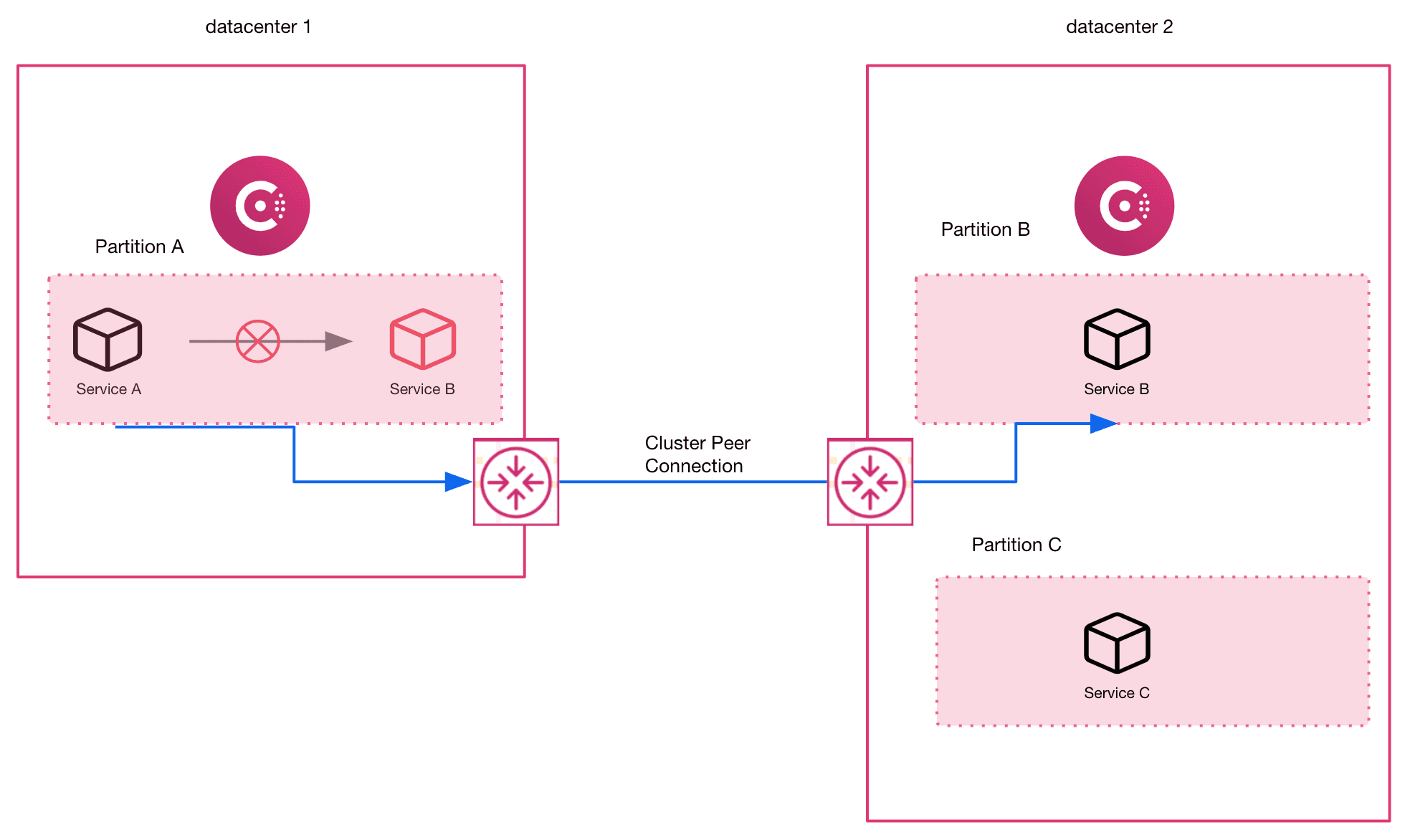

In Consul 1.13, we introduced cluster peering in beta as an alternative to WAN federation for multi-datacenter deployments. Cluster peering is much more flexible than WAN federation, but it did not support cross-datacenter traffic management in Consul 1.13. As of Consul 1.14, cluster peering now supports both cross-datacenter and cross-partition traffic management.

The image below shows service failover to a cluster-peered partition in another datacenter:

The advantages of cluster peering compared to WAN federation include:

- Each cluster (datacenter or Enterprise admin partition) is independent, providing operational autonomy

- Full control over which services can be accessed from which peered clusters

- Support for complex datacenter topologies, including hub-and-spoke

- Support for use of Enterprise admin partitions in multiple connected datacenters
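Control over which services a peer can reach is expressed through an exported-services configuration entry. The following is a minimal sketch, assuming a hypothetical service named api and a peering connection named cluster-02; neither name comes from this announcement.

```hcl
# Export the hypothetical "api" service to one specific peer only.
# "cluster-02" is an assumed peering connection name.
Kind = "exported-services"
Name = "default" # the partition name; "default" outside of Consul Enterprise
Services = [
  {
    Name = "api"
    Consumers = [
      {
        Peer = "cluster-02"
      }
    ]
  }
]
```

Services that are not listed in an entry like this stay invisible to the peer, which is what keeps each cluster operationally independent.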

For more information on cluster peering, please refer to the Consul 1.13 release blog or cluster peering documentation. Visit the cluster peering tutorial to learn how to federate Consul datacenters and connect services across peered service meshes. For more information on configuring cross-datacenter traffic management, see the service resolver documentation’s failover and redirect capabilities.
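For instance, a service resolver could redirect every request for a service to the instances exported by a cluster peer. The sketch below assumes a hypothetical service named web and a peering connection named cluster-02, and that the peer has already exported web to the local cluster.

```hcl
# Send all traffic for the local "web" service to the "web" service
# exported by the peer. Both names are assumptions for illustration.
Kind = "service-resolver"
Name = "web"
Redirect {
  Service = "web"
  Peer    = "cluster-02"
}
```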

»Service Failover Enhancements

Critical services should always be available, even when infrastructure components fail. Achieving high availability involves:

- Deploying instances of a service across fault domains, such as multiple infrastructure regions and zones

- Configuring service failover; when healthy instances of a service are not available locally, traffic will fail over to instances elsewhere

Consul supports configuring service failover, enabling you to create high availability deployments for both service mesh and service discovery use cases. Consul 1.14 includes several failover enhancements.

»General Failover Enhancements

Consul 1.14 further enhances the flexibility of service failover in all Consul deployments, enabling operators to address more-complex failover scenarios in which service failover targets may:

- Exist in WAN-federated datacenters, cluster peers, and local admin partitions

- Have a different service name, service subset, or namespace than the unhealthy local service
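A failover policy of this kind can be sketched as a service-resolver entry with an ordered list of targets. The names api, api-backup, and cluster-02 below are hypothetical, and the peer target assumes that the peer exports a service with the same name.

```hcl
# When no healthy local "api" instances remain, try the targets in order.
Kind           = "service-resolver"
Name           = "api"
ConnectTimeout = "15s"
Failover = {
  # "*" applies the policy to every subset of the service.
  "*" = {
    Targets = [
      { Service = "api-backup" }, # a differently named local service
      { Peer = "cluster-02" },    # the same service in a cluster peer
    ]
  }
}
```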

»Failover Enhancements Exclusive to Cluster Peering

In Consul 1.14, service mesh failover to cluster peers offers improved resiliency compared to failover to WAN-federated datacenters.

Failover involves sending traffic to a different pool of service instances. With WAN federation failover, the control plane triggers service failover by reconfiguring Envoy proxies in the mesh to use the next failover pool, meaning failover depends on control plane availability. In Consul 1.14, cluster peer failover is triggered by Envoy proxies themselves, providing increased failover resiliency.

»Consul Support for AWS Lambda

Consul service mesh now has first-class support for AWS Lambda functions. Many companies operating in the cloud are interested in serverless technologies, but a potential barrier to adoption of serverless is the difficulty of integrating these workloads into the service mesh.

Ideally, serverless workloads should be managed like other workloads in the mesh, so they can take advantage of features like identity-based security, traffic management, consistent workflows, and operational insights. The Consul-Lambda integration addresses two main workflows:

- Mesh services calling AWS Lambda functions (“mesh-to-Lambda”)

- AWS Lambda functions calling mesh services (“Lambda-to-mesh”)

In June we announced the mesh-to-Lambda public beta. Today we are happy to announce that mesh-to-Lambda support is generally available and Lambda-to-mesh support is now in public beta.

Lambda communication in both directions shares the same reliability, observability, and security benefits that the Consul service mesh provides. For example, you can use Consul intentions or traffic management on Lambda services the same way you would use them with other services.
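As a small sketch of the intentions case, the entry below would allow a mesh service to call a Lambda function that is registered as a Consul service. The service names lambda and web are assumptions chosen for illustration.

```hcl
# Allow the hypothetical "web" service to call the Lambda function
# registered in Consul under the (assumed) service name "lambda".
Kind = "service-intentions"
Name = "lambda"
Sources = [
  {
    Name   = "web"
    Action = "allow"
  }
]
```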

»Integrating AWS Lambda Functions

The Consul Lambda service registrator automates the registration of AWS Lambda functions into the service mesh. You deploy the registrator with the Consul Lambda registrator Terraform module.

The Lambda registrator automatically registers all Lambda functions that you have tagged with serverless.consul.hashicorp.com/v1alpha1/lambda/enabled: true into the service mesh. This happens in four steps:

- Whenever you create, tag, or untag Lambda functions, AWS logs the events into CloudTrail.

- EventBridge routes the events to the Lambda registrator.

- The Lambda registrator registers the Lambda as a Consul service and stores a service-defaults configuration entry.

- The Lambda registrator retrieves the information needed to make mTLS connections into the mesh from Consul and stores this data, encrypted, in AWS Systems Manager Parameter Store.

»Consul Mesh Services Invoking AWS Lambda Functions (GA)

Applications that call AWS Lambda functions can do so through a terminating gateway or directly through their Envoy sidecar proxy.

Once the Lambda function is registered, set the Lambda service as an upstream for any service that will call it. The calling service can then reach it like any other mesh service:

    service {
      …
      proxy = {
        …
        # Make the Lambda service reachable on localhost:1234 for this service.
        upstreams {
          local_bind_port  = 1234
          destination_name = "lambda"
        }
      }
    }

»AWS Lambda Functions Accessing Consul Mesh Services (Beta)

Lambda functions that call into the mesh use the new Consul Lambda extension to make mTLS requests to services through a mesh gateway. The extension runs within the execution environment of your Lambda function and performs three main steps:

- The extension retrieves the mTLS data that the Lambda registrator stores in AWS Systems Manager Parameter Store. The extension periodically updates the mTLS data to ensure that it stays in sync with Consul.

- The extension creates a lightweight TCP proxy that listens on localhost for each upstream port defined in CONSUL_SERVICE_UPSTREAMS.

- For each request received from the Lambda function on its upstream listeners, the extension establishes an mTLS connection with, and proxies the request through, the mesh gateway configured by CONSUL_MESH_GATEWAY_URI.

To use the Consul Lambda extension, you add it to your Lambda function as a layer. Then you set environment variables on your function to configure it. Your function doesn’t need to know anything about the extension, so there should be no impact to your existing code.

You can download the Consul Lambda extension from the Consul Lambda releases page on the HashiCorp releases website.

The following Terraform configuration creates a Lambda layer from the consul-lambda-extension zip file and a Lambda function that uses that layer:

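    resource "aws_lambda_layer_version" "consul_lambda_extension" {
      layer_name = "consul-lambda-extension"
      # "version" is a reserved variable name in Terraform, so this example uses
      # a differently named variable (an illustrative name) for the release version.
      filename = "consul-lambda-extension_${var.consul_lambda_extension_version}_linux_amd64.zip"
    }

    resource "aws_lambda_function" "lambda_function" {
      function_name = "lambda-function"
      ...
      # This tag tells the Lambda registrator to register the function.
      tags = { "serverless.consul.hashicorp.com/v1alpha1/lambda/enabled" = "true" }
      # Attach the extension to the function as a layer.
      layers = [aws_lambda_layer_version.consul_lambda_extension.arn]
      environment {
        variables = {
          # Parameter Store prefix under which the registrator stores mTLS data.
          CONSUL_EXTENSION_DATA_PREFIX = "/lambda-data"
          # Mesh gateway that the extension proxies requests through.
          CONSUL_MESH_GATEWAY_URI = "mesh-gateway.consul:8443"
          # Upstream service and the local port the extension listens on.
          CONSUL_SERVICE_UPSTREAMS = "example-service:1234"
        }
      }
    }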

To call the example-service upstream, the function makes requests to localhost:1234.
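Because the extension reads its mTLS data from AWS Systems Manager Parameter Store, the function's execution role needs permission to read parameters under the configured prefix. The Terraform below is a hedged sketch of such a policy, not an official configuration: the role aws_iam_role.lambda and the variables var.region and var.account_id are hypothetical, and ssm:GetParameter is an assumption about which API call the extension makes. The wildcard is used because the parameter layout beneath the prefix is not described here.

```hcl
# Hypothetical names: aws_iam_role.lambda, var.region, var.account_id.
# Grants read access to parameters stored under the /lambda-data prefix.
data "aws_iam_policy_document" "read_consul_mtls_data" {
  statement {
    effect  = "Allow"
    actions = ["ssm:GetParameter"] # assumed action; adjust to what the extension requires
    resources = [
      "arn:aws:ssm:${var.region}:${var.account_id}:parameter/lambda-data/*",
    ]
  }
}

# Attach the policy inline to the function's execution role.
resource "aws_iam_role_policy" "read_consul_mtls_data" {
  name   = "read-consul-mtls-data"
  role   = aws_iam_role.lambda.id
  policy = data.aws_iam_policy_document.read_consul_mtls_data.json
}
```

If the parameters are encrypted with a customer-managed KMS key, the role would likely also need permission to decrypt with that key.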

»Consul Service Mesh on Windows VMs (Beta)

Windows workloads make up a sizable proportion of critical enterprise applications. As security requirements evolve and network topologies grow more complicated, it's increasingly important to integrate Windows workloads with a service mesh. To this end, we are pleased to announce beta support for Consul service mesh on Windows.

With this support, your Windows workloads can take advantage of Consul's zero trust security, observability, and traffic-management capabilities. In addition, Consul is the only service mesh to support both Windows and Linux, enabling network control from a single pane of glass across hybrid workloads.

This capability is available in beta starting with Consul 1.12 on Windows Server 2019 VMs, with Envoy versions 1.22.2, 1.21.4, 1.20.6, and 1.19.5. We plan to add this capability to newer versions of Consul as beta testing continues. The beta version supports all Consul open source features for a single Consul datacenter.

Visit the Consul Windows workloads tutorial to learn how to start using Consul service mesh on Windows.

»Next Steps

We are excited for users to try these new Consul updates and further expand their service mesh implementations. The Consul 1.14 beta includes enhancements for all types of Consul users leveraging the product for service discovery and service mesh across multiple environments, including serverless functions. Our goal with Consul is to enable a consistent enterprise-ready control plane to discover and securely connect any application.

- Learn more in the Consul documentation.

- Get started with the Consul 1.14 beta by installing the latest beta Helm chart provided in our Consul Kubernetes beta documentation.