HashiCorp and Microsoft have partnered to create Terraform modules that follow Microsoft's Azure Well-Architected Framework and best practices. In a previous blog post, we demonstrated how to accelerate AI adoption on Azure with Terraform. This post covers how to use a simple three-step process to build, secure, and enable OpenAI applications on Azure with HashiCorp Terraform and Vault.

The code for this demo is available on GitHub. The demo uses the Microsoft sample application outlined in this post, running on Microsoft Azure Kubernetes Service (AKS), to integrate with OpenAI. You can read more about how to deploy an application that uses OpenAI on AKS on the Microsoft website.

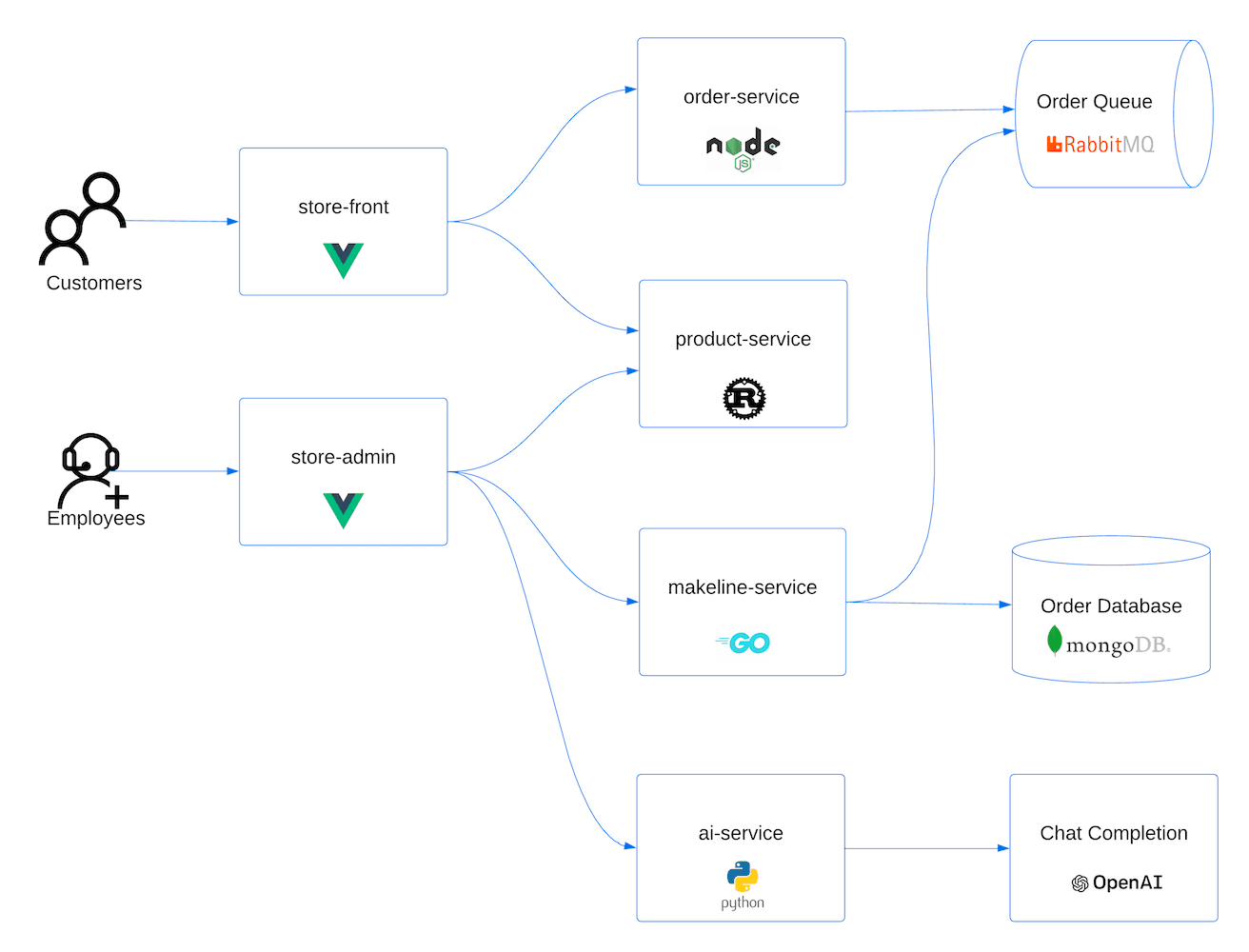

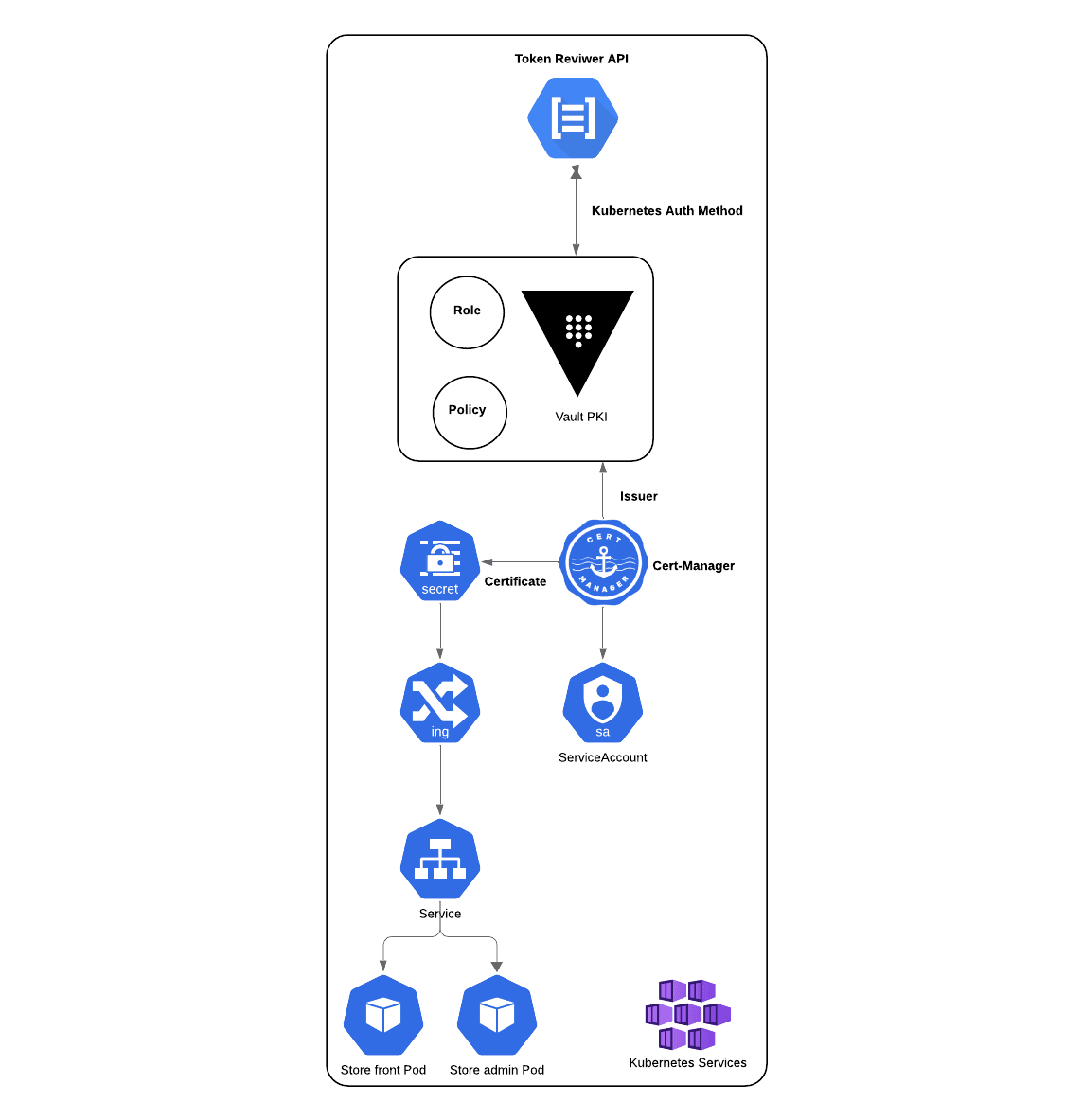

This diagram outlines the elements deployed and how they interact.

»Key considerations of AI

The rise in AI workloads is driving an expansion of cloud operations. Gartner predicts that cloud infrastructure will grow 26.6% in 2024 as organizations deploying generative AI (GenAI) services look to the public cloud. A successful AI environment, one that integrates artificial intelligence with operations, demands a focus on security, efficiency, and cost control.

»Security

Data integration, the bedrock of AI, requires not only combining diverse data sources but also safeguarding sensitive information. In this complex landscape, public key infrastructure (PKI) and robust secrets management become indispensable: they add cryptographic protection to data transactions and ensure that sensitive information is handled securely. For more information on the HashiCorp Vault solution, see our use-case page on automated PKI infrastructure.

Machine learning models used for anomaly detection, predictive analytics, and root-cause analysis improve operational efficiency and also act as sentinels against potential security threats. Automation and orchestration, using tools like HashiCorp Terraform, go beyond efficiency to become critical defenses against security vulnerabilities. Resilient architectures and vigilant monitoring let systems scale as workloads evolve without compromising security.

»Efficiency and cost control

To keep this growth under control, platform teams are increasingly adopting infrastructure as code (IaC) to improve efficiency and help control cloud costs. HashiCorp products underpin some of today’s largest AI workloads, using infrastructure as code to help eliminate idle resources and overprovisioning and to reduce infrastructure risk.

»Automation with Terraform

This post delves into specific Terraform configurations tailored for application deployment within a containerized environment. The first step looks at using IaC principles to deploy infrastructure to efficiently scale AI workloads, reduce manual intervention, and foster a more agile and collaborative AI development lifecycle on the Azure platform. The second step focuses on how to build security and compliance into an AI workflow. The final step shows how to manage application deployment on the newly created resources.

»Prerequisites

For this demo, you can use either Azure OpenAI service or OpenAI service:

- To use Azure OpenAI service, enable it on your Azure subscription using the Request Access to Azure OpenAI Service form.

- To use OpenAI, sign up on the OpenAI website.
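If you choose Azure OpenAI Service and your subscription has been granted access, you could also manage the Azure OpenAI account itself with Terraform. The block below is a minimal sketch, not part of the demo repository: it assumes the AzureRM provider and a resource group declared as `azurerm_resource_group.tf-ai-demo` (a hypothetical name), and the account name is a placeholder.

```hcl
# Hypothetical sketch: an Azure OpenAI account managed by Terraform.
# Assumes a resource group resource named azurerm_resource_group.tf-ai-demo.
resource "azurerm_cognitive_account" "openai" {
  name                = "tf-ai-demo-openai" # placeholder name
  location            = azurerm_resource_group.tf-ai-demo.location
  resource_group_name = azurerm_resource_group.tf-ai-demo.name
  kind                = "OpenAI" # Azure OpenAI Service account type
  sku_name            = "S0"
}
```

The application still needs a model deployment in that account (for example, managed with `azurerm_cognitive_deployment`); follow Microsoft's instructions for which model the sample app expects.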

»Step one: Build

First let's look at the Helm provider block in main.tf:

main.tf

```hcl
# Configure the Helm provider from the AKS cluster created in this configuration.
# This block syntax is for Helm provider v2.x; v3.x expects `kubernetes = { ... }`.
provider "helm" {
  kubernetes {
    host                   = azurerm_kubernetes_cluster.tf-ai-demo.kube_config.0.host
    username               = azurerm_kubernetes_cluster.tf-ai-demo.kube_config.0.username
    password               = azurerm_kubernetes_cluster.tf-ai-demo.kube_config.0.password
    client_certificate     = base64decode(azurerm_kubernetes_cluster.tf-ai-demo.kube_config.0.client_certificate)
    client_key             = base64decode(azurerm_kubernetes_cluster.tf-ai-demo.kube_config.0.client_key)
    cluster_ca_certificate = base64decode(azurerm_kubernetes_cluster.tf-ai-demo.kube_config.0.cluster_ca_certificate)
  }
}
```

This code uses attributes of the AKS cluster resource to configure the Helm provider, so Terraform can install native Helm charts directly into the AKS cluster.
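Step three also relies on the Kubernetes provider, both for `kubernetes_manifest` and for the `issuer` service account. The post doesn't show that provider block; a minimal sketch, assuming it is configured from the same AKS cluster attributes as the Helm provider:

```hcl
# Sketch: configure the Kubernetes provider from the same AKS cluster
# attributes that the Helm provider uses.
provider "kubernetes" {
  host                   = azurerm_kubernetes_cluster.tf-ai-demo.kube_config.0.host
  username               = azurerm_kubernetes_cluster.tf-ai-demo.kube_config.0.username
  password               = azurerm_kubernetes_cluster.tf-ai-demo.kube_config.0.password
  client_certificate     = base64decode(azurerm_kubernetes_cluster.tf-ai-demo.kube_config.0.client_certificate)
  client_key             = base64decode(azurerm_kubernetes_cluster.tf-ai-demo.kube_config.0.client_key)
  cluster_ca_certificate = base64decode(azurerm_kubernetes_cluster.tf-ai-demo.kube_config.0.cluster_ca_certificate)
}
```

Note that `kubernetes_manifest` needs to reach the cluster API (and any CRDs it uses) at plan time, which fits the demo's approach of adding the application files only after the cluster already exists.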

With this Helm chart method, the helm_release.tf file uses Terraform to deploy multiple resources as Helm releases: HashiCorp Vault, cert-manager, and the Traefik Labs ingress controller. The Vault configuration shows how to use Helm `set` blocks to customize a deployment:

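helm_release.tf

```hcl
resource "helm_release" "vault" {
  name = "vault"

  # Pull the chart from HashiCorp's Helm repository, so no local
  # `helm repo add hashicorp ...` is required on the machine running Terraform.
  repository = "https://helm.releases.hashicorp.com"
  chart      = "vault"

  # Run a single dev-mode Vault server (demo only).
  set {
    name  = "server.dev.enabled"
    value = "true"
  }

  # Fixed root token for the dev server (demo only; never hard-code secrets in production).
  set {
    name  = "server.dev.devRootToken"
    value = "AzureA!dem0"
  }

  # Enable the Vault UI and expose it outside the cluster on port 8200.
  set {
    name  = "ui.enabled"
    value = "true"
  }

  set {
    name  = "ui.serviceType"
    value = "LoadBalancer"
  }

  set {
    name  = "ui.serviceNodePort"
    value = "null"
  }

  set {
    name  = "ui.externalPort"
    value = "8200"
  }
}
```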

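In this demo, the Vault server runs in dev mode with a predefined root token, and its UI is exposed outside the cluster through a load balancer on port 8200. Dev mode runs a single, in-memory, already-unsealed Vault server, so it is suitable only for demos and testing, not for production.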
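The post shows only the Vault release, but helm_release.tf also installs cert-manager and Traefik. A minimal sketch of those two releases, assuming the public Jetstack and Traefik chart repositories and a dedicated cert-manager namespace; the demo repository may pin different chart versions or values:

```hcl
# Sketch: cert-manager, with its CRDs installed by the chart.
resource "helm_release" "cert_manager" {
  name             = "cert-manager"
  repository       = "https://charts.jetstack.io"
  chart            = "cert-manager"
  namespace        = "cert-manager"
  create_namespace = true

  # Newer chart versions also accept crds.enabled instead of installCRDs.
  set {
    name  = "installCRDs"
    value = "true"
  }
}

# Sketch: Traefik ingress controller in the default namespace.
# The chart's default service type is LoadBalancer, which gives it an external IP on AKS.
resource "helm_release" "traefik" {
  name       = "traefik"
  repository = "https://traefik.github.io/charts"
  chart      = "traefik"
}
```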

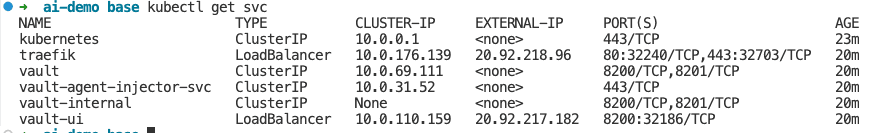

At this stage, you should have a resource group containing an AKS cluster and an Azure Service Bus. The containerized environment should look like this:

If you want to log in to the Vault server at this stage, use the EXTERNAL-IP address of the vault-ui load balancer with port 8200 (for example, http://[EXTERNAL_IP]:8200/) and sign in with the token AzureA!dem0.
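The Vault resources in step two need a Vault provider that points at this same endpoint. The post doesn't show that block; a minimal sketch, assuming you pass the vault-ui EXTERNAL-IP address and the dev root token in as input variables (the variable names are hypothetical):

```hcl
# Hypothetical inputs for reaching the dev-mode Vault server.
variable "vault_addr" {
  type        = string
  description = "Vault UI load balancer address, for example http://[EXTERNAL_IP]:8200"
}

variable "vault_token" {
  type        = string
  description = "Dev-mode root token"
  sensitive   = true # redacted from plan and apply output
}

# Point the Vault provider at the dev server exposed by the vault-ui service.
provider "vault" {
  address = var.vault_addr
  token   = var.vault_token
}
```

You could then supply the values with `-var` on the command line or with `TF_VAR_vault_addr` and `TF_VAR_vault_token` environment variables, which keeps the token out of the .tf files.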

»Step two: Secure

Now that you have established a base infrastructure in the cloud and the microservices environment, you are ready to configure Vault resources to integrate PKI into your environment. This centers on the pki_build.tf.second file. Rename it to remove the .second extension so that Terraform loads it as part of the configuration (Terraform only reads files ending in .tf or .tf.json). Then run terraform apply again: because you are adding to the existing infrastructure, Terraform creates only the new Vault resources, which set up Vault with a root certificate used to issue certificates within the cluster.

To do this, use the Vault provider to define a mount point for the PKI secrets engine, a root certificate, a role, certificate URLs, an issuer, and the policy needed to build the PKI:

```hcl
# Mount the PKI secrets engine at pki/.
resource "vault_mount" "pki" {
  path                      = "pki"
  type                      = "pki"
  description               = "This is a PKI mount for the Azure AI demo."
  default_lease_ttl_seconds = 86400     # 24 hours
  max_lease_ttl_seconds     = 315360000 # 10 years
}

# Generate a self-signed root CA for example.com inside Vault.
resource "vault_pki_secret_backend_root_cert" "root_2023" {
  backend     = vault_mount.pki.path
  type        = "internal" # the private key never leaves Vault
  common_name = "example.com"
  ttl         = 315360000
  issuer_name = "root-2023"
}
```
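The paragraph above also mentions a role, certificate URLs, and a policy, which this excerpt doesn't show. A minimal sketch of those pieces, modeled on a common Vault and cert-manager setup; the role name `example-dot-com`, the in-cluster address `http://vault.default:8200`, and the exact policy paths are assumptions. The policy is named `pki` to match the `token_policies` used by the Kubernetes auth role.

```hcl
# Sketch: where clients can fetch the CA certificate and the CRL.
resource "vault_pki_secret_backend_config_urls" "config_urls" {
  backend                 = vault_mount.pki.path
  issuing_certificates    = ["http://vault.default:8200/v1/pki/ca"]
  crl_distribution_points = ["http://vault.default:8200/v1/pki/crl"]
}

# Sketch: a role that allows certificates for subdomains of example.com,
# such as admin.example.com.
resource "vault_pki_secret_backend_role" "example_dot_com" {
  backend          = vault_mount.pki.path
  name             = "example-dot-com"
  allowed_domains  = ["example.com"]
  allow_subdomains = true
  max_ttl          = 259200 # 72 hours, in seconds
}

# Sketch: policy named "pki" that lets the Kubernetes "issuer" role
# read the PKI mount and sign certificates through the role above.
resource "vault_policy" "pki" {
  name   = "pki"
  policy = <<-EOT
    path "pki*" {
      capabilities = ["read", "list"]
    }
    path "pki/sign/example-dot-com" {
      capabilities = ["create", "update"]
    }
    path "pki/issue/example-dot-com" {
      capabilities = ["create"]
    }
  EOT
}
```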

Using the same Vault provider, you can also configure Kubernetes authentication and create a role named "issuer" that binds the PKI policy to a Kubernetes service account named issuer:

```hcl
# Enable the Kubernetes auth method.
resource "vault_auth_backend" "kubernetes" {
  type = "kubernetes"
}

# Point the auth method at the AKS API server.
resource "vault_kubernetes_auth_backend_config" "k8_auth_config" {
  backend         = vault_auth_backend.kubernetes.path
  kubernetes_host = azurerm_kubernetes_cluster.tf-ai-demo.kube_config.0.host
}

# Map the "issuer" service account to the default and pki policies.
resource "vault_kubernetes_auth_backend_role" "k8_role" {
  backend                          = vault_auth_backend.kubernetes.path
  role_name                        = "issuer"
  bound_service_account_names      = ["issuer"]
  bound_service_account_namespaces = ["default", "cert-manager"]
  token_policies                   = ["default", "pki"]
  token_ttl                        = 60  # seconds
  token_max_ttl                    = 120 # seconds
}
```

The role binds the issuer Kubernetes service account, created in the default namespace, to the pki Vault policy. Tokens returned after authentication are valid for 60 seconds (token_ttl), with a maximum lifetime of 120 seconds (token_max_ttl). The issuer service account is created with the Kubernetes provider, discussed in step three below. Together, these resources configure the cluster to use HashiCorp Vault to manage the PKI certificate process.
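The post doesn't list the service account or the cert-manager issuer that uses it. A minimal sketch with the Kubernetes provider, assuming a long-lived token secret named `issuer-token`, a cert-manager Issuer named `vault-issuer`, and a Vault PKI role named `example-dot-com` (all hypothetical names):

```hcl
# Service account that the Vault "issuer" role is bound to.
resource "kubernetes_service_account_v1" "issuer" {
  metadata {
    name      = "issuer"
    namespace = "default"
  }
}

# Long-lived token for the service account
# (Kubernetes 1.24+ no longer creates one automatically).
resource "kubernetes_secret_v1" "issuer_token" {
  metadata {
    name      = "issuer-token"
    namespace = "default"
    annotations = {
      "kubernetes.io/service-account.name" = kubernetes_service_account_v1.issuer.metadata[0].name
    }
  }
  type = "kubernetes.io/service-account-token"
}

# cert-manager Issuer that logs in to Vault with that token
# and signs certificates through the PKI role.
resource "kubernetes_manifest" "vault_issuer" {
  manifest = {
    apiVersion = "cert-manager.io/v1"
    kind       = "Issuer"
    metadata = {
      name      = "vault-issuer"
      namespace = "default"
    }
    spec = {
      vault = {
        server = "http://vault.default:8200"
        path   = "pki/sign/example-dot-com"
        auth = {
          kubernetes = {
            mountPath = "/v1/auth/kubernetes"
            role      = "issuer"
            secretRef = {
              name = kubernetes_secret_v1.issuer_token.metadata[0].name
              key  = "token"
            }
          }
        }
      }
    }
  }
}
```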

The image below shows how HashiCorp Vault interacts with cert-manager to issue certificates to be used by the application:

»Step three: Enable

The final stage requires another terraform apply, because you are again adding to the environment. This time you use app_build.tf.third to build the application: rename it to remove the .third extension so that Terraform loads it as a configuration file.

The code in app_build.tf uses the Kubernetes provider's kubernetes_manifest resource, whose manifest argument is the HCL (HashiCorp Configuration Language) representation of a Kubernetes YAML manifest. (We converted an existing YAML manifest to HCL to get the code needed for this deployment. You can do this with Terraform’s built-in yamldecode() function or with the tfk8s tool.)

The code below shows an example service manifest, converted with the tfk8s tool, that creates a service on port 80 to give access to the store-admin app:

app_build.tf

```hcl
resource "kubernetes_manifest" "service_tls_admin" {
  manifest = {
    "apiVersion" = "v1"
    "kind"       = "Service"
    "metadata" = {
      "name"      = "tls-admin"
      "namespace" = "default"
    }
    "spec" = {
      # clusterIP and clusterIPs from the exported manifest are omitted so that
      # Kubernetes assigns a free address instead of a hard-coded one.
      "internalTrafficPolicy" = "Cluster"
      "ipFamilies" = [
        "IPv4",
      ]
      "ipFamilyPolicy" = "SingleStack"
      # Port 80 on the service forwards to port 8081 on the store-admin pods.
      "ports" = [
        {
          "name"       = "tls-admin"
          "port"       = 80
          "protocol"   = "TCP"
          "targetPort" = 8081
        },
      ]
      "selector" = {
        "app" = "store-admin"
      }
      "sessionAffinity" = "None"
      "type"            = "ClusterIP"
    }
  }
}
```
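For comparison, the same Service can be declared with yamldecode() instead of a tfk8s conversion. A minimal sketch that would replace the block above rather than sit alongside it, since both would manage the same tls-admin Service; server-assigned fields such as clusterIP are left out so Kubernetes can assign them:

```hcl
# Sketch: the tls-admin Service written as inline YAML and decoded by Terraform.
resource "kubernetes_manifest" "service_tls_admin" {
  manifest = yamldecode(<<-EOT
    apiVersion: v1
    kind: Service
    metadata:
      name: tls-admin
      namespace: default
    spec:
      type: ClusterIP
      selector:
        app: store-admin
      ports:
        - name: tls-admin
          port: 80
          protocol: TCP
          targetPort: 8081
  EOT
  )
}
```

The tfk8s route produces plain HCL that is easy to edit field by field; yamldecode() keeps the original YAML readable and is convenient when you copy manifests from upstream samples.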

»Putting it all together

Once you’ve deployed all the elements and applications, you use the certificate stored in a Kubernetes secret to apply TLS to inbound HTTPS traffic. In the example below, a kubernetes_manifest resource associates the "example-com-tls" secret, which contains the certificate created by Vault earlier, with the inbound Traefik IngressRoute:

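```hcl
resource "kubernetes_manifest" "ingressroute_admin_ing" {
  manifest = {
    # Traefik v2.10+ also accepts traefik.io/v1alpha1; Traefik v3 accepts only traefik.io/v1alpha1.
    "apiVersion" = "traefik.containo.us/v1alpha1"
    "kind"       = "IngressRoute"
    "metadata" = {
      "name"      = "admin-ing"
      "namespace" = "default"
    }
    "spec" = {
      # Accept HTTPS traffic on Traefik's websecure entry point.
      "entryPoints" = [
        "websecure",
      ]
      # Route requests for admin.example.com to the tls-admin service.
      "routes" = [
        {
          "kind"  = "Rule"
          "match" = "Host(`admin.example.com`)"
          "services" = [
            {
              "name" = "tls-admin"
              "port" = 80
            },
          ]
        },
      ]
      # Terminate TLS with the Vault-issued certificate stored in this secret.
      "tls" = {
        "secretName" = "example-com-tls"
      }
    }
  }
}
```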
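The example-com-tls secret itself is produced by cert-manager. The post doesn't show that request; a minimal sketch of a cert-manager Certificate, assuming a Vault-backed Issuer named `vault-issuer` (hypothetical) already exists in the default namespace:

```hcl
# Sketch: ask cert-manager to obtain a certificate for admin.example.com
# from the Vault-backed issuer and store it in the example-com-tls secret.
resource "kubernetes_manifest" "certificate_example_com" {
  manifest = {
    apiVersion = "cert-manager.io/v1"
    kind       = "Certificate"
    metadata = {
      name      = "example-com"
      namespace = "default"
    }
    spec = {
      secretName = "example-com-tls"
      issuerRef = {
        name = "vault-issuer"
        kind = "Issuer"
      }
      commonName = "admin.example.com"
      dnsNames   = ["admin.example.com"]
    }
  }
}
```

With this design, cert-manager owns renewal: it requests a new certificate from Vault before the current one expires and updates the secret, and Traefik should pick up the new certificate without another Terraform run.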

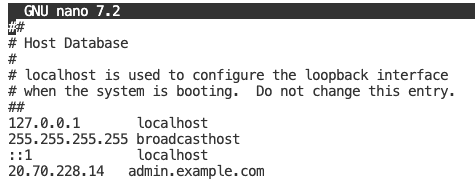

To test access to the OpenAI store-admin site, you need a domain name: you reach the site through a fully qualified domain name (FQDN), admin.example.com, which is protected by the generated certificate over HTTPS.

To set this up, access your AKS cluster. The Kubernetes command-line client, kubectl, is already installed in Azure Cloud Shell. Run:

```shell
kubectl get svc
```

You should see output similar to the following:

```
NAME                       TYPE           CLUSTER-IP     EXTERNAL-IP     PORT(S)                      AGE
hello                      LoadBalancer   10.0.23.77     20.53.189.251   443:31506/TCP                94s
kubernetes                 ClusterIP      10.0.0.1       <none>          443/TCP                      29h
makeline-service           ClusterIP      10.0.40.79     <none>          3001/TCP                     4h45m
mongodb                    ClusterIP      10.0.52.32     <none>          27017/TCP                    4h45m
order-service              ClusterIP      10.0.130.203   <none>          3000/TCP                     4h45m
product-service            ClusterIP      10.0.59.127    <none>          3002/TCP                     4h45m
rabbitmq                   ClusterIP      10.0.122.75    <none>          5672/TCP,15672/TCP           4h45m
store-admin                LoadBalancer   10.0.131.76    20.28.162.45    80:30683/TCP                 4h45m
store-front                LoadBalancer   10.0.214.72    20.28.162.47    80:32462/TCP                 4h45m
traefik                    LoadBalancer   10.0.176.139   20.92.218.96    80:32240/TCP,443:32703/TCP   29h
vault                      ClusterIP      10.0.69.111    <none>          8200/TCP,8201/TCP            29h
vault-agent-injector-svc   ClusterIP      10.0.31.52     <none>          443/TCP                      29h
vault-internal             ClusterIP      None           <none>          8200/TCP,8201/TCP            29h
vault-ui                   LoadBalancer   10.0.110.159   20.92.217.182   8200:32186/TCP               29h
```

Find the traefik entry and note its EXTERNAL-IP address (yours will differ from the one shown above). Then, on your local machine, add an entry to your hosts file so that admin.example.com resolves to that address. For example, on macOS you can edit the file with sudo nano /etc/hosts. If you need more help, search for how to edit the hosts file on your operating system.

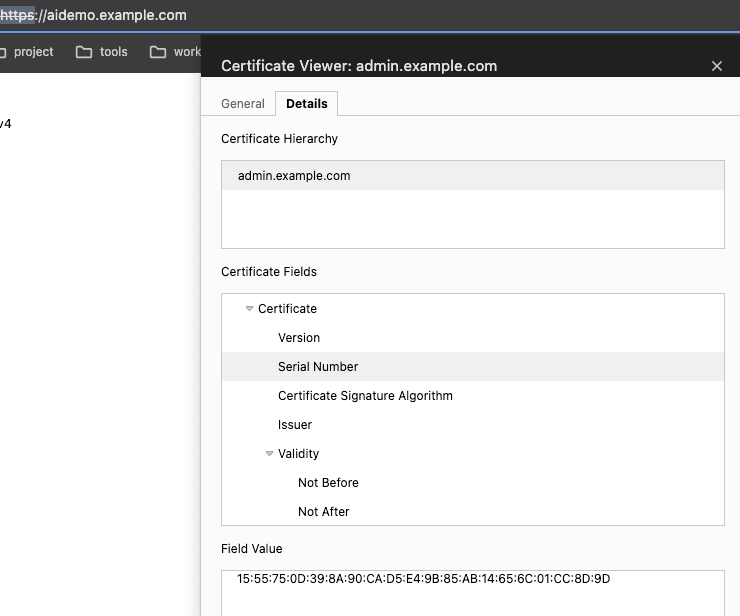

Now you can enter https://admin.example.com in your browser and examine the certificate.

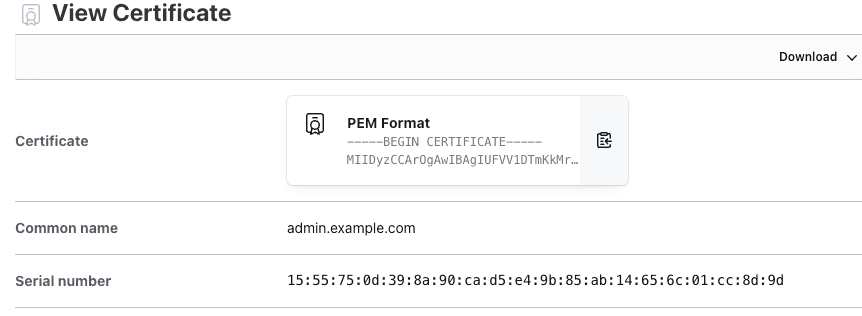

This certificate is signed by the example.com root certificate authority (CA) held in Vault and is issued for admin.example.com, which allows secure access over HTTPS. To verify that the right certificate is being served, expand the certificate details in your browser and note the common name and serial number.

You can then check in Vault that the common name and serial number match.

Terraform has now configured all of the elements using the three-step approach shown in this post. To test the OpenAI application, follow Microsoft’s instructions, skipping to step 4: use https://admin.example.com to access the store-admin app, and use the original store-front load balancer address to access the store-front app.

»DevOps for AI app development

To learn more and keep up with the latest trends in DevOps for AI app development, check out this Microsoft Reactor session with HashiCorp Co-Founder and CTO Armon Dadgar: Using DevOps and copilot to simplify and accelerate development of AI apps. It covers how developers can use GitHub Copilot with Terraform to create code modules for faster app development. You can get started by signing up for a free Terraform Cloud account.