As organizations continue to shift their workloads to the cloud, managing the complexity and efficiency of these migrations is becoming increasingly critical. According to the 2022 HashiCorp State of Cloud Strategy survey, 94% of organizations are wasting money in the cloud.

Financial operations (FinOps) teams want to identify and remove sources of cloud waste through governance and cost management programs. However, many FinOps practitioners struggle to get engineers to act on cost optimization recommendations, because those engineers are addressing multiple issues at once. Platform teams, for example, are being asked to expedite the deployment of cloud infrastructure while optimizing for costs, all without compromising the performance and security of their cloud workloads.

Platform teams rely on repeatable templates and code to provision the infrastructure needed to expedite development activities. This approach helps teams work faster; however, it can also escalate cloud costs, security risks, and workstream inefficiencies when the infrastructure choices in those templates are suboptimal.

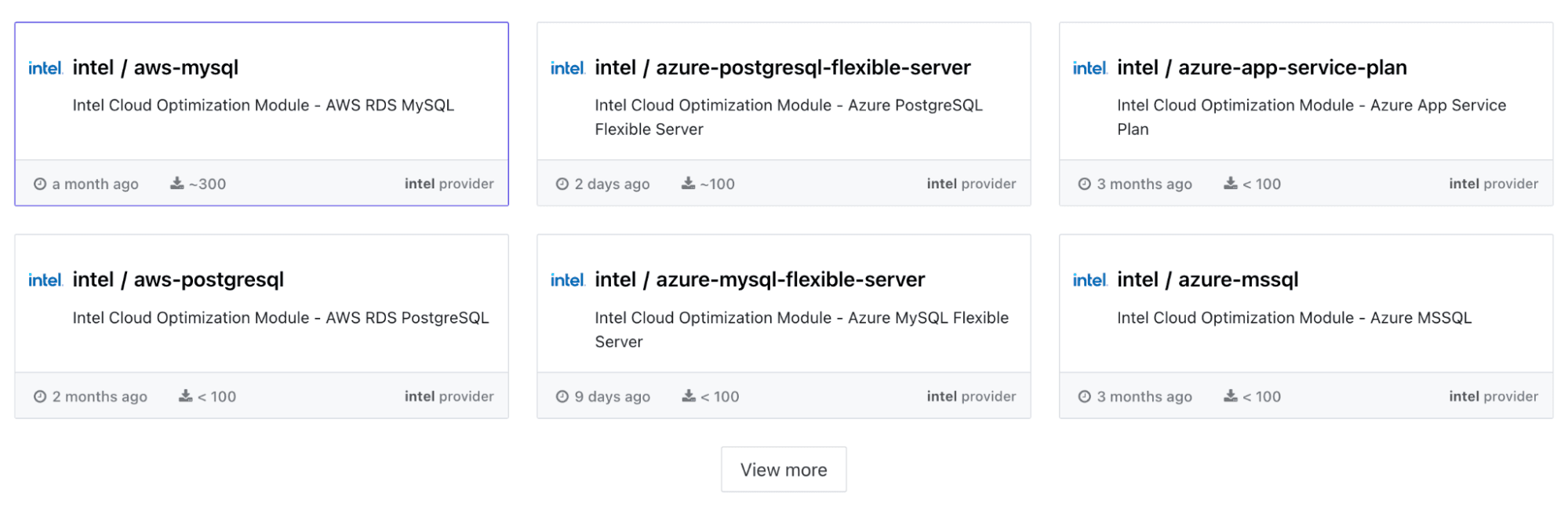

To help with these challenges, Intel and HashiCorp announced Intel Cloud Optimized Modules for Terraform. The modules help organizations minimize cloud infrastructure costs by automating resource choices (CPU, memory, storage, and networking capacity) according to Intel Tuning Guide best practices, so that organizations can use Intel's technology to optimize the price and performance of their cloud workloads.

Intel has also made the second-highest number of policy-as-code contributions to the Terraform Registry, after HashiCorp. Intel’s purpose-built policy-as-code sets simplify cloud infrastructure optimization alongside its modules.

»Intel Cloud Optimized Modules: Automated performance gains and lower costs

To demonstrate how this works, consider an example where an organization wants to deploy Microsoft Azure Database for MySQL. Adding a new workload can present challenges for both FinOps and platform teams. The platform team needs to choose the appropriate mix of resources for the application (CPU, memory, storage, and networking capacity), while FinOps needs to understand the price-performance options and whether any future project changes should be considered.

Intel already provides optimizations for those resources in the form of Intel Tuning Guides, but these recommendations are for manual configurations. That means teams first have to identify what optimizations are available for the workloads they run. Then, using the Tuning Guides, they sift through potentially thousands of cloud instance types and sizes to find the option that uses the appropriate Intel chipset and can take advantage of these optimizations. Once the instance is identified, the server has to be manually provisioned and optimized via the steps outlined in the Tuning Guides. When the next versions of Intel optimizations and chipsets are released, teams have to do it all over again.

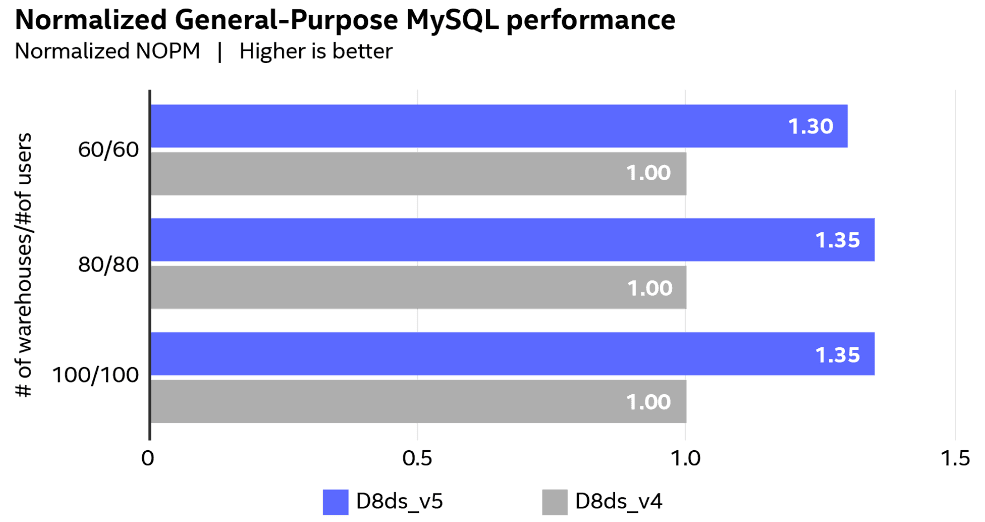

Intel published its Cloud Optimized Modules for Terraform to automate the application of the Intel Tuning Guides. In our example, where an organization wants to deploy Microsoft Azure Database for MySQL, its teams could use the Azure MySQL Flexible Server Module to deploy an Intel-optimized server instance. The instance selection and MySQL combination are included by default in the module code, and the module has been benchmarked to enable up to 34% more MySQL new orders per minute (NOPM) with third-generation Intel Xeon Scalable Processors (Ice Lake). Selecting this module and deploying it with Terraform avoids the need to manually implement Intel’s Tuning Guides.

Chart comparing MySQL database performance differences between two instance types. A performance increase of 34% more NOPM is realized by using the latest generation of Intel processors and optimizations.

»Intel policy sets

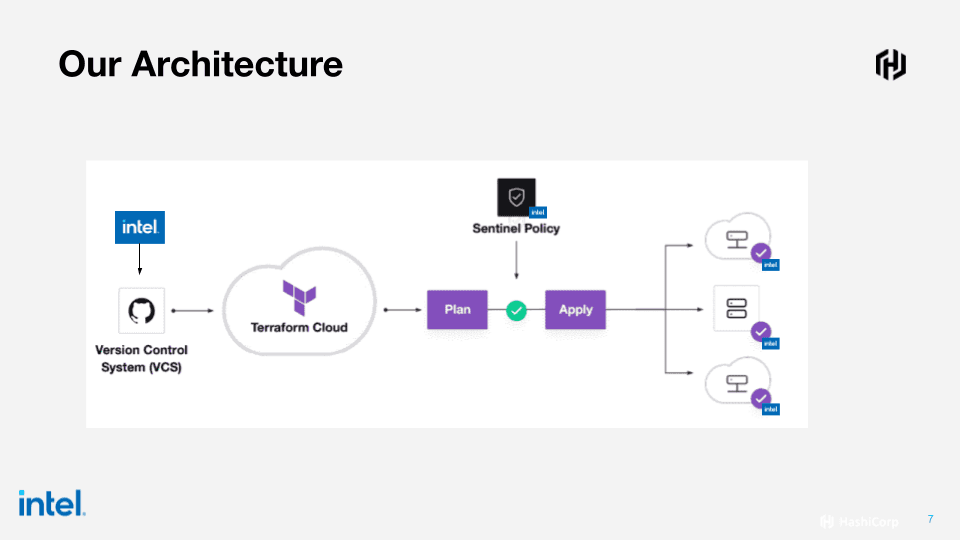

Additionally, the Intel Cloud Optimized Modules for Terraform work in tandem with a number of Sentinel policy sets that Intel has published to the Terraform Registry. These sets ensure only the requisite hardware for Intel’s optimizations is consumed and that your workloads land on the silicon required to take advantage of these optimizations.

An Intel module provisioning workflow with Sentinel policy as code validation between Terraform plan and apply steps.

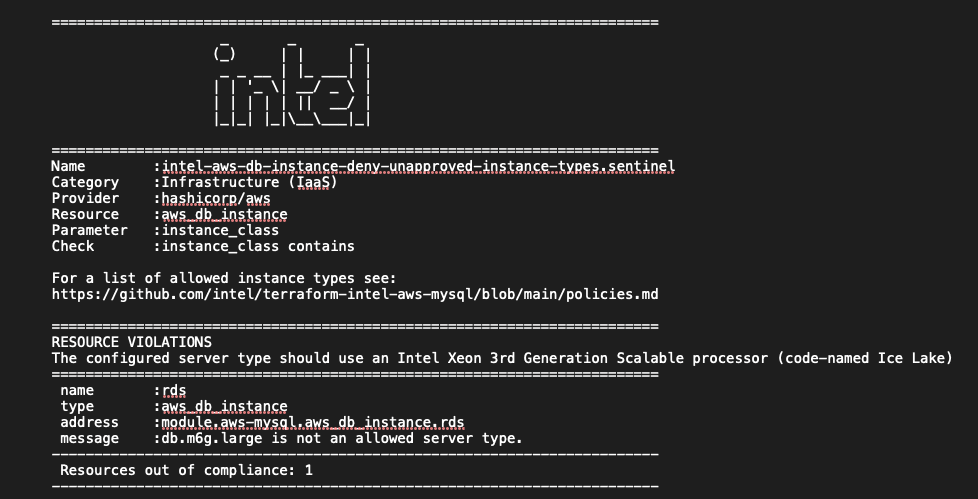

The example output, taken from a real policy module, shows a single AWS RDS instance out of compliance. The policy message shows the resource in violation and links to the appropriate list of instance types that can take advantage of Intel Tuning Guide optimizations. This output is designed to help users easily identify which resources are out of compliance and how to remediate their code.

As new Intel optimizations and built-in accelerators are released, the Cloud Optimized Modules and Intel’s Sentinel policy sets will be updated so that organizations can continue to benefit from Intel’s tuning practices around price and performance without additional development effort.
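To make the plan-time check more concrete, here is a minimal Python sketch of the kind of validation such a policy performs between plan and apply. It is not Intel's Sentinel code. It assumes a plan exported in Terraform's JSON plan format (for example with `terraform show -json`), and the allow-list, the function name `find_violations`, and the sample plan are hypothetical placeholders for illustration.

```python
# Hypothetical allow-list for illustration only. The real list of approved
# instance types is maintained in Intel's published policy sets.
APPROVED_INSTANCE_CLASSES = frozenset({"db.m6i.large", "db.m6i.xlarge"})


def find_violations(plan: dict, approved=APPROVED_INSTANCE_CLASSES) -> list:
    """Return one message per aws_db_instance whose instance_class is not approved."""
    messages = []
    for resource_change in plan.get("resource_changes", []):
        if resource_change.get("type") != "aws_db_instance":
            continue  # only RDS instances are in scope for this check
        after = (resource_change.get("change") or {}).get("after")
        if after is None:
            continue  # resource is being destroyed; nothing to validate
        instance_class = after.get("instance_class")
        if instance_class not in approved:
            messages.append(
                f"{resource_change.get('address')}: instance_class "
                f"{instance_class!r} is not an approved instance type"
            )
    return messages


# Hand-written sample in the shape of Terraform's JSON plan output.
sample_plan = {
    "resource_changes": [
        {"address": "aws_db_instance.ok", "type": "aws_db_instance",
         "change": {"actions": ["create"],
                    "after": {"instance_class": "db.m6i.large"}}},
        {"address": "aws_db_instance.legacy", "type": "aws_db_instance",
         "change": {"actions": ["create"],
                    "after": {"instance_class": "db.t3.micro"}}},
        {"address": "aws_s3_bucket.logs", "type": "aws_s3_bucket",
         "change": {"actions": ["create"], "after": {"bucket": "logs"}}},
    ]
}

violations = find_violations(sample_plan)
for message in violations:
    print(message)
```

In this sample only `aws_db_instance.legacy` is reported. A real policy would fail the run at this point and, as described above, point the user to the list of supported instance types.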

»Where to find Intel Cloud Optimized Modules and policies

Intel Cloud Optimized Modules and Sentinel policy sets can be found in the Terraform Registry through search or the Intel registry page. Each policy is named so that you can easily identify the type of resource it protects. The naming convention is:

`intel-<PROVIDER>-<RESOURCE>-<ACTION>`

For example, to ensure an AWS RDS MySQL instance always deploys on the optimal Intel instance type, users would go to the Intel AWS policy library and look for:

`intel-aws-db-instance-deny-unapproved-instance-types`
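The naming convention can be illustrated with a short Python sketch. The helpers `policy_name` and `provider_of` are hypothetical and not part of any Intel or HashiCorp tooling, and the normalization of underscores and spaces to hyphens is an assumption made for the example.

```python
def policy_name(provider: str, resource: str, action: str) -> str:
    """Build a name following the intel-<PROVIDER>-<RESOURCE>-<ACTION> pattern."""
    def normalize(part: str) -> str:
        # Assumption: lowercase, with underscores and spaces mapped to hyphens.
        return part.strip().lower().replace("_", "-").replace(" ", "-")

    return "-".join(
        ["intel", normalize(provider), normalize(resource), normalize(action)]
    )


def provider_of(name: str) -> str:
    """Extract the provider segment from a policy name."""
    prefix, provider, _rest = name.split("-", 2)
    if prefix != "intel":
        raise ValueError(f"not an Intel policy name: {name!r}")
    return provider


name = policy_name("aws", "db_instance", "deny unapproved instance types")
print(name)               # intel-aws-db-instance-deny-unapproved-instance-types
print(provider_of(name))  # aws
```

Only the provider can be recovered reliably from a name, because both the resource and the action segments may themselves contain hyphens.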

The Intel Cloud Optimized Modules for Terraform provide an automated, streamlined solution for enterprises looking to optimize their cloud investments. The modules enable platform and FinOps teams to take advantage of the best price-for-performance optimizations and ensure only the requisite hardware is consumed for business-critical workloads in the cloud.