If you’re reading this, chances are you are in DevOps (or some type of engineering) and you are wondering: “Why on earth do I care about cloud cost optimization? That’s not my job, I’m not in finance, right?” Wrong!

Engineers are becoming the new cloud financial controllers as finance teams begin to lose some of their direct control over new fast-paced, on-demand infrastructure consumption models driven by cloud. So the question becomes: What are the people, processes, and technologies I can use to navigate this sea change?

If you are interested in defining a new role, automating your newfound responsibility, and implementing a process for cloud cost optimization with HCP Terraform, read on.

This guide will provide:

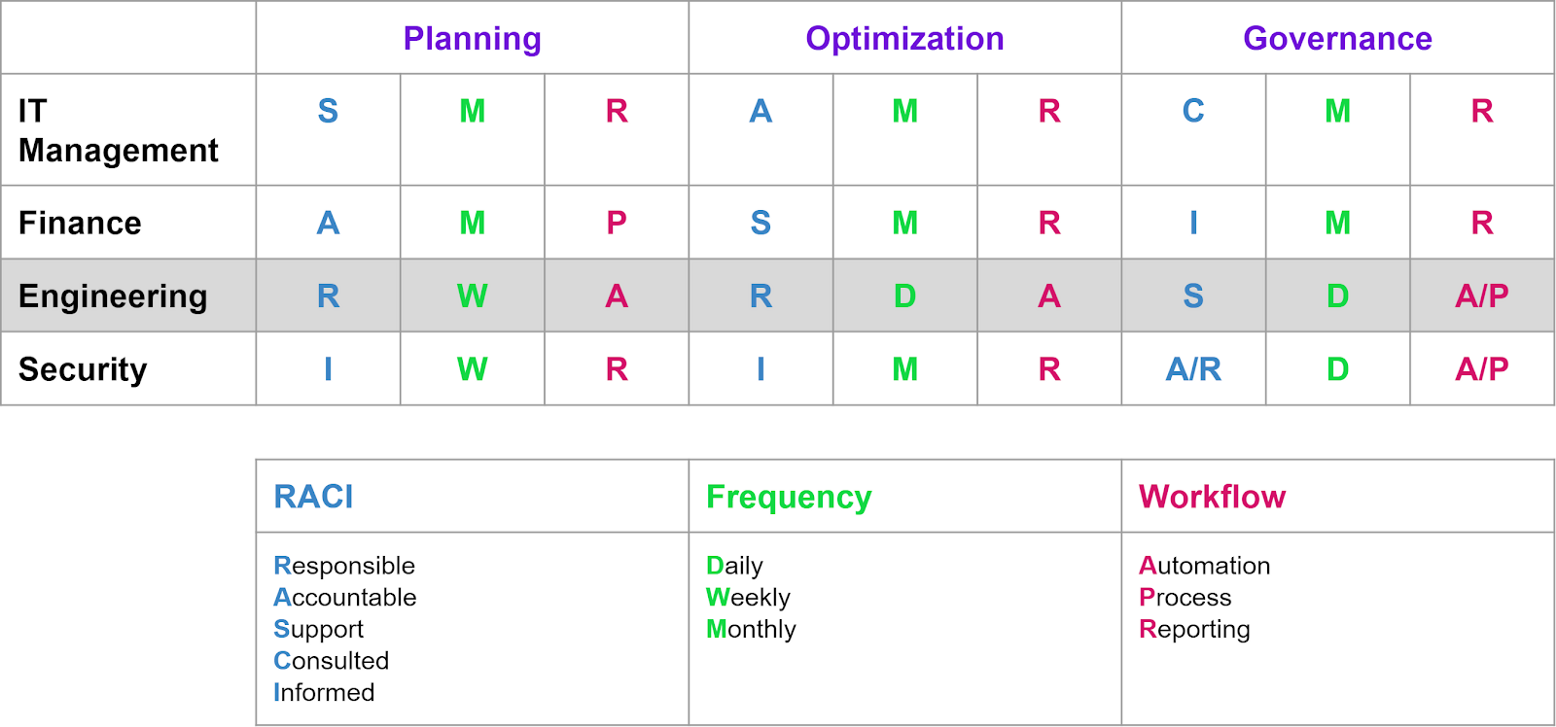

- A RASCI model assigning responsibilities for your team that manages overall cloud posture and costs

- A visualization of the cloud cost management within a Terraform provisioning workflow

- Planning recommendations for cloud cost management and forecasting with Terraform

- Instructions on how to integrate and use cloud-vendor and third-party cost optimization tools in a Terraform workflow

- Examples of Terraform’s policy as code framework, Sentinel, which can automatically block overspending with rules around cost, instance types, and tags.

The majority of features reviewed in this article will focus on Terraform paid functionality such as run task integrations with cost estimation software, along with policy as code guardrails, but the core use case around cost optimization can be achieved solely with the open source version.

»A survey of cloud waste

With the continuing shift to the consumption-based cost models of Cloud Service Providers (CSPs) for infrastructure and operations, you pay for what you use, but you also pay for what you provision and don’t use. Without a process for continuous governance and optimization, the potential for waste is huge.

A recent cloud spending survey found that:

- 45% of the organizations reporting were over budget for their cloud spending.

- More than 55% of the respondents are using either cumbersome manual processes, or simply do not implement actions and changes to optimize their cloud resources.

- 34.15% of respondents believe they can save up to 25% of their cloud spend and 14.51% believe they can save up to 50%. Even worse, 27.46% said, “I don’t know”.

First, let’s unpack why there is an opportunity and then get to the execution.

»Why engineers are becoming the financial controllers of cloud spend

In moving to the cloud, most organizations have put thought into basic governance models where a team, sometimes referred to as the Cloud Center of Excellence, looks over things like strategy, architecture, operations, and, yes, cost. Most of these teams contain a combination of IT management and cloud technical specialists from common IT domains and finance. Finance is primarily charged with cost planning, migration financial forecasting, and optimization.

Under financial pressure, finance teams tend to say, “We need to get a handle on costs, savings, forecasting, etc.,” yet they have no direct control over those costs. It is now engineers who directly manage the infrastructure and, with it, the costs.

The business case is simple. It is a financial paradigm shift where:

- Engineers are not only responsible for operations but also costs.

- Engineers now have the tools and capabilities to automate and directly manage cost controls.

- Cost planning and estimation for running cloud workloads are not easily understood or forecasted by Finance.

- Traditional forms of financial budgeting and on-prem hardware demand planning (such as contract-based budgets and capitalized purchases) do not account for cost variability in consumption-based (i.e. Cloud) models.

Finance lacks control in the two primary areas of cost-saving:

- Pre-provisioning: Limited governance and control in the resource provisioning phase.

- Post-provisioning: Limited governance and control in enforcing infrastructure changes for cost savings.

In the following sections, we will define the people, processes, and technologies associated with managing cloud financial practices with Terraform.

»A RASCI chart example

To simplify things, we will assume there is some sort of team — i.e. the Cloud Center of Excellence — that is responsible for managing the overall cloud posture.

On this team there are four core roles:

- IT Management

- Finance

- Engineering (consisting of DevOps and Infrastructure & Operations)

- Security

I’ve built a RASCI model that can be used as a baseline of expectations for this team. This is a model I have used, along with similar models, to define the roles and responsibilities of the Cloud Center of Excellence for many organizations.

The three section headings in this table are:

- Planning — Relating to pre-cloud migration & ongoing cost forecasting

- Optimizing — Operationalizing and realizing continuous cost savings

- Governance — Ensuring future cost savings and waste avoidance

As you can see, engineering has a higher level of responsibility in today’s infrastructure operations, and the Frequency and Workflow columns will likely denote significant changes for many organizations.

»Planning, optimization, and governance

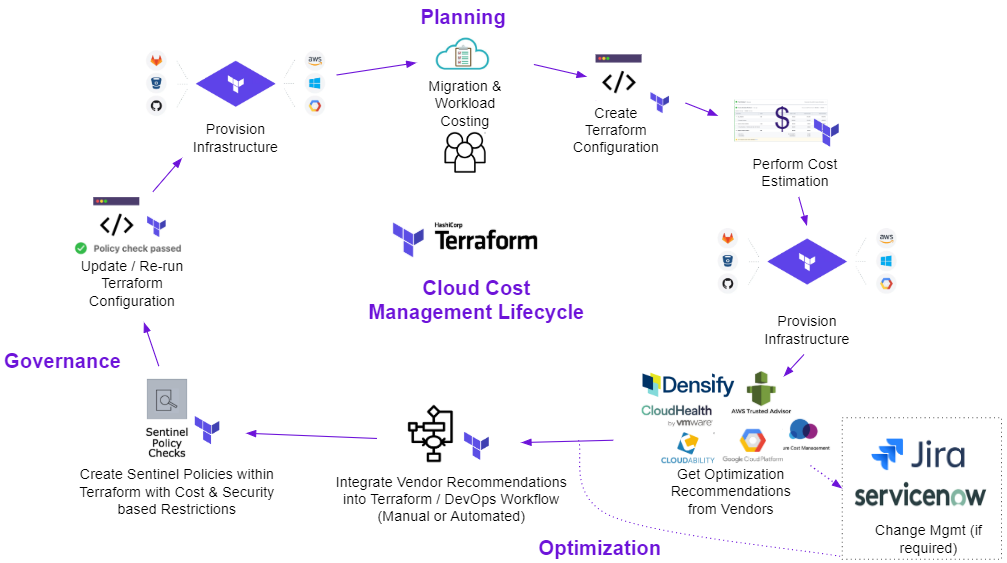

Now the next question: How can engineers use Terraform at each level of the cloud cost management process to deliver value and minimize additional work? To get started, see how the visualization illustrates Terraform’s place in the cloud cost management lifecycle. (Start at the top with the “Planning” phase.)

To summarize the steps:

- Start by identifying workloads that are migrating to the cloud

- Create Terraform configuration

- Run terraform plan to perform cost estimation with integrated third-party tools, such as run tasks

- Run terraform apply to provision the resources

- Once provisioned, workloads will run and vendor tools will provide optimization recommendations

- Integrate a vendor’s optimization recommendations into Terraform and/or your CI/CD pipeline

- Investigate/analyze optimization recommendations and implement Terraform’s Sentinel policies for cost and security controls

- Update Terraform configuration and run plan & apply

- Newly optimized and compliant resources are now provisioned

»Planning — Pre-migration and ongoing cost forecasting

Cloud migrations require a multi-point assessment to determine if it makes sense to move an application/workload to the cloud. Primary factors for the assessment are:

- Architecture

- Business case

- Estimated cost for the move

- Ongoing utilization costs budgeted/forecasted for the next 1–3 years on average

Since engineers are now taking on some of these responsibilities, it makes sense to handle them with engineering tools, and Terraform is well suited to the job.

Using Terraform configuration files as a standard definition of how an application/workload’s cost is estimated, you can now use HCP Terraform & Enterprise APIs to automatically supply finance with estimated cloud financial data or use Terraform’s user interface to provide finance direct access to review costs. By doing this, you can help eliminate many slower oversight processes.

»Planning recommendations:

- Use Terraform configuration files as the standard definition of cloud cost planning and forecasting across AWS, Azure, and GCP, and provide this information via the Terraform API or role-based access controls within the Terraform user interface to provide financial personas a self-service workflow.

- Note: Many organizations conduct planning within Excel, Google Sheets, and Web-based tools. To make data usable within these systems we would recommend using Terraform’s Cost Estimates API to extract the data.

- Use Terraform modules as standard units of defined infrastructure for high-level cost assessments and cloud demand planning

- Example: Define a standard set of modules for a standard Java application, so module A + B + C = $X per month. We plan to move 5 Java apps this year. This can be a quick methodology to assess potential application run costs prior to defining the actual Terraform configuration files.

- Use Terraform to understand application/workload financial growth over time, i.e. cloud sprawl costs.

- Attempt to structurally align Terraform Organization, Workspace, and Resource naming conventions to the financial budgeting/forecasting process.
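The module-based estimate from the Java example above can be sketched directly in Terraform. This is a minimal illustration, not a costing method prescribed by this guide: the module names, the per-module monthly figures, and the local names are all hypothetical placeholders that you would replace with numbers from your own cost estimates.

```hcl
locals {
  # Hypothetical monthly cost (USD) of each standard module in a Java application stack.
  java_app_module_costs = {
    module_a = 120
    module_b = 45
    module_c = 60
  }

  # Number of Java applications planned for migration this year (from the example above).
  java_apps_planned = 5

  # Module A + B + C = $X per month for a single application.
  java_app_monthly_cost = sum(values(local.java_app_module_costs))

  # Rough monthly run rate once all planned applications are migrated.
  java_portfolio_monthly_cost = local.java_app_monthly_cost * local.java_apps_planned
}

output "java_portfolio_monthly_cost" {
  description = "Estimated monthly run cost (USD) for all planned Java applications."
  value       = local.java_portfolio_monthly_cost
}
```

With these placeholder figures, one application comes to $225 per month and five applications to $1,125 per month. The point is only that the same module inventory used for provisioning can double as a quick demand-planning input.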

»Tools for multi-cloud cost visualization

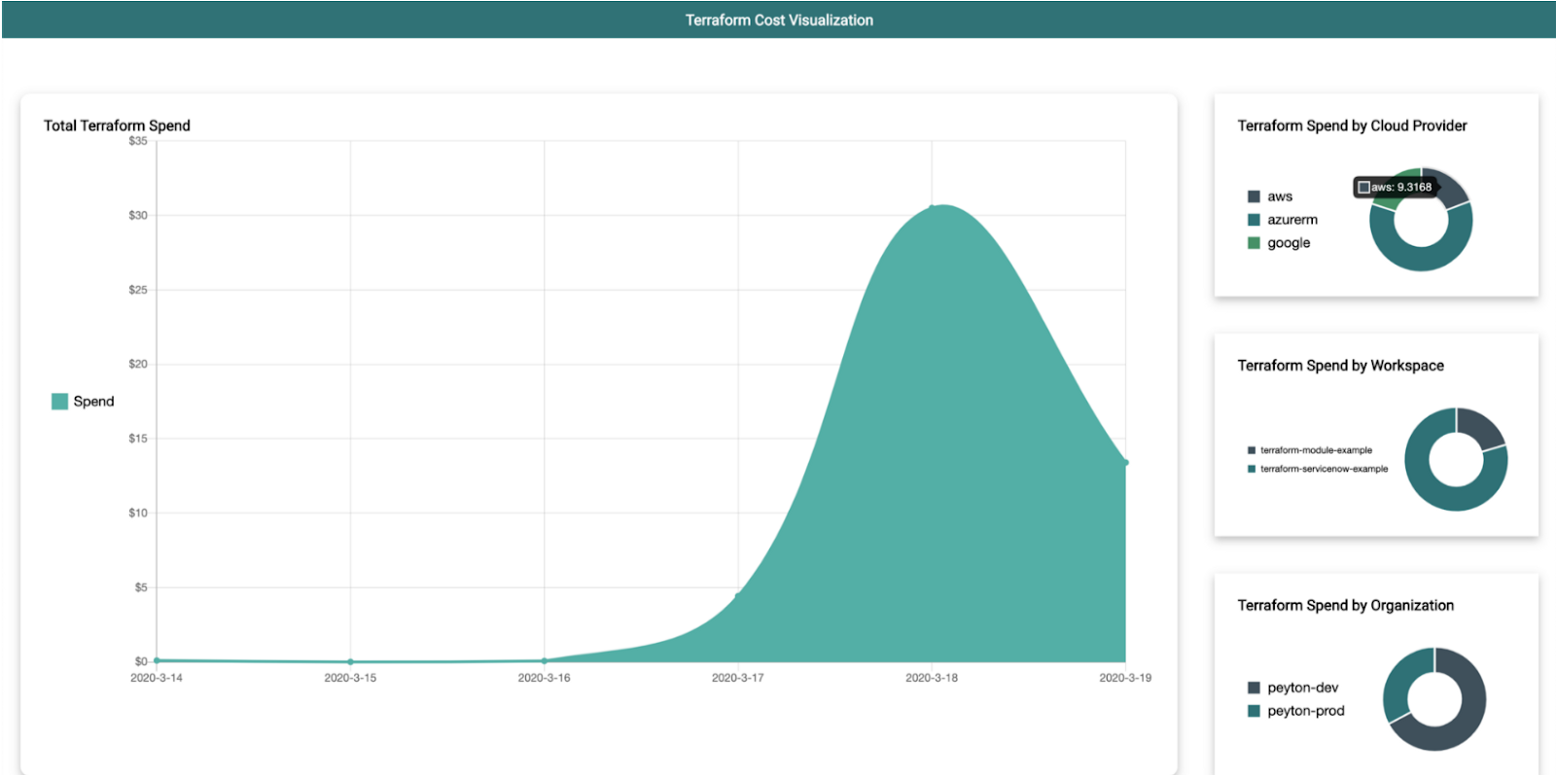

Peyton Casper, a HashiCorp senior solutions engineer, has built a simple open source tool that gives you a higher-level, cross-workspace view of costs. The tool is called Tint, and you can visit this blog post and its GitHub repository to learn how to use it.

Example dashboard from Tint

If you have heavier requirements for reporting, or you already have a corporate reporting product (e.g. Microsoft Power BI, Tableau, etc.), Terraform’s cost estimation data will work with these solutions as well.

»Optimizing — operationalizing and realizing continuous cost savings

Optimization is the continued practice of evaluating the cost-benefit ratio of your current infrastructure usage. The cloud vendors (AWS, Azure, GCP, etc.) and third-party tools can start you off with optimization recommendations, but not every organization takes advantage of them.

Engineering does not always engage with these optimization systems, leaving them with no feedback mechanism. If they are engaged with these systems, there is often a high level of manual intervention needed.

»Automating optimization insights into the provisioning workflow

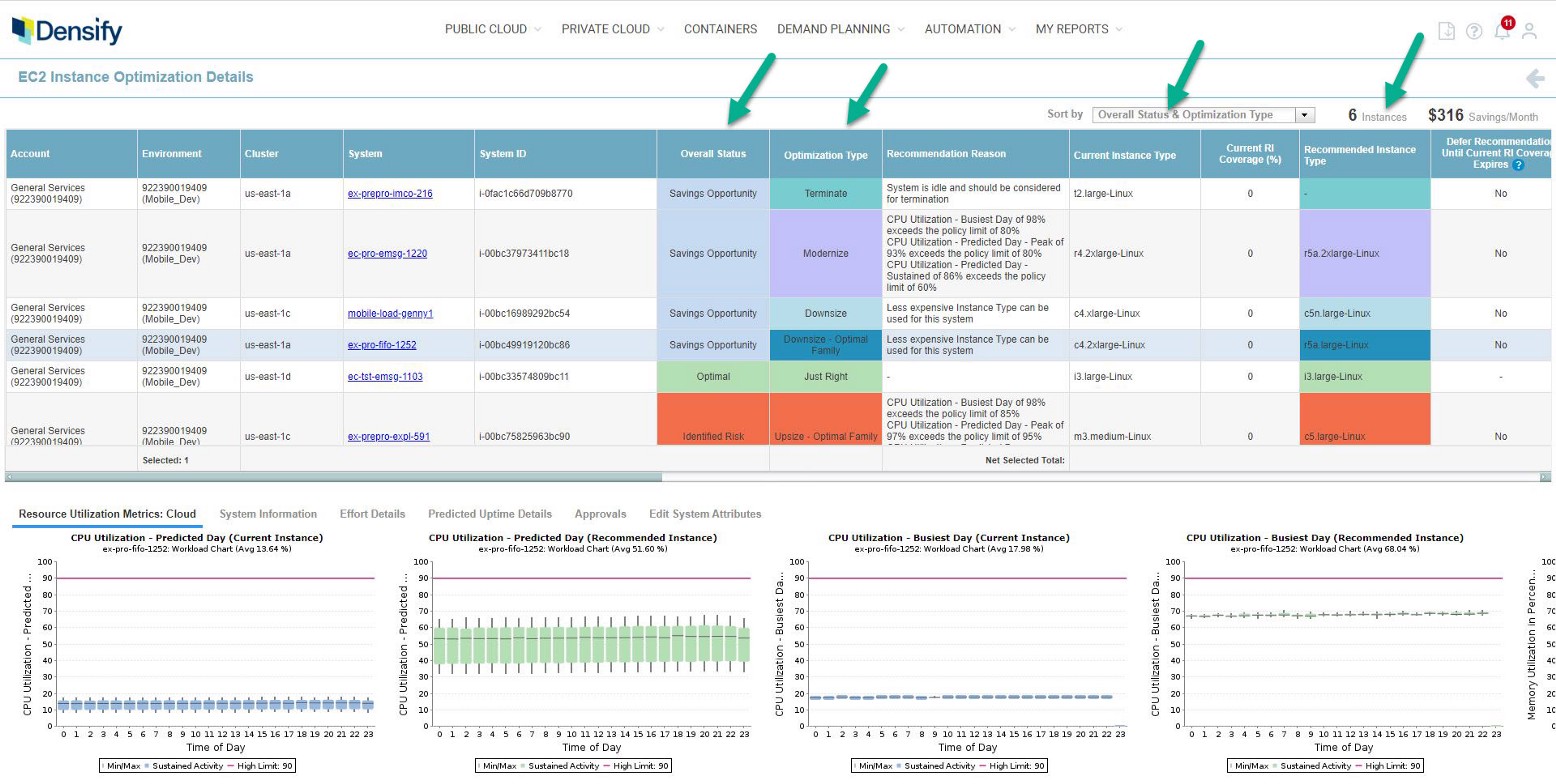

It is safe to say that the major CSPs and the vast majority of third-party tools allow you to export optimization recommendations via an API or an alternative method (references: AWS, Azure, GCP). For the purposes of this guide, we are going to focus on the most basic approach to automating optimization data ingestion, with data coming either directly from the CSPs or from third parties such as Densify, which maintains a Terraform module. I will use Densify in the examples that follow. (Note that many HashiCorp users also create their own Terraform providers for similar processes.)

Densify EC2 optimization report example

The concepts and code can be used as a model for your own deployments. Please note that each vendor provides a different set of recommendations, but all of them provide insights on compute, so we will focus on that. Any insight that you receive (e.g. compute, storage, DB, etc.) can be consumed based on the pattern below.

»Basic patterns for consuming optimization recommendations

To establish a mechanism for Terraform to access the optimization recommendations, we see several common patterns:

- Manual Workflow: Review optimization recommendations in the provider’s portal and manually update Terraform files. Since there is no automation, this is not optimal, but a feedback loop for optimization must start somewhere!

- File Workflow: Create a mechanism where optimization recommendations are imported into a local repository via a scheduled process (usually daily).

  - For instance, Densify customers use a script to export recommendations into a densify.auto.tfvars file, which is downloaded and stored in a locally accessible repository. The Terraform lookup function is then used to look up specific optimization updates that have been set as variables.

- API Workflow: Create a mechanism for optimization recommendations to be extracted directly from the vendor and stored within an accessible data repository, using Terraform’s http data source to import the dataset.

- Ticketing Workflow: This workflow is similar to the file and API workflows, but some organizations insert an intermediary step where the optimization recommendations first go to a change control system like ServiceNow or Jira. These systems have built-in workflow and approval logic, where a flag is set for an acceptable change and passed as a variable to be consumed later in the process.
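As a rough sketch of the API workflow, the http data source from the hashicorp/http provider can fetch recommendations at plan time. The endpoint URL, the token variable, and the assumed response shape (a JSON object keyed by system ID) are hypothetical here; check your vendor’s API documentation for the real contract.

```hcl
terraform {
  required_providers {
    http = {
      source  = "hashicorp/http"
      version = ">= 3.0"
    }
  }
}

# Hypothetical endpoint and token; substitute your vendor's recommendations API.
variable "recommendations_url" {
  type        = string
  description = "URL that returns optimization recommendations as JSON."
}

variable "recommendations_token" {
  type        = string
  sensitive   = true
  description = "API token for the recommendations endpoint."
}

data "http" "recommendations" {
  url = var.recommendations_url

  request_headers = {
    Accept        = "application/json"
    Authorization = "Bearer ${var.recommendations_token}"
  }
}

locals {
  # Assumes the response body is a JSON object keyed by system ID.
  api_recommendations = jsondecode(data.http.recommendations.response_body)
}
```

Fetching at plan time keeps recommendations fresh, but it also makes every plan depend on the vendor endpoint being reachable. That trade-off is one reason some teams prefer the scheduled file workflow.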

»Optimization as code: Terraform code update examples

In any of these cases, especially if automation is taking place, it will be important to maintain key pieces of resource data as variables. The optimization insight tools will provide a size recommendation for resources or services (e.g. compute, DB, storage, etc.). In this example, we will use compute resources, but the example is representative of all.

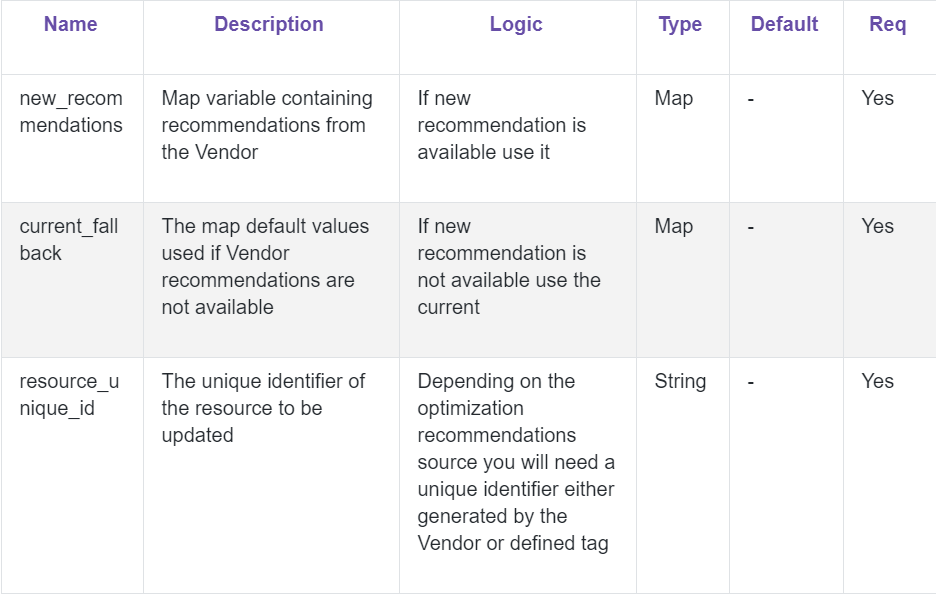

At a minimum, I recommend that you have three variables set to perform the optimization update in Terraform with some basic logic: new_recommendations, current_fallback, and resource_unique_id.

As I mentioned, I’ll be using Densify. You can find the Densify Terraform module via the Terraform Registry and the Densify-dev GitHub repo.

In this first snippet, you will see some basic updates of Terraform code with variables and logic to get started. Below is an example of the variables created.

    variable "densify_recommendations" {
      description = "Map of maps generated from the Densify Terraform Forwarder. Contains all of the systems with the settings needed to provide details for tagging as Self-Aware and Self-Optimization"
      # One inner map of settings per system, keyed by the system's unique ID
      type = map(map(string))
    }

    variable "densify_unique_id" {
      description = "Unique ID that both Terraform and Densify can use to track the systems."
      type        = string
    }

    variable "densify_fallback" {
      description = "Fallback map of settings that are used for new infrastructure or systems that are missing sizing details from Densify."
      type        = map(string)
    }

In the next snippet, you will see updates with the Terraform lookup function to look up the local optimization recommendations file — densify.auto.tfvars — for changes. The optimization recommendations can also be auto-delivered by using webhooks and subscription APIs.

    locals {
      # Seed the map with the fallback settings under this system's ID;
      # a matching entry in the Densify recommendations overrides it.
      temp_map     = merge(tomap({ (var.densify_unique_id) = var.densify_fallback }), var.densify_recommendations)
      densify_spec = local.temp_map[var.densify_unique_id]

      # Pull individual settings, defaulting to "na" when a key is missing
      cur_type            = lookup(local.densify_spec, "currentType", "na")
      rec_type            = lookup(local.densify_spec, "recommendedType", "na")
      savings             = lookup(local.densify_spec, "savingsEstimate", "na")
      p_uptime            = lookup(local.densify_spec, "predictedUptime", "na")
      ri_cover            = lookup(local.densify_spec, "reservedInstanceCoverage", "na")
      appr_type           = lookup(local.densify_spec, "approvalType", "na")
      recommendation_type = lookup(local.densify_spec, "recommendationType", "na")
    }
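For reference, a densify.auto.tfvars file consumed by these lookups might look like the sketch below. The key names come from the lookups above, while the system ID and every value are made up for illustration; the actual file is generated by the vendor’s export script and may differ.

```hcl
# densify.auto.tfvars (illustrative values only)
densify_recommendations = {
  # Hypothetical system ID; it must match var.densify_unique_id for that system.
  "app-server-01" = {
    currentType              = "m5.xlarge"
    recommendedType          = "m5.large"
    approvalType             = "m5.large"
    recommendationType       = "Downsize"
    savingsEstimate          = "52.10"
    predictedUptime          = "98.5"
    reservedInstanceCoverage = "0"
  }
}
```

Because the file name ends in .auto.tfvars, Terraform loads it automatically, so refreshing the file is enough for the next plan to pick up new recommendations.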

Lastly, you will want to insert some logic to ensure that you are properly handling the usage reference. If a recommendation is available, use it. Otherwise keep the current one.

Densify also adds some code in as part of a change control process for their customers that are using a change control/ticketing system. They have an option to first pass the optimization recommendation to be approved in one of these external systems and then pass an approval flag in as a variable to ensure that it is an approved change.

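    # Fragment of a resource block: choose the instance type to provision.
    # 1. No sizing data at all: pass "na" through.
    # 2. Recommendation is "Terminate": keep the current type rather than resize.
    # 3. All recommendations are pre-approved: use the recommended type.
    # 4. This specific recommended type was approved: use it.
    # 5. Otherwise: keep the current type.
    instance_type = (
      local.cur_type == "na" ? "na" :
      local.recommendation_type == "Terminate" ? local.cur_type :
      local.appr_type == "all" ? local.rec_type :
      local.appr_type == local.rec_type ? local.rec_type :
      local.cur_type
    )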

For customers not using a third-party approval system, the recommendation’s changes will be visible in the Terraform plan. Similarly, you can manually set a value such as appr_type = false to avoid using the recommendation: with any approval value other than all or the recommended type, the logic above keeps the current instance type. You can also use similar methods, such as feature flags and conditional expressions in Terraform, to control applied functionality.
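A feature flag of this kind can be as small as one boolean variable and one conditional expression. The sketch below is a simplified stand-in for the approval logic rather than Densify’s own implementation, and all of its variable names are hypothetical.

```hcl
variable "apply_recommendations" {
  type        = bool
  default     = true
  description = "Feature flag: set to false to ignore sizing recommendations."
}

variable "current_type" {
  type        = string
  description = "Instance type currently in use."
}

variable "recommended_type" {
  type        = string
  description = "Instance type suggested by the optimization tool."
}

locals {
  # Use the recommendation only while the flag is on; otherwise keep the current size.
  effective_instance_type = var.apply_recommendations ? var.recommended_type : var.current_type
}
```

Setting apply_recommendations = false in a workspace’s variables then opts that workspace out without touching the shared configuration.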

The takeaway here is that we now have a defined process that can be partially or fully automated to make fast, code-defined changes to our infrastructure environment to optimize and save money.

»Governance — Ensuring future cost savings

The last and critical component of the cloud cost management lifecycle is having guardrails to stop cost overruns and provide a feedback loop. I have had this conversation with many organizations that have done optimization exercises only to have their costs shoot back up because they didn’t put preventative controls in place from the start.

In Terraform, you can automate this feedback loop with Sentinel, a policy as code framework embedded within Terraform for governance & policy (Sentinel can be used in other HashiCorp products as well).

»Cost compliance as code = Sentinel policy as code

Sentinel includes a domain-specific language (DSL) for writing policy definitions that evaluate any and all data defined within a Terraform file. You can use Sentinel to ensure provisioned resources are secure, tagged, and within usage and cost constraints.

For costs, Terraform customers implement policy primarily around three areas (but remember, you’re not limited to just these three, so you can get creative):

Cost Control Areas:

- Amount — Control the amount of spend

- Provisioned size — Control the size/usage of the resource

- Time to live — Control the time-to-live (TTL) of the resource

In all three of these areas, you are able to apply policy controls around things like Terraform workspaces (e.g. apps/workloads), environments (e.g. prod, test, dev), and tags to optimize resources and avoid unnecessary spending.

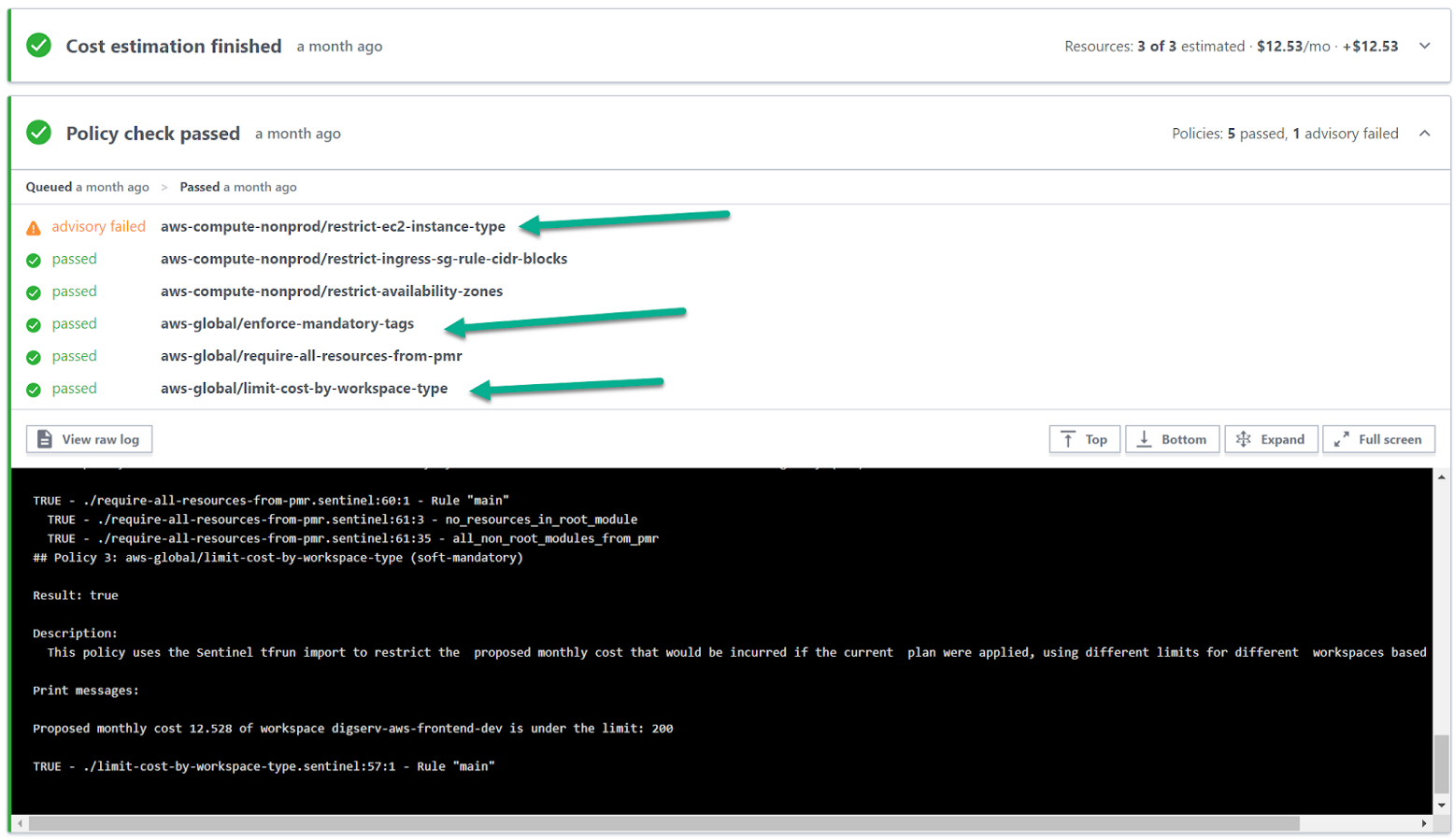

The following is an example Sentinel policy output when running terraform plan. We will focus on three policies:

- aws-global/limit-cost-by-workspace-type

- aws-compute-nonprod/restrict-ec2-instance-type

- aws-global/enforce-mandatory-tags

Sentinel has three enforcement levels: Advisory, Soft-Mandatory, and Hard-Mandatory — please refer to the provided link for definitions. The enforcement level will dictate workflow and resolution of policy violations.
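Enforcement levels are assigned per policy in the policy set’s sentinel.hcl file. The sketch below wires up the three policies discussed in this guide with the enforcement levels used in its examples; the source paths are assumptions about how the policy files are laid out in your repository.

```hcl
# sentinel.hcl: policy set configuration (source paths are hypothetical)

policy "limit-cost-by-workspace-type" {
  source            = "./limit-cost-by-workspace-type.sentinel"
  enforcement_level = "soft-mandatory" # failures can be overridden by authorized users
}

policy "restrict-ec2-instance-type" {
  source            = "./restrict-ec2-instance-type.sentinel"
  enforcement_level = "advisory" # failures are reported but do not block the run
}

policy "enforce-mandatory-tags" {
  source            = "./enforce-mandatory-tags.sentinel"
  enforcement_level = "hard-mandatory" # failures always block the run
}
```

A common rollout pattern is to introduce a policy as advisory, watch what it would have blocked, and then tighten the level once teams have had time to adjust.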

If you’re using Terraform Cloud for Business or Terraform Enterprise, users can interact with the Terraform UI, CLI, or API to integrate policy workflow control into their CI/CD pipelines, and use version control systems such as GitLab, GitHub, and Bitbucket for policy creation and management.

»Sentinel cost compliance code examples

In the aws-global/limit-cost-by-workspace-type policy defined for this workspace (which can be individually or globally defined), I’ve applied monthly spending limits and an enforcement level. The snippet below shows the cost limits ($200 for development, $500 for QA, and so on). I’ve set the enforcement level to soft mandatory, which means administrators can override policy failures if there is a legitimate reason, but most users will be blocked from provisioning infrastructure whose estimated monthly cost exceeds the limit.

Sentinel cost compliance — Monthly limits

    ##### Monthly Limits (Sentinel policy file) #####
    import "decimal"

    limits = {
      "dev":   decimal.new(200),
      "qa":    decimal.new(500),
      "prod":  decimal.new(1000),
      "other": decimal.new(50),
    }

    ##### Enforcement level (sentinel.hcl) #####
    policy "limit-cost-by-workspace-type" {
      enforcement_level = "soft-mandatory"
    }

Now we have a mechanism to control costs _before_ those resources are provisioned.

Sentinel cost compliance — Instance Types

Here’s another example — for a multitude of reasons including compliance and costs, many customers will restrict what compute instance types can be provisioned and potentially configuration limits based on environment or team. A full example of this type of policy can be seen here: aws-compute-nonprod/restrict-ec2-instance-type.

In the example below, we have a policy that controls instance sizes on non-prod environments to ensure lower costs in these less critical environments.

    # Allowed EC2 Instance Types (Sentinel policy file)
    # We don't include t2.medium or t2.large as they are not allowed in dev or test environments
    allowed_types = [
      "t2.nano",
      "t2.micro",
      "t2.small",
    ]

    # Enforcement level (sentinel.hcl)
    policy "restrict-ec2-instance-type" {
      enforcement_level = "advisory"
    }
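Module authors can mirror the same allow-list inside the Terraform configuration so that a disallowed size fails early, before policy checks run. This is a complementary sketch rather than a replacement for the Sentinel policy, and the variable name and default are hypothetical.

```hcl
variable "nonprod_instance_type" {
  type        = string
  default     = "t2.micro"
  description = "EC2 instance type for dev and test environments."

  validation {
    # Same allow-list as the Sentinel policy above.
    condition     = contains(["t2.nano", "t2.micro", "t2.small"], var.nonprod_instance_type)
    error_message = "Instance type must be t2.nano, t2.micro, or t2.small in non-production environments."
  }
}
```

Unlike a Sentinel policy, a validation block only protects configurations that use this variable, so it cannot serve as an organization-wide guardrail on its own.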

Sentinel cost compliance — Enforce tagging

Lastly, tagging is a critical factor in understanding costs. Tagging enables you to group, analyze, and create more granular policy around infrastructure instances. (See how tagging helps our own teams at HashiCorp cull orphaned cloud instances)

Sentinel can enforce tagging at the provisioning phase and during updates to ensure that optimization can be targeted and governed. Tagging is managed in a simple key-value format and can be enforced across all CSPs. Here is a full sample policy for enforcement on AWS.

    ### List of mandatory tags (Sentinel policy file) ###
    mandatory_tags = [
      "Name",
      "ttl",
      "owner",
      "cost center",
      "appid",
    ]

    ### Enforcement level (sentinel.hcl) ###
    policy "enforce-mandatory-tags" {
      enforcement_level = "hard-mandatory"
    }

This gives you the ability to ensure things like owner, cost center, and time-to-live for each infrastructure resource are trackable.
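On the configuration side, one way to satisfy a mandatory-tags policy is to keep the shared tags in a single local value and merge them into each resource. The tag values, the resource, and the variable below are hypothetical; only the tag keys come from the policy above.

```hcl
variable "ami_id" {
  type        = string
  description = "AMI to launch (placeholder input for this sketch)."
}

locals {
  # Hypothetical values for the mandatory tag keys listed in the policy.
  mandatory_tags = {
    ttl           = "72h"
    owner         = "platform-team"
    "cost center" = "cc-1234"
    appid         = "java-app-01"
  }
}

resource "aws_instance" "app" {
  ami           = var.ami_id
  instance_type = "t2.micro"

  # Name is set per resource; the shared mandatory tags are merged in.
  tags = merge(local.mandatory_tags, { Name = "java-app-01-web" })
}
```

Keeping the shared tags in one place means a change to the cost center or owner is made once and shows up in the next plan for every resource that merges them.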

»The way forward for cloud cost management

As organizations increasingly use cloud infrastructure, the DevOps philosophy can no longer be ignored. As silos between developers and operators break, so must the silos between finance and engineering.

Engineers have a lot more autonomy to deploy the infrastructure they need immediately. That means more responsibility to control those costs themselves. Technologies like Terraform and Sentinel give engineering the automated, finance-monitored workflows they need to manage costs and reclaim unused resources — all inside the tooling that most of them already use. This helps organizations avoid a cumbersome, ticket-based approach, while also avoiding the chaos and waste of Shadow IT run amok.

If you have worked on projects in this space with Terraform that you would like to highlight, or if you want more information on the subject, please feel free to reach out.