What is a Service Mesh?

HashiCorp co-founder and CTO Armon Dadgar explains the concept of a service mesh in plain English.

** For a detailed, technical, written overview of service mesh, visit our newer page: What is a service mesh? **

Everyone is talking about the service mesh as a way to simplify your networking by moving it from a ticket-based, ITIL-style interaction to an agile, self-service one. But what is a service mesh? And why are people so interested in it?

In this video, HashiCorp co-founder and CTO Armon Dadgar simplifies the definition of a service mesh by focusing on the challenges it solves and what the day-to-day of using a service mesh looks like.

You can also dive deeper into this topic by watching Demystifying Service Mesh, reading our blog post on Smart networking with Consul and Service Meshes, and visiting our comparison of service meshes.

Speakers

Armon Dadgar, Co-founder & CTO, HashiCorp

Transcript

The way we describe Consul is as a service mesh for microservices, which leads to the question: what is a service mesh? When we take a step back and look at the architectural challenges of operating either a microservice architecture or what we used to call a service-oriented architecture, what we tend to find is that we're really running a collection of different services. We might have our frontend login portal, a service to check the balance of, let's say, a bank account, wire transfers, and bill pay. All of these are discrete services that are part of the larger bank, as an example.

Fundamentally, all of these services communicate with each other over a network. So the challenge becomes: how do these services, which live at different IP addresses on different machines in our infrastructure, talk to each other in the first place? How do they discover and route to one another, so that our login portal knows how to communicate with our check balance service?

Routing and discovery challenges

This first challenge is routing and discovery. The way most organizations solve it is by putting a load balancer in front of every service. The load balancer sits at a fixed IP address, each of our services hardcodes that IP address, and the load balancer routes the traffic on to the backend service.

The challenge with that approach is twofold. First, you end up with a proliferation of load balancers; you might need thousands of them in an environment. Second, they usually end up being managed manually. I have to file a ticket and wait five or six weeks for someone to add a new instance to that load balancer before traffic reaches it. So we're introducing both a cost penalty and an agility penalty to how fast our organization can deploy new services, scale up and down, react to machine failures, and so on.

Network security challenges

Routing and discovery is the first set of challenges. The second major set is: how do I secure all of this? Historically, what you would see is a flat, open network. We would put all of our security at the perimeter of the network, defining the castle walls, and use firewalls, WAFs, SIEMs, and other devices to filter and monitor all the traffic coming into the data center.

But once you're inside, what you tend to see is large, flat networks. There may be some firewalls in the east-west path, but those firewalls are often a management burden too. They end up with tens of thousands or even millions of manually managed rules, and there is a mismatch in agility: it might take me weeks to update a firewall rule, but only seconds to launch a new application. This creates friction for development teams as they try to scale up, scale down, and deploy new versions, because they're shackled by how quickly firewalls and load balancers can be updated.

Solving discovery challenges with a service registry

Those are some of the problems that exist in a microservice or service-oriented architecture. The goal of a service mesh is to solve them holistically, and that starts with a central registry of all of the services that are running. Any time a new application comes online, it populates that central registry, so we know, "Great, service instance B is now available at this IP." Anyone who wants to discover that service can then query the central registry and ask, "Where is that service running? What's its IP? How do I talk to it?"

This lets us build a much more dynamic infrastructure. As servers come and go, or as we scale up and down, we don't have to file a ticket and wait weeks for load balancers and firewalls to be updated. A new instance comes up, goes into the registry, and can be discovered immediately.
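To make the registry idea concrete, here is a minimal sketch in Go of an in-memory central catalog. It is illustrative only: the `Registry` and `Instance` types, their methods, and the addresses are hypothetical, and a real service mesh such as Consul replicates this state across servers and adds health checking. The sketch shows just the three operations described above: an instance registers when it comes online, deregisters when it goes away, and anyone can look a service up by name.

```go
package main

import (
	"fmt"
	"sort"
	"sync"
)

// Instance is one running copy of a service at a network address.
type Instance struct {
	ID      string
	Address string // "IP:port"
}

// Registry is a toy, in-memory central service catalog (hypothetical).
type Registry struct {
	mu       sync.RWMutex
	services map[string]map[string]Instance // service name -> instance ID -> instance
}

func NewRegistry() *Registry {
	return &Registry{services: make(map[string]map[string]Instance)}
}

// Register is called when an instance comes online.
func (r *Registry) Register(service string, inst Instance) {
	r.mu.Lock()
	defer r.mu.Unlock()
	if r.services[service] == nil {
		r.services[service] = make(map[string]Instance)
	}
	r.services[service][inst.ID] = inst
}

// Deregister is called when an instance is stopped or fails.
func (r *Registry) Deregister(service, id string) {
	r.mu.Lock()
	defer r.mu.Unlock()
	delete(r.services[service], id) // deleting from a nil map is a no-op
}

// Lookup answers "where is this service running?" with the current
// addresses, sorted so the output is stable.
func (r *Registry) Lookup(service string) []string {
	r.mu.RLock()
	defer r.mu.RUnlock()
	addrs := make([]string, 0, len(r.services[service]))
	for _, inst := range r.services[service] {
		addrs = append(addrs, inst.Address)
	}
	sort.Strings(addrs)
	return addrs
}

func main() {
	reg := NewRegistry()
	reg.Register("balance", Instance{ID: "balance-1", Address: "10.0.1.5:8080"})
	reg.Register("balance", Instance{ID: "balance-2", Address: "10.0.2.7:8080"})
	fmt.Println(reg.Lookup("balance")) // [10.0.1.5:8080 10.0.2.7:8080]
	reg.Deregister("balance", "balance-1")
	fmt.Println(reg.Lookup("balance")) // [10.0.2.7:8080]
}
```

No ticket or load balancer change sits between `Register` and the next `Lookup`: the new address is discoverable as soon as it is recorded.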

Solving security challenges with service identity

The other side of the problem is how we secure east-west traffic inside the castle walls. It's no longer enough to say, "This network perimeter is perfectly secure." We have to be realistic and assume that an attacker will get onto the network. And if an attacker does get onto the network, it can't be wide open. Instead, every service should require explicit authorization: you can only talk to the database if you need to talk to the database.

That becomes the second challenge service meshes set out to solve: how do we let you explicitly define rules about which services can talk to which? We can centrally define a rule that says my web server is allowed to talk to my database, but we do it at the logical service level. We're not writing "IP1 can talk to IP2" rules at the firewall level, and the advantage of the logical service level is that it's scale independent. It doesn't matter whether we have 1, 10, or 1,000 web servers; the rule, web server to database, stays the same.
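The scale-independence point can be sketched as a rule set keyed only by logical service names. This Go example is hypothetical (the `RuleSet` type, the service names, and the addresses are illustrative, not Consul's API) and assumes a default-deny policy: a connection is allowed only if an explicit source-to-destination rule exists.

```go
package main

import "fmt"

// Rule allows traffic from one logical service to another. It holds names
// only, no IP addresses, so it does not depend on how many instances run.
type Rule struct {
	Source, Destination string
}

// RuleSet is a hypothetical central rule store with default deny.
type RuleSet map[Rule]bool

// Allow records an explicit permission; rules are directional.
func (rs RuleSet) Allow(src, dst string) { rs[Rule{src, dst}] = true }

// Allowed reports true only if an explicit rule exists (default deny).
func (rs RuleSet) Allowed(src, dst string) bool { return rs[Rule{src, dst}] }

func main() {
	rules := RuleSet{}
	rules.Allow("web", "database")

	// Each instance carries its service's identity (in a mesh, via its
	// certificate), so every web instance is checked against the same rule.
	identity := map[string]string{"10.0.1.5": "web", "10.0.2.7": "web", "10.0.3.9": "billing"}
	for _, ip := range []string{"10.0.1.5", "10.0.2.7", "10.0.3.9"} {
		fmt.Printf("%s (%s) -> database: %v\n", ip, identity[ip], rules.Allowed(identity[ip], "database"))
	}
}
```

Adding more web instances changes nothing in the rule set. By contrast, expressing the same policy as IP-to-IP firewall rules for 1,000 web instances and 10 database instances could take up to 1,000 × 10 = 10,000 entries.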

This identity-based security requires service identity, so part of what a service mesh does is distribute certificates to these end applications. These are standard TLS certificates, much like the ones we use to secure the public internet, but we distribute them to our web servers, our databases, and our APIs so that they can identify themselves in service-to-service communication.

So, when a web server talks to a database, it presents a certificate that says, "I'm a web server." Likewise, the database presents a certificate saying, "I'm a database." This lets them establish mutual TLS: both sides verify the identity of the other side, and they also establish an encrypted channel, so all of their traffic over the network is encrypted.
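The mutual TLS exchange can be demonstrated with Go's standard library alone. In this sketch, a freshly generated self-signed CA stands in for the mesh's certificate authority, and the service identity is carried in the certificate's common name and DNS name. Those are simplifying assumptions: production meshes typically encode richer identities (for example, SPIFFE-style URIs). The server requires and verifies a client certificate, the client verifies the server's certificate, and each side reports the identity it saw. The helper names `must`, `issue`, and `mutualTLS` are hypothetical.

```go
package main

import (
	"crypto/ecdsa"
	"crypto/elliptic"
	"crypto/rand"
	"crypto/tls"
	"crypto/x509"
	"crypto/x509/pkix"
	"fmt"
	"math/big"
	"net"
	"time"
)

// must panics on error; it keeps this demo short.
func must[T any](v T, err error) T {
	if err != nil {
		panic(err)
	}
	return v
}

// issue creates a key and certificate for name, signed by parent/parentKey.
// With a nil parent it creates a self-signed CA standing in for the mesh CA.
func issue(name string, parent *x509.Certificate, parentKey *ecdsa.PrivateKey) (*x509.Certificate, *ecdsa.PrivateKey, tls.Certificate) {
	key := must(ecdsa.GenerateKey(elliptic.P256(), rand.Reader))
	tmpl := &x509.Certificate{
		SerialNumber: must(rand.Int(rand.Reader, big.NewInt(1<<62))),
		Subject:      pkix.Name{CommonName: name},
		NotBefore:    time.Now().Add(-time.Minute),
		NotAfter:     time.Now().Add(time.Hour),
	}
	if parent == nil {
		tmpl.IsCA, tmpl.BasicConstraintsValid, tmpl.KeyUsage = true, true, x509.KeyUsageCertSign
		parent, parentKey = tmpl, key
	} else { // service identity, usable on either side of a TLS connection
		tmpl.DNSNames = []string{name}
		tmpl.KeyUsage = x509.KeyUsageDigitalSignature
		tmpl.ExtKeyUsage = []x509.ExtKeyUsage{x509.ExtKeyUsageServerAuth, x509.ExtKeyUsageClientAuth}
	}
	der := must(x509.CreateCertificate(rand.Reader, tmpl, parent, &key.PublicKey, parentKey))
	return must(x509.ParseCertificate(der)), key, tls.Certificate{Certificate: [][]byte{der}, PrivateKey: key}
}

// mutualTLS performs one handshake over loopback TCP and returns the
// identity the server saw and the identity the client saw.
func mutualTLS(ca *x509.Certificate, server, client tls.Certificate, serverName string) (string, string, error) {
	pool := x509.NewCertPool()
	pool.AddCert(ca) // both sides trust only certificates issued by the mesh CA
	ln, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		return "", "", err
	}
	defer ln.Close()
	var serverSaw string
	done := make(chan error, 1)
	go func() { // server side, e.g. the database
		conn, err := ln.Accept()
		if err != nil {
			done <- err
			return
		}
		defer conn.Close()
		srv := tls.Server(conn, &tls.Config{
			Certificates: []tls.Certificate{server},
			ClientCAs:    pool,
			ClientAuth:   tls.RequireAndVerifyClientCert, // the "mutual" in mutual TLS
		})
		if err = srv.Handshake(); err == nil {
			serverSaw = srv.ConnectionState().PeerCertificates[0].Subject.CommonName
		}
		done <- err
	}()
	cli, err := tls.Dial("tcp", ln.Addr().String(), &tls.Config{
		Certificates: []tls.Certificate{client},
		RootCAs:      pool,
		ServerName:   serverName, // must match a DNS name in the server's certificate
	})
	if err != nil {
		return "", "", err
	}
	defer cli.Close()
	if err := <-done; err != nil {
		return "", "", err
	}
	return serverSaw, cli.ConnectionState().PeerCertificates[0].Subject.CommonName, nil
}

func main() {
	ca, caKey, _ := issue("mesh-ca", nil, nil)
	_, _, web := issue("web", ca, caKey)
	_, _, db := issue("database", ca, caKey)
	// The web service dials the database; each side learns the other's identity.
	dbSaw, webSaw, err := mutualTLS(ca, db, web, "database")
	fmt.Println(dbSaw, webSaw, err) // web database <nil>
}
```

A client holding a certificate from any other CA fails the handshake, which is what lets the database refuse a caller that cannot prove it is the web service.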

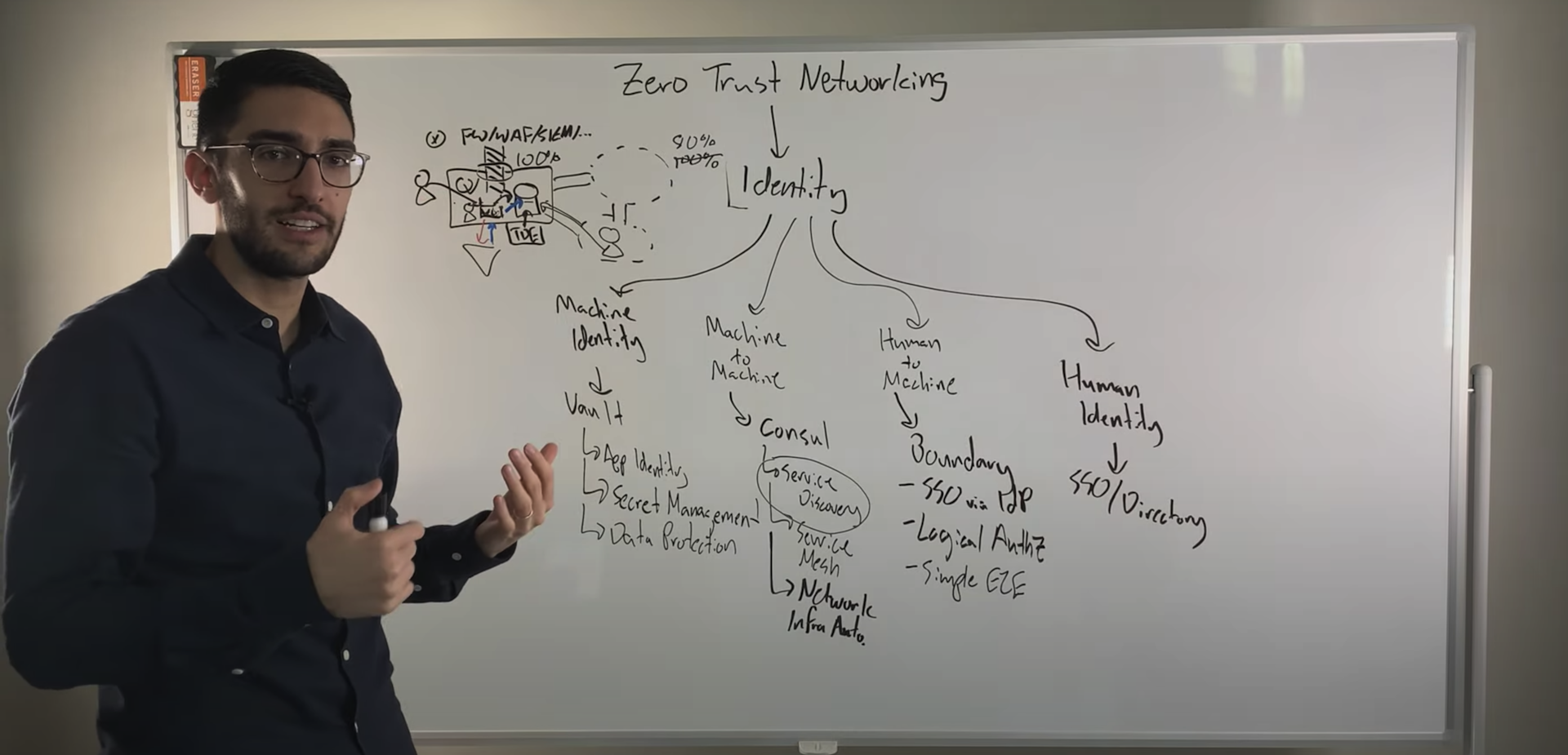

This is the core idea behind zero-trust networking. We're not trusting our network; instead, we rely on a TLS layer that asserts both identity and encryption for confidentiality.

Compatibility layer challenges

The final piece ties into that central rule set. Just because we can verify that a web server is talking to a database doesn't mean that connection should be allowed. We have to check the central rule set: should the web server be allowed to talk to the database? So we centrally manage the rules for who's allowed to talk to whom, then distribute the certificates and the rules to the edge, so that at the network layer, services can communicate with each other without going over a central bus.

So, unlike an enterprise service bus or other technologies that require going through centralized brokers, we can push all of the communication to the edge, where it's all node to node. Authorization decisions are made at the edge without having to go back through a centralized bus.
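Edge enforcement can be sketched roughly as follows: the control plane is where rules are defined, but each hypothetical `EdgeProxy` holds its own versioned copy, so every authorization decision is a local lookup rather than a round trip to a central broker. The types, the push mechanism (a direct method call here, standing in for whatever replication a real mesh uses), and the version check are assumptions for illustration.

```go
package main

import (
	"fmt"
	"sync"
)

// Snapshot is a versioned copy of the central rules: {source, destination} -> allowed.
type Snapshot struct {
	Version int
	Allow   map[[2]string]bool
}

// EdgeProxy is a hypothetical sidecar holding its own copy of the rules.
type EdgeProxy struct {
	mu   sync.RWMutex
	snap Snapshot
}

// Update is invoked when the control plane pushes rules; stale versions are ignored.
func (p *EdgeProxy) Update(s Snapshot) {
	p.mu.Lock()
	defer p.mu.Unlock()
	if s.Version > p.snap.Version {
		p.snap = s
	}
}

// Authorize decides locally, with default deny; no central call is made.
func (p *EdgeProxy) Authorize(src, dst string) bool {
	p.mu.RLock()
	defer p.mu.RUnlock()
	return p.snap.Allow[[2]string{src, dst}]
}

// ControlPlane is where rules are defined and pushed from.
type ControlPlane struct {
	version int
	allow   map[[2]string]bool
	proxies []*EdgeProxy
}

// SetRule changes one rule centrally and pushes a fresh snapshot to every proxy.
func (c *ControlPlane) SetRule(src, dst string, allowed bool) {
	if c.allow == nil {
		c.allow = map[[2]string]bool{}
	}
	c.allow[[2]string{src, dst}] = allowed
	c.version++
	// Copy the map so later central edits never mutate a pushed snapshot.
	snap := Snapshot{Version: c.version, Allow: make(map[[2]string]bool, len(c.allow))}
	for k, v := range c.allow {
		snap.Allow[k] = v
	}
	for _, p := range c.proxies {
		p.Update(snap)
	}
}

func main() {
	edge := &EdgeProxy{}
	cp := &ControlPlane{proxies: []*EdgeProxy{edge}}
	fmt.Println(edge.Authorize("web", "database")) // false: nothing pushed yet
	cp.SetRule("web", "database", true)
	fmt.Println(edge.Authorize("web", "database")) // true, decided locally
	cp.SetRule("web", "database", false)
	fmt.Println(edge.Authorize("web", "database")) // false after revocation
}
```

In this sketch, if the control plane became unreachable, each proxy would keep enforcing its last snapshot, so the data path does not depend on a central point being up for every connection.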

This brings us to the requirements for organizations that are adopting a service mesh. One requirement relates to the fact that we don't want to go back to a centralized broker or a centralized bus. The moment we centralize, we've added a single point of failure: all of this traffic potentially has to come back through a central point, and that point becomes our bottleneck.

One key thing to look at is how many of these authentication and authorization decisions can be pushed to the edge without routing back through a central point. In other words, what does the scalability of the system look like when we start talking about thousands or tens of thousands of nodes?

A second consideration is that the network is fundamentally our compatibility layer. What lets our mainframe talk to our bare-metal servers, our VMs, our containers, and our serverless functions is that they all speak TCP/IP. As you look at service mesh technologies, you need the mesh to be a compatibility layer too.

The challenge is: how do I make sure that my modern containerized application can still talk back to my mainframe? If it can't, we end up creating silos on the network where our different services can no longer communicate, breaking what the network has always given us: compatibility between different technologies, different operating systems, and different languages. So, that compatibility is key.

The other important question is how much our applications have to be aware of, or be retooled for, this technology. If we have thousands of applications, some speaking custom protocols and some off-the-shelf products whose source code we don't control, how do we make sure they fit into this environment?

Multi-level network awareness

It's important to understand what level of network awareness a service mesh gives you. Does it provide layer 4 (L4) capability? That's where we really focus: layers 3 and 4, to make sure we get compatibility across every type of protocol. That way, we don't have to be protocol-aware to proxy the traffic, secure it, and authenticate and authorize it.
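Being protocol-agnostic at L4 means a proxy can forward a connection without parsing what flows over it. This Go sketch (the `proxyL4`, `echoServer`, and `roundTrip` names are hypothetical) copies raw bytes in both directions between a client and an upstream address, and exercises it with a made-up line protocol the proxy knows nothing about. In a real mesh, the proxy would also terminate mutual TLS and check authorization before forwarding; that is omitted here.

```go
package main

import (
	"bufio"
	"fmt"
	"io"
	"net"
)

// proxyL4 accepts connections on ln and pipes raw bytes to upstream.
// It never inspects the payload, so any TCP protocol passes through.
func proxyL4(ln net.Listener, upstream string) {
	for {
		client, err := ln.Accept()
		if err != nil {
			return // listener closed
		}
		go func(client net.Conn) {
			defer client.Close()
			server, err := net.Dial("tcp", upstream)
			if err != nil {
				return
			}
			defer server.Close()
			done := make(chan struct{}, 2)
			go func() { io.Copy(server, client); done <- struct{}{} }()
			go func() { io.Copy(client, server); done <- struct{}{} }()
			<-done // when either direction ends, the deferred Closes tear down both
		}(client)
	}
}

// echoServer is a stand-in backend that writes back whatever it receives.
func echoServer(ln net.Listener) {
	for {
		c, err := ln.Accept()
		if err != nil {
			return
		}
		go func(c net.Conn) { defer c.Close(); io.Copy(c, c) }(c)
	}
}

// roundTrip starts a backend and a proxy in front of it, sends one line
// through the proxy, and returns the line that comes back.
func roundTrip(msg string) (string, error) {
	backend, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		return "", err
	}
	defer backend.Close()
	go echoServer(backend)
	front, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		return "", err
	}
	defer front.Close()
	go proxyL4(front, backend.Addr().String())

	conn, err := net.Dial("tcp", front.Addr().String())
	if err != nil {
		return "", err
	}
	defer conn.Close()
	if _, err := fmt.Fprintf(conn, "%s\n", msg); err != nil {
		return "", err
	}
	return bufio.NewReader(conn).ReadString('\n')
}

func main() {
	reply, err := roundTrip("HELLO over a protocol the proxy does not understand")
	fmt.Printf("%q %v\n", reply, err)
}
```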

But as we look further up the stack to layer 7 (L7), for protocols we do understand, can we do things like traffic shaping and more intelligent traffic management at that layer? What we don't want is L7-only awareness, where it becomes impossible to use protocols the mesh doesn't understand at L7. That would create a compatibility problem once again.

The final thing we want to consider is what operating the system looks like. We're putting these systems at the core of our networks, so an outage would impact every system running on top of them, potentially our entire data center. We want to make sure these systems are easy to scale, run reliably, and are available 24x7. That's how we think about the requirements as an organization evaluates different service mesh technologies.